Chaos: Making a New Science - James Gleick (1988)

Chaos and Beyond

“The classification of the constituents of a chaos, nothing less here is essayed.”

—HERMAN MELVILLE, Moby-Dick

TWO DECADES AGO Edward Lorenz was thinking about the atmosphere, Michel Hénon the stars, Robert May the balance of nature. Benoit Mandelbrot was an unknown IBM mathematician, Mitchell Feigenbaum an undergraduate at the City College of New York, Doyne Farmer a boy growing up in New Mexico. Most practicing scientists shared a set of beliefs about complexity. They held these beliefs so closely that they did not need to put them into words. Only later did it become possible to say what these beliefs were and to bring them out for examination.

Simple systems behave in simple ways. A mechanical contraption like a pendulum, a small electrical circuit, an idealized population of fish in a pond—as long as these systems could be reduced to a few perfectly understood, perfectly deterministic laws, their long-term behavior would be stable and predictable.

Complex behavior implies complex causes. A mechanical device, an electrical circuit, a wildlife population, a fluid flow, a biological organ, a particle beam, an atmospheric storm, a national economy—a system that was visibly unstable, unpredictable, or out of control must either be governed by a multitude of independent components or subject to random external influences.

Different systems behave differently. A neurobiologist who spent a career studying the chemistry of the human neuron without learning anything about memory or perception, an aircraft designer who used wind tunnels to solve aerodynamic problems without understanding the mathematics of turbulence, an economist who analyzed the psychology of purchasing decisions without gaining an ability to forecast large-scale trends—scientists like these, knowing that the components of their disciplines were different, took it for granted that the complex systems made up of billions of these components must also be different.

Now all that has changed. In the intervening twenty years, physicists, mathematicians, biologists, and astronomers have created an alternative set of ideas. Simple systems give rise to complex behavior. Complex systems give rise to simple behavior. And most important, the laws of complexity hold universally, caring not at all for the details of a system’s constituent atoms.

For the mass of practicing scientists—particle physicists or neurologists or even mathematicians—the change did not matter immediately. They continued to work on research problems within their disciplines. But they were aware of something called chaos. They knew that some complex phenomena had been explained, and they knew that other phenomena suddenly seemed to need new explanations. A scientist studying chemical reactions in a laboratory or tracking insect populations in a three-year field experiment or modeling ocean temperature variations could not respond in the traditional way to the presence of unexpected fluctuations or oscillations—that is, by ignoring them. For some, that meant trouble. On the other hand, pragmatically, they knew that money was available from the federal government and from corporate research facilities for this faintly mathematical kind of science. More and more of them realized that chaos offered a fresh way to proceed with old data, forgotten in desk drawers because they had proved too erratic. More and more felt the compartmentalization of science as an impediment to their work. More and more felt the futility of studying parts in isolation from the whole. For them, chaos was the end of the reductionist program in science.

Uncomprehension; resistance; anger; acceptance. Those who had promoted chaos longest saw all of these. Joseph Ford of the Georgia Institute of Technology remembered lecturing to a thermodynamics group in the 1970s and mentioning that there was a chaotic behavior in the Duffing equation, a well-known textbook model for a simple oscillator subject to friction. To Ford, the presence of chaos in the Duffing equation was a curious fact—just one of those things he knew to be true, although several years passed before it was published in Physical Review Letters. But he might as well have told a gathering of paleontologists that dinosaurs had feathers. They knew better.

“When I said that? Jee-sus Christ, the audience began to bounce up and down. It was, ‘My daddy played with the Duffing equation, and my granddaddy played with the Duffing equation, and nobody seen anything like what you’re talking about.’ You would really run across resistance to the notion that nature is complicated. What I didn’t understand was the hostility.”

Comfortable in his Atlanta office, the winter sun setting outside, Ford sipped soda from an oversized mug with the word chaos painted in bright colors. His younger colleague Ronald Fox talked about his own conversion, soon after buying an Apple II computer for his son, at a time when no self-respecting physicist would buy such a thing for his work. Fox heard that Mitchell Feigenbaum had discovered universal laws guiding the behavior of feedback functions, and he decided to write a short program that would let him see the behavior on the Apple display. He saw it all painted across the screen—pitchfork bifurcations, stable lines breaking in two, then four, then eight; the appearance of chaos itself; and within the chaos, the astonishing geometric regularity. “In a couple of days you could redo all of Feigenbaum,” Fox said. Self-teaching by computing persuaded him and others who might have doubted a written argument.
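A program of the kind Fox describes can be sketched in a few lines. The version below is only an illustration, not Fox's code: it assumes the logistic map, x -> r*x*(1-x), as the feedback function, and the name `logistic_attractor` and its parameters are invented for the example. It discards an initial transient and then collects the values the iteration keeps visiting.

```python
def logistic_attractor(r, x0=0.5, transient=2000, keep=64, digits=6):
    """Iterate x -> r*x*(1-x), drop a transient, and return the sorted
    set of values visited afterwards (rounded to `digits` places)."""
    x = x0
    for _ in range(transient):
        x = r * x * (1.0 - x)
    seen = set()
    for _ in range(keep):
        x = r * x * (1.0 - x)
        seen.add(round(x, digits))
    return sorted(seen)


# Count the long-run values at a few settings of the parameter r.
for r in (2.8, 3.2, 3.5, 3.9):
    print(r, len(logistic_attractor(r)))
```

With these defaults, r = 2.8 settles to one value, r = 3.2 to two, and r = 3.5 to four, the doubling sequence Fox watched on his screen; at r = 3.9 the values no longer repeat.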

Some scientists played with such programs for a while and then stopped. Others could not help but be changed. Fox was one of those who had remained conscious of the limits of standard linear science. He knew he had habitually set the hard nonlinear problems aside. In practice a physicist would always end up saying, This is a problem that’s going to take me to the handbook of special functions, which is the last place I want to go, and I’m sure as hell not going to get on a machine and do it, I’m too sophisticated for that.

“The general picture of nonlinearity got a lot of people’s attention—slowly at first, but increasingly,” Fox said. “Everybody that looked at it, it bore fruit for. You now look at any problem you looked at before, no matter what science you’re in. There was a place where you quit looking at it because it became nonlinear. Now you know how to look at it and you go back.”

Ford said, “If an area begins to grow, it has to be because some clump of people feel that there’s something it offers them—that if they modify their research, the rewards could be very big. To me chaos is like a dream. It offers the possibility that, if you come over and play this game, you can strike the mother lode.”

Still, no one could quite agree on the word itself.

Philip Holmes, a white-bearded mathematician and poet from Cornell by way of Oxford: The complicated, aperiodic, attracting orbits of certain (usually low-dimensional) dynamical systems.

Hao Bai-Lin, a physicist in China who assembled many of the historical papers of chaos into a single reference volume: A kind of order without periodicity. And: A rapidly expanding field of research to which mathematicians, physicists, hydrodynamicists, ecologists and many others have all made important contributions. And: A newly recognized and ubiquitous class of natural phenomena.

H. Bruce Stewart, an applied mathematician at Brookhaven National Laboratory on Long Island: Apparently random recurrent behavior in a simple deterministic (clockwork-like) system.

Roderick V. Jensen of Yale University, a theoretical physicist exploring the possibility of quantum chaos: The irregular, unpredictable behavior of deterministic, nonlinear dynamical systems.

James Crutchfield of the Santa Cruz collective: Dynamics with positive, but finite, metric entropy. The translation from mathese is: behavior that produces information (amplifies small uncertainties), but is not utterly unpredictable.

And Ford, self-proclaimed evangelist of chaos: Dynamics freed at last from the shackles of order and predictability…. Systems liberated to randomly explore their every dynamical possibility…. Exciting variety, richness of choice, a cornucopia of opportunity.

John Hubbard, exploring iterated functions and the infinite fractal wildness of the Mandelbrot set, considered chaos a poor name for his work, because it implied randomness. To him, the overriding message was that simple processes in nature could produce magnificent edifices of complexity without randomness. In nonlinearity and feedback lay all the necessary tools for encoding and then unfolding structures as rich as the human brain.

To other scientists, like Arthur Winfree, exploring the global topology of biological systems, chaos was too narrow a name. It implied simple systems, the one-dimensional maps of Feigenbaum and the two- or three- (and a fraction) dimensional strange attractors of Ruelle. Low-dimensional chaos was a special case, Winfree felt. He was interested in the laws of many-dimensional complexity—and he was convinced that such laws existed. Too much of the universe seemed beyond the reach of low-dimensional chaos.

The journal Nature carried a running debate about whether the earth’s climate followed a strange attractor. Economists looked for recognizable strange attractors in stock market trends but so far had not found them. Dynamicists hoped to use the tools of chaos to explain fully developed turbulence. Albert Libchaber, now at the University of Chicago, was turning his elegant experimental style to the service of turbulence, creating a liquid-helium box thousands of times larger than his tiny cell of 1977. Whether such experiments, liberating fluid disorder in both space and time, would find simple attractors, no one knew. As the physicist Bernardo Huberman said, “If you had a turbulent river and put a probe in it and said, ‘Look, here’s a low-dimensional strange attractor,’ we would all take off our hats and look.”

Chaos was the set of ideas persuading all these scientists that they were members of a shared enterprise. Physicist or biologist or mathematician, they believed that simple, deterministic systems could breed complexity; that systems too complex for traditional mathematics could yet obey simple laws; and that, whatever their particular field, their task was to understand complexity itself.

“LET US AGAIN LOOK at the laws of thermodynamics,” wrote James E. Lovelock, author of the Gaia hypothesis. “It is true that at first sight they read like the notice at the gate of Dante’s Hell…” But.

The Second Law is one piece of technical bad news from science that has established itself firmly in the nonscientific culture. Everything tends toward disorder. Any process that converts energy from one form to another must lose some as heat. Perfect efficiency is impossible. The universe is a one-way street. Entropy must always increase in the universe and in any hypothetical isolated system within it. However expressed, the Second Law is a rule from which there seems no appeal. In thermodynamics that is true. But the Second Law has had a life of its own in intellectual realms far removed from science, taking the blame for disintegration of societies, economic decay, the breakdown of manners, and many other variations on the decadent theme. These secondary, metaphorical incarnations of the Second Law now seem especially misguided. In our world, complexity flourishes, and those looking to science for a general understanding of nature’s habits will be better served by the laws of chaos.

Somehow, after all, as the universe ebbs toward its final equilibrium in the featureless heat bath of maximum entropy, it manages to create interesting structures. Thoughtful physicists concerned with the workings of thermodynamics realize how disturbing is the question of, as one put it, “how a purposeless flow of energy can wash life and consciousness into the world.” Compounding the trouble is the slippery notion of entropy, reasonably well-defined for thermodynamic purposes in terms of heat and temperature, but devilishly hard to pin down as a measure of disorder. Physicists have trouble enough measuring the degree of order in water, forming crystalline structures in the transition to ice, energy bleeding away all the while. But thermodynamic entropy fails miserably as a measure of the changing degree of form and formlessness in the creation of amino acids, of microorganisms, of self-reproducing plants and animals, of complex information systems like the brain. Certainly these evolving islands of order must obey the Second Law. The important laws, the creative laws, lie elsewhere.

Nature forms patterns. Some are orderly in space but disorderly in time, others orderly in time but disorderly in space. Some patterns are fractal, exhibiting structures self-similar in scale. Others give rise to steady states or oscillating ones. Pattern formation has become a branch of physics and of materials science, allowing scientists to model the aggregation of particles into clusters, the fractured spread of electrical discharges, and the growth of crystals in ice and metal alloys. The dynamics seem so basic—shapes changing in space and time—yet only now are the tools available to understand them. It is a fair question now to ask a physicist, “Why are all snowflakes different?”

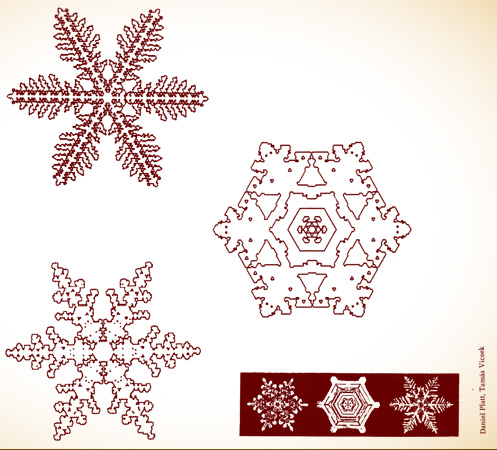

Ice crystals form in the turbulent air with a famous blending of symmetry and chance, the special beauty of six-fold indeterminacy. As water freezes, crystals send out tips; the tips grow, their boundaries becoming unstable, and new tips shoot out from the sides. Snowflakes obey mathematical laws of surprising subtlety, and it was impossible to predict precisely how fast a tip would grow, how narrow it would be, or how often it would branch. Generations of scientists sketched and cataloged the variegated patterns: plates and columns, crystals and polycrystals, needles and dendrites. The treatises treated crystal formation as a classification matter, for lack of a better approach.

Growth of such tips, dendrites, is now known as a highly nonlinear unstable free boundary problem, meaning that models need to track a complex, wiggly boundary as it changes dynamically. When solidification proceeds from outside to inside, as in an ice tray, the boundary generally remains stable and smooth, its speed controlled by the ability of the walls to draw away the heat. But when a crystal solidifies outward from an initial seed—as a snowflake does, grabbing water molecules while it falls through the moisture-laden air—the process becomes unstable. Any bit of boundary that gets out ahead of its neighbors gains an advantage in picking up new water molecules and therefore grows that much faster—the “lightning-rod effect.” New branches form, and then subbranches.
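The lightning-rod effect can be seen in a toy simulation. The sketch below uses diffusion-limited aggregation, a standard model of randomly moving particles sticking to a cluster; it is not the snowflake model discussed in the text, which also involves heat flow and surface tension. The function name, grid size, and wrap-around edges are assumptions made for the example.

```python
import random


def grow_cluster(n_particles=40, size=41, seed=0):
    """Grow a cluster on a size-by-size grid with wrap-around edges.

    Walkers are released one at a time and wander at random until they
    touch the cluster, where they stick. Returns the set of occupied cells.
    """
    rng = random.Random(seed)
    center = size // 2
    cluster = {(center, center)}  # a single seed cell in the middle
    moves = ((1, 0), (-1, 0), (0, 1), (0, -1))

    def touches_cluster(x, y):
        return any(((x + dx) % size, (y + dy) % size) in cluster
                   for dx, dy in moves)

    while len(cluster) < n_particles + 1:
        # Release a walker on the left edge at a random height.
        x, y = 0, rng.randrange(size)
        if (x, y) in cluster:
            continue
        while not touches_cluster(x, y):
            dx, dy = rng.choice(moves)
            x, y = (x + dx) % size, (y + dy) % size
        cluster.add((x, y))  # the walker sticks where it first touches
    return cluster


cells = grow_cluster()
print(len(cells), "cells in the cluster")
```

Because a wandering particle is more likely to meet a protruding tip than to reach the sheltered gaps behind it, cells that get ahead tend to stay ahead, and the cluster grows in branches rather than as a solid blob.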

One difficulty was in deciding which of the many physical forces involved are important and which can safely be ignored. Most important, as scientists have long known, is the diffusion of the heat released when water freezes. But the physics of heat diffusion cannot completely explain the patterns researchers observe when they look at snowflakes under microscopes or grow them in the laboratory. Recently scientists worked out a way to incorporate another process: surface tension. The heart of the new snowflake model is the essence of chaos: a delicate balance between forces of stability and forces of instability; a powerful interplay of forces on atomic scales and forces on everyday scales.

BRANCHING AND CLUMPING. The study of pattern formation, encouraged by fractal mathematics, brought together such natural patterns as the lightning-like paths of an electrical discharge and the simulated aggregation of randomly moving particles.

Where heat diffusion tends to create instability, surface tension creates stability. The pull of surface tension makes a substance prefer smooth boundaries like the wall of a soap bubble. It costs energy to make surfaces that are rough. The balancing of these tendencies depends on the size of the crystal. While diffusion is mainly a large-scale, macroscopic process, surface tension is strongest at the microscopic scales.

Traditionally, because the surface tension effects are so small, researchers assumed that for practical purposes they could disregard them. Not so. The tiniest scales proved crucial; there the surface effects proved infinitely sensitive to the molecular structure of a solidifying substance. In the case of ice, a natural molecular symmetry gives a built-in preference for six directions of growth. To their surprise, scientists found that the mixture of stability and instability manages to amplify this microscopic preference, creating the near-fractal lacework that makes snowflakes. The mathematics came not from atmospheric scientists but from theoretical physicists, along with metallurgists, who had their own interest. In metals the molecular symmetry is different, and so are the characteristic crystals, which help determine an alloy’s strength. But the mathematics are the same: the laws of pattern formation are universal.

Sensitive dependence on initial conditions serves not to destroy but to create. As a growing snowflake falls to earth, typically floating in the wind for an hour or more, the choices made by the branching tips at any instant depend sensitively on such things as the temperature, the humidity, and the presence of impurities in the atmosphere. The six tips of a single snowflake, spreading within a millimeter space, feel the same temperatures, and because the laws of growth are purely deterministic, they maintain a near-perfect symmetry. But the nature of turbulent air is such that any pair of snowflakes will experience very different paths. The final flake records the history of all the changing weather conditions it has experienced, and the combinations may as well be infinite.

BALANCING STABILITY AND INSTABILITY. As a liquid crystallizes, it forms a growing tip (shown in a multiple-exposure photograph) with a boundary that becomes unstable and sends off side-branches. Computer simulations of the delicate thermodynamic processes mimic real snowflakes.

Snowflakes are nonequilibrium phenomena, physicists like to say. They are products of imbalance in the flow of energy from one piece of nature to another. The flow turns a boundary into a tip, the tip into an array of branches, the array into a complex structure never before seen. As scientists have discovered such instability obeying the universal laws of chaos, they have succeeded in applying the same methods to a host of physical and chemical problems, and, inevitably, they suspect that biology is next. In the back of their minds, as they look at computer simulations of dendrite growth, they see algae, cell walls, organisms budding and dividing.

From microscopic particles to everyday complexity, many paths now seem open. In mathematical physics the bifurcation theory of Feigenbaum and his colleagues advances in the United States and Europe. In the abstract reaches of theoretical physics scientists probe other new issues, such as the unsettled question of quantum chaos: Does quantum mechanics admit the chaotic phenomena of classical mechanics? In the study of moving fluids Libchaber builds his giant liquid-helium box, while Pierre Hohenberg and Günter Ahlers study the odd-shaped traveling waves of convection. In astronomy chaos experts use unexpected gravitational instabilities to explain the origin of meteorites—the seemingly inexplicable catapulting of asteroids from far beyond Mars. Scientists use the physics of dynamical systems to study the human immune system, with its billions of components and its capacity for learning, memory, and pattern recognition, and they simultaneously study evolution, hoping to find universal mechanisms of adaptation. Those who make such models quickly see structures that replicate themselves, compete, and evolve by natural selection.

“Evolution is chaos with feedback,” Joseph Ford said. The universe is randomness and dissipation, yes. But randomness with direction can produce surprising complexity. And as Lorenz discovered so long ago, dissipation is an agent of order.

“God plays dice with the universe,” is Ford’s answer to Einstein’s famous question. “But they’re loaded dice. And the main objective of physics now is to find out by what rules were they loaded and how can we use them for our own ends.”

SUCH IDEAS HELP drive the collective enterprise of science forward. Still, no philosophy, no proof, no experiment ever seems quite enough to sway the individual researchers for whom science must first and always provide a way of working. In some laboratories, the traditional ways falter. Normal science goes astray, as Kuhn put it; a piece of equipment fails to meet expectations; “the profession can no longer evade anomalies.” For any one scientist the ideas of chaos could not prevail until the method of chaos became a necessity.

Every field had its own examples. In ecology, there was William M. Schaffer, who trained as the last student of Robert MacArthur, the dean of the field in the fifties and sixties. MacArthur built a conception of nature that gave a firm footing to the idea of natural balance. His models supposed that equilibriums would exist and that populations of plants and animals would remain close to them. To MacArthur, balance in nature had what could almost be called a moral quality—states of equilibrium in his models entailed the most efficient use of food resources, the least waste. Nature, if left alone, would be good.

Two decades later MacArthur’s last student found himself realizing that ecology based on a sense of equilibrium seems doomed to fail. The traditional models are betrayed by their linear bias. Nature is more complicated. Instead he sees chaos, “both exhilarating and a bit threatening.” Chaos may undermine ecology’s most enduring assumptions, he tells his colleagues. “What passes for fundamental concepts in ecology is as mist before the fury of the storm—in this case, a full, nonlinear storm.”

Schaffer is using strange attractors to explore the epidemiology of childhood diseases such as measles and chicken pox. He has collected data, first from New York City and Baltimore, then from Aberdeen, Scotland, and all England and Wales. He has made a dynamical model, resembling a damped, driven pendulum. The diseases are driven each year by the infectious spread among children returning to school, and damped by natural resistance. Schaffer’s model predicts strikingly different behavior for these diseases. Chicken pox should vary periodically. Measles should vary chaotically. As it happens, the data show exactly what Schaffer predicts. To a traditional epidemiologist the yearly variations in measles seemed inexplicable—random and noisy. Schaffer, using the techniques of phase-space reconstruction, shows that measles follow a strange attractor, with a fractal dimension of about 2.5.
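Phase-space reconstruction is commonly done by time-delay embedding: a single measured series is turned into points whose coordinates are the value now, the value one lag later, and so on. The sketch below shows that general idea only; the text does not say which procedure or settings Schaffer used, and the names `delay_embed`, `dim`, and `lag` are illustrative.

```python
def delay_embed(series, dim=3, lag=1):
    """Return delay vectors (x[t], x[t+lag], ..., x[t+(dim-1)*lag])
    built from a one-dimensional sequence of measurements."""
    n = len(series) - (dim - 1) * lag
    if n <= 0:
        raise ValueError("series too short for this dim and lag")
    return [tuple(series[t + k * lag] for k in range(dim)) for t in range(n)]


# A made-up series, just to show the shape of the result.
points = delay_embed([0, 1, 2, 3, 4], dim=3, lag=1)
print(points)  # [(0, 1, 2), (1, 2, 3), (2, 3, 4)]
```

Plotting such points for a real record, for example monthly case counts, is what lets an attractor's shape be examined even when only one variable was ever measured.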

Schaffer computed Lyapunov exponents and made Poincaré maps. “More to the point,” Schaffer said, “if you look at the pictures it jumps out at you, and you say, ‘My God, this is the same thing.’” Although the attractor is chaotic, some predictability becomes possible in light of the deterministic nature of the model. A year of high measles infection will be followed by a crash. After a year of medium infection, the level will change only slightly. A year of low infection produces the greatest unpredictability. Schaffer’s model also predicted the consequences of damping the dynamics by mass inoculation programs—consequences that could not be predicted by standard epidemiology.
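A Lyapunov exponent measures how fast nearby trajectories separate: positive means chaos, negative means they converge. As a hedged illustration, and not Schaffer's measles calculation, the sketch below estimates the exponent for the logistic map x -> r*x*(1-x) by averaging the logarithm of the map's slope along an orbit. The function name and defaults are assumptions for the example.

```python
import math


def logistic_lyapunov(r, x0=0.3, transient=1000, steps=20000):
    """Estimate the Lyapunov exponent of x -> r*x*(1-x) as the orbit
    average of log|r*(1 - 2x)|, the log of the map's local slope."""
    x = x0
    for _ in range(transient):
        x = r * x * (1.0 - x)
    total = 0.0
    for _ in range(steps):
        x = r * x * (1.0 - x)
        slope = abs(r * (1.0 - 2.0 * x))
        total += math.log(max(slope, 1e-300))  # guard against log(0)
    return total / steps


for r in (3.2, 3.9):
    print(r, logistic_lyapunov(r))
```

The estimate comes out negative at r = 3.2, where the orbit is periodic, and positive at r = 3.9, where it is chaotic.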

On the collective scale and on the personal scale, the ideas of chaos advance in different ways and for different reasons. For Schaffer, as for many others, the transition from traditional science to chaos came unexpectedly. He was a perfect target for Robert May’s evangelical plea in 1975; yet he read May’s paper and discarded it. He thought the mathematical ideas were unrealistic for the kinds of systems a practicing ecologist would study. Oddly, he knew too much about ecology to appreciate May’s point. These were one-dimensional maps, he thought—what bearing could they have on continuously changing systems? So a colleague said, “Read Lorenz.” He wrote the reference on a slip of paper and never bothered to pursue it.

Years later Schaffer lived in the desert outside of Tucson, Arizona, and summers found him in the Santa Catalina mountains just to the north, islands of chaparral, merely hot when the desert floor is roasting. Amid the thickets in June and July, after the spring blooming season and before the summer rain, Schaffer and his graduate students tracked bees and flowers of different species. This ecological system was easy to measure despite all its year-to-year variation. Schaffer counted the bees on every stalk, measured the pollen by draining flowers with pipettes, and analyzed the data mathematically. Bumblebees competed with honeybees, and honeybees competed with carpenter bees, and Schaffer made a convincing model to explain the fluctuations in population.

By 1980 he knew that something was wrong. His model broke down. As it happened, the key player was a species he had overlooked: ants. Some colleagues suspected unusual winter weather; others unusual summer weather. Schaffer considered complicating his model by adding more variables. But he was deeply frustrated. Word was out among the graduate students that summer at 5,000 feet with Schaffer was hard work. And then everything changed.

He happened upon a preprint about chemical chaos in a complicated laboratory experiment, and he felt that the authors had experienced exactly his problem: the impossibility of monitoring dozens of fluctuating reaction products in a vessel matched the impossibility of monitoring dozens of species in the Arizona mountains. Yet they had succeeded where he had failed. He read about reconstructing phase space. He finally read Lorenz, and Yorke, and others. The University of Arizona sponsored a lecture series on “Order in Chaos.” Harry Swinney came, and Swinney knew how to talk about experiments. When he explained chemical chaos, displaying a transparency of a strange attractor, and said, “That’s real data,” a chill ran up Schaffer’s spine.

“All of a sudden I knew that that was my destiny,” Schaffer said. He had a sabbatical year coming. He withdrew his application for National Science Foundation money and applied for a Guggenheim Fellowship. Up in the mountains, he knew, the ants changed with the season. Bees hovered and darted in a dynamical buzz. Clouds skidded across the sky. He could not work the old way any more.