Denying to the Grave: Why We Ignore the Facts That Will Save Us - Sara E Gorman, Jack M Gorman (2016)

Chapter 4. Causality and Filling the Ignorance Gap

Coincidence obeys no laws and if it does we don’t know what they are. Coincidence, if you’ll permit me the simile, is like the manifestation of God at every moment on our planet. A senseless God making senseless gestures at his senseless creatures. In that hurricane, in that osseous implosion, we find communion.

—Roberto Bolaño

There is an old adage: “What you don’t know can’t hurt you.” In the science denial arena, however, this adage seems to have been recrafted to something like: “What you don’t know is an invitation to make up fake science.” Before it was discovered that tuberculosis is caused by a rather large bacterium called Mycobacterium tuberculosis, it was widely believed to be the result of poor moral character. Similarly, AIDS was attributed to “deviant” lifestyles, like being gay or using intravenous drugs. When we don’t know what causes something, we are pummeled by “experts” telling us what to believe. Vaccines cause autism. ECT causes brain damage. GMOs cause cancer.

Interestingly, the leap by the public to latch onto extreme theories does not extend to all branches of science. Physicists are not certain how the force of gravity is actually conveyed between two bodies. The theoretical solutions offered to address this question involve mind-boggling mathematics and seemingly weird ideas like 12-dimensional strings buzzing around the universe. But we don’t see denialist theories about gravity all over the Internet. Maybe this is simply because the answer to the question does not seem to affect our daily lives one way or the other. But it is also the case that even though particle physics is no more or less complex than molecular genetics, we all believe the former is above our heads but the latter is within our purview. Nonphysicists rarely venture an opinion on whether or not dark matter exists, but lots of nonbiologists will tell you exactly what the immune system can and cannot tolerate. Even when scientific matters become a little more frightening, in some branches of science they attract only mild attention. Some people decided that the supercollider in Switzerland called the Large Hadron Collider (LHC) might be capable of producing black holes that would suck in all of Earth. Right before the LHC was scheduled to be tested at full capacity, there were a few lawsuits filed around the world trying to stop it on the grounds that it might induce the end of the world. A few newspapers even picked up on the story. But there were no mass protests or Congressional hearings. Nobody tried to storm the LHC laboratories to stop the experiment. Scientists knew all along that the black hole prediction was mistaken. The LHC was turned on, high-energy beams of subatomic particles collided, and something called the Higgs boson (sometimes called the “God particle”) was discovered. Many popular news outlets celebrated this wonderful advance in particle physics, testimony to our enduring interest in science. How different the response of the media to the claim that nuclear power will destroy the world. Scientists tell us that isn’t the case, but to many their considered reassurance makes no difference at all.

Perhaps understandably, when it comes to our health, everyone is an expert, and when we don’t know the cause of something we care deeply about, we can gravitate en masse to all kinds of proposals. Sometimes those theories contain a germ of plausibility to them—the idea that a mercury preservative called thimerosal that was used to preserve vaccines might be linked to autism was not entirely out of the question. It is just that careful research proved it to be wrong. But before that happened, the idea that vaccines cause autism was already ingrained in the minds of many vulnerable people, especially parents of children with autism desperately searching for answers.

Similarly, we are frightened when we get coughs and colds. Viruses are mysterious entities, so we decide that a class of medication called antibiotics is going to kill them and cure us. Although we do now have some drugs that successfully treat viral illnesses like HIV and herpes, the ones we take for what we believe are sinus infections and “bad head colds,” drugs like “Z-Pak,” are totally ineffective against these viral illnesses. For the most part, scientists don’t know how to treat viral illnesses, and therefore the myth that common antibiotics will do the trick fills the knowledge gap. Even doctors behave as if they believe it, despite the fact that they are all taught in medical school that this is absolutely not the case. It is simply too frightening to accept the fact that we don’t know what causes something or how to cure it. So why do we trust experts when they tell us that black holes are not going to suck us up but think we are the experts when we tell our doctor we must have a Z-Pak? How can we live with uncertainty, even when that uncertainty extends to scientists who have not—and never will—figure out all the answers?

In this chapter, we seek to understand why people feel the need to fill the ignorance gap. We argue that it is highly adaptive to know how to attribute causality but that people are often too quick to do so. This is another instance in which adaptive, evolutionary qualities have done us a disservice in the face of complex debates and rational thinking. In particular, people have a difficult time sitting with uncertainty and an especially hard time accepting coincidence. We consider the evidence from decades of psychological research showing people’s misunderstanding of cause and effect and the elaborate coping mechanisms we have developed as a result. Finally, we suggest some ways to help us better comprehend true causality, without diminishing our ability to attribute cause when it is in fact appropriate.

What Is a Cause?

There is actually no easy answer to the question “What is a cause?” Philosophers, scientists, and economists have been arguing for centuries over what constitutes causality, and there is no reason to believe that any of these fields has a great answer to the question. The issue of causality really begins with Aristotle. Aristotle’s theory of the four causes (material, formal, efficient, and final) is extremely complex and not too central here, but an aspect of Aristotle’s philosophy of cause that is useful for our purposes is the notion that we do not have knowledge of something until we know its cause. Aristotle presented this notion in Posterior Analytics, and the concept behind it seems to describe a central inclination of human psychology. We want to know the why of everything. Anyone who has been around a small child has perhaps not so fond memories of the why game. The child will get into a mood or phase in which he or she asks “Why?” about everything you do or say. “I’m going to the grocery store,” you might say, and the child will immediately ask, “Why?” “Because I need to get bananas,” you might respond, and the child will immediately ask “Why?” again. “Because I like bananas in my cereal,” and the child asks, “Why?” and so forth. This behavior is extremely frustrating for an adult, since at a certain point we grow tired of providing explanations for everything we are doing. However, insofar as children represent uninhibited human inclinations, the desire to know the why of everything is a basic human instinct. Aristotle simply formalized this intuitive notion by making it clear that we are never comfortable with our knowledge of a subject until we know the “why” behind it. Scientists may know that the MERS coronavirus is a SARS-like contagious disease spreading throughout the Middle East, but they will not stop their investigation and proclaim “knowledge” of this particular outbreak until they understand where precisely it originated.

Aristotle is often referred to as the father of science, but a lot of our scientific thoughts about causality come more directly from the British empiricists of the 18th century, especially David Hume. Hume and his contemporaries were basically obsessed with the notion of “experience” and how it generates knowledge. Accordingly, Hume found a problem with using inductive reasoning to establish causation, a not inconsequential finding since this is the fundamental way that scientists attempt to establish that something causes something else. Hume basically left us with a puzzle: if we always observe B occurring after A, we will automatically think that A causes B due to their contiguity. Hume of course notes that this perception does not mean that A is actually the cause of B. Yet he is more interested in establishing how we perceive causality than in defining what a cause truly is. In this sense, Hume’s philosophy represents a kind of psychological theory. How we perceive causality will be based on temporality and contiguity, whether or not these are truly reliable ways of establishing causality. In other words, we are naturally inclined to attribute the experience of constant contiguity to causality.

In 1843, John Stuart Mill converted some of this philosophizing on causation into a more scientific process of inquiry. Mill posited five methods of induction that could lead us to understand causality. The first is the direct method of agreement. This method suggests that if something is a necessary cause, it must always be present when we observe the effect. For example, if we always find the varicella zoster virus in people with chickenpox symptoms, then varicella zoster must be a necessary cause of chickenpox. We cannot observe any cases of chickenpox symptoms in which the varicella zoster virus is not present.

The second method is the method of difference. If two situations are exactly the same in every aspect except one, and the effect occurs in one but not the other situation, then the one aspect they do not have in common is likely to be the cause of the effect of interest. For example, if two people spent a day eating exactly the same foods except one person ate potato salad and the other did not, and one ends up with food poisoning and the other is not sick, Mill would say that the potato salad is probably the cause of the food poisoning. The third method is a simple combination of the methods of agreement and difference.
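Mill’s first two methods can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the case records, the extra foods and circumstances, and the helper names `method_of_agreement` and `method_of_difference` are hypothetical and are not drawn from Mill.

```python
def method_of_agreement(cases_with_effect):
    """Return the factors present in every case where the effect occurred."""
    factor_sets = [set(factors) for factors in cases_with_effect]
    return set.intersection(*factor_sets)


def method_of_difference(case_with_effect, case_without_effect):
    """Return the factors present only in the case where the effect occurred."""
    return set(case_with_effect) - set(case_without_effect)


# Hypothetical diners: identical meals except for the potato salad.
sick_diner = {"bread", "chicken", "potato salad"}
healthy_diner = {"bread", "chicken"}
suspects = method_of_difference(sick_diner, healthy_diner)

# Hypothetical chickenpox cases: only the virus is common to all of them.
chickenpox_cases = [
    {"varicella zoster", "daycare", "age 4"},
    {"varicella zoster", "school", "age 7"},
    {"varicella zoster", "daycare", "age 7"},
]
common = method_of_agreement(chickenpox_cases)

print(suspects)  # {'potato salad'}
print(common)    # {'varicella zoster'}
```

The third, joint method would simply apply both functions to the same set of cases. Note that the sketch inherits the methods’ main weakness: it can only point to factors that someone thought to record.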

Mill’s fourth method is the method of residue. In this formulation, if a range of conditions causes a range of outcomes and we have matched the conditions to the outcomes on all factors except one, then the remaining condition must cause the remaining outcome. For example, if a patient goes to his doctor complaining of indigestion, rash, and a headache, and he had eaten pizza, coleslaw, and iced tea for lunch, and we have established that pizza causes rashes and iced tea causes headaches, then we can deduce that coleslaw must cause indigestion.
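The method of residue amounts to a subtraction, which the following sketch makes explicit. The function name `method_of_residue` and the data layout are assumptions made for illustration; the lunch items and symptoms are the ones from the example above.

```python
def method_of_residue(conditions, outcomes, known_links):
    """Pair the one unexplained condition with the one unexplained outcome.

    known_links maps each already-explained condition to its outcome.
    """
    leftover_conditions = set(conditions) - set(known_links)
    leftover_outcomes = set(outcomes) - set(known_links.values())
    if len(leftover_conditions) != 1 or len(leftover_outcomes) != 1:
        raise ValueError("need exactly one condition and one outcome left over")
    return leftover_conditions.pop(), leftover_outcomes.pop()


lunch = ["pizza", "coleslaw", "iced tea"]
symptoms = ["indigestion", "rash", "headache"]
established = {"pizza": "rash", "iced tea": "headache"}

residue = method_of_residue(lunch, symptoms, established)
print(residue)  # ('coleslaw', 'indigestion')
```

The deduction is only as good as the list of conditions: if the patient also skipped breakfast and nobody wrote that down, the sketch would still blame the coleslaw.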

The final method is the method of concomitant variation. In this formulation, if one property of a phenomenon varies in tandem with some property of the circumstance of interest, then that property most likely causes the circumstance. For example, if various samples of water contain the same ratio of concentrations of salt and water and the level of toxicity varies in tandem with the level of lead in the water, then it could be assumed that the toxicity level is related to the lead level and not the salt level. Following on the heels of Mill, scientific theories of causation developed further with Karl Popper and Austin Bradford Hill. In 1965, Hill devised nine criteria for causal inference that continue to be taught in epidemiology classes around the world:

1. Strength: the larger the association, the more likely it is causal.

2. Consistency: consistent observations of suspected cause and effect in various times and places raise the likelihood of causality.

3. Specificity: the proposed cause results in a specific effect in a specific population.

4. Temporality: the cause precedes the effect in time.

5. Biological gradient: greater exposure to the cause leads to a greater effect.

6. Plausibility: the relationship between cause and effect is biologically and scientifically plausible.

7. Coherence: epidemiological observation and laboratory findings confirm each other.

8. Experiment: when possible, experimental manipulation can establish cause and effect.

9. Analogy: cause-and-effect relationships have been established for similar phenomena.

Some of these criteria are much more acceptable to modern epidemiologists than are others. For example, any scientist will agree that temporality is essential in proving causality. If the correct timeline of events is missing, causality cannot be established. On the other hand, criteria such as analogy and specificity are much more debated. These criteria are generally viewed as weak suggestions of causality but can by no means “prove” a cause-and-effect relationship.
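Hill’s biological gradient, like Mill’s method of concomitant variation, asks whether the effect rises and falls with the suspected cause. One rough way to check this is a correlation coefficient. The sketch below uses invented water-sample numbers (every value is hypothetical) and a hand-written Pearson correlation; it illustrates the idea and is not a method attributed to Mill or Hill.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length lists of numbers.

    Undefined (division by zero) if either list is constant.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Hypothetical measurements from five water samples.
lead_level = [1, 5, 10, 20, 40]     # varies widely between samples
salt_level = [31, 29, 29, 31, 30]   # nearly the same in every sample
toxicity = [2, 9, 21, 39, 82]

r_lead = pearson_r(lead_level, toxicity)
r_salt = pearson_r(salt_level, toxicity)
print(f"lead vs. toxicity: r = {r_lead:.2f}")
print(f"salt vs. toxicity: r = {r_salt:.2f}")
```

A correlation near 1 for lead and near 0 for salt is consistent with lead driving the toxicity, but, as the criteria above make clear, a strong gradient on its own still falls short of proof.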

If It Can Be Falsified It Might Be True

In the early 20th century, Popper defined what has come to be a central tenet of the scientific notion of causality and, more specifically, the kind of causality that becomes so difficult for nonscientists to grasp. For Popper, proving causality was the wrong goal. Instead, induction should proceed not by proving but by disproving. Popper is thus often seen as the forefather of empirical falsification, a concept central to modern scientific inquiry. Any scientific hypothesis must be falsifiable. This is why the statement “There is a God” is not a scientific hypothesis: it is impossible to disprove. The goal of scientific experimentation, therefore, is to try to disprove a hypothesis by a process that resembles experience or empirical observation. It is this line of thinking that informs the way hypothesis testing is designed in science and statistics. We are always trying to disprove a null hypothesis, which is the hypothesis that there is in fact no effect or difference to be found. Therefore, a scientific finding will always be a matter of rejecting the null hypothesis and never a matter of accepting the alternative hypothesis.

For example, in testing a new medication, the technical aspects of acceptable modern experimental design frame the question being asked not as “Can we prove that this new drug works?” but rather “With how much certainty can we disprove the idea that this drug does not work?” As we discuss further in the next chapter on complexity, this formulation is particularly counterintuitive and causes scientists to hesitate to make declarative statements such as “Vaccines do not cause autism.” Scientists are much more comfortable, simply because of the way experimentation is set up, saying something like “There is no difference in incidence of autism between vaccinated and non-vaccinated individuals.” For most of us, this kind of statement is unsatisfactory because we are looking for the magic word cause. Yet, following Popper, scientific experimentation is not set up to establish cause but rather to disprove null hypotheses, and therefore a statement with the word cause is actually somewhat of a misrepresentation of the findings of scientific experimentation.
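The logic of rejecting a null hypothesis can be made concrete with a small worked example. The sketch below uses a one-sided Fisher exact test, one common choice among several, on an invented drug trial. The patient counts, the 0.05 threshold, and the function name `fisher_one_sided` are all assumptions made for illustration.

```python
from math import comb


def fisher_one_sided(treated_recovered, treated_total,
                     control_recovered, control_total):
    """Chance of seeing at least this many recoveries in the treated group
    if the drug does nothing (the null hypothesis) and recoveries are
    simply shuffled at random between the two groups."""
    total = treated_total + control_total
    recovered = treated_recovered + control_recovered
    max_k = min(treated_total, recovered)
    favorable = sum(
        comb(recovered, k) * comb(total - recovered, treated_total - k)
        for k in range(treated_recovered, max_k + 1)
    )
    return favorable / comb(total, treated_total)


# Hypothetical trial: 15 of 20 recover on the drug, 8 of 20 on placebo.
p_value = fisher_one_sided(15, 20, 8, 20)
alpha = 0.05  # conventional threshold, an assumption of this sketch

if p_value < alpha:
    verdict = "reject the null hypothesis of no difference"
else:
    verdict = "fail to reject the null hypothesis"
print(f"p = {p_value:.3f}: {verdict}")
```

Notice what the code never says: there is no branch that prints “the drug works.” The most it can report is that the data would be surprising if the drug did nothing.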

So why is it so difficult to establish a cause in a scientific experiment? As Popper observed, and as many modern scientists have lamented, it is because it is impossible to observe the very condition that would establish causality once and for all: the counterfactual. In 1973, David Lewis succinctly summarized the relationship between causality and the idea of the counterfactual:

We think of a cause as something that makes a difference, and the difference it makes must be a difference from what would have happened without it. Had it been absent, its effects—some of them, at least, and usually all—would have been absent as well.1

The counterfactual condition refers to what would have happened in a different world. For example, if I am trying to figure out whether drinking orange juice caused you to break out in hives, the precise way to determine this would be to go back in time and see what would have happened had you not had the orange juice. Everything would have been exactly the same, except for the consumption of orange juice. I could then compare the results of these two situations, and whatever differences I observe would have to be due to the consumption or non-consumption of orange juice, since this is the only difference in the two scenarios. You are the same you, you have the same exact experiences, and the environment you are in is exactly the same in both scenarios. The only difference is whether or not you drink the orange juice. This is the proper way to establish causality.

Since it is impossible to observe counterfactuals in real life (at least until we have developed the ability to go back in time), scientists often rely on randomized controlled trials to best approximate counterfactual effects. The thinking behind the randomized trial is that since everyone has an equal chance of being in either the control or experimental group, the people who get the treatment will not be systematically different from those randomized to get no treatment and therefore observed results can be attributed to the treatment. There are, however, many problems with this approach. Sometimes the two groups talk to each other and some of the experimental approach can even spill over into the control group, a phenomenon called “contamination” or “diffusion.” Sometimes randomization does not work and the two groups turn out, purely by chance in most of these instances, to in fact be systematically different. Sometimes people drop out of the experiment in ways that make the two groups systematically different at the end of the experiment, a problem scientists refer to as “attrition bias.”
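A short simulation shows why randomization is treated as a stand-in for the unobservable counterfactual. In the sketch below every simulated person has two potential outcomes, one with treatment and one without, but only one of them is ever recorded. The population size, the outcome scale, and the true effect of 2.0 are invented for illustration.

```python
import random


def simulate_trial(n_people=10_000, true_effect=2.0, seed=0):
    """Estimate a treatment effect from a simulated randomized trial."""
    rng = random.Random(seed)
    treated, control = [], []
    for _ in range(n_people):
        # Both potential outcomes exist in the simulation...
        outcome_untreated = rng.gauss(50.0, 10.0)
        outcome_treated = outcome_untreated + true_effect
        # ...but a coin flip decides which single one we get to observe.
        if rng.random() < 0.5:
            treated.append(outcome_treated)
        else:
            control.append(outcome_untreated)
    return sum(treated) / len(treated) - sum(control) / len(control)


estimate = simulate_trial()
print(f"estimated effect: {estimate:.2f} (true effect in the simulation: 2.0)")
```

No individual counterfactual is ever seen, yet the difference between the group averages lands close to the true effect because the coin flip makes the two groups alike on average. The sketch assumes none of the problems listed above: no contamination, no unlucky imbalance, and no dropouts.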

Perhaps most important for epidemiological studies of the sort most relevant for the issues we deal with in this book, randomized controlled trials are often either impractical or downright unethical. For example, it would be impractical to randomly assign some people to eat GMOs and some people to not eat GMOs and then to see how many people in each group developed cancer. The development of cancer occurs slowly over many years, and it would be difficult to isolate the effects of GMOs versus all the other factors people are exposed to in their everyday lives that could contribute to cancer. Never mind the issue that a very substantial amount of the food we have been eating for the last decade in fact contains GMOs, making it a lot harder than might be thought to randomize people to a no-GMO group without their knowing it by virtue of the strange food they would be asked to eat. If we wanted to have a tightly controlled experiment to test this directly, we would have to keep people in both control and experimental groups quarantined in experimental quarters for most of their adult lives and control everything: from what they ate to what occupations they pursued to where they traveled. This would be costly, impractical, and, of course, unethical. We also could not randomize some severely depressed individuals to have ECT and others to no ECT, because if we believe that ECT is an effective treatment, it is unethical to deny the treatment to people who truly need it. In other words, once you have established the efficacy of a treatment, you cannot deny it to people simply for experimental purposes.

For all of these reasons, epidemiologists often rely on other study designs, including quasi-experimental designs, cohort studies, and case-control studies, many of which include following people with certain exposures and those without those exposures and seeing who develops particular diseases or conditions. The precise details of these different methods are less important for us now than what they have in common: instead of randomly assigning participants to different groups before starting the study, in these designs the research subjects have either already been or not been exposed to one or more conditions for reasons not under the researchers’ control. Some people just happen to live near rivers that are polluted with industrial waste and others do not live as close to them; some people had the flu in 2009 and some didn’t; some people drink a lot of green tea and others don’t; or some people smoke and others don’t.

In a world in which theories of causality ideally rely heavily on counterfactuals, these study designs are imperfect for a number of reasons. There are so many factors that determine why a person is in one of these groups, or cohorts, and not in the other that it is never an easy matter to isolate the most important ones. Take a study trying to find out if living near a polluted river increases the risk for a certain type of cancer. It could be that people who live near rivers own more boats and are exposed to toxic fumes from the fuel they use to power those boats or that more of them actually work at the factory that is polluting the river and are exposed to chemicals there that aren’t even released into the river. If the researchers find more cancers in the group living near the polluted rivers, how do they know if the problem is the water pollution itself, inhaling fumes from boat fuel, or touching toxic chemicals in the factory?
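The polluted-river problem can be simulated. In the sketch below the river itself is given no effect at all on cancer; only working at the factory raises risk, and people who live near the river are more likely to work there. All of the probabilities are invented, and the function names are hypothetical.

```python
import random


def simulate_cohort(n_people=200_000, seed=1):
    """Simulate a cohort in which the river has no effect on cancer."""
    rng = random.Random(seed)
    counts = {}  # (near_river, factory_worker) -> [people, cancers]
    for _ in range(n_people):
        near_river = rng.random() < 0.5
        # Assumption: river residents are far more likely to work at the factory.
        factory_worker = rng.random() < (0.6 if near_river else 0.1)
        # Assumption: only factory work raises cancer risk.
        risk = 0.05 if factory_worker else 0.01
        cancer = rng.random() < risk
        cell = counts.setdefault((near_river, factory_worker), [0, 0])
        cell[0] += 1
        cell[1] += int(cancer)
    return counts


def cancer_rate(counts, near_river, factory_worker=None):
    """Cancer rate for one river group, optionally within one factory stratum."""
    people = cancers = 0
    for (river, factory), (n, c) in counts.items():
        if river != near_river:
            continue
        if factory_worker is not None and factory != factory_worker:
            continue
        people += n
        cancers += c
    return cancers / people


cohort = simulate_cohort()
crude_near = cancer_rate(cohort, near_river=True)
crude_far = cancer_rate(cohort, near_river=False)
stratum_near = cancer_rate(cohort, near_river=True, factory_worker=False)
stratum_far = cancer_rate(cohort, near_river=False, factory_worker=False)
print(f"all residents:    near river {crude_near:.3f}, far from river {crude_far:.3f}")
print(f"non-workers only: near river {stratum_near:.3f}, far from river {stratum_far:.3f}")
```

Comparing all residents makes the river look dangerous; comparing only people who do not work at the factory makes the difference disappear. Real researchers face the harder version of this problem, because they can only adjust for the factors they thought to measure.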

On the other hand, cohort studies have managed to unearth findings as powerful as the irrefutable fact that cigarettes cause cancer. So while many of the tools we have at our disposal are imperfect, they are far from valueless. The problem is simply that establishing that cigarettes cause cancer required many decades of careful research and replicated studies before scientists were comfortable using the magic word “cause.” The reluctance of scientists to use this word often has nothing to do with the strength of the evidence but is simply a remnant of the way scientists are taught to think about causality in terms of counterfactuals. Scientists will be reluctant to say the magic word until someone develops a time machine to allow us to observe actual counterfactual situations. In contrast, as Aristotle so astutely observed, laypeople are primed to look for causality in everything and to never feel secure until they have established it. This disconnect between the crude way in which we are all primed to think about causality and the ways in which scientists are trained to think about it causes a great deal of miscommunication and sometimes a sense of public distrust in scientists’ knowledge.

Pies That Don’t Satisfy

This brings us to a concept of causality used commonly in epidemiology that is for some reason very difficult to wrap our minds around: the sufficient-component cause model. Invented by epidemiologist Ken Rothman in 1976, this model is the subject of numerous multiple-choice questions for epidemiologists and public health students across the globe every year. As one of your authors with training in epidemiology knows, these are the questions that many students get wrong on the test. They are notoriously complex and sometimes even counterintuitive. The model imagines the causes of phenomena as a series of “causal pies.” For example, obesity might be composed of several causal pies of different sorts. One pie might detail environmental causes, such as lack of a good walking environment, lack of healthy food options, and community or social norms. Another pie might include behavioral causes, such as a diet high in sugar and calories, lack of exercise, and a sedentary lifestyle. A third pie might include familial causes, such as poor parental modeling of healthy behaviors, a family culture of eating out or ordering takeout, and a lack of healthy food available in the home. A final pie might involve physiological factors like genes for obesity and hormonal abnormalities that cause obesity like Cushing’s disease or hypothyroidism. Taken together, these different “pies” cause obesity in this model. For a cause to be necessary, it must occur in every causal pie. For example, if factor A needs to be present to cause Disease X, but other factors are also needed to cause Disease X, then the different pies will all have factor A in combination with other factors. If a cause is sufficient, it can constitute its own causal pie, even if there are other possible causes. For example, HIV is a sufficient cause of AIDS. HIV alone causes AIDS, regardless of what other factors are present.

In this model, causes can come in four varieties: necessary and sufficient, necessary but not sufficient, sufficient but not necessary, or neither sufficient nor necessary. The presence of a third copy of chromosome 21 is a necessary and sufficient cause of Down syndrome. Alcohol consumption is a necessary but not sufficient cause of alcoholism. In order to be classified as an alcoholic, alcohol consumption is necessary, but the fact of drinking alcohol in and of itself is not enough to cause alcoholism, or else everyone who drank alcohol would automatically be an alcoholic. Exposure to high doses of ionizing radiation is a sufficient but not necessary cause of sterility in men. This factor can cause sterility on its own but it is not the only cause of sterility, and sterility can certainly occur without it. A sedentary lifestyle is neither sufficient nor necessary to cause coronary heart disease. A sedentary lifestyle on its own will not cause heart disease, and heart disease can certainly occur in the absence of a sedentary lifestyle.
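The four varieties follow mechanically from the definitions once the causal pies are written down. In the sketch below each pie is a set of component causes that together produce the outcome. The function name `classify_cause` is hypothetical, and the components other than those named in the text (for example "genetic vulnerability" or "other cause") are placeholders invented to fill out the pies, not claims about the actual causes of these conditions.

```python
def classify_cause(factor, causal_pies):
    """Classify a factor given every causal pie for one outcome."""
    if not any(factor in pie for pie in causal_pies):
        return "not a cause in this model"
    necessary = all(factor in pie for pie in causal_pies)   # in every pie
    sufficient = any(pie == {factor} for pie in causal_pies)  # a pie by itself
    if necessary and sufficient:
        return "necessary and sufficient"
    if necessary:
        return "necessary but not sufficient"
    if sufficient:
        return "sufficient but not necessary"
    return "neither necessary nor sufficient"


down_syndrome = [{"third copy of chromosome 21"}]
alcoholism = [
    {"alcohol consumption", "genetic vulnerability"},
    {"alcohol consumption", "chronic stress"},
]
male_sterility = [{"high-dose ionizing radiation"}, {"other cause"}]
heart_disease = [
    {"sedentary lifestyle", "high cholesterol"},
    {"smoking", "hypertension"},
]

print(classify_cause("third copy of chromosome 21", down_syndrome))
print(classify_cause("alcohol consumption", alcoholism))
print(classify_cause("high-dose ionizing radiation", male_sterility))
print(classify_cause("sedentary lifestyle", heart_disease))
```

The last case is the one that trips people up: a factor can be neither necessary nor sufficient and still be a genuine cause, because it completes at least one pie.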

Although the model may seem abstract and overly complex, it is actually more relevant to everyday life and everyday health decisions than many people realize. For example, people who refuse to believe that cigarettes cause cancer (and there are still people who refuse to believe it, although there are many fewer than there were a few decades ago) often invoke the following refrain: “My aunt is 90 years old, smoked every day of her life, and she does not have lung cancer. Therefore, cigarettes cannot possibly cause lung cancer.” This statement represents a misunderstanding of the sufficient-component cause model in its most devastating form. Smoking is neither a necessary nor sufficient cause of lung cancer. This means that lung cancer can develop in the absence of smoking and not everyone who smokes will develop lung cancer.2 In other words, people who smoke may not develop lung cancer and people who do not smoke may develop lung cancer. But this does not mean that smoking is not a cause of lung cancer. It certainly is part of the causal pie. It just is not part of every causal pie and it cannot constitute its own causal pie, since the fact of smoking by itself does not produce lung cancer but must be accompanied by a personal genetic susceptibility to cigarette-induced mutations that cause cancer of the lungs. The model shows us that the fact that 90-year-old Aunt Ruth, who has been smoking two packs a day since 1935, does not have lung cancer is actually irrelevant. Smoking causes lung cancer, even if we do not observe cancer in every case of smoking. This is important, because, as we can see, a simple misunderstanding of the sufficient-component cause model can lead people to make incorrect assumptions about the health risks of certain behaviors.

As should be obvious by now, the concept of causality is a difficult one. Almost every intellectual you can think of, from philosophers to scientists, has probably pondered the definition of causality and how to establish it at some point. As we can see, there are a multitude of problems with inferring causality, and, in fact, many epidemiologists and research scientists would say that establishing causality is, in a sense, impossible. Since we cannot observe the counterfactual, we can never know for certain whether input A causes outcome B or whether they are simply strongly associated. Randomized controlled experiments are the closest we can come to a good approximation of the counterfactual condition, but they are often just not possible in our attempt to answer important questions about human health.

Cherry-Picking Yields Bad Fruit

Combine the healthy dose of skepticism with which professional scientists approach the establishment of causality with the natural human desire, noted by Aristotle, for causal mechanisms and we have a very real problem. Because causality is so difficult and because randomized controlled trials are only an approximation of the counterfactual condition, scientists must repeat experiments many times and observe the same result in order to feel some level of confidence in the existence of a causal relationship. Since the probability of finding the same results in numerous trials is relatively low if the true relationship has no causal valence, scientists can eventually begin to posit that a causal relationship exists. For example, the discovery that smoking cigarettes causes lung cancer did not occur overnight. Even though scientists had a strong hunch that this was the case, and there was certainly anecdotal evidence to support it, they had to wait until a number of carefully designed experiments with the appropriate statistical analyses could establish that the relationship was strong enough to be causal and that the observation of lung cancer following smoking was not simply due to random chance. Together with the biological plausibility of tobacco smoke’s ability to cause carcinogenic mutations in lung cells, this evidence eventually allowed scientists to communicate that the association between cigarette smoking and lung cancer was in all probability causal.

This point, about the necessity for multiple experimental demonstrations of the same effect before causality is declared, has another very important implication: in general, the results of a single experiment should not be taken as proof of anything. Unfortunately, the proselytes of anti-science ideas are constantly making this error. Thus, if 50 experiments contradict their beliefs and a single experiment seems consistent with them, certain individuals will seize upon that one experiment and broadcast it, complete with accusations that scientists knew about it all along but were covering up the truth. This is often referred to as cherry-picking the data.
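The arithmetic behind both replication and cherry-picking is simple enough to work through. The sketch below assumes that each study is independent and has a 5 percent chance of producing a false positive when no real effect exists; both figures are conventional simplifications, and the function names are hypothetical.

```python
def chance_all_false_positives(n_studies, alpha=0.05):
    """Chance that every one of n independent studies is a fluke."""
    return alpha ** n_studies


def chance_at_least_one_false_positive(n_studies, alpha=0.05):
    """Chance that at least one of n independent studies is a fluke."""
    return 1 - (1 - alpha) ** n_studies


five_flukes = chance_all_false_positives(5)
one_fluke_in_fifty = chance_at_least_one_false_positive(50)
print(f"five positive studies, all by chance: {five_flukes:.1e}")          # 3.1e-07
print(f"at least one chance positive among fifty: {one_fluke_in_fifty:.2f}")  # 0.92
```

Under these assumptions, five agreeing studies are almost impossible to explain by luck, while one stray positive result among fifty is close to what luck alone would be expected to produce. That is why a replicated finding carries weight and a single outlier does not.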

While scientists verify and replicate the results of these experiments, we are always reluctant to wait patiently for an “answer.” And this is exactly what Aristotle observed. People are not comfortable with observing phenomena in their environments that cannot be explained. As a result, they come up with their own explanations. In many instances, these explanations are incorrect, and, as we shall see, they are very often based on misinterpretations of coincidence. Here, the rational brain’s ability to test and establish causality is completely at odds with the evolutionary impulse to assign causal explanations to everything in sight. The former requires long periods of thought, consideration, and a constant rethinking of what we even mean by cause. The latter feels necessary for everyday survival, to navigate a complex environment, and to satisfy a basic need to feel that the unknown is at least knowable.

Coincidence? I Think Not

A few months ago, one of us (Sara) lost the charger for her laptop and had to find a replacement. The replacement charger had an unusual feature: it needed a moment before the computer registered that the laptop was charging after being plugged in. Perhaps this was because this was not the charger originally designed for this particular laptop. In any case, the first time Sara plugged in her laptop with the new charger, she moved the laptop closer to the electrical socket and noticed that as she was doing this, the computer registered the charge. Sara immediately thought: “The laptop must need to be a certain distance from the electrical socket for the charger to work” and continued this ritual every time she plugged in her computer. One day, her husband interrupted her right after she plugged in her laptop and she did not have a chance to move the laptop closer to the electrical socket. When she sat back down at the computer, she saw that it had registered the charge. Being an adherent of the scientific method, Sara immediately realized that what she had thought to be a causal connection was a simple coincidence and that the real element that allowed the computer to register the charge was not the distance from the electrical socket but rather the simple passage of time. Nevertheless, the next time she plugged in her computer, she did not simply wait for the laptop to register the charge. Instead, she continued her ritual of moving the laptop closer to the electrical socket in what she rationally knew was a futile effort while she waited for the laptop to register the charge. Our readers will immediately recognize that this behavior has an element of confirmation bias to it: Sara refused to give up a belief she had acquired despite the presentation of new evidence to the contrary. But this time look at it from the perspective of causality. The new evidence specifically disconfirms the hypothesis that moving the computer closer to the electrical source causes the computer to register the charge.

This story is not simply meant to demonstrate the fallibility of your authors (although they readily admit they are indeed fallible). It succinctly demonstrates the power of the human desire for causality, the extreme discomfort we all have with coincidence, and the devious ways in which our psychological processes, most of which are intuitive, can overcome, mute, and even sometimes obliterate our rational brains. Indeed, this kind of discomfort with coincidence, even when we know the true cause, has been established in a significant number of classic psychological studies, including experiments by the great B. F. Skinner. What is it about coincidence that makes us so uncomfortable? For one thing, we are primed to appreciate and recognize patterns in our environment.3 If you flash a dot on and off against a wall with a laser pointer and then another one on and off within a tight time frame, people will register this as the motion of one dot rather than the flashing of two distinct dots.4 In other words, people prefer sequences, and they will go to great lengths to rid their environments of randomness. Simple conditioning studies, such as those with Pavlov’s famous dogs, have shown that it is very difficult for us to dissociate things that have for whatever reason become associated in our minds. If your phone rang while you were eating carrots yesterday and then it rang again today while you were again eating carrots, we can probably all agree that this is most likely a coincidence. There is no reason to believe that eating carrots could possibly cause the phone to ring. But we guarantee you that if this happened to you on several occasions, as ridiculous as it is, the thought would cross your mind that maybe the act of eating carrots causes your phone to ring. You will know it is not true, and you may even feel silly for thinking of it, but your mind will almost certainly register the thought.
In reality, you might have a routine in which you eat carrots at a certain time that is also a time when many people are home from work and make phone calls. Maybe your carrot cravings are associated with the period after work and before dinner and this time is likely to be associated with a larger volume of people who could potentially be available to call you. This is an example of two unrelated events co-occurring because of the presence of some common third variable. This example may seem ridiculous, but the principle holds true: our desire to attribute causality is strong enough to override even our own conscious rationality.
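For readers who like to see the arithmetic, the carrot-and-phone scenario can be sketched as a small simulation. This is only an illustration under assumed numbers: the probabilities, the `evening` variable, and the function names `simulate` and `ring_rate` are hypothetical and were chosen for convenience, not drawn from any study. In the sketch, eating carrots never influences the phone. Both simply become more likely in the evening.

```python
import random


def simulate(days=20000, seed=0):
    """Generate (evening, carrots, ring) observations with made-up probabilities."""
    rng = random.Random(seed)
    rows = []
    for _ in range(days):
        evening = rng.random() < 0.5          # the common third variable
        p_carrots = 0.6 if evening else 0.1   # assumed: carrots are mostly an evening snack
        p_ring = 0.5 if evening else 0.1      # assumed: callers are mostly free in the evening
        carrots = rng.random() < p_carrots
        ring = rng.random() < p_ring          # drawn without any reference to carrots
        rows.append((evening, carrots, ring))
    return rows


def ring_rate(rows, carrots, evening=None):
    """Share of observations with a ringing phone, optionally within one time of day."""
    picked = [r for (e, c, r) in rows
              if c == carrots and (evening is None or e == evening)]
    return sum(picked) / len(picked)


if __name__ == "__main__":
    rows = simulate()
    print("All hours:    carrots", round(ring_rate(rows, True), 3),
          "| no carrots", round(ring_rate(rows, False), 3))
    print("Evening only: carrots", round(ring_rate(rows, True, True), 3),
          "| no carrots", round(ring_rate(rows, False, True), 3))
```

Looked at across all hours, the phone appears to ring far more often when carrots are being eaten. Once the comparison is made within the evening alone, the apparent link should all but vanish, which is what a common third variable predicts.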

We Love Our Patterns

As we’ve just established, human beings are primed to look for contiguity. We react to patterns in our environment above all.5 This means that if two events just happen to occur together, we tend to assign a pattern to them that assumes that these events occur together regularly for a particular reason. Given the inclination Aristotle described to assign causality to observed phenomena, we will likely begin to believe that not only do these events occur together regularly but they also occur together regularly because one causes the other. A belief, in this most basic sense, is therefore the simple association the brain makes between two events when event B follows event A. The next time A occurs, our brains learn to expect B to follow. This is the most basic kind of belief formation: “I believe that eating carrots causes the phone to ring, since the last time I ate carrots the phone rang, and the next time I eat carrots my brain will expect the phone to ring again.”

Psychologists have often noted that this kind of pattern and belief formation probably provided our primitive ancestors with an important survival advantage. Since these ancestors had little means available to them to systematically separate causal connections from coincidence, they learned to pay attention to every observed association and to assume causality, just in case.6 For example, if you were once chased and injured by an animal with orange and black stripes, you might soon associate the colors orange and black with danger. When you see orange and black in your environment subsequently, you will become anxious, your sympathetic nervous system will be activated, your psychological shortcut for “Orange and black cause danger” will be activated, and you will exhibit avoidant behavior—that is, you will run as fast as you can. This behavior might cause you to avoid orange-and-black objects that are not actually dangerous to you, but at the same time it will also help you to avoid tigers and any other orange-and-black objects that are dangerous to you, and you will therefore be much more likely to survive than someone who has not developed this pattern recognition. Thus in the absence of the ability to parse out causal from noncausal factors, it was evolutionarily beneficial for primitive human beings to err on the side of caution and assign causality to situations that might be dangerous or to elements of clearly dangerous situations that may or may not be truly causal. This evolutionary benefit of over-assigning causality probably became hardwired in human brains and has now become a fact of everyday existence and a natural human inclination.

Stuck in a Pattern Jam

It would therefore not be an exaggeration to assert that we are most comfortable when we are aware of what causes the phenomena in our environments. We can think back to the last time we were stuck in a horrendous traffic jam. After cursing our luck and swearing a bit, our first thought was “Why? Why is there a traffic jam? Is it an accident? Rush hour? Construction? Is everyone just going to the Jersey Shore because it is a nice day? Is it a holiday?” Then we turned on the radio and tuned into the traffic channel, waiting anxiously for news about the traffic on our route. We did not pay much attention to traffic reports for the route before we set out, which might actually have been helpful since we might have been able to plan an alternative route. But once in the traffic jam, we became completely focused on hearing about why we were stuck in this particular traffic jam at this particular moment.

In reality, when you are sitting in the middle of a traffic jam that extends for many miles, listening to the traffic report and even knowing the cause of the traffic has no practical value. You cannot change your route because you are stuck, and the next exit is many miles away. You cannot get out of your car and take the subway or walk instead. You cannot go back in time and take an alternative route or rush to the scene of the accident, get the site cleared up, and fix the traffic problem more efficiently. There is literally nothing you can do about the situation you are in, and knowing its cause will do nothing to help you. Nevertheless, we feel a strong need to know why. We are not satisfied just waiting in the traffic, listening to some music, and eventually getting home. The comforting feeling of being able to assign causes to observed phenomena in our environments probably has a lot to do with the evolutionary advantage of recognizing patterns and causes discussed earlier. Since we are hardwired to look for causes and since recognizing causes once allowed us a significant evolutionary advantage, we will most often feel a great sense of relief when we figure out the cause of something. Knowing what caused the traffic jam didn’t make it go away, but it did help us cope with it.

This is the sort of thinking that drives us to the doctor when we have unexplained symptoms. First and foremost, we believe that the doctor can offer us tangible relief and possibly even a cure. At the same time, we also take great relief in a diagnosis, not simply because it often moves us closer to a cure, but also because we intuitively feel better when we know the why behind the phenomena around us. How unsatisfied and even angry we feel if the doctor tells us she cannot find what is causing our symptoms, which is in fact very often the case. Unfortunately, this mentality leads both doctors and patients to embark on diagnostic test adventures. Our healthcare system is being bankrupted by unnecessary MRIs, CAT scans, and other expensive tests in futile searches for a cause that will make us feel we are in control. In medical school doctors are taught: “Do not order a test unless you know that the result of that test will make a difference in the treatment you recommend to the patient.” Yet, confronted with a patient who has symptoms, that wisdom goes right out the window. A doctor may know very well that the best treatment for a patient complaining of back pain who has a normal neurological examination is exercise and physical therapy. In the great majority of cases, nothing that turns up on an MRI of the back, even a herniated disc, will change that approach. But doctors still order the MRI so that they can tell the patient “Your back pain is caused by a herniated disc.”7 Then the doctor recommends exercise and physical therapy.

We therefore create a world of “arbitrary coherence,” as Dan Ariely has termed it.8 Our existence as “causal-seeking animals”9 means that the kinds of associations and correlations that researchers notice in the process of attempting to establish causality are immediately translated into true and established causes in our minds. We can know rationally that association does not prove causation, and we can even recognize examples in which this kind of thinking is a fallacy, and all the same, our innate psychological processes will still undermine us and assign causality whenever possible. And as many researchers have established, once we come to believe something, once we formulate our hypothesis that carrot-eating causes phone-ringing, we are extremely slow to change our beliefs, even if they have just been formulated or have been formulated on the basis of weak or inconsistent evidence.10

What does all of this mean for our evaluation of health-related information? Many phenomena associated with health are actually coincidental. For example, it is possible to have an allergic rash in reaction to a medication and also have an upset stomach, but the two symptoms might be coincidental rather than related. This is actually quite common in everyday life, but we tend to believe that any symptoms we have at the same time must all be causally related to the same condition.

It Really Is Just a Coincidence

In terms of health topics discussed in this book, coincidence can be interpreted as causality when no clear cause has been identified by science. The most potent example is the vaccines-autism issue. Scientists have some ideas about factors that may contribute to autism, such as older age of the father at conception and certain risk genes, but for the most part, the causes are a mystery.11 It becomes even more difficult to conceptualize cause in the case of chronic diseases, for which contributing factors can be diverse and every individual can have a different combination of risk factors that cause the same disease. The timing of childhood vaccines and of the onset of autism are so close that they can be considered synchronous. This synchronicity opens up the potential for coincidence to be interpreted as cause. Indeed, many people have viewed the rise in rates of autism and the synchronous rise in volume of childhood vaccines as more than coincidence. They insist that adding vaccines to the childhood regimen “overwhelms” the child’s immune system and results in devastating chronic health outcomes, including autism.

A similar coincidence could be observed with nuclear power and cancer. A rise in cases of cancer in a particular town might coincide in time with the building of a nuclear power plant. Based on what we know about the development of cancer, it is very unlikely that a nuclear power plant could immediately cause cancer. Even if there were an association between nuclear power plants and cancer, it would take a long time for cancer cases to rise after the building of the plant. But our innate search for causality might cause us to view this coincidence as cause and immediately decide that even the building of a nuclear power plant is a real cause of devastating disease.

The interpretation of coincidence as cause is extremely common in misunderstandings of disease causality and health dangers. As we discussed in the introduction, the complexity of establishing cause in medical science and the divergence between the ways scientists and the rest of us conceptualize cause exacerbate the situation further.

Rabbit Feet and Other Strange Beliefs

Many definitions of superstition involve the notion that superstition is established by a false assignment of cause and effect.12 Superstition is an extremely powerful human inclination that perfectly fits the notion of filling the ignorance gap. We cannot know why many things around us happen, so we invent reasons or ways to try to control occurrences and we assign causes to nonrelated incidents that may have occurred alongside an event. We all know how this works, of course, and we are all guilty of engaging in superstition, even if we do not consider ourselves particularly superstitious people. We might re-wear clothing that we thought brought us good luck at a recent successful interview or eat the same cereal before a test that we ate before another test on which we performed well. These are examples of superstitious behavior. Many psychologists, medical researchers, and philosophers have framed superstition as a cognitive error. Yet in his 1998 book Why People Believe Weird Things: Pseudoscience, Superstition, and Other Confusions of Our Time, Michael Shermer challenges the notion that superstition is altogether irrational. Shermer effectively asks why superstition would be so pervasive if it were a completely irrational, harmful behavior. Shermer hypothesizes that in making causal associations, humans confront the option to deal with one of two types of errors that are described in classic statistical texts: Type I errors involve accepting something that is not true, and Type II errors involve rejecting something that is true. Shermer proposes that in situations in which committing a Type II error would be detrimental to survival, natural selection favors strategies that involve committing Type I errors. 
Hence superstitions and false beliefs about cause and effect arise.13 This is precisely the argument we made earlier with our example about avoiding orange and black after an attack by an orange-and-black animal: erring on the side of caution and believing something that may not be true (for example, that everything orange and black is a threat) is more beneficial than potentially rejecting something that may be true.
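The logic of this trade-off can be made concrete with a back-of-the-envelope calculation. The sketch below is our own illustration, not Shermer's model: the function names and the numbers (a 1 percent chance that an orange-and-black shape is a tiger, a cost of 1 for fleeing needlessly, a cost of 1,000 for ignoring a real tiger) are hypothetical, and it assumes for simplicity that fleeing from a real threat costs nothing extra.

```python
def expected_costs(p_danger, cost_false_alarm, cost_miss):
    """Return (expected cost of always fleeing, expected cost of never fleeing)."""
    # Always fleeing: the only errors are Type I (running from something harmless).
    always_flee = (1 - p_danger) * cost_false_alarm
    # Never fleeing: the only errors are Type II (ignoring a real threat).
    never_flee = p_danger * cost_miss
    return always_flee, never_flee


def break_even_probability(cost_false_alarm, cost_miss):
    """Probability of danger above which always fleeing is the cheaper habit."""
    return cost_false_alarm / (cost_false_alarm + cost_miss)


if __name__ == "__main__":
    flee, ignore = expected_costs(p_danger=0.01, cost_false_alarm=1.0, cost_miss=1000.0)
    print("Expected cost of always fleeing:", flee)
    print("Expected cost of never fleeing: ", ignore)
    print("Break-even probability of danger:", break_even_probability(1.0, 1000.0))
```

Under these assumed costs, the jumpy ancestor comes out ahead even though nearly every flight is a false alarm. The habit only stops paying off when the threat is rarer than the break-even probability.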

We Are All Pigeons

How do we know that superstitious beliefs are so pervasive? Many of us consider ourselves rational and might reject the notion that we have superstitious inclinations. Yet classic experiments by some of the greatest psychologists, including B. F. Skinner, have demonstrated how natural and ubiquitous superstitious behavior is, even among nonhuman species. Skinner’s experiments on superstitious behaviors in pigeons have become some of the most powerful evidence in favor of the idea that superstition is a natural, almost automatic, response in a variety of species. In one experiment, Skinner placed hungry pigeons in a chamber where a feeder automatically released food every 15 seconds. At first, the pigeons were stationary and waited for the food every 15 seconds. After some time had passed, however, each pigeon developed distinctive rituals while waiting for the food to be released. Some walked in circles, some engaged in head thrusting, and others bobbed their heads.14 The interesting finding here is that even though the pigeons had already learned that the food was released automatically every 15 seconds, they still developed rituals while they were waiting that were continuously reinforced by the automatic release of the food after 15 seconds. Once the rituals were reinforced by the release of the food, they were simply strengthened in the minds of the pigeons, who then continued these particular rituals without fail until the end of the experiment. This experiment suggests that animals have some form of innate desire for control over causation. An unknown cause, such as an unknown mechanism releasing food every 15 seconds, is not acceptable. This example is exactly like Sara’s ritual of moving the computer closer to the electric socket, even though she had already learned that time was the real cause of the computer’s registering of the charge.
Either Sara has the mind of a pigeon, or this need to fill the causal ignorance gap is so strong and so basic that all species, from pigeons to dogs to monkeys to humans, are capable of engaging in superstitious rituals to establish control over the outcomes observed in their environments.

A similar experiment with children established the same point. In this experiment, children ages 3 to 6 first chose a toy they wanted, then they were introduced to Bobo, a clown doll who automatically dispensed marbles every 15 seconds. If enough marbles gathered, the child would get the toy. Even though the children knew that Bobo automatically dispensed marbles every 15 seconds, they still developed unique ritual behaviors. Some children started touching Bobo’s nose, some smiled at Bobo, others grimaced at him. This experiment again showed that people want to create causes they have control over and they want to be able to explain effects through their own actions.16

According to a Gallup poll, about 50% of Americans believe that some kind of superstitious behavior, such as rubbing a rabbit’s foot, will result in a positive outcome, such as doing well on a test.15 Superstitious behaviors and beliefs may be reinforced because they seem to improve people’s performance. In one experiment, college students were given 10 attempts to putt a golf ball. Students who were told that the ball was “lucky” performed significantly better than those who were told nothing. Similarly, students who were given a task to perform in the presence of a “lucky charm” performed significantly better than students with no “lucky charms.”17 These types of experiments show us not only that superstition can be reinforced but also that, once again, irrational beliefs may be beneficial to us in some way. After all, superstition did improve the students’ performances without any negative consequences. As we have discussed throughout this book, being strictly rational is not always the most immediately adaptive strategy. Unfortunately, however, eschewing reason can produce disastrous misconceptions in situations in which rational objectivity is truly needed.

The Dreaded 2 × 2 Table

What are some of the fallacies, other than superstition and disbelief in coincidence, that people commit when it comes to cause and effect? One major source of misunderstanding of cause and effect is our general inability to understand independent events. We tend to think that the occurrence of one event changes the probability of another even when the two are unconnected. If I just sat in traffic passing through Connecticut on my way to New York from Boston, I may conjecture that one traffic jam per trip is enough and that I am unlikely to hit traffic in New York. This is incorrect, because a jam in Connecticut does not use up any quota of bad luck. Both jams may be influenced by a common third factor, like the fact that it is a holiday and many people are therefore on the road, but experiencing traffic in Connecticut does nothing to lower the probability of experiencing traffic in New York, even though you are experiencing the two one after the other. This is the central concept behind the gambler’s fallacy. People tend to think that the world is somehow “even,” so that if we spin 25 blacks in a row on the roulette wheel, we feel certain that the next spin has to be red. In reality, each spin is independent, and the fact of spinning 25 blacks in a row does not affect the probability of a red on the next spin.18
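Skeptical readers can check the roulette claim for themselves with a short simulation. The sketch below rests on a few assumptions of our own: a European-style wheel with 18 red, 18 black, and 1 green pocket, a streak of 5 blacks rather than 25 (a run of 25 is far too rare to turn up in a simulation of this size), and a hypothetical function name. It counts how often red appears on the spin immediately following a run of blacks.

```python
import random

# Assumed European wheel: 18 red, 18 black, 1 green pocket.
POCKETS = ["red"] * 18 + ["black"] * 18 + ["green"]


def red_rate_after_black_streak(streak=5, spins=500_000, seed=1):
    """Return (share of reds right after `streak` or more blacks, number of such spins)."""
    rng = random.Random(seed)
    run = 0        # current number of consecutive blacks
    followups = 0  # spins that came right after a long enough black streak
    reds = 0       # how many of those follow-up spins landed on red
    for _ in range(spins):
        colour = rng.choice(POCKETS)
        if run >= streak:
            followups += 1
            if colour == "red":
                reds += 1
        run = run + 1 if colour == "black" else 0
    return reds / followups, followups


if __name__ == "__main__":
    rate, n = red_rate_after_black_streak()
    print("Chance of red on any spin:     ", round(18 / 37, 4))
    print("Share of red after the streak: ", round(rate, 4), "based on", n, "spins")
```

If the wheel were somehow keeping things “even,” red would turn up noticeably more often after a streak of blacks. In a simulation like this one, the share of reds after a streak should stay close to the 18-in-37 chance that applies to every spin.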

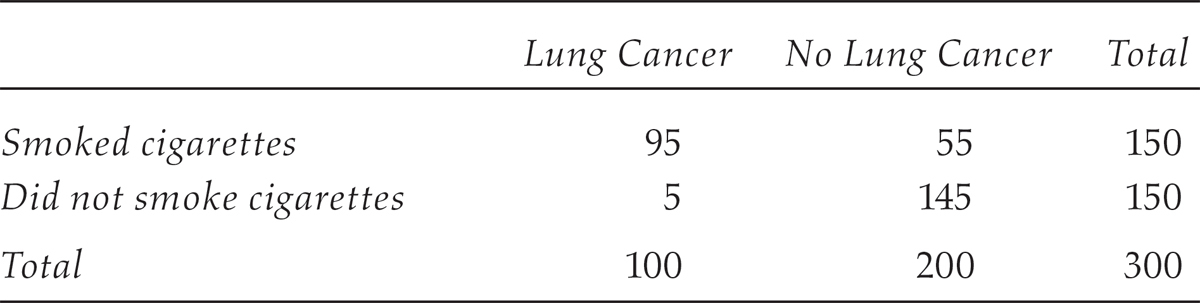

TABLE 2 A Classic 2 × 2 Table

Why do we make these mistakes? Cognitive psychologists have found that we are susceptible to two types of bias that make us more likely to make these particular kinds of errors. The first is the illusory correlation, a bias that leads us to believe that things are related when they are not; Sara’s belief about her computer charger is an example. The second is an attentional bias, in which we pay too much attention to certain types of information and not others. To illustrate how this one works, it is best to think about a standard 2 × 2 table, a favorite of biostatisticians and epidemiologists, often to the dismay of their students. Table 2 is a classic example of a 2 × 2 table that epidemiologists and statisticians would use.

This is a sight that will send shudders down the backs of everyone who has ever taken a biostatistics course, and understandably so. The presentation of data in this way and the thinking that goes along with it is not intuitive. That is why when students see a table like this, they have to think about it for a while, they have to check any calculations they do with the table extensively, and they have to make sure they understand what the table is getting at before making any assumptions about the relationship between the exposure and the disease. So, what does this table mean, and how would we use it?

The table represents a complete picture of the relationship between exposure to cigarettes and the development of lung cancer. By complete, we do not mean that it captures the entire population, because obviously these numbers indicate only a sample of the population and capturing the entire population is always close to impossible. We use this term simply to indicate that the 2 × 2 table allows us to think about all of the possibilities associated with the exposure. The table allows us to think not only about the people who smoke cigarettes and develop cancer, but also about those who smoke cigarettes and do not develop cancer. It also allows us to think not only about those who do not smoke and do not develop cancer, but also about those who do not smoke and do develop cancer. The attentional bias effectively means that our inclination in these types of situations is to focus only on the first number, 95, in the upper left-hand corner of the 2 × 2 table, and not on the other numbers.19 Inventive studies with nurses and patient records have even confirmed this way of thinking among healthcare professionals.20 This means that we are always looking for a causal association, such as “Cigarette smoking causes lung cancer,” without first examining the entire picture. Now, of course, we do know that cigarette smoking causes cancer. But what if instead of smoking and cancer, we had put “vaccines” and “autism”? If you looked only at the upper left-hand corner, you would of course see some number of people who had a vaccine and had autism. But looking at the whole table, you should see why it would be a huge mistake to conclude from the upper left-hand corner alone that vaccines cause, or are even associated with, autism. This number needs to be compared with the number of children who are not vaccinated who have autism and with the number of children who are vaccinated and do not have autism.
On its own, the number of children with both vaccinations and autism is meaningless. But the attentional bias is a cognitive heuristic we use all the time to ignore the other parts of the 2 × 2 table.
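To see why the upper left-hand corner is meaningless on its own, it helps to work through the comparison the full table makes possible. The sketch below is an illustration only. Apart from the 95 in the upper left-hand corner, every count is hypothetical and invented for the example, as are the class and method names. It compares two tables that share the same upper left-hand number but tell opposite stories.

```python
from dataclasses import dataclass


@dataclass
class TwoByTwo:
    exposed_disease: int        # upper left: exposed and developed the disease
    exposed_no_disease: int     # upper right: exposed, no disease
    unexposed_disease: int      # lower left: not exposed, developed the disease
    unexposed_no_disease: int   # lower right: not exposed, no disease

    def risk_exposed(self):
        return self.exposed_disease / (self.exposed_disease + self.exposed_no_disease)

    def risk_unexposed(self):
        return self.unexposed_disease / (self.unexposed_disease + self.unexposed_no_disease)

    def risk_ratio(self):
        # How many times more common the disease is among the exposed.
        return self.risk_exposed() / self.risk_unexposed()


if __name__ == "__main__":
    # Hypothetical counts: only the 95 matches the number discussed in the text.
    strong_link = TwoByTwo(95, 905, 5, 995)
    no_link = TwoByTwo(95, 905, 95, 905)
    for name, table in [("strong link", strong_link), ("no link", no_link)]:
        print(name, "| risk if exposed:", table.risk_exposed(),
              "| risk if unexposed:", table.risk_unexposed(),
              "| risk ratio:", round(table.risk_ratio(), 2))
```

Both tables start with 95 exposed people who developed the disease. In the first, the disease is many times more common among the exposed. In the second, it is exactly as common among the unexposed, so there is no association at all. Only the other three boxes can tell the two situations apart.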

So the next time someone asserts an association between a potential exposure and disease, see if you can draw out the corresponding 2 × 2 table and then ask them to fill in the blanks. Using your 2 × 2 table, you can ask questions like “You say you found that 150 people who lived near a nuclear power plant developed cancer. How many people developed cancer in a similar area without a nuclear power plant?” You would be surprised at how often the rest of the table is neglected. Now you have learned how to help fill it in.

So What Should We Do About It?

Now that we have delineated all of the ways in which our minds try to fill in the ignorance gap by promoting causes for effects that may not be truly related, we have to figure out some strategies for avoiding these kinds of mental traps. We already outlined a few, including constructing a 2 × 2 table and asking yourself, “What is in the other boxes in this scenario?” We have hopefully also made you more aware of the ways in which our minds immediately jump to causes when we are sitting in a traffic jam or have a headache and a stomachache at the same time (“There must be a common cause,” our minds immediately trick us into thinking). In addition, we have talked a bit about independent events and demonstrated that the probability of one independent event happening has nothing to do with the probability of the occurrence of another independent event.

In addition to these strategies of avoiding the trap of the causal ignorance gap, we would like to include some recommendations for ways in which we can be more aware of how we think about causes and strategies to help prevent us from jumping to conclusions. One suggestion involves teaching children about cause and effect at a young age. This would include not only teaching them what causes and effects are, but also, at a more advanced grade level, training them to be skeptical of easy causal answers and teaching them more about the history of various definitions of causality. Some school curricula, most notably the Common Core, already embrace this topic. Figure 3 shows an example of a cause-and-effect worksheet for second graders from the Common Core.

These kinds of worksheets help young children think logically about cause and effect and can be very helpful in embedding the concept at a young age. Curriculum materials for older children could include assignments that involve finding an existing causal claim, perhaps on the Internet, and writing a short paper about whether or not the claim is defensible, how sound the evidence is for it, how logical it is, and what some other causes could be. Extending some of the specialized critical thinking skills that students of epidemiology gain at a graduate level could be extremely valuable for the general population. These kinds of skills include the ability to assess study designs for bias, the ability to think through the validity of causal claims, and the ability to identify competing biases and alternative explanations for phenomena that seem causally related. Many of the curriculum materials on these subjects for high school students could simply be extracted from epidemiology syllabi and simplified for a broader view of thinking critically about causality. These sorts of exercises will benefit a student no matter what field he or she ultimately chooses, because learning how to think in a systematic and logical manner about the phenomena we see in the world around us and learning how to articulate an argument in support of or in opposition to existing claims about our environments are highly transferable skills. They simply represent the core of critical thinking abilities that are in demand in every sector of the workplace and are essential to success in higher education and beyond.

FIGURE 3 Cause and effect worksheet.

Source: From http://www.superteacherworksheets.com/causeeffectfactopinion/causeeffect2_WBMBD.pdf

Of course, if you are conversing with someone who is completely convinced by an unscientific claim, such as that vaccines cause autism or that guns are good protection, you are unlikely to have much of an effect on him or her. However, a lot of the advice in this book is geared toward getting the very large number of people who are “on the fence” to accept the proven scientific evidence rather than the emotional arguments of the Jenny McCarthys of the world. If you find yourself speaking with a young parent who is afraid of getting his or her children vaccinated because of what he or she has heard about the potential “dangers” of vaccines, you can actually use a variety of techniques outlined in this chapter and in other places in the book to help guide your acquaintance to the evidence. We will discuss motivational interviewing as a technique that helps people face and resolve ambivalence at greater length in chapter 5.

Motivate the Inner Scientist

In the case of a conversation with a young parent who is reluctant to vaccinate his or her child, you can try motivational interviewing techniques yourself as part of a normal dialogue. In order for these techniques to be successful, you need to express empathy, support the person’s self-efficacy (that is, her belief that she has power over the events in her life and the decisions she makes and the capacity to change her opinion about something), and tolerate the person’s resistance to what you are saying. You need to ask open-ended questions and to be able to draw out your conversation companion. For example, if this person tells you that she thinks vaccines are dangerous and she is thinking of not getting her children vaccinated, the best first response is not “That is not true. There is no evidence that vaccines are dangerous,” but rather a gentle prompt to explore her feelings further, something to the effect of “Tell me more” or “How did you come to feel nervous about vaccines?” You can then guide the person through a slowed-down, articulated version of her thought process in getting to the conclusion “Vaccines are dangerous,” and along the way you can get her to express her main desire, to keep her children healthy. This technique can help people begin to feel more comfortable with their ambivalence, slow down and think more carefully and calmly about an important decision such as whether or not to get their children vaccinated, and guide them toward more intelligent questions about the issue and a better understanding of their own fears and concerns. Of course, this technique will not always work and it may take more than one encounter with a person to really have an impact, but it is always better to err on the side of empathy and reflective listening rather than showering the person with all the facts that oppose her point of view.
Most of us already know that the latter strategy pretty much never works.

Another tactic you can take is simply to help the person see the whole 2 × 2 table rather than just the upper left-hand corner. Again, you have to be gentle and not simply throw facts at the person to prove her “wrong.” Instead, you should show interest in her opinion and then simply ask her to elaborate: “That’s interesting. Do you know how many children who are vaccinated are diagnosed with autism within the year?” And then you can start asking the difficult questions: “I wonder how many children who are not vaccinated get autism.” By asking some of these questions, you may trigger a wider awareness in the person and help guide her toward a fuller understanding of how causality is determined in science, especially in epidemiology. However, if you simply tell her that she does not understand the true nature of causality and that she needs to study 2 × 2 tables, you will do nothing but alienate her and make her even less likely to examine her ambivalence and the foundations of her ideas.

Making causal inferences is absolutely necessary in negotiating our daily lives. If I am driving and start to doze off at the wheel, I am going to assume that insomnia the previous night is the cause of the problem and pull over and take a nap lest I cause an accident. We are required to do this all the time, and sometimes we even have fun with it: many of us love to be entertained by detective shows in which “Who did it?” is really asking the question of causality. But when making critical decisions about health and healthcare for ourselves and our families, we need to be extremely careful and understand how naturally prone we are to leap to conclusions. Scientists and doctors fall into the trap as well. Since we are all guilty of misplaced causal theories, it is critical that we help each other fill in the rest of those boxes.