Superintelligence: Paths, Dangers, Strategies - Nick Bostrom (2014)

Chapter 5. Decisive strategic advantage

A question distinct from, but related to, the question of kinetics is whether there will be one superintelligent power or many. Might an intelligence explosion propel one project so far ahead of all others as to make it able to dictate the future? Or will progress be more uniform, unfurling across a wide front, with many projects participating but none securing an overwhelming and permanent lead?

The preceding chapter analyzed one key parameter in determining the size of the gap that might plausibly open up between a leading power and its nearest competitors, namely the speed of the transition from human to strongly superhuman intelligence. This suggests a first-cut analysis. If the takeoff is fast (completed over the course of hours, days, or weeks) then it is unlikely that two independent projects would be taking off concurrently: almost certainly, the first project would have completed its takeoff before any other project would have started its own. If the takeoff is slow (stretching over many years or decades) then there could plausibly be multiple projects undergoing takeoffs concurrently, so that although the projects would by the end of the transition have gained enormously in capability, there would be no time at which any project was far enough ahead of the others to give it an overwhelming lead. A takeoff of moderate speed is poised in between, with either condition a possibility: there might or might not be more than one project undergoing the takeoff at the same time.1

Will one machine intelligence project get so far ahead of the competition that it gets a decisive strategic advantage—that is, a level of technological and other advantages sufficient to enable it to achieve complete world domination? If a project did obtain a decisive strategic advantage, would it use it to suppress competitors and form a singleton (a world order in which there is at the global level a single decision-making agency)? And if there is a winning project, how “large” would it be—not in terms of physical size or budget but in terms of how many people’s desires would be controlling its design? We will consider these questions in turn.

Will the frontrunner get a decisive strategic advantage?

One factor influencing the width of the gap between frontrunner and followers is the rate of diffusion of whatever it is that gives the leader a competitive advantage. A frontrunner might find it difficult to gain and maintain a large lead if followers can easily copy the frontrunner’s ideas and innovations. Imitation creates a headwind that disadvantages the leader and benefits laggards, especially if intellectual property is weakly protected. A frontrunner might also be especially vulnerable to expropriation, taxation, or being broken up under anti-monopoly regulation.

It would be a mistake, however, to assume that this headwind must increase monotonically with the gap between frontrunner and followers. Just as a racing cyclist who falls too far behind the competition is no longer shielded from the wind by the cyclists ahead, so a technology follower who lags sufficiently behind the cutting edge might find it hard to assimilate the advances being made at the frontier.2 The gap in understanding and capability might have grown too large. The leader might have migrated to a more advanced technology platform, making subsequent innovations untransferable to the primitive platforms used by laggards. A sufficiently pre-eminent leader might have the ability to stem information leakage from its research programs and its sensitive installations, or to sabotage its competitors’ efforts to develop their own advanced capabilities.

If the frontrunner is an AI system, it could have attributes that make it easier for it to expand its capabilities while reducing the rate of diffusion. In human-run organizations, economies of scale are counteracted by bureaucratic inefficiencies and agency problems, including difficulties in keeping trade secrets.3 These problems would presumably limit the growth of a machine intelligence project so long as it is operated by humans. An AI system, however, might avoid some of these scale diseconomies, since the AI’s modules (in contrast to human workers) need not have individual preferences that diverge from those of the system as a whole. Thus, the AI system could avoid a sizeable chunk of the inefficiencies arising from agency problems in human enterprises. The same advantage—having perfectly loyal parts—would also make it easier for an AI system to pursue long-range clandestine goals. An AI would have no disgruntled employees ready to be poached by competitors or bribed into becoming informants.4

We can get a sense of the distribution of plausible gaps in development times by looking at some historical examples (see Box 5). It appears that lags in the range of a few months to a few years are typical of strategically significant technology projects.

Box 5 Technology races: some historical examples

Over long historical timescales, there has been an increase in the rate at which knowledge and technology diffuse around the globe. As a result, the temporal gaps between technology leaders and nearest followers have narrowed.

China managed to maintain a monopoly on silk production for over two thousand years. Archeological finds suggest that production might have begun around 3000 BC, or even earlier.5 Sericulture was a closely held secret. Revealing the techniques was punishable by death, as was exporting silkworms or their eggs outside China. The Romans, despite the high price commanded by imported silk cloth in their empire, never learnt the art of silk manufacture. Not until around AD 300 did a Japanese expedition manage to capture some silkworm eggs along with four young Chinese girls, who were forced to divulge the art to their abductors.6 Byzantium joined the club of producers in AD 522. The story of porcelain-making also features long lags. The craft was practiced in China during the Tang Dynasty around AD 600 (and might have been in use as early as AD 200), but was mastered by Europeans only in the eighteenth century.7 Wheeled vehicles appeared in several sites across Europe and Mesopotamia around 3500 BC but reached the Americas only in post-Columbian times.8 On a grander scale, the human species took tens of thousands of years to spread across most of the globe, the Agricultural Revolution thousands of years, the Industrial Revolution only hundreds of years, and an Information Revolution could be said to have spread globally over the course of decades—though, of course, these transitions are not necessarily of equal profundity. (The Dance Dance Revolution video game spread from Japan to Europe and North America in just one year!)

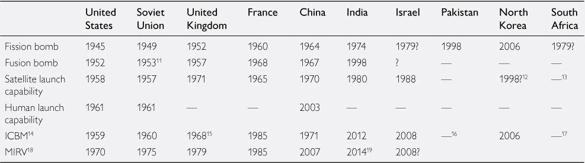

Technological competition has been quite extensively studied, particularly in the contexts of patent races and arms races.9 It is beyond the scope of our investigation to review this literature here. However, it is instructive to look at some examples of strategically significant technology races in the twentieth century (see Table 7).

With regard to these six technologies, which were regarded as strategically important by the rivaling superpowers because of their military or symbolic significance, the gaps between leader and nearest laggard were (very approximately) 49 months, 36 months, 4 months, 1 month, 4 months, and 60 months, respectively—longer than the duration of a fast takeoff and shorter than the duration of a slow takeoff.10 In many cases, the laggard’s project benefitted from espionage and publicly available information. The mere demonstration of the feasibility of an invention can also encourage others to develop it independently; and fear of falling behind can spur the efforts to catch up.

Perhaps closer to the case of AI are mathematical inventions that do not require the development of new physical infrastructure. Often these are published in the academic literature and can thus be regarded as universally available; but in some cases, when the discovery appears to offer a strategic advantage, publication is delayed. For example, two of the most important ideas in public-key cryptography are the Diffie-Hellman key exchange protocol and the RSA encryption scheme. These were discovered by the academic community in 1976 and 1978, respectively, but it was later confirmed that they had been known to cryptographers at the UK’s communications security group since the early 1970s.20 Large software projects might offer a closer analogy with AI projects, but it is harder to give crisp examples of typical lags because software is usually rolled out in incremental installments and the functionalities of competing systems are often not directly comparable.

Table 7 Some strategically significant technology races

It is possible that globalization and increased surveillance will reduce typical lags between competing technology projects. Yet there is likely to be a lower bound on how short the average lag could become (in the absence of deliberate coordination).21 Even absent dynamics that lead to snowball effects, some projects will happen to end up with better research staff, leadership, and infrastructure, or will just stumble upon better ideas. If two projects pursue alternative approaches, one of which turns out to work better, it may take the rival project many months to switch to the superior approach even if it is able to closely monitor what the forerunner is doing.

Combining these observations with our earlier discussion of the speed of the takeoff, we can conclude that it is highly unlikely that two projects would be close enough to undergo a fast takeoff concurrently; for a medium takeoff, it could easily go either way; and for a slow takeoff, it is highly likely that several projects would undergo the process in parallel. But the analysis needs a further step. The key question is not how many projects undergo a takeoff in tandem, but how many projects emerge on the yonder side sufficiently tightly clustered in capability that none of them has a decisive strategic advantage. If the takeoff process is relatively slow to begin and then gets faster, the distance between competing projects would tend to grow. To return to our bicycle metaphor, the situation would be analogous to a pair of cyclists making their way up a steep hill, one trailing some distance behind the other—the gap between them then expanding as the frontrunner reaches the peak and starts accelerating down the other side.
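The cyclist image can be made concrete with a toy model. The sketch below is illustrative only: it assumes, hypothetically, that both projects follow the same exponential capability curve, with the follower trailing by a fixed time lag. The names `capability`, `capability_gap`, `rate`, and `LAG`, and their values, are made up for the example and are not quantities from the text. Even though the lag in time never changes, the absolute gap in capability keeps widening as the curve steepens.

```python
import math

def capability(t: float, rate: float = 0.5) -> float:
    # Hypothetical capability curve: slow to begin, then ever faster (exponential).
    return math.exp(rate * t)

def capability_gap(t: float, lag: float, rate: float = 0.5) -> float:
    # Leader is at time t on the curve; follower is at t - lag on the same curve.
    return capability(t, rate) - capability(t - lag, rate)

LAG = 2.0  # fixed time lag between leader and follower (arbitrary units)
times = [2.0, 4.0, 6.0, 8.0]
gaps = [capability_gap(t, LAG) for t in times]

for t, g in zip(times, gaps):
    print(f"t={t:.0f}  gap in capability={g:.2f}")
```

Under this particular curve the ratio between the two projects' capabilities stays fixed at `exp(rate * LAG)`; only the absolute gap widens. A curve whose growth rate itself keeps rising, such as one driven by a strong positive feedback loop after the crossover point, would widen the ratio as well.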

Consider the following medium takeoff scenario. Suppose it takes a project one year to increase its AI’s capability from the human baseline to a strong superintelligence, and that one project enters this takeoff phase with a six-month lead over the next most advanced project. The two projects will be undergoing a takeoff concurrently. It might seem, then, that neither project gets a decisive strategic advantage. But that need not be so. Suppose it takes nine months to advance from the human baseline to the crossover point, and another three months from there to strong superintelligence. The frontrunner then attains strong superintelligence three months before the following project even reaches the crossover point. This would give the leading project a decisive strategic advantage and the opportunity to parlay its lead into permanent control by disabling the competing projects and establishing a singleton. (Note that the concept of a singleton is an abstract one: a singleton could be a democracy, a tyranny, a single dominant AI, a strong set of global norms that include effective provisions for their own enforcement, or even an alien overlord. Its defining characteristic is simply that it is some form of agency that can solve all major global coordination problems. It may, but need not, resemble any familiar form of human governance.22)
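The arithmetic of this scenario can be laid out as a timeline. Below is a minimal Python sketch using the scenario's figures (nine months from human baseline to crossover, three more to strong superintelligence, a six-month lead). The `Project` class and `milestones` helper are hypothetical names introduced only for illustration, and time is measured in months from the moment the frontrunner reaches the human baseline.

```python
from dataclasses import dataclass

# Durations taken from the scenario in the text (in months).
MONTHS_TO_CROSSOVER = 9.0         # human baseline -> crossover point
MONTHS_CROSSOVER_TO_STRONG = 3.0  # crossover point -> strong superintelligence

@dataclass
class Project:
    name: str
    baseline_month: float  # month at which the project reaches the human baseline

def milestones(p: Project) -> dict:
    # Compute when the project passes each stage of its takeoff.
    crossover = p.baseline_month + MONTHS_TO_CROSSOVER
    strong = crossover + MONTHS_CROSSOVER_TO_STRONG
    return {"baseline": p.baseline_month, "crossover": crossover, "strong": strong}

lead = milestones(Project("frontrunner", baseline_month=0.0))
follow = milestones(Project("follower", baseline_month=6.0))  # six-month lag

# The takeoffs overlap in time: the follower starts before the leader finishes.
takeoffs_overlap = follow["baseline"] < lead["strong"]

# Yet the leader is strongly superintelligent before the follower even reaches crossover.
margin = follow["crossover"] - lead["strong"]

print(f"concurrent takeoffs: {takeoffs_overlap}")
print(f"leader reaches strong superintelligence {margin:.0f} months before the follower's crossover")
```

Changing the split between the two phases while keeping the one-year total shows how much hinges on where the crossover point falls: if the crossover came after only three months, the follower would reach it at month nine, three months before the leader attained strong superintelligence.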

Since there is an especially strong prospect of explosive growth just after the crossover point, when the strong positive feedback loop of optimization power kicks in, a scenario of this kind is a serious possibility, and it increases the chances that the leading project will attain a decisive strategic advantage even if the takeoff is not fast.

How large will the successful project be?

Some paths to superintelligence require great resources and are therefore likely to be the preserve of large well-funded projects. Whole brain emulation, for instance, requires many different kinds of expertise and lots of equipment. Biological intelligence enhancements and brain-computer interfaces would also have a large scale factor: while a small biotech firm might invent one or two drugs, achieving superintelligence along one of these paths (if doable at all) would likely require many inventions and many tests, and therefore the backing of an industrial sector or a well-funded national program. Achieving collective superintelligence by making organizations and networks more efficient requires even more extensive input, involving much of the world economy.

The AI path is more difficult to assess. Perhaps it would require a very large research program; perhaps it could be done by a small group. A lone hacker scenario cannot be excluded either. Building a seed AI might require insights and algorithms developed over many decades by the scientific community around the world. But it is possible that the last critical breakthrough idea might come from a single individual or a small group that succeeds in putting everything together. This scenario is less realistic for some AI architectures than others. A system that has a large number of parts that need to be tweaked and tuned to work effectively together, and then painstakingly loaded with custom-made cognitive content, is likely to require a larger project. But if a seed AI could be instantiated as a simple system, one whose construction depends only on getting a few basic principles right, then the feat might be within the reach of a small team or an individual. The likelihood of the final breakthrough being made by a small project increases if most previous progress in the field has been published in the open literature or made available as open source software.

We must distinguish the question of how big the project will be that directly engineers the system from the question of how big the group will be that controls whether, how, and when the system is created. The atomic bomb was created primarily by a group of scientists and engineers. (The Manhattan Project employed about 130,000 people at its peak, the vast majority of whom were construction workers or building operators.23) These technical experts, however, were controlled by the US military, which was directed by the US government, which was ultimately accountable to the American electorate, which at the time constituted about one-tenth of the adult world population.24

Monitoring

Given the extreme security implications of superintelligence, governments would likely seek to nationalize any project on their territory that they thought close to achieving a takeoff. A powerful state might also attempt to acquire projects located in other countries through espionage, theft, kidnapping, bribery, threats, military conquest, or any other available means. A powerful state that cannot acquire a foreign project might instead destroy it, especially if the host country lacks an effective deterrent. If global governance structures are strong by the time a breakthrough begins to look imminent, it is possible that promising projects would be placed under international control.

An important question, therefore, is whether national or international authorities will see an intelligence explosion coming. At present, intelligence agencies do not appear to be looking very hard for promising AI projects or other forms of potentially explosive intelligence amplification.25 If they are indeed not paying (much) attention, this is presumably due to the widely shared perception that there is no prospect whatever of imminent superintelligence. If and when it becomes a common belief among prestigious scientists that there is a substantial chance that superintelligence is just around the corner, the major intelligence agencies of the world would probably start to monitor groups and individuals who seem to be engaged in relevant research. Any project that began to show sufficient progress could then be promptly nationalized. If political elites were persuaded by the seriousness of the risk, civilian efforts in sensitive areas might be regulated or outlawed.

How difficult would such monitoring be? The task is easier if the goal is only to keep track of the leading project. In that case, surveillance focusing on the several best-resourced projects may be sufficient. If the goal is instead to prevent any work from taking place (at least outside of specially authorized institutions) then surveillance would have to be more comprehensive, since many small projects and individuals are in a position to make at least some progress.

It would be easier to monitor projects that require significant amounts of physical capital, as would be the case with a whole brain emulation project. Artificial intelligence research, by contrast, requires only a personal computer, and would therefore be more difficult to monitor. Some of the theoretical work could be done with pen and paper. Even so, it would not be too difficult to identify most capable individuals with a serious long-standing interest in artificial general intelligence research. Such individuals usually leave visible trails. They may have published academic papers, presented at conferences, posted on Internet forums, or earned degrees from leading computer science departments. They may also have had communications with other AI researchers, allowing them to be identified by mapping the social graph.

Projects designed from the outset to be secret could be more difficult to detect. An ordinary software development project could serve as a front.26 Only careful analysis of the code being produced would reveal the true nature of what the project was trying to accomplish. Such analysis would require a lot of (highly skilled) manpower, whence only a small number of suspect projects could be scrutinized at this level. The task would become much easier if effective lie detection technology had been developed and could be routinely used in this kind of surveillance.27

Another reason states might fail to catch precursor developments is the inherent difficulty of forecasting some types of breakthrough. This is more relevant to AI research than to whole brain emulation development, since for the latter the key breakthrough is more likely to be preceded by a clear gradient of steady advances.

It is also possible that intelligence agencies and other government bureaucracies have a certain clumsiness or rigidity that might prevent them from understanding the significance of some developments that might be clear to some outside groups. Barriers to official understanding of a potential intelligence explosion might be especially steep. It is conceivable, for example, that the topic will become inflamed with religious or political controversies, rendering it taboo for officials in some countries. The topic might become associated with some discredited figure or with charlatanry and hype in general, hence shunned by respected scientists and other establishment figures. (As we saw in Chapter 1, something like this has already happened twice: recall the two “AI winters.”) Industry groups might lobby to prevent aspersions being cast on profitable business areas; academic communities might close ranks to marginalize those who voice concerns about long-term consequences of the science that is being done.28

Consequently, a total intelligence failure cannot be ruled out. Such a failure is especially likely if breakthroughs should occur in the nearer future, before the issue has risen to public prominence. And even if intelligence agencies get it right, political leaders might not listen or act on the advice. Getting the Manhattan Project started took an extraordinary effort by several visionary physicists, including especially Mark Oliphant and Leó Szilárd: the latter persuaded Eugene Wigner to persuade Albert Einstein to put his name on a letter to persuade President Franklin D. Roosevelt to look into the matter. Even after the project reached its full scale, Roosevelt remained skeptical of its workability and significance, as did his successor Harry Truman.

For better or worse, it would probably be harder for a small group of activists to affect the outcome of an intelligence explosion if big players, such as states, are taking active part. Opportunities for private individuals to reduce the overall amount of existential risk from a potential intelligence explosion are therefore greatest in scenarios in which big players remain relatively oblivious to the issue, or in which the early efforts of activists make a major difference to whether, when, which, or with what attitude big players enter the game. Activists seeking maximum expected impact may therefore wish to focus most of their planning on such high-leverage scenarios, even if they believe that scenarios in which big players end up calling all the shots are more probable.

International collaboration

International coordination is more likely if global governance structures generally get stronger. Coordination might also be more likely if the significance of an intelligence explosion is widely appreciated ahead of time and if effective monitoring of all serious projects is feasible. Even if monitoring is infeasible, however, international cooperation would still be possible. Many countries could band together to support a joint project. If such a joint project were sufficiently well resourced, it could have a good chance of being the first to reach the goal, especially if any rival project had to be small and secretive to elude detection.

There are precedents of large-scale successful multinational scientific collaborations, such as the International Space Station, the Human Genome Project, and the Large Hadron Collider.29 However, the major motivation for collaboration in those cases was cost-sharing. (In the case of the International Space Station, fostering a collaborative spirit between Russia and the United States was itself an important goal.30) Achieving similar collaboration on a project that has enormous security implications would be more difficult. A country that believed it could achieve a breakthrough unilaterally might be tempted to go it alone rather than subordinate its efforts to a joint project. A country might also refrain from joining an international collaboration from fear that other participants might siphon off collaboratively generated insights and use them to accelerate a covert national project.

An international project would thus need to overcome major security challenges, and a fair amount of trust would probably be needed to get it started, trust that may take time to develop. Consider that even after the thaw in relations between the United States and the Soviet Union following Gorbachev’s ascent to power, arms reduction efforts—which could be greatly in the interests of both superpowers—had a fitful beginning. Gorbachev was seeking steep reductions in nuclear arms but negotiations stalled on the issue of Reagan’s Strategic Defense Initiative (“Star Wars”), which the Kremlin strenuously opposed. At the Reykjavík Summit meeting in 1986, Reagan proposed that the United States would share with the Soviet Union the technology that would be developed under the Strategic Defense Initiative, so that both countries could enjoy protection against accidental launches and against smaller nations that might develop nuclear weapons. Yet Gorbachev was not persuaded by this apparent win-win proposition. He viewed the gambit as a ruse, refusing to credit the notion that the Americans would share the fruits of their most advanced military research at a time when they were not even willing to share with the Soviets their technology for milking cows.31 Regardless of whether Reagan was in fact sincere in his offer of superpower collaboration, mistrust made the proposal a non-starter.

Collaboration is easier to achieve between allies, but even there it is not automatic. When the Soviet Union and the United States were allied against Germany during World War II, the United States concealed its atomic bomb project from the Soviet Union. The United States did collaborate on the Manhattan Project with Britain and Canada.32 Similarly, the United Kingdom concealed its success in breaking the German Enigma code from the Soviet Union, but shared it—albeit with some difficulty—with the United States.33 This suggests that in order to achieve international collaboration on some technology that is of pivotal importance for national security, it might be necessary to have built beforehand a close and trusting relationship.

We will return in Chapter 14 to the desirability and feasibility of international collaboration in the development of intelligence amplification technologies.

From decisive strategic advantage to singleton

Would a project that obtained a decisive strategic advantage choose to use it to form a singleton?

Consider a vaguely analogous historical situation. The United States developed nuclear weapons in 1945. It was the sole nuclear power until the Soviet Union developed the atom bomb in 1949. During this interval—and for some time thereafter—the United States may have had, or been in a position to achieve, a decisive military advantage.

The United States could then, theoretically, have used its nuclear monopoly to create a singleton. One way in which it could have done so would have been by embarking on an all-out effort to build up its nuclear arsenal and then threatening (and if necessary, carrying out) a nuclear first strike to destroy the industrial capacity of any incipient nuclear program in the USSR and any other country tempted to develop a nuclear capability.

A more benign course of action, which might also have had a chance of working, would have been to use its nuclear arsenal as a bargaining chip to negotiate a strong international government—a veto-less United Nations with a nuclear monopoly and a mandate to take all necessary actions to prevent any country from developing its own nuclear weapons.

Both of these approaches were proposed at the time. The hardline approach of launching or threatening a first strike was advocated by some prominent intellectuals such as Bertrand Russell (who had long been active in anti-war movements and who would later spend decades campaigning against nuclear weapons) and John von Neumann (co-creator of game theory and one of the architects of US nuclear strategy).34 Perhaps it is a sign of civilizational progress that the very idea of threatening a nuclear first strike today seems borderline silly or morally obscene.

A version of the benign approach was tried in 1946 by the United States in the form of the Baruch plan. The proposal involved the USA giving up its temporary nuclear monopoly. Uranium and thorium mining and nuclear technology would be placed under the control of an international agency operating under the auspices of the United Nations. The proposal called for the permanent members of the Security Council to give up their vetoes in matters related to nuclear weapons in order to prevent any great power found to be in breach of the accord from vetoing the imposition of remedies.35 Stalin, seeing that the Soviet Union and its allies could be easily outvoted in both the Security Council and the General Assembly, rejected the proposal. A frosty atmosphere of mutual suspicion descended on the relations between the former wartime allies, mistrust that soon solidified into the Cold War. As had been widely predicted, a costly and extremely dangerous nuclear arms race followed.

Many factors might dissuade a human organization with a decisive strategic advantage from creating a singleton. These include non-aggregative or bounded utility functions, non-maximizing decision rules, confusion and uncertainty, coordination problems, and various costs associated with a takeover. But what if it were not a human organization but a superintelligent artificial agent that came into possession of a decisive strategic advantage? Would the aforementioned factors be equally effective at inhibiting an AI from attempting to seize power? Let us briefly run through the list of factors and consider how they might apply in this case.

Human individuals and human organizations typically have preferences over resources that are not well represented by an “unbounded aggregative utility function.” A human will typically not wager all her capital for a fifty-fifty chance of doubling it. A state will typically not risk losing all its territory for a ten percent chance of a tenfold expansion. For individuals and governments, there are diminishing returns to most resources. The same need not hold for AIs. (We will return to the problem of AI motivation in subsequent chapters.) An AI might therefore be more likely to pursue a risky course of action that has some chance of giving it control of the world.
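The contrast between bounded and unbounded preferences can be checked with a small expected-utility calculation on the two wagers just mentioned. The sketch below is in Python and assumes a linear utility function as the stand-in for an unbounded aggregative one and a square-root utility as a hypothetical stand-in for diminishing returns. Neither function comes from the text, and the starting amount of 100 is arbitrary.

```python
import math

def expected_utility(lottery, utility):
    # lottery: list of (probability, resulting resources) pairs
    return sum(p * utility(x) for p, x in lottery)

def linear(x):
    # Unbounded, aggregative: each extra unit of resources counts equally.
    return x

def concave(x):
    # Illustrative diminishing returns to resources (an assumption, not from the text).
    return math.sqrt(x)

START = 100.0
status_quo = [(1.0, START)]
double_or_nothing = [(0.5, 2 * START), (0.5, 0.0)]    # the individual's wager
tenfold_or_nothing = [(0.1, 10 * START), (0.9, 0.0)]  # the state's wager

results = {}
for name, u in [("linear", linear), ("sqrt", concave)]:
    keep = expected_utility(status_quo, u)
    # Positive values favor the wager, negative values favor the status quo.
    results[name] = {
        "double_or_nothing": expected_utility(double_or_nothing, u) - keep,
        "tenfold_or_nothing": expected_utility(tenfold_or_nothing, u) - keep,
    }
    print(name, results[name])
```

Under linear utility both wagers are exactly fair (a difference of zero), so any slight improvement in the odds would tip such an agent toward gambling; the square-root agent clearly rejects both.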

Humans and human-run organizations may also operate with decision processes that do not seek to maximize expected utility. For example, they may allow for fundamental risk aversion, or “satisficing” decision rules that focus on meeting adequacy thresholds, or “deontological” side-constraints that proscribe certain kinds of action regardless of how desirable their consequences might be. Human decision makers often seem to be acting out an identity or a social role rather than seeking to maximize the achievement of some particular objective. Again, this need not apply to artificial agents.

Bounded utility functions, risk aversion, and non-maximizing decision rules may combine synergistically with strategic confusion and uncertainty. Revolutions, even when they succeed in overthrowing the existing order, often fail to produce the outcome that their instigators had promised. This tends to stay the hand of a human agent if the contemplated action is irreversible, norm-breaking, and lacking precedent. A superintelligence might perceive the situation more clearly and therefore face less strategic confusion and uncertainty about the outcome should it attempt to use its apparent decisive strategic advantage to consolidate its dominant position.

Another major factor that can inhibit groups from exploiting a potentially decisive strategic advantage is the problem of internal coordination. Members of a conspiracy that is in a position to seize power must worry not only about being infiltrated from the outside, but also about being overthrown by some smaller coalition of insiders. If a group consists of a hundred people, and a majority of sixty can take power and disenfranchise the non-conspirators, what is then to stop a thirty-five-strong subset of these sixty from disenfranchising the other twenty-five? And then maybe a subset of twenty disenfranchising the other fifteen? Each of the original hundred might have good reason to uphold certain established norms to prevent the general unraveling that could result from any attempt to change the social contract by means of a naked power grab. This problem of internal coordination would not apply to an AI system that constitutes a single unified agent.36
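The regress in the hundred-person example can be traced step by step. Below is a minimal Python sketch, under the hypothetical assumption that each new ruling coalition needs only a strict majority of the previous one. `unravel` and `minimal_majority_chain` are illustrative names, not anything from the text. The first function checks the chain 100, 60, 35, 20 given above; the second shows that, if each round is won by the smallest possible majority, majority rule by itself does not halt the shrinking until only two members remain.

```python
def unravel(chain):
    # chain: successive coalition sizes, e.g. [100, 60, 35, 20].
    # Returns (old size, new size, number disenfranchised) for each step.
    steps = []
    for prev, nxt in zip(chain, chain[1:]):
        # Each new coalition must be a strict majority and a proper subset of the old one.
        if not (prev / 2 < nxt < prev):
            raise ValueError(f"{nxt} is not a strict majority subset of {prev}")
        steps.append((prev, nxt, prev - nxt))
    return steps

def minimal_majority_chain(n):
    # Worst case: each round, the smallest strict majority ousts everyone else.
    chain = [n]
    while True:
        nxt = chain[-1] // 2 + 1
        if nxt >= chain[-1]:  # no smaller majority exists; the regress halts
            break
        chain.append(nxt)
    return chain

for old, new, ousted in unravel([100, 60, 35, 20]):
    print(f"{new} of {old} take power, disenfranchising {ousted}")

print(minimal_majority_chain(100))
```

Each step removes only a minority of the previous coalition, yet after seven such steps the original hundred have become two. This is the unraveling that established norms help guard against.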

Finally, there is the issue of cost. Even if the United States could have used its nuclear monopoly to establish a singleton, it might not have been able to do so without incurring substantial costs. In the case of a negotiated agreement to place nuclear weapons under the control of a reformed and strengthened United Nations, these costs might have been relatively small; but the costs—moral, economic, political, and human—of actually attempting world conquest through the waging of nuclear war would have been almost unthinkably large, even during the period of nuclear monopoly. With sufficient technological superiority, however, these costs would be far smaller. Consider, for example, a scenario in which one nation had such a vast technological lead that it could safely disarm all other nations at the press of a button, without anybody dying or being injured, and with almost no damage to infrastructure or to the environment. With such almost magical technological superiority, a first strike would be a lot more tempting. Or consider an even greater level of technological superiority which might enable the frontrunner to cause other nations to voluntarily lay down their arms, not by threatening them with destruction but simply by persuading a great majority of their populations by means of an extremely effectively designed advertising and propaganda campaign extolling the virtues of global unity. If this were done with the intention to benefit everybody, for instance by replacing national rivalries and arms races with a fair, representative, and effective world government, it is not clear that there would be even a cogent moral objection to the leveraging of a temporary strategic advantage into a permanent singleton.

Various considerations thus point to an increased likelihood that a future power with superintelligence that obtained a sufficiently large strategic advantage would actually use it to form a singleton. The desirability of such an outcome depends, of course, on the nature of the singleton that would be created and also on what the future of intelligent life would look like in alternative multipolar scenarios. We will revisit those questions in later chapters. But first let us take a closer look at why and how a superintelligence would be powerful and effective at achieving outcomes in the world.