Genius: The Life and Science of Richard Feynman - James Gleick (1993)

MIT

A seventeen-year-old freshman, Theodore Welton, helped some of the older students operate the wind-tunnel display at the Massachusetts Institute of Technology’s Spring Open House in 1936. Like so many of his classmates he had arrived at the Tech knowing all about airplanes, electricity, and chemicals and revering Albert Einstein. He was from a small town, Saratoga Springs, New York. With most of his first year behind him, he had lost none of his confidence. When his duties ended, he walked around and looked at the other exhibits. A miniature science fair of current projects made the open house a showcase for parents and visitors from Boston. He wandered over to the mathematics exhibit, and there, amid a crowd, his ears sticking out noticeably from a very fresh face, was what looked like another first-year boy, inappropriately taking charge of a complex, suitcase-size mechanical-mathematical device called a harmonic analyzer. This boy was pouring out explanations in a charged-up voice and fielding questions like a congressman at a press conference. The machine could take any arbitrary wave and break it down into a sum of simple sine and cosine waves. Welton, his own ears burning, listened while Dick Feynman rapidly explained the workings of the Fourier transform, the advanced mathematical technique for analyzing complicated wave forms, a piece of privileged knowledge that Welton until that moment had felt sure no other freshman possessed.

Welton (who liked to be called by his initials, T. A.) already knew he was a physics major. Feynman had vacillated twice. He began in mathematics. He passed an examination that let him jump ahead to the second-year calculus course, covering differential equations and integration in three-dimensional space. This still came easily, and Feynman thought he should have taken the second-year examination as well. But he also began to wonder whether this was the career he wanted. American professional mathematics of the thirties was enforcing its rigor and abstraction as never before, disdaining what outsiders would call “applications.” To Feynman—having finally reached a place where he was surrounded by fellow tinkerers and radio buffs—mathematics began to seem too abstract and too far removed.

In the stories modern physicists have made of their own lives, a fateful moment is often the one in which they realize that their interest no longer lies in mathematics. Mathematics is always where they begin, for no other school course shows off their gifts so clearly. Yet a crisis comes: they experience an epiphany, or endure a slowly building disgruntlement, and plunge or drift into this other, hybrid field. Werner Heisenberg, seventeen years older than Feynman, experienced his moment of crisis at the University of Munich, in the office of the local statesman of mathematics, Ferdinand von Lindemann. For some reason Heisenberg could never forget Lindemann’s horrid yapping black dog. It reminded him of the poodle in Faust and made it impossible for him to think clearly when the professor, learning that Heisenberg was reading Weyl’s new book about relativity theory, told him, “In that case you are completely lost to mathematics.” Feynman himself, halfway through his freshman year, reading Eddington’s book about relativity theory, confronted his own department chairman with the classic question about mathematics: What is it good for? He got the classic answer: If you have to ask, you are in the wrong field. Mathematics seemed suited only for teaching mathematics. His department chairman suggested calculating actuarial probabilities for insurance companies. This was not a joke. The vocational landscape had just been surveyed by one Edward J. v. K. Menge, Ph.D., Sc.D., who published his findings in a monograph titled Jobs for the College Graduate in Science. “The American mind is taken up largely with applications rather than with fundamental principles,” Menge noticed. “It is what is known as ‘practical.’” This left little room for would-be mathematicians: “The mathematician has little opportunity of employment except in the universities in some professorial capacity. He may become a practitioner of his profession, it is true, if he acts as an actuary for some large insurance company….” Feynman changed to electrical engineering. Then he changed again, to physics.

Not that physics promised much more as a vocation. The membership of the American Physical Society still fell shy of two thousand, though it had doubled in a decade. Teaching at a college or working for the government in, most likely, the Bureau of Standards or the Weather Bureau, a physicist might expect to earn a good wage of three thousand to six thousand dollars a year. But the Depression had forced the government and the leading corporate laboratories to lay off nearly half of their staff scientists. A Harvard physics professor, Edwin C. Kemble, reported that finding jobs for graduating physicists had become a “nightmare.” Not many arguments could be made for physics as a vocation.

Menge, putting his pragmatism aside for a moment, offered perhaps the only one: Does the student, he asked, “feel the craving of adding to the sum total of human knowledge? Or does he want to see his work go on and on and his influence spread like the ripples on a placid lake into which a stone has been cast? In other words, is he so fascinated with simply knowing the subject that he cannot rest until he learns all he can about it?”

Of the leading men in American physics MIT had three of the best, John C. Slater, Philip M. Morse, and Julius A. Stratton. They came from a more standard mold—gentlemanly, homebred, Christian—than some of the physicists who would soon eclipse them, foreigners like Hans Bethe and Eugene Wigner, who had just arrived at Cornell University and Princeton University, respectively, and Jews like I. I. Rabi and J. Robert Oppenheimer, who had been hired at Columbia University and the University of California at Berkeley, despite anti-Semitic misgivings at both places. Stratton later became president of MIT, and Morse became the first director of the Brookhaven National Laboratory for Nuclear Research. The department head was Slater. He had been one of the young Americans studying overseas, though he was not as deeply immersed in the flood tides of European physics as, for example, Rabi, who made the full circuit: Zurich, Munich, Copenhagen, Hamburg, Leipzig, and Zurich again. Slater had studied briefly at Cambridge University in 1923, and somehow he missed the chance to meet Dirac, though they attended at least one course together.

Slater and Dirac crossed paths intellectually again and again during the decade that followed. Slater kept making minor discoveries that Dirac had made a few months earlier. He found this disturbing. It seemed to Slater furthermore that Dirac enshrouded his discoveries in an unnecessary and somewhat baffling web of mathematical formalism. Slater tended to mistrust them. In fact he mistrusted the whole imponderable miasma of philosophy now flowing from the European schools of quantum mechanics: assertions about the duality or complementarity or “Jekyll-Hyde” nature of things; doubts about time and chance; the speculation about the interfering role of the human observer. “I do not like mystiques; I like to be definite,” Slater said. Most of the European physicists were reveling in such issues. Some felt an obligation to face the consequences of their equations. They recoiled from the possibility of simply putting their formidable new technology to work without developing a physical picture to go along with it. As they manipulated their matrices or shuffled their differential equations, questions kept creeping in. Where is that particle when no one is looking? At the ancient stone-built universities philosophy remained the coin of the realm. A theory about the spontaneous, whimsical birth of photons in the energy decay of excited atoms—an effect without a cause—gave scientists a sledgehammer to wield in late-evening debates about Kantian causality. Not so in America. “A theoretical physicist in these days asks just one thing of his theories,” Slater said defiantly soon after Feynman arrived at MIT. The theories must make reasonably good predictions about experiments. That is all.

He does not ordinarily argue about philosophical implications…. Questions about a theory which do not affect its ability to predict experimental results correctly seem to me quibbles about words, … and I am quite content to leave such questions to those who derive some satisfaction from them.

When Slater spoke for common sense, for practicality, for a theory that would be experiment’s handmaid, he spoke for most of his American colleagues. The spirit of Edison, not Einstein, still governed their image of the scientist. Perspiration, not inspiration. Mathematics was unfathomable and unreliable. Another physicist, Edward Condon, said everyone knew what mathematical physicists did: “they study carefully the results obtained by experimentalists and rewrite that work in papers which are so mathematical that they find them hard to read themselves.” Physics could really only justify itself, he said, when its theories offered people a means of predicting the outcome of experiments—and at that, only if the predicting took less time than actually carrying out the experiments.

Unlike their European counterparts, American theorists did not have their own academic departments. They shared quarters with the experimenters, heard their problems, and tried to answer their questions pragmatically. Still, the days of Edisonian science were over and Slater knew it. With a mandate from MIT’s president, Karl Compton, he was assembling a physics department meant to bring the school into the forefront of American science and meanwhile to help American science toward a less humble world standing. He and his colleagues knew how unprepared the United States had been to train physicists in his own generation. Leaders of the nation’s rapidly growing technical industries knew it, too. When Slater arrived, the MIT department sustained barely a dozen graduate students. Six years later, the number had increased to sixty. Despite the Depression the institute had completed a new physics and chemistry laboratory with money from the industrialist George Eastman. Major research programs had begun in the laboratory fields devoted to using electromagnetic radiation as a probe into the structure of matter: especially spectroscopy, analyzing the signature frequencies of light shining from different substances, but also X-ray crystallography. (Each time physicists found a new kind of “ray” or particle, they put it to work illuminating the interstices of molecules.) New vacuum equipment and finely etched mirrors gave a high precision to the spectroscopic work. And a monstrous new electromagnet created fields more powerful than any on the planet.

Julius Stratton and Philip Morse taught the essential advanced theory course for seniors and graduate students, Introduction to Theoretical Physics, using Slater’s own text of the same name. Slater and his colleagues had created the course just a few years before. It was the capstone of their new thinking about the teaching of physics at MIT. They meant to bring back together, as a unified subject, the discipline that had been subdivided for undergraduates into mechanics, electromagnetism, thermodynamics, hydrodynamics, and optics. Undergraduates had been acquiring their theory piecemeal, in ad hoc codas to laboratory courses mainly devoted to experiment. Slater now brought the pieces back together and led students toward a new topic, the “modern atomic theory.” No course yet existed in quantum mechanics, but Slater’s students headed inward toward the atom with a grounding not just in classical mechanics, treating the motion of solid objects, but also in wave mechanics—vibrating strings, sound waves bouncing around in hollow boxes. The instructors told the students at the outset that the essence of theoretical physics lay not in learning to work out the mathematics, but in learning how to apply the mathematics to the real phenomena that could take so many chameleon forms: moving bodies, fluids, magnetic fields and forces, currents of electricity and water, and waves of water and light. Feynman, as a freshman, roomed with two seniors who took the course. As the year went on he attuned himself to their chatter and surprised them sometimes by joining in on the problem solving. “Why don’t you try Bernoulli’s equation?” he would say—mispronouncing Bernoulli because, like so much of his knowledge, this came from reading the encyclopedia or the odd textbooks he had found in Far Rockaway. By sophomore year he decided he was ready to take the course himself.

The first day everyone had to fill out enrollment cards: green for seniors and brown for graduate students. Feynman was proudly aware of the sophomore-pink card in his own pocket. Furthermore he was wearing an ROTC uniform; officer’s training was compulsory for first- and second-year students. But just as he was feeling most conspicuous, another uniformed, pink-card-carrying sophomore sat down beside him. It was T. A. Welton. Welton had instantly recognized the mathematics whiz from the previous spring’s open house.

Feynman looked at the books Welton was stacking on his desk. He saw Tullio Levi-Civita’s Absolute Differential Calculus, a book he had tried to get from the library. Welton, meanwhile, looked at Feynman’s desk and realized why he had not been able to find A. P. Wills’s Vector and Tensor Analysis. Nervous boasting ensued. The Saratoga Springs sophomore claimed to know all about general relativity. The Far Rockaway sophomore announced that he had already learned quantum mechanics from a book by someone called Dirac. They traded several hours’ worth of sketchy knowledge about Einstein’s work on gravitation. Both boys realized that, as Welton put it, “cooperation in the struggle against a crew of aggressive-looking seniors and graduate students might be mutually beneficial.”

Nor were they alone in recognizing that Introduction to Theoretical Physics now harbored a pair of exceptional young students. Stratton, handling the teaching chores for the first semester, would sometimes lose the thread of a string of equations at the blackboard, the color of his face shifting perceptibly toward red. He would then pass the chalk, saying, “Mr. Feynman, how did you handle this problem,” and Feynman would stride to the blackboard.

The Best Path

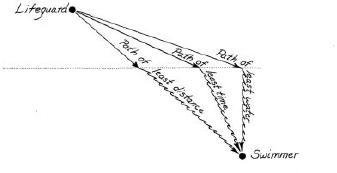

A law of nature expressed in a strange form came up again and again that term: the principle of least action. It arose in a simple sort of problem. A lifeguard, some feet up the beach, sees a drowning swimmer diagonally ahead, some distance offshore and some distance to one side. The lifeguard can run at a certain speed and swim at a certain lesser speed. How does one find the fastest path to the swimmer?

The path of least time. The lifeguard travels faster on land than in water; the best path is a compromise. Light, which also travels faster through air than through water, seems somehow to choose precisely this path on its way from an underwater fish to the eye of an observer.

A straight line, the shortest path, is not the fastest. The lifeguard will spend too much time in the water. If instead he angles far up the beach and dives in directly opposite the swimmer—the path of least water—he still wastes time. The best compromise is the path of least time, angling up the beach and then turning for a sharper angle through the water. Any calculus student can find the best path. A lifeguard has to trust his instincts. The mathematician Pierre de Fermat guessed in 1661 that the bending of a ray of light as it passes from air into water or glass—the refraction that makes possible lenses and mirages—occurs because light behaves like a lifeguard with perfect instincts. It follows the path of least time. (Fermat, reasoning backward, surmised that light must travel more slowly in denser media. Later Newton and his followers thought they had proved the opposite: that light, like sound, travels faster through water than through air. Fermat, with his faith in a principle of simplicity, was right.)
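The lifeguard's compromise can be checked numerically. What follows is a minimal sketch, not anything drawn from the course itself: the distances and speeds are hypothetical, the shoreline is assumed to be a straight line, and the function names are invented for illustration. It searches for the entry point that minimizes total time and then confirms that the winning path obeys the law of refraction, with the sine of each angle divided by the speed on that side coming out equal.

```python
import math


def travel_time(x, a, b, d, v_run, v_swim):
    """Time to reach the swimmer when entering the water at shoreline point x."""
    run = math.hypot(a, x) / v_run          # leg across the sand
    swim = math.hypot(b, d - x) / v_swim    # leg through the water
    return run + swim


def best_entry_point(a, b, d, v_run, v_swim, tol=1e-9):
    """Ternary search on [0, d]; this works because travel_time is convex in x."""
    lo, hi = 0.0, d
    while hi - lo > tol:
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        t1 = travel_time(m1, a, b, d, v_run, v_swim)
        t2 = travel_time(m2, a, b, d, v_run, v_swim)
        if t1 < t2:
            hi = m2
        else:
            lo = m1
    return (lo + hi) / 2


def snell_ratios(x, a, b, d, v_run, v_swim):
    """Return sin(angle) / speed on each side, angles measured from the shoreline normal."""
    sin_land = x / math.hypot(a, x)
    sin_water = (d - x) / math.hypot(b, d - x)
    return sin_land / v_run, sin_water / v_swim


# Hypothetical numbers: lifeguard 20 m up the beach, swimmer 30 m offshore
# and 50 m to one side; running at 7 m/s, swimming at 2 m/s.
A, B, D = 20.0, 30.0, 50.0
V_RUN, V_SWIM = 7.0, 2.0

if __name__ == "__main__":
    x_best = best_entry_point(A, B, D, V_RUN, V_SWIM)
    x_straight = D * A / (A + B)   # where the straight line crosses the shoreline
    for label, x in [("straight line", x_straight),
                     ("least water", D),
                     ("least time", x_best)]:
        print(f"{label:14s} enters at {x:6.2f} m, "
              f"takes {travel_time(x, A, B, D, V_RUN, V_SWIM):6.2f} s")
    print("sin/speed on land and in water:",
          snell_ratios(x_best, A, B, D, V_RUN, V_SWIM))
```

A calculus student would reach the same entry point by setting the derivative of the travel time to zero; the search above only stands in for that step.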

Theology, philosophy, and physics had not yet become so distinct from one another, and scientists found it natural to ask what sort of universe God would make. Even in the quantum era the question had not fully disappeared from the scientific consciousness. Einstein did not hesitate to invoke His name. Yet when Einstein doubted that God played dice with the world, or when he uttered phrases like the one later inscribed in the stone of Fine Hall at Princeton, “The Lord God is subtle, but malicious he is not,” the great man was playing a delicate game with language. He had found a formulation easily understood and imitated by physicists, religious or not. He could express convictions about how the universe ought to be designed without giving offense either to the most literal believers in God or to his most disbelieving professional colleagues, who were happy to read God as a poetic shorthand for whatever laws or principles rule this flux of matter and energy we happen to inhabit. Einstein’s piety was sincere but neutral, acceptable even to the vehemently antireligious Dirac, of whom Wolfgang Pauli once complained, “Our friend Dirac, too, has a religion, and its guiding principle is ‘There is no God and Dirac is His prophet.’”

Scientists of the seventeenth and eighteenth centuries also had to play a double game, and the stakes were higher. Denying God was still a capital offense, and not just in theory: offenders could be hanged or burned. Scientists made an assault against faith merely by insisting that knowledge—some knowledge—must wait on observation and experiment. It was not so obvious that one category of philosopher should investigate the motion of falling bodies and another the provenance of miracles. On the contrary, Newton and his contemporaries happily constructed scientific proofs of God’s existence or employed God as a premise in a chain of reasoning. Elementary particles must be indivisible, Newton wrote in his Opticks, “so very hard as never to wear or break in pieces; no ordinary power being able to divide what God himself made one in the first creation.” Elementary particles cannot be indivisible, René Descartes wrote in his Principles of Philosophy:

There cannot be any atoms or parts of matter which are indivisible of their own nature (as certain philosophers have imagined)…. For though God had rendered the particle so small that it was beyond the power of any creature to divide it, He could not deprive Himself of the power of division, because it was absolutely impossible that He should lessen His own omnipotence….

Could God make atoms so flawed that they could break? Could God make atoms so perfect that they would defy His power to break them? It was only one of the difficulties thrown up by God’s omnipotence, even before relativity placed a precise upper limit on velocity and before quantum mechanics placed a precise upper limit on certainty. The natural philosophers wished to affirm the presence and power of God in every corner of the universe. Yet even more fervently they wished to expose the mechanisms by which planets swerved, bodies fell, and projectiles recoiled in the absence of any divine intervention. No wonder Descartes appended a blanket disclaimer: “At the same time, recalling my insignificance, I affirm nothing, but submit all these opinions to the authority of the Catholic Church, and to the judgment of the more sage; and I wish no one to believe anything I have written, unless he is personally persuaded by the evidence of reason.”

The more competently science performed, the less it needed God. There was no special providence in the fall of a sparrow; just Newton’s second law, f = ma. Forces, masses, and acceleration were the same everywhere. The Newtonian apple fell from its tree as mechanistically and predictably as the moon fell around the Newtonian earth. Why does the moon follow its curved path? Because its path is the sum of all the tiny paths it takes in successive instants of time; and because at each instant its forward motion is deflected, like the apple, toward the earth. God need not choose the path. Or, having chosen once, in creating a universe with such laws, He need not choose again. A God that does not intervene is a God receding into a distant, harmless background.

Yet even as the eighteenth-century philosopher scientists learned to compute the paths of planets and projectiles by Newton’s methods, a French geometer and philosophe, Pierre-Louis Moreau de Maupertuis, discovered a strangely magical new way of seeing such paths. In Maupertuis’s scheme a planet’s path has a logic that cannot be seen from the vantage point of someone merely adding and subtracting the forces at work instant by instant. He and his successors, and especially Joseph Louis Lagrange, showed that the paths of moving objects are always, in a special sense, the most economical. They are the paths that minimize a quantity called action—a quantity based on the object’s velocity, its mass, and the space it traverses. No matter what forces are at work, a planet somehow chooses the cheapest, the simplest, the best of all possible paths. It is as if God—a parsimonious God—were after all leaving his stamp.

None of which mattered to Feynman when he encountered Lagrange’s method in the form of a computational shortcut in Introduction to Theoretical Physics. All he knew was that he did not like it. To his friend Welton and to the rest of the class the Lagrange formulation seemed elegant and useful. It let them disregard many of the forces acting in a problem and cut straight through to an answer. It served especially well in freeing them from the right-angle coordinate geometry of the classical reference frame required by Newton’s equations. Any reference frame would do for the Lagrangian technique. Feynman refused to employ it. He said he would not feel he understood the real physics of a system until he had painstakingly isolated and calculated all the forces. The problems got harder and harder as the class advanced through classical mechanics. Balls rolled down inclines, spun in paraboloids—Feynman would resort to ingenious computational tricks like the ones he learned in his mathematics-team days, instead of the seemingly blind, surefire Lagrangian method.

Feynman had first come on the principle of least action in Far Rockaway, after a bored hour of high-school physics, when his teacher, Abram Bader, took him aside. Bader drew a curve on the blackboard, the roughly parabolic shape a ball would take if someone threw it up to a friend at a second-floor window. If the time for the journey can vary, there are infinitely many such paths, from a high, slow lob to a nearly straight, fast trajectory. But if you know how long the journey took, the ball can have taken only one path. Bader told Feynman to make two familiar calculations of the ball’s energy: its kinetic energy, the energy of its motion, and its potential energy, the energy it possesses by virtue of its presence high in a gravitational field. Like all high-school physics students Feynman was used to adding those energies together. An airplane, accelerating as it dives, or a roller coaster, sliding down the gravity well, trades its potential energy for kinetic energy: as it loses height it gains speed. On the way back up, friction aside, the airplane or roller coaster makes the same conversion in reverse: kinetic energy becomes potential energy again. Either way, the total of kinetic and potential energy never changes. The total energy is conserved.

Bader asked Feynman to consider a less intuitive quantity than the sum of these energies: their difference. Subtracting the potential energy from the kinetic energy was as easy as adding them. It was just a matter of changing signs. But understanding the physical meaning was harder. Far from being conserved, this quantity—the action, Bader said—changed constantly. Bader had Feynman calculate it for the ball’s entire flight to the window. And he pointed out what seemed to Feynman a miracle. At any particular moment the action might rise or fall, but when the ball arrived at its destination, the path it had followed would always be the path for which the total action was least. For any other path Feynman might try drawing on the blackboard—a straight line from the ground to the window, a higher-arcing trajectory, or a trajectory that deviated however slightly from the fated path—he would find a greater average difference between kinetic and potential energy.
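Bader's blackboard exercise can be repeated with a few lines of arithmetic. The sketch below is an illustration under stated assumptions rather than a reconstruction of what Bader wrote: it tracks only the ball's vertical motion, it picks a hypothetical window height and flight time, and it approximates the action by summing kinetic minus potential energy over many short time steps. Every trial path starts on the ground and ends at the window at the same moment.

```python
import math

G = 9.81   # gravitational acceleration, m/s^2
T = 1.0    # hypothetical flight time, seconds
H = 4.0    # hypothetical window height, metres


def action(path, total_time, mass=1.0):
    """Sum (kinetic - potential) * dt over a path sampled at equal time steps."""
    steps = len(path) - 1
    dt = total_time / steps
    total = 0.0
    for y0, y1 in zip(path, path[1:]):
        velocity = (y1 - y0) / dt
        kinetic = 0.5 * mass * velocity ** 2
        potential = mass * G * (y0 + y1) / 2   # height at the middle of the step
        total += (kinetic - potential) * dt
    return total


def sample(fn, total_time, steps=1000):
    """Evaluate a height function at equally spaced times from 0 to total_time."""
    return [fn(i * total_time / steps) for i in range(steps + 1)]


def true_path(t):
    """The parabola that Newton's laws give for this height and flight time."""
    v0 = H / T + G * T / 2
    return v0 * t - 0.5 * G * t * t


def straight_line(t):
    """Constant-speed climb from the ground to the window."""
    return H * t / T


def bumped(eps):
    """The true path plus a bump that vanishes at both ends."""
    return lambda t: true_path(t) + eps * math.sin(math.pi * t / T)


if __name__ == "__main__":
    trials = [("true parabola", true_path),
              ("straight line", straight_line),
              ("higher arc", bumped(0.5)),
              ("slight deviation", bumped(-0.01))]
    for label, fn in trials:
        print(f"{label:17s} action = {action(sample(fn, T), T):.6f}")
```

Dividing each action by the flight time gives the average difference between kinetic and potential energy, the quantity that comes out smallest for the true parabola.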

It is almost impossible for a physicist to talk about the principle of least action without inadvertently imputing some kind of volition to the projectile. The ball seems to choose its path. It seems to know all the possibilities in advance. The natural philosophers started encountering similar minimum principles throughout science. Lagrange himself offered a program for computing planetary orbits. The behavior of billiard balls crashing against each other seemed to minimize action. So did weights swung on a lever. So, in a different way, did light rays bent by water or glass. Fermat, in plucking his principle of least time from a pristine mathematical landscape, had found the same law of nature.

Where Newton’s methods left scientists with a feeling of comprehension, minimum principles left a sense of mystery. “This is not quite the way one thinks in dynamics,” the physicist David Park has noted. One likes to think that a ball or a planet or a ray of light makes its way instant by instant, not that it follows a preordained path. From the Lagrangian point of view the forces that pull and shape a ball’s arc into a gentle parabola serve a higher law. Maupertuis wrote, “It is not in the little details … that we must look for the supreme Being, but in phenomena whose universality suffers no exception and whose simplicity lays them quite open to our sight.” The universe wills simplicity. Newton’s laws provide the mechanics; the principle of least action ensures grace.

The hard question remained. (In fact, it would remain, disquieting the few physicists who continued to ponder it, until Feynman, having long since overcome his aversion to the principle of least action, found the answer in quantum mechanics.) Park phrased the question simply: How does the ball know which path to choose?

Socializing the Engineer

“Let none say that the engineer is an unsociable creature who delights only in formulae and slide rules.” So pleaded the MIT yearbook. Some administrators and students did worry about the socialization of this famously awkward creature. One medicine prescribed by the masters of student life was Tea, compulsory for all freshmen. (“But after they have conquered their initial fears and learned to balance a cup on a saucer while conversing with the wife of a professor, compulsion is no longer necessary.”) Students also refined their conversational skills at Bull Session Dinners and their other social skills at an endless succession of dances: Dormitory Dinner Dances, the Christmas Dance and the Spring Dance, a Monte Carlo Dance featuring a roulette wheel and a Barn Dance offering sleigh rides, dances to attract students from nearby women’s colleges like Radcliffe and Simmons, dances accompanied by the orchestras of Nye Mayhew and Glenn Miller, the traditional yearly Field Day Dance after the equally traditional Glove Fight, and, in the fraternity houses that provided the most desirable student quarters, formal dances that persuaded even Dick Feynman to put on a tuxedo almost every week.

The fraternities at MIT, as elsewhere, strictly segregated students by religion. Jews had a choice of just two, and Feynman joined the one called Phi Beta Delta, on Bay State Road in Boston, in a neighborhood of town houses just across the Charles River from campus. One did not simply “join” a fraternity, however. One enjoyed a wooing process that began the summer before college at local smokers and continued, in Feynman’s case, with insistent offers of transportation and lodging that bordered on kidnapping. Having chosen a fraternity, one instantly underwent a status reversal, from an object of desire to an object of contempt. New pledges endured systematic humiliation. Their fraternity brothers drove Feynman and the other boys to an isolated spot in the Massachusetts countryside, abandoned them beside a frozen lake, and left them to find their way home. They submitted to wrestling matches in mud and allowed themselves to be tied down overnight on the wooden floor of a deserted house—though Feynman, still secretly afraid that he would be found out as a sissy, made a surprising show of resisting his sophomore captors by grabbing at their legs and trying to knock them over. These rites were tests of character, after all, mixed with schoolboy sadism that colleges only gradually learned to restrain. The hazing left many boys with emotional bonds both to their tormentors and to their fellow victims.

Walking into the parlor floor of the Bay State Road chapter house of Phi Beta Delta, a student could linger in the front room with its big bay windows overlooking the street or head directly for the dining room, where Feynman ate most of his meals for four years. The members wore jackets and ties to dinner. They gathered in the anteroom fifteen minutes before and waited for the bell that announced the meal. White-painted pilasters rose toward the high ceilings. A stairway bent gracefully up four flights. Fraternity members often leaned over the carved railing to shout down to those below, gathered around the wooden radio console in one corner or waiting to use the pay telephone on an alcove wall. The telephone provided an upperclassman with one of his many opportunities to harass freshmen: they were obliged to carry nickels for making change. They also carried individual black notebooks for keeping a record of their failures, among other things, to carry nickels. Feynman developed a trick of catching a freshman nickel-less, making a mark in his black book, and then punishing the same freshman all over again a few minutes later. The second and third floors were given over entirely to study rooms, where students worked in twos and threes. Only the top floor was for sleeping, in double-decker bunks crowded together.

Compulsory Tea notwithstanding, some members argued vehemently that other members lacked essential graces, among them the ability to dance and the ability to invite women to accompany them to a dance. For a while this complaint dominated the daily counsel of the thirty-odd members of Phi Beta Delta. A generation later the ease of postwar life made a place for words like “wonk” and “nerd” in the collegiate vocabulary. In more class-bound and less puritanical cultures the concept flowered even earlier. Britain had its boffins, working researchers subject to the derision of intellectual gentlemen. At MIT in the thirties the nerd did not exist; a penholder worn in the shirt pocket represented no particular gaucherie; a boy could not become a figure of fun merely by studying. This was fortunate for Feynman and others like him, socially inept, athletically feeble, miserable in any but a science course, risking laughter every time he pronounced an unfamiliar name, so worried about the other sex that he trembled when he had to take the mail out past girls sitting on the stoop. America’s future scientists and engineers, many of them rising from the working class, valued studiousness without question. How could it be otherwise, in the knots that gathered almost around the clock in fraternity study rooms, filling dappled cardboard notebooks with course notes to be handed down to generations? Even so, Phi Beta Delta perceived a problem. There did seem to be a connection between hard studying and failure to dance. The fraternity made a cooperative project of enlivening the potential dull boys. Attendance at dances became mandatory for everyone in Phi Beta Delta. For those who could not find dates, the older boys arranged dates. In return, stronger students tutored the weak. Dick felt he got a good bargain. Eventually he astonished even the most sociable of his friends by spending long hours at the Raymore-Playmore Ballroom, a huge dance hall near Boston’s Symphony Hall with a mirrored ball rotating from the ceiling.

The best help for his social confidence, however, came from Arline Greenbaum. She was still one of the most beautiful girls he knew, with dimples in her round, ruddy face, and she was becoming a distinct presence in his life, though mostly from a distance. On Saturdays she would visit his family in Far Rockaway and give Joan piano lessons. She was the kind of young woman that people called “talented”—musical and artistic in a well-rounded way. She danced and sang in the Lawrence High School revue, “America on Her Way.” The Feynmans let her paint a parrot on the inside door of the coat closet downstairs. Joan started to think of her as an especially benign older sister. Often after their piano lesson they went for walks or rode their bicycles to the beach.

Arline also made an impression on the fraternity boys when she started visiting on occasional weekends and spared Dick the necessity of finding a date from among the students at the nearby women’s colleges or (to the dismay of his friends) from among the waitresses at the coffee shop he frequented. Maybe there was hope for Dick after all. Still, they wondered whether she would succeed in domesticating him before he found his way to the end of her patience. Over the winter break he had some of his friends home to Far Rockaway. They went to a New Year’s Eve party in the Bronx, taking the long subway-train ride across Brooklyn and north through Manhattan and returning, early in the morning, by the same route. By then Dick had decided that alcohol made him stupid. He avoided it with unusual earnestness. His friends knew that he had drunk no wine or liquor at the party, but all the way home he put on a loud, staggering drunk act, reeling off the subway car doors, swinging from the overhead straps, leaning over the seated passengers, and comically slurring nonsense at them. Arline watched unhappily. She had made up her mind about him, however. Sometime in his junior year he suggested that they become engaged. She agreed. Long afterward he discovered that she considered that to have been not his first but his second proposal of marriage—he had once said (offhandedly, he thought) that he would like her to be his wife.

Her well-bred talents for playing the piano, singing, drawing, and conversing about literature and the arts met in Feynman a bristling negatively charged void. He resented art. Music of all kinds made him edgy and uncomfortable. He felt he had a feeling for rhythm, and he had fallen into a habit of irritating his roommates and study partners with an absentminded drumming of his fingers, a tapping staccato against walls and wastebaskets. But melody and harmony meant nothing to him; they were sand in the mouth. Although psychologists liked to speculate about the evident mental links between the gift for mathematics and the gift for music, Feynman found music almost painful. He was becoming not passively but aggressively uncultured. When people talked about painting or music, he heard nomenclature and pomposity. He rejected the bird’s nest of traditions, stories, and knowledge that cushioned most people, the cultural resting place woven from bits of religion, American history, English literature, Greek myth, Dutch painting, German music. He was starting fresh. Even the gentle, hearth-centered Reform Judaism of his parents left him cold. They had sent him to Sunday school, but he had quit, shocked at the discovery that those stories—Queen Esther, Mordechai, the Temple, the Maccabees, the oil that burned eight nights, the Spanish Inquisition, the Jew who sailed with Christopher Columbus, the whole pastel mosaic of holiday legends and morality tales offered to Jewish schoolchildren on Sundays—mixed fiction with fact. Of the books assigned by his high-school teachers he read almost none. His friends mocked him when, forced to read a book, any book, in preparing for the New York State Regents Examination, he chose Treasure Island. (But he outscored all of them, even in English, when he wrote an essay on “the importance of science in aviation” and padded his sentences with what he knew to be redundant but authoritative phrases like “eddies, vortices, and whirlpools formed in the atmosphere behind the aircraft …”)

He was what the Russians derided as nekulturniy, what Europeans refused to permit in an educated scientist. Europe prepared its scholars to register knowledge more broadly. At one of the fateful moments toward which Feynman’s life was now beginning to speed, he would stand near the Austrian theorist Victor Weisskopf, both men watching as a light flared across the southern New Mexico sky. In that one instant Feynman would see a great ball of flaming orange, churning amid black smoke, while Weisskopf would hear, or think he heard, a Tchaikovsky waltz playing over the radio. That was strangely banal accompaniment for a yellow-orange sphere surrounded by a blue halo—a color that Weisskopf thought he had seen before, on an altarpiece at Colmar painted by the medieval master Matthias Grünewald to depict (the irony was disturbing) the ascension of Christ. No such associations for Feynman. MIT, America’s foremost technical school, was the best and the worst place for him. The institute justified its required English course by reminding students that they might someday have to write a patent application. Some of Feynman’s fraternity friends actually liked French literature, he knew, or actually liked the lowest-common-denominator English course, with its smattering of great books, but to Feynman it was an intrusion and a pain in the neck.

In one course he resorted to cheating. He refused to do the daily reading and got through a routine quiz, day after day, by looking at his neighbor’s answers. English class to Feynman meant arbitrary rules about spelling and grammar, the memorization of human idiosyncrasies. It seemed like supremely useless knowledge, a parody of what knowledge ought to be. Why didn’t the English professors just get together and straighten out the language? Feynman got his worst grade in freshman English, barely passing, worse than his grades in German, a language he did not succeed in learning. After freshman year matters eased. He tried to read Goethe’s Faust and felt he could make no sense of it. Still, with some help from his fraternity friends he managed to write an essay on the limitations of reason: problems in art or ethics, he argued, could not be settled with certainty through chains of logical reasoning. Even in his class themes he was beginning to assert a moral viewpoint. He read John Stuart Mill’s On Liberty (“Whatever crushes individuality is despotism”) and wrote about the despotism of social niceties, the white lies and fake politesse that he so wanted to escape. He read Thomas Huxley’s “On a Piece of Chalk,” and wrote, instead of the analysis he was assigned, an imitation, “On a Piece of Dust,” musing on the ways dust makes raindrops form, buries cities, and paints sunsets. Although MIT continued to require humanities courses, it took a relaxed view of what might constitute humanities. Feynman’s sophomore humanities course, for example, was Descriptive Astronomy. “Descriptive” meant “no equations.” Meanwhile in physics itself Feynman took two courses in mechanics (particles, rigid bodies, liquids, stresses, heat, the laws of thermodynamics), two in electricity (electrostatics, magnetism, …), one in experimental physics (students were expected to design original experiments and show that they understood many different sorts of instruments), a lecture course and a laboratory course in optics (geometrical, physical, and physiological), a lecture course and a laboratory course in electronics (devices, thermionics, photoemission), a course in X rays and crystals, a course and a laboratory in atomic structure (spectra, radioactivity, and a physicist’s view of the periodic table), a special seminar on the new nuclear theory, Slater’s advanced theory course, a special seminar on quantum theory, and a course on heat and thermodynamics that worked toward statistical mechanics both classical and quantum; and then, his docket full, he listened in on five more advanced courses, including relativity and advanced mechanics. When he wanted to round out his course selection with something different, he took metallography.

Then there was philosophy. In high school he had entertained the conceit that different kinds of knowledge come in a hierarchy: biology and chemistry, then physics and mathematics, and then philosophy at the top. His ladder ran from the particular and ad hoc to the abstract and theoretical—from ants and leaves to chemicals, atoms, and equations and then onward to God, truth, and beauty. Philosophers have entertained the same notion. Feynman did not flirt with philosophy long, however. His sense of what constituted a proof had already developed into something more hard-edged than the quaint arguments he found in Descartes, for example, whom Arline was reading. The Cartesian proof of God’s perfection struck him as less than rigorous. When he parsed I think, therefore I am, it came out suspiciously close to I am and I also think. When Descartes argued that the existence of imperfection implied perfection, and that the existence of a God concept in his own fuzzy and imperfect mind implied the existence of a Being sufficiently perfect and infinite as to create such a conception, Feynman thought he saw the obvious fallacy. He knew all about imperfection in science—“degrees of approximation.” He had drawn hyperbolic curves that approached an ideal straight line without ever reaching it. People like Descartes were stupid, Richard told Arline, relishing his own boldness in defying the authority of the great names. Arline replied that she supposed there were two sides to everything. Richard gleefully contradicted even that. He took a strip of paper, gave it a half twist, and pasted the ends together: he had produced a surface with one side.

No one showed Feynman, in return, the genius of Descartes’s strategy in proving the obvious—obvious because he and his contemporaries were supposed to take their own and God’s existence as given. The Cartesian master plan was to reject the obvious, reject the certain, and start fresh from a state of total doubt. Even I might be an illusion or a dream, Descartes declared. It was the first great suspension of belief. It opened a door to the skepticism that Feynman now savored as part of the modern scientific method. Richard stopped reading, though, long before giving himself the pleasure of rejecting Descartes’s final, equally unsyllogistic argument for the existence of God: that a perfect being would certainly have, among other excellent features, the attribute of existence.

Philosophy at MIT only irritated Feynman more. It struck him as an industry built by incompetent logicians. Roger Bacon, famous for introducing scientia experimentalis into philosophical thought, seemed to have done more talking than experimenting. His idea of experiment seemed closer to mere experience than to the measured tests a twentieth-century student performed in his laboratory classes. A modern experimenter took hold of some physical apparatus and performed certain actions on it, again and again, and generally wrote down numbers. William Gilbert, a less well-known sixteenth-century investigator of magnetism, suited Feynman better, with his credo, “In the discovery of secret things and in the investigation of hidden causes, stronger reasons are obtained from sure experiments and demonstrated arguments than from probable conjectures and the opinions of philosophical speculators of the common sort.” That was a theory of knowledge Feynman could live by. It also stuck in his mind that Gilbert thought Bacon wrote science “like a prime minister.” MIT’s physics instructors did nothing to encourage students to pay attention to the philosophy instructors. The tone was set by the pragmatic Slater, for whom philosophy was smoke and perfume, free-floating and untestable prejudice. Philosophy set knowledge adrift; physics anchored knowledge to reality.

“Not from positions of philosophers but from the fabric of nature”—William Harvey three centuries earlier had declared a division between science and philosophy. Cutting up corpses gave knowledge a firmer grounding than cutting up sentences, he announced, and the gulf between two styles of knowledge came to be accepted by both camps. What would happen when scientists plunged their knives into the less sinewy reality inside the atom remained to be seen. In the meantime, although Feynman railed against philosophy, an instructor’s cryptic comment about “stream of consciousness” started him thinking about what he could learn of his own mind through introspection. His inward looking was more experimental than Descartes’s. He would go up to his room on the fourth floor of Phi Beta Delta, pull down the shades, get into bed, and try to watch himself fall asleep, as if he were posting an observer on his shoulder. His father years before had raised the problem of what happens when one falls asleep. He liked to prod Ritty to step outside himself and look afresh at his usual way of thinking: he asked how the problem would look to a Martian who arrived in Far Rockaway and started asking questions. What if Martians never slept? What would they want to know? How does it feel to fall asleep? Do you simply turn off, as if someone had thrown a switch? Or do your ideas come slower and slower until they stop? Up in his room, taking midday naps for the sake of philosophy, Feynman found that he could follow his consciousness deeper and deeper toward the dissolution that came with sleep. His thoughts, he saw, did not so much slow down as fray apart, snapping from place to place without the logical connectives of waking brain work. He would suddenly realize he had been imagining his bed rising amid a contraption of pulleys and wires, ropes winding upward and catching against one another, Feynman thinking, the tension of the ropes will hold … and then he would be awake again. He wrote his observations in a class paper, concluding with a comment in the form of doggerel about the hall-of-mirrors impossibility of true introspection: “I wonder why I wonder why. I wonder why I wonder. I wonder why I wonder why I wonder why I wonder!”

After his instructor read his paper aloud in class, poem and all, Feynman began trying to watch his dreams. Even there he obeyed a tinkerer’s impulse to take phenomena apart and look at the works inside. He was able to dream the same dream again and again, with variations. He was riding in a subway train. He noticed that kinesthetic feelings came through clearly. He could feel the lurching from side to side, see colors, hear the whoosh of air through the tunnel. As he walked through the car he passed three girls in bathing suits behind a pane of glass like a store window. The train kept lurching, and suddenly he thought it would be interesting to see how sexually excited he could become. He turned to walk back toward the window—but now the girls had become three old men playing violins. He could influence the course of a dream, but not perfectly, he realized. In another dream Arline came by subway train to visit him in Boston. They met and Dick felt a wave of happiness. There was green grass, the sun was shining, they walked along, and Arline said, “Could we be dreaming?”

“No, sir,” Dick replied, “no, this is not a dream.” He persuaded himself of Arline’s presence so forcibly that when he awoke, hearing the noise of the boys around him, he did not know where he was. A dismayed, disoriented moment passed before he realized that he had been dreaming after all, that he was in his fraternity bedroom and that Arline was back home in New York.

The new Freudian view of dreams as a door to a person’s inner life had no place in his program. If his subconscious wished to play out desires too frightening or confusing for his ego to contemplate directly, that hardly mattered to Feynman. Nor did he care to think of his dream subjects as symbols, encoded for the sake of a self-protective obscurity. It was his ego, his “rational mind,” that concerned him. He was investigating his mind as an intriguingly complex machine, one whose tendencies and capabilities mattered to him more than almost anything else. He did develop a rudimentary theory of dreams for his philosophy essay, though it was more a theory of vision: that the brain has an “interpretation department” to turn jumbled sensory impressions into familiar objects and concepts; that the people or trees we think we see are actually created by the interpretation department from the splotches of color that enter the eye; and that dreams are the product of the interpretation department running wild, free of the sights and sounds of the waking hours.

His philosophical efforts at introspection did nothing to soften his dislike of the philosophy taught at MIT as The Making of the Modern Mind. Not enough sure experiments and demonstrated arguments; too many probable conjectures and philosophical speculations. He sat through lectures twirling a small steel drill bit against the sole of his shoe. So much stuff in there, so much nonsense, he thought. Better I should use my modern mind.

The Newest Physics

The theory of the fast and the theory of the small were narrowing the focus of the few dozen men with the suasion to say what physics was. Most of human experience passed in the vast reality that was neither fast nor small, where relativity and quantum mechanics seemed unnecessary and unnatural, where rivers ran, clouds flowed, baseballs soared and spun by classical means—but to young scientists seeking the most fundamental knowledge about the fabric of their universe, classical physics had no more to say. They could not ignore the deliberately disorienting rhetoric of the quantum mechanicians, nor the unifying poetry of Einstein’s teacher Hermann Minkowski: “Space of itself and time of itself will sink into mere shadows, and only a kind of union between them shall survive.”

Later, quantum mechanics suffused into the lay culture as a mystical fog. It was uncertainty, it was acausality, it was the Tao updated, it was the century’s richest fount of paradoxes, it was the permeable membrane between the observer and the observed, it was the funny business sending shudders up science’s all-too-deterministic scaffolding. For now, however, it was merely a necessary and useful contrivance for accurately describing the behavior of nature at the tiny scales now accessible to experimenters.

Nature had seemed so continuous. Technology, however, made discreteness and discontinuity a part of everyday experience: gears and ratchets creating movement in tiny jumps; telegraphs that digitized information in dashes and dots. What about the light emitted by matter? At everyday temperatures the light is infrared, its wavelengths too long to be visible to the eye. At higher temperatures, matter radiates at shorter wavelengths: thus an iron bar heated in a forge glows red, yellow, and white. By the turn of the century, scientists were struggling to explain this relationship between temperature and wavelength. If heat was to be understood as the motion of molecules, perhaps this precisely tuned radiant energy suggested an internal oscillation, a vibration with the resonant tonality of a violin string. The German physicist Max Planck pursued this idea to its logical conclusion and announced in 1900 that it required an awkward adjustment to the conventional way of thinking about energy. His equations produced the desired results only if one supposed that radiation was emitted in lumps, discrete packets called quanta. He calculated a new constant of nature, the indivisible unit underlying these lumps. It was a unit, not of energy, but of the product of energy and time—the quantity called action.
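Planck's adjustment can be written in one line. The block below is the standard modern textbook form, not Planck's own notation; the symbols $\nu$ (the frequency of the oscillation) and $n$ (a whole number of quanta) are introduced here for illustration.

```latex
% Energy is exchanged only in whole multiples of one quantum:
E_n = n\,h\,\nu, \qquad n = 0, 1, 2, \dots

% Planck's constant carries the units of action (energy x time):
h \approx 6.626 \times 10^{-34}\ \mathrm{J\,s}
```

Because $\nu$ is measured in cycles per second, the product $h\nu$ comes out as an energy, which is why $h$ itself must be an energy multiplied by a time.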

Five years later Einstein used Planck’s constant to explain another puzzle, the photoelectric effect, in which light absorbed by a metal knocks electrons free and creates an electric current. He, too, followed the relationship between wavelength and current to an inevitable mathematical conclusion: that light itself behaves not as a continuous wave but as a broken succession of lumps when it interacts with electrons.
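In the usual textbook statement of Einstein's result, each light quantum hands its whole energy to a single electron. The symbols below are assumptions of this sketch rather than anything in the passage: $W$ stands for the minimum energy needed to free an electron from a given metal, and $\nu$ for the frequency of the light.

```latex
% Maximum kinetic energy of an ejected electron:
E_{\max} = h\,\nu - W

% No electrons escape, however bright the light, unless
h\,\nu > W
```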

These were dubious claims. Most physicists found Einstein’s theory of special relativity, published the same year, more palatable. But in 1913 Niels Bohr, a young Dane working in Ernest Rutherford’s laboratory in Manchester, England, proposed a new model of the atom built on these quantum underpinnings. Rutherford had recently imagined the atom as a solar system in miniature, with electrons orbiting the nucleus. Without a quantum theory, physicists would have to accept the notion of electrons gradually spiraling inward as they radiated some of their energy away. The result would be continuous radiation and the eventual collapse of the atom in on itself. Bohr instead described an atom whose electrons could inhabit only certain orbits, prescribed by Planck’s indivisible constant. When an electron absorbed a light quantum, it meant that in that instant it jumped to a higher orbit: the soon-to-be-proverbial quantum jump. When the electron jumped to a lower orbit, it emitted a light quantum at a certain frequency. Everything else was simply forbidden. What happened to the electron “between” orbits? One learned not to ask.
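For hydrogen, the rule that a jump between allowed orbits emits one light quantum of a definite frequency can be turned into arithmetic. The following is a minimal sketch that assumes the standard Rydberg formula for hydrogen; the function name and the rounded constant are illustrative choices, not anything drawn from Bohr's paper.

```python
# Rydberg constant for hydrogen, in inverse metres (approximate reference value).
RYDBERG_H = 1.0967758e7


def emitted_wavelength_nm(n_upper: int, n_lower: int) -> float:
    """Wavelength (nm) of the light quantum emitted in a jump n_upper -> n_lower."""
    if n_lower < 1 or n_upper <= n_lower:
        raise ValueError("need n_upper > n_lower >= 1")
    # Rydberg formula: 1/lambda = R * (1/n_lower^2 - 1/n_upper^2)
    inverse_wavelength = RYDBERG_H * (1.0 / n_lower**2 - 1.0 / n_upper**2)
    return 1e9 / inverse_wavelength


if __name__ == "__main__":
    # Jumps that end on the second orbit give the visible lines of hydrogen.
    for n in range(3, 7):
        print(f"{n} -> 2: {emitted_wavelength_nm(n, 2):.1f} nm")
```

Only whole-number orbits appear in the calculation, so only a discrete set of wavelengths can come out; nothing in the formula describes the electron "between" orbits.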

These new kinds of lumpiness in the way science conceived of energy were the essence of quantum mechanics. It remained to create a theory, a mathematical framework that would accommodate the working out of these ideas. Classical intuitions had to be abandoned. New meanings had to be assigned to the notions of probability and cause. Much later, when most of the early quantum physicists were already dead, Dirac, himself chalky-haired and gaunt, with just a trace of white mustache, made the birth of quantum mechanics into a small fable. By then many scientists and writers had done so, but rarely with such unabashed stick-figure simplicity. There were heroes and almost heroes, those who reached the brink of discovery and those whose courage and faith in the equation led them to plunge onward.

Dirac’s simple morality play began with LORENTZ. This Dutch physicist realized that light shines from the oscillating charges within the atom, and he found a way of rearranging the algebra of space and time that produced a strange contraction of matter near the speed of light. As Dirac said, “Lorentz succeeded in getting correctly all the basic equations needed to establish the relativity of space and time, but he just was not able to make the final step.” Fear held him back.

Next came a bolder man, EINSTEIN. He was not so inhibited. He was able to move ahead and declare space and time to be joined.

HEISENBERG started quantum mechanics with “a brilliant idea”: “one should try to construct a theory in terms of quantities which are provided by experiment, rather than building it up, as people had done previously, from an atomic model which involved many quantities which could not be observed.” This amounted to a new philosophy, Dirac said.

(Conspicuously a noncharacter in Dirac’s fable was Bohr, whose 1913 model of the hydrogen atom now represented the old philosophy. Electrons whirling about a nucleus? Heisenberg wrote privately that this made no sense: “My whole effort is to destroy without a trace the idea of orbits.” One could observe light of different frequencies shining from within the atom. One could not observe electrons circling in miniature planetary orbits, nor any other atomic structure.)

It was 1925. Heisenberg set out to pursue his conception wherever it might lead, and it led to an idea so foreign and surprising that “he was really scared.” It seemed that Heisenberg’s quantities, numbers arranged in matrices, violated the usual commutative law of multiplication that says a times b equals b times a. Heisenberg’s quantities did not commute. There were consequences. Equations in this form could not specify both momentum and position with definite precision. A measure of uncertainty had to be built in.
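The failure of a times b to equal b times a is easy to see with small matrices. The sketch below uses two arbitrary 2-by-2 matrices chosen for illustration; they are not Heisenberg's actual quantities, which were infinite arrays, and the helper names are hypothetical.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    inner = len(b)
    return [
        [sum(a[i][t] * b[t][j] for t in range(inner)) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def commutator(a, b):
    """Return ab - ba; it is the zero matrix only when a and b commute."""
    ab, ba = matmul(a, b), matmul(b, a)
    return [[x - y for x, y in zip(row_ab, row_ba)] for row_ab, row_ba in zip(ab, ba)]


# Two illustrative matrices (an assumption of this sketch).
A = [[0, 1], [1, 0]]
B = [[1, 0], [0, -1]]

if __name__ == "__main__":
    print("AB      =", matmul(A, B))
    print("BA      =", matmul(B, A))
    print("AB - BA =", commutator(A, B))
```

For ordinary numbers the difference ab - ba is always zero. Here it is not, and in the quantum theory it is that leftover difference between position-times-momentum and momentum-times-position that sets the built-in uncertainty.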

A manuscript of Heisenberg’s paper made its way to DIRAC himself. He studied it. “You see,” he said, “I had an advantage over Heisenberg because I did not have his fears.”

Meanwhile, SCHRÖDINGER was taking a different route. He had been struck by an idea of DE BROGLIE two years before: that electrons, those pointlike carriers of electric charge, are neither particles nor waves but a mysterious combination. Schrödinger set out to make a wave equation, “a very neat and beautiful equation,” that would allow one to calculate electrons tugged by fields, as they are in atoms.

Then he tested his equation by calculating the spectrum of light emitted by a hydrogen atom. The result: failure. Theory and experiment did not agree. Eventually, however, he found that if he compromised and ignored the effects of relativity his theory agreed more closely with observations. He published this less ambitious version of his equation.

Thus fear triumphed again. “Schrödinger had been too timid,” Dirac said. Two other men, KLEIN and GORDON, rediscovered the more complete version of the theory and published it. Because they were “sufficiently bold” not to worry too much about experiment, the first relativistic wave equation now bears their names.

Yet the Klein-Gordon equation still produced mismatches with experiments when calculations were carried out carefully. It also had what seemed to Dirac a painful logical flaw. It implied that the probability of certain events must be negative, less than zero. Negative probabilities, Dirac said, “are of course quite absurd.”

It remained only for Dirac to invent—or was it “design” or “discover”?—a new equation for the electron. This was exceedingly beautiful in its formal simplicity and the sense of inevitability it conveyed, after the fact, to sensitive physicists. The equation was a triumph. It correctly predicted (and so, to a physicist, “explained”) the newly discovered quantity called spin, as well as the hydrogen spectrum. For the rest of his life Dirac’s equation remained his signal achievement. It was 1927. “That is the way in which quantum mechanics was started,” Dirac said.

These were the years of Knabenphysik, boy physics. When they began, Heisenberg was twenty-three and Dirac twenty-two. (Schrödinger was an elderly thirty-seven, but, as one chronicler noted, his discoveries came “during a late erotic outburst in his life.”) A new Knabenphysik began at MIT in the spring of 1936. Dick Feynman and T. A. Welton were hungry to make their way into quantum theory, but no course existed in this nascent science, so much more obscure even than relativity. With guidance from just a few texts they embarked on a program of self-study. Their collaboration began in one of the upstairs study rooms of the Bay State Road fraternity house and continued past the end of the spring term. Feynman returned home to Far Rockaway, Welton to Saratoga Springs. They filled a notebook, mailing it back and forth, and in a period of months they recapitulated nearly the full sweep of the 1925-27 revolution.

“Dear R. P. …” Welton wrote on July 23. “I notice you write your equation:

[equation not reproduced]

This was the relativistic Klein-Gordon equation. Feynman had rediscovered it, by correctly taking into account the tendency of matter to grow more massive at velocities approaching the speed of light—not just quantum mechanics, but relativistic quantum mechanics. Welton was excited. “Why don’t you apply your equation to a problem like the hydrogen atom, and see what results it gives?” Just as Schrödinger had done ten years before, they worked out the calculation and saw that it was wrong, at least when it came to making precise predictions.
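For reference, the Klein-Gordon equation in its standard modern form is given below. This is the textbook notation, not a reconstruction of what Feynman wrote in the notebook; $\psi$ is the wave function, $m$ the mass of the particle, $c$ the speed of light, and $\hbar$ Planck's constant divided by $2\pi$.

```latex
% Start from the relativistic relation between energy and momentum,
E^2 = p^2 c^2 + m^2 c^4 ,

% and make the usual quantum substitutions
% E -> i\hbar\,\partial/\partial t and p -> -i\hbar\,\nabla:
\left( \frac{1}{c^2}\frac{\partial^2}{\partial t^2} - \nabla^2
       + \frac{m^2 c^2}{\hbar^2} \right) \psi = 0
```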

“Here’s something, the problem of an electron in the gravitational field of a heavy particle. Of course the electron would contribute something to the field …”

“I wonder if the energy would be quantized? The more I think about the problem, the more interesting it sounds. I’m going to try it …

“… I’ll probably get an equation that I can’t solve anyway,” Welton added ruefully. (When Feynman got his turn at the notebook he scrawled in the margin, “Right!”) “That’s the trouble with quantum mechanics. It’s easy enough to set up equations for various problems, but it takes a mind twice as good as the differential analyzer to solve them.”

General relativity, barely two decades old, had merged gravity and space into a single object. Gravity was a curvature of space-time. Welton wanted more. Why not tie electromagnetism to space-time geometry as well? “Now you see what I mean when I say, I want to make electrical phenomena a result of the metric of a space in the same way that gravitational phenomena are. I wonder if your equation couldn’t be extended to Eddington’s affine geometry…” (In response Feynman scribbled: “I tried it. No luck yet.”)

Feynman also tried to invent an operator calculus, writing rules of differentiation and integration for quantities that did not commute. The rules would have to depend on the order of the quantities, themselves matrix representations of forces in space and time. “Now I think I’m wrong on account of those darn partial integrations,” Feynman wrote. “I oscillate between right and wrong.”

“Now I know I’m right … In my theory there are a lot more ‘fundamental’ invariants than in the other theory.”

And on they went. “Hot dog! after 3 wks of work … I have at last found a simple proof,” Feynman wrote. “It’s not important to write it, however. The only reason I wanted to do it was because I couldn’t do it and felt that there were some more relations between the An & their derivatives that I had not discovered … Maybe I’ll get electricity into the metric yet! Good night, I have to go to bed.”

The equations came fast, penciled across the notebook pages. Sometimes Feynman called them “laws.” As he worked to improve his techniques for calculating, he also kept asking himself what was fundamental and what was secondary, which were the essential laws and which were derivative. In the upside-down world of early quantum mechanics, it was far from obvious. Heisenberg and Schrödinger had taken starkly different routes to the same physics. Each in his way had embraced abstraction and renounced visualization. Even Schrödinger’s waves defied every conventional picture. They were not waves of substance or energy but of a kind of probability, rolling through a mathematical space. This space itself often resembled the space of classical physics, with coordinates specifying an electron’s position, but physicists found it more convenient to use momentum space (denoted by Pα), a coordinate system based on momentum rather than position—or based on the direction of a wavefront rather than any particular point on it. In quantum mechanics the uncertainty principle meant that position and momentum could no longer be specified simultaneously. Feynman in the August after his sophomore year began working with coordinate space (Qα)—less convenient for the wave point of view, but more directly visualizable.
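The two descriptions are tied together by a Fourier transform, which is one way to see why neither is more fundamental than the other. The block below gives the standard one-dimensional textbook relations, with $q$ for position and $p$ for momentum to echo the notebook's letters; the function names $\psi$ and $\varphi$ are assumptions of this sketch.

```latex
% The momentum-space wave function is the Fourier transform
% of the coordinate-space wave function:
\varphi(p) = \frac{1}{\sqrt{2\pi\hbar}}
             \int_{-\infty}^{\infty} \psi(q)\, e^{-ipq/\hbar}\, dq

% A wave sharply localized in q is spread widely in p, and vice versa:
\Delta q \,\Delta p \;\ge\; \frac{\hbar}{2}
```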

“Pα is no more fundamental than Qα nor vice versa—why then has Pα played such an important role in theory and why don’t I try Qα instead of Pα in certain generalizations of equations …” Indeed, he proved that the customary approach could be derived directly from the theory as cast in terms of momentum space.

In the background both boys were worrying about their health. Welton had an embarrassing and unexplained tendency to fall asleep in his chair, and during the summer break he was taking naps, mineral baths, and sunlamp treatments—doses of high ultraviolet radiation from a large mercury arc light. Feynman suffered something like nervous exhaustion as he finished his sophomore year. At first he was told he would have to stay in bed all summer. “I’d go nuts if it were me,” T. A. wrote in their notebook. “Anyhow, I hope you get to school all right in the fall. Remember, we’re going to be taught quantum mechanics by no less an authority than Prof. Morse himself. I’m really looking forward to that.” (“Me too,” Feynman wrote.)

They were desperately eager to be at the front edge of physics. They both started reading journals like the Physical Review. (Feynman made a mental note that a surprising number of articles seemed to be coming from Princeton.) Their hope was to catch up on the latest discoveries and to jump ahead. Welton would set to work on a development in wave tensor calculus; Feynman would tackle an esoteric application of tensors to electrical engineering, and only after wasting several months did they begin to realize that the journals made poor Baedekers. Much of the work was out of date by the time the journal article appeared. Much of it was mere translation of a routine result into an alternative jargon. News did sometimes break in the Physical Review, if belatedly, but the sophomores were ill equipped to pick it out of the mostly inconsequential background.

Morse had taught the second half of the theoretical physics course that brought Feynman and Welton together, and he had noticed these sophomores, with their penetrating questions about quantum mechanics. In the fall of 1937 they, along with an older student, met with Morse once a week and began to fit their own blind discoveries into the context of physics as physicists understood it. They finally read Dirac’s 1935 bible, The Principles of Quantum Mechanics. Morse put them to work calculating the properties of different atoms, using a method of his own devising. It computed energies by varying the parameters in equations known as hydrogenic radial functions—Feynman insisted on calling them hygienic functions—and it required more plain, plodding arithmetic than either boy had ever encountered. Fortunately they had calculators, a new kind that replaced the old hand cranks with electric motors. Not only could the calculators add, multiply, and subtract; they could divide, though it took time. They would enter numbers by turning metal dials. They would turn on the motor and watch the dials spin toward zero. A bell would ring. The chug-chug-ding-ding rang in their ears for hours.

In their spare time Feynman and Welton used the same machines to earn money through a Depression agency, the National Youth Administration, calculating the atomic lattices of crystals for a professor who wanted to publish reference tables. They worked out faster methods of running the calculator. And when they thought that they had their system perfected, they made another calculation: how long it would take to complete the job. The answer: seven years. They persuaded the professor to set the project aside.

Shop Men

MIT was still an engineering school, and an engineering school in the heyday of mechanical ingenuity. There seemed no limit to the power of lathes and cams, motors and magnets, though just a half-generation later the onset of electronic miniaturization would show that there had been limits after all. The school’s laboratories, technical classes, and machine shops gave undergraduates a playground like none other in the world. When Feynman took a laboratory course, the instructor was Harold Edgerton, an inventor and tinkerer who soon became famous for his high-speed photographs, made with a stroboscope, a burst of light slicing time more finely than any mechanical shutter could. Edgerton extended human sight into the realm of the very fast just as microscopes and telescopes were bringing into view the small and the large. In his MIT workshop he made pictures of bullets splitting apples and cards; of flying hummingbirds and splashing milk drops; of golf balls at the moment of impact, deformed to an ovoid shape that the eye had never witnessed. The stroboscope showed how much had been unseen. “All I’ve done is take God Almighty’s lighting and put it in a container,” he said. Edgerton and his colleagues gave body to the ideal of the scientist as a permanent child, finding ever more ingenious ways of taking the world apart to see what was inside.

That was an American technical education. In Germany a young would-be theorist could spend his days hiking around alpine lakes in small groups, playing chamber music and arguing philosophy with an earnest Magic Mountain volubility. Heisenberg, whose name would come to stand for the twentieth century’s most famous kind of uncertainty, grew enraptured as a young student with his own “utter certainty” that nature expressed a deep Platonic order. The strains of Bach’s D Minor Chaconne, the moonlit landscapes visible through the mists, the atom’s hidden structure in space and time—all seemed as one. Heisenberg had joined the youth movement that formed in Munich after the trauma of World War I, and the conversation roamed freely: Did the fate of Germany matter “more than that of all mankind”? Can human perception ever penetrate the atom deeply enough to see why a carbon atom bonds with two but never three oxygen atoms? Does youth have “the right to fashion life according to its own values”? For such students philosophy came first in physics. The search for meaning, the search for purpose, led naturally down into the world of atoms.

Students entering the laboratories and machine shops at MIT left the search for meaning outside. Boys tested their manhood there, learning to handle the lathes and talk with the muscular authority that seemed to emanate from the “shop men.” Feynman wanted to be a shop man but felt he was a faker among these experts, so easy with their tools and their working-class talk, their ties tucked in their belts to avoid catching in the chuck. When Feynman tried to machine metal it never came out quite right. His disks were not quite flat. His holes were too big. His wheels wobbled. Yet he understood these gadgets and he savored small triumphs. Once a machinist who had often teased him was struggling to center a heavy disk of brass in his lathe. He had it spinning against a position gauge, with a needle that jerked with each revolution of the off-kilter disk. The machinist could not see how to center the disk and stop the tick-tick-tick of the needle. He was trying to mark the point where the disk stuck out farthest by lowering a piece of chalk as slowly as he could toward the spinning edge. The lopsidedness was too subtle; it was impossible to hold the chalk steady enough to hit just the right spot. Feynman had an idea. He took the chalk and held it lightly above the disk, gently shaking his hand up and down in time with the rhythm of the shaking needle. The bulge of the disk was invisible, but the rhythm wasn’t. He had to ask the machinist which way the needle went when the bulge was up, but he got the timing just right. He watched the needle, said to himself, rhythm, and made his mark. With a tap of the machinist’s mallet on Feynman’s mark, the disk was centered.

The machinery of experimental physics was just beginning to move beyond the capabilities of a few men in a shop. In Rome, as the 1930s began, Enrico Fermi made his own tiny radiation counters from lipstick-size aluminum tubes at his institute above the Via Panisperna. He methodically brought one element after another into contact with free neutrons streaming from samples of radioactive radon. By his hands were created a succession of new radioactive isotopes, substances never seen in nature, some with half-lives so short that Fermi had to race his samples down the corridor to test them before they decayed to immeasurability. He found a nameless new element heavier than any found in nature. By hand he placed lead barriers across the neutron stream, and then, in a moment of mysterious inspiration, he tried a barrier of paraffin. Something in paraffin—hydrogen?—seemed to slow the neutrons. Unexpectedly, the slow neutrons had a far more powerful effect on some of the bombarded elements. Because the neutrons were electrically neutral, they floated transparently through the knots of electric charge around the target atoms. At speeds barely faster than a batted baseball they had more time to work nuclear havoc. As Fermi tried to understand this, it seemed to him that the essence of the process was a kind of diffusion, analogous to the slow invasion of the still air of a room by the scent of perfume. He imagined the path they must be taking through the paraffin, colliding one, two, three, a hundred times with atoms of hydrogen, losing energy with each collision, bouncing this way and that according to laws of probability.

The neutron, the chargeless particle in the atom’s core, had not even been discovered until 1932. Until then physicists supposed that the nucleus was a mixture of electrically negative and positive particles, electrons and protons. The evidence taken from ordinary chemical and electrical experiments shed little light on the nucleus. Physicists knew only that this core contained nearly all the atom’s mass and whatever positive charge was needed to balance the outer electrons. It was the electrons—floating or whirling in their shells, orbits, or clouds—that seemed to matter in chemistry. Only by bombarding substances with particles and measuring the particles’ deflection could scientists begin to penetrate the nucleus. They also began to split it. By the spring of 1938 not just dozens but hundreds of physics professors and students were at least glancingly aware of the ideas leading toward the creation of heavy new elements and the potential release of nuclear energies. MIT decided to offer a graduate seminar on the theory of nuclear structure, to be taught by Morse and a colleague.

Feynman and Welton, juniors, showed up in a room of excited-looking graduate students. When Morse saw them he demanded to know whether they were planning to register. Feynman was afraid they would be turned down, but when he said yes, Morse said he was relieved. Feynman and Welton brought the total enrollment to three. The other graduate students were willing only to audit the class. Like quantum mechanics, this was difficult new territory. No textbook existed. There was just one essential text for anyone studying nuclear physics in 1938: a series of three long articles in Reviews of Modern Physics by Hans Bethe, a young German physicist newly relocated to Cornell. In these papers Bethe effectively rebuilt this new discipline. He began with the basics of charge, weight, energy, size, and spin of the simplest nuclear particles. He moved on to the simplest compound nucleus, the deuteron, a single proton bound to a single neutron. He systematically worked his way toward the forces that were beginning to reveal themselves in the heaviest atoms known.

As he studied these most modern branches of physics, Feynman also looked for chances to explore more classical problems, problems he could visualize. He investigated the scattering of sunlight by clouds—scattering being a word that was taking a more and more central place in the vocabulary of physicists. Like so many scientific borrowings from plain English, the word came deceptively close to its ordinary meaning. Particles in the atmosphere scatter rays of light almost in the way a gardener scatters seeds or the ocean scatters driftwood. Before the quantum era a physicist could use the word without having to commit himself mentally either to a wave or a particle view of the phenomenon. Light simply dispersed as it passed through some medium and so lost some or all of its directional character. The scattering of waves implied a general diffusion, a randomizing of the original directionality. The sky is blue because the molecules of the atmosphere scatter the blue wavelengths more than the others; the blue seems to come from everywhere in the sky. The scattering of particles encouraged a more precise visualization: actual billiard-ball collisions and recoils. A single particle could scatter another. Indeed, the scattering of a very few particles would soon become the salient experiment of modern physics.

That clouds scattered sunlight was obvious. Close up, each wavering water droplet must shimmer with light both reflected and refracted, and the passage of the light from one drop to the next must be another kind of diffusion. A well-organized education in science fosters the illusion that when problems are easy to state and set up mathematically they are then easy to solve. For Feynman the cloud-scattering problem helped disperse the illusion. It seemed as primitive as any of hundreds of problems set out in his textbooks. It had the childlike quality that marks so many fundamental questions. It came just one step past the question of why we see clouds at all: water molecules scatter light perfectly well when they are floating as vapor, yet the light grows much whiter and more intense when the vapor condenses, because the molecules come so close together that their tiny electric fields can resonate in phase with one another to multiply the effect. Feynman tried to understand also what happened to the direction of the scattered light, and he discovered something that he could not believe at first. When the light emerges from the cloud again, caroming off billions of droplets, seemingly smeared to a ubiquitous gray, it actually retains some memory of its original direction. One foggy day he looked at a building far away across the river in Boston and saw its outline, faint but still sharp, diminished in contrast but not in focus. He thought: the mathematics worked after all.

Feynman of Course Is Jewish