Complexity: A Guided Tour - Melanie Mitchell (2009)

Part III. Computation Writ Large

Chapter 14. Prospects of Computer Modeling

BECAUSE COMPLEX SYSTEMS ARE TYPICALLY, as their name implies, hard to understand, the more mathematically oriented sciences such as physics, chemistry, and mathematical biology have traditionally concentrated on studying simple, idealized systems that are more tractable via mathematics. However, more recently, the existence of fast, inexpensive computers has made it possible to construct and experiment with models of systems that are too complex to be understood with mathematics alone. The pioneers of computer science—Alan Turing, John von Neumann, Norbert Wiener, and others—were all motivated by the desire to use computers to simulate systems that develop, think, learn, and evolve. In this fashion a new way of doing science was born. The traditional division of science into theory and experiment has been complemented by an additional category: computer simulation (figure 14.1). In this chapter I discuss what we can learn from computer models of complex systems and what the possible pitfalls are of using such models to do science.

What Is a Model?

A model, in the context of science, is a simplified representation of some “real” phenomenon. Scientists supposedly study nature, but in reality much of what they do is construct and study models of nature.

Think of Newton’s law of gravity: the force of gravity between two objects is proportional to the product of their masses divided by the square of the distance between them. This is a mathematical statement of the effects of a particular phenomenon—a mathematical model. Another kind of model describes how the phenomenon actually works in terms of simpler concepts—that is, what we call mechanisms. In Newton’s own time, his law of gravity was attacked because he did not give a mechanism for gravitational force. Literally, he did not show how it could be explained in terms of “size, shape, and motion” of parts of physical objects—the primitive elements that were, according to Descartes, necessary and sufficient components of all models in physics. Newton himself speculated on possible mechanisms of gravity; for example, he “pictured the Earth like a sponge, drinking up the constant stream of fine aethereal matter falling from the heavens, this stream by its impact on bodies above the Earth causing them to descend.” Such a conceptualization might be called a mechanistic model. Two hundred years later, Einstein proposed a different mechanistic model for gravity, general relativity, in which gravity is conceptualized as being caused by the effects of material bodies on the shape of four-dimensional space-time. At present, some physicists are touting string theory, which proposes that gravity is caused by minuscule, vibrating strings.

FIGURE 14.1. The traditional division of science into theory and experiment has been complemented by a new category: computer simulation. (Drawing by David Moser.)

Models are ways for our minds to make sense of observed phenomena in terms of concepts that are familiar to us, concepts that we can get our heads around (or in the case of string theory, that only a few very smart people can get their heads around). Models are also a means for predicting the future: for example, Newton’s law of gravity is still used to predict planetary orbits, and Einstein’s general relativity has been used to successfully predict deviations from those predicted orbits.

Idea Models

For applications such as weather forecasting, the design of automobiles and airplanes, or military operations, computers are often used to run detailed and complicated models that in turn make detailed predictions about the specific phenomena being modeled.

In contrast, a major thrust of complex systems research has been the exploration of idea models: relatively simple models meant to gain insights into a general concept without the necessity of making detailed predictions about any specific system. Here are some examples of idea models that I have discussed so far in this book:

· Maxwell’s demon: An idea model for exploring the concept of entropy.

· Turing machine: An idea model for formally defining “definite procedure” and for exploring the concept of computation.

· Logistic model and logistic map: Minimal models for predicting population growth; these were later turned into idea models for exploring concepts of dynamics and chaos in general.

· Von Neumann’s self-reproducing automaton: An idea model for exploring the “logic” of self-reproduction.

· Genetic algorithm: An idea model for exploring the concept of adaptation. Sometimes used as a minimal model of Darwinian evolution.

· Cellular automaton: An idea model for complex systems in general.

· Koch curve: An idea model for exploring fractal-like structures such as coastlines and snowflakes.

· Copycat: An idea model for human analogy-making.

Idea models are used for various purposes: to explore general mechanisms underlying some complicated phenomenon (e.g., von Neumann’s logic of self-reproduction); to show that a proposed mechanism for a phenomenon is plausible or implausible (e.g., dynamics of population growth); to explore the effects of variations on a simple model (e.g., investigating what happens when you change mutation rates in genetic algorithms or the value of the control parameter R in the logistic map); and more generally, to act as what the philosopher Daniel Dennett called “intuition pumps”—thought experiments or computer simulations used to prime one’s intuitions about complex phenomena.

Idea models in complex systems also have provided inspiration for new kinds of technology and computing methods. For example, Turing machines inspired programmable computers; von Neumann’s self-reproducing automaton inspired cellular automata; minimal models of Darwinian evolution, the immune system, and insect colonies inspired genetic algorithms, computer immune systems, and “swarm intelligence” methods, respectively.

To illustrate the accomplishments and prospects of idea models in science, I now delve into a few examples of particular idea models in the social sciences, starting with the best-known one of all: the Prisoner’s Dilemma.

Modeling the Evolution of Cooperation

Many biologists and social scientists have used idea models to explore what conditions can lead to the evolution of cooperation in a population of self-interested individuals.

Indeed, living organisms are selfish—their success in evolutionary terms requires living long enough, staying healthy enough, and being attractive enough to potential mates in order to produce offspring. Most living creatures are perfectly willing to fight, trick, kill, or otherwise harm other creatures in the pursuit of these goals. Common sense predicts that evolution will select selfishness and self-preservation as desirable traits that will be passed on through generations and will spread in any population.

In spite of this prediction, there are notable counterexamples to selfishness at all levels of the biological and social realms. Starting from the bottom, sometime in evolutionary history, groups of single-celled organisms cooperated in order to form more complex multicelled organisms. At some point later, social communities such as ant colonies evolved, in which the vast majority of ants not only work for the benefit of the whole colony, but also abnegate their ability to reproduce, allowing the queen ant to be the only source of offspring. Much later, more complex societies emerged in primate populations, involving communal solidarity against outsiders, complicated trading, and eventually human nations, governments, laws, and international treaties.

Biologists, sociologists, economists, and political scientists alike have faced the question of how such cooperation can arise among fundamentally selfish individuals. This is not only a question of science, but also of policy: e.g., is it possible to engender conditions that will allow cooperation to arise and persist among different nations in order to deal with international concerns such as the spread of nuclear weapons, the AIDS epidemic, and global warming?

FIGURE 14.2. Alice and Bob face a “Prisoner’s Dilemma.” (Drawing by David Moser.)

THE PRISONER’S DILEMMA

In the 1950s, at the height of the Cold War, many people were thinking about how to foster cooperation between enemy nations so as to prevent a nuclear war. Around 1950, two mathematical game theorists, Merrill Flood and Melvin Dresher, invented the Prisoner’s Dilemma as a tool for investigating such cooperation dilemmas.

The Prisoner’s Dilemma is often framed as follows. Two individuals (call them Alice and Bob) are arrested for committing a crime together and are put into separate rooms to be interrogated by police officers (figure 14.2). Alice and Bob are each separately offered the same deal in return for testifying against the other. If Alice agrees to testify against Bob, then she will go free and Bob will receive a sentence of life in prison. However, if Alice refuses to testify but Bob agrees to testify, he will go free and she will receive the life sentence. If they both testify against the other, they each will go to prison, but for a reduced sentence of ten years. Both Alice and Bob know that if neither testifies against the other they can be convicted only on a lesser charge for which they will go to jail for five years. The police demand a decision from each of them on the spot, and of course don’t allow any communication between them.

If you were Alice, what would you do?

You might reason it out this way: Bob is either going to testify against you or keep quiet, and you don’t know which. Suppose he plans to testify against you. Then you would come out best by testifying against him (ten years vs. life in prison). Now suppose he plans to keep quiet. Again your best choice is to testify (go free vs. five years in prison). Thus, regardless of what Bob does, your best bet for saving your own skin is to agree to testify.

The problem is that Bob is following the exact same line of reasoning. So the result is, you both agree to testify against the other, giving each of you a worse outcome than if you had both kept quiet.
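Alice's line of reasoning can be checked mechanically. The following is a minimal sketch in Python, using the sentences from the story above; the names `YEARS`, `LIFE`, and `best_reply_for_alice` are my own, and treating a life sentence as infinitely many years is an assumption made only so that sentences can be compared.

```python
import math

LIFE = math.inf  # stand-in for a life sentence (an assumption, used only for comparison)

# YEARS[(alice_move, bob_move)] = (Alice's years in prison, Bob's years in prison)
YEARS = {
    ("quiet", "quiet"): (5, 5),
    ("quiet", "testify"): (LIFE, 0),
    ("testify", "quiet"): (0, LIFE),
    ("testify", "testify"): (10, 10),
}

def best_reply_for_alice(bob_move):
    """Return the move that minimizes Alice's sentence, given Bob's move."""
    return min(("quiet", "testify"), key=lambda alice_move: YEARS[(alice_move, bob_move)][0])

for bob_move in ("quiet", "testify"):
    print("If Bob chooses", bob_move, "Alice does best to", best_reply_for_alice(bob_move))

# Yet the outcome both reach by this reasoning is worse for each than mutual silence.
print(YEARS[("testify", "testify")], "versus", YEARS[("quiet", "quiet")])
```

Whatever Bob does, the function returns "testify", which is exactly why the dilemma arises.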

Let me tell this story in a slightly different context. Imagine you are the U.S. president. You are considering a proposal to build a powerful nuclear weapon, much more powerful than any that you currently have in your stockpile. You suspect, but don’t know for sure, that the Russian government is considering the same thing.

Look into the future and suppose the Russians indeed end up building such a weapon. If you also had decided to build the weapon, then the United States and Russia would remain equal in fire power, albeit at significant cost to each nation and making the world a more dangerous place. If you had decided not to build the weapon, then Russia would have a military edge over the United States.

Now suppose Russia does not build the weapon in question. Then if you had decided to build it, the United States would have a military edge over Russia, though at some cost to the nation, and if you had decided not to build, the United States and Russia would remain equal in weaponry.

Just as we saw for Bob and Alice, regardless of what Russia is going to do, the rational thing is for you to approve the proposal, since in each case building the weapon turns out to be the better choice for the United States. Of course the Russians are thinking along similar lines, so both nations end up building the new bomb, producing a worse outcome for both than if neither had built it.

This is the paradox of the Prisoner’s Dilemma—in the words of political scientist Robert Axelrod, “The pursuit of self-interest by each leads to a poor outcome for all.” This paradox also applies to the all too familiar case of a group of individuals who, by selfishly pursuing their own interests, collectively bring harm to all members of the group (global warming is a quintessential example). The ecologist Garrett Hardin has famously called such scenarios “the tragedy of the commons.”

The Prisoner’s Dilemma and variants of it have long been studied as idea models that embody the essence of the cooperation problem, and results from those studies have influenced how scholars, businesspeople, and governments think about real-world policies ranging from weapons control and responses to terrorism to corporate management and regulation.

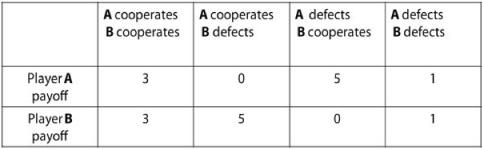

The Dilemma is typically formulated in terms of a two-person “game” defined by what mathematical game theorists call a payoff matrix—an array of all possible outcomes for two players. One possible payoff matrix for the Prisoner’s Dilemma is given in figure 14.3. Here, the goal is to get as many points (as opposed to as few years in prison) as possible. A turn consists of each player independently making a “cooperate or defect” decision. That is, on each turn, players A and B independently, without communicating, decide whether to cooperate with the other player (e.g., refuse to testify; decide not to build the bomb) or to defect from the other player (e.g., testify; build the bomb). If both players cooperate, each receives 3 points. If player A cooperates and player B defects, then player A receives zero points and player B gets 5 points, and vice versa if the situation is reversed. If both players defect, each receives 1 point. As I described above, if the game lasts for only one turn, the rational choice for both is to defect. However, if the game is repeated, that is, if the two players play several turns in a row, both players’ always defecting will lead to a much lower total payoff than the players would receive if they learned to cooperate. How can reciprocal cooperation be induced?

FIGURE 14.3. A payoff matrix for the Prisoner’s Dilemma game.
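The point values described in the text can be written down as a small lookup table. This is only a sketch: the names `PAYOFF` and `total_points` and the ten-turn game length are illustrative choices of mine, not part of the original formulation.

```python
# PAYOFF[(a_move, b_move)] = (points for A, points for B); "C" = cooperate, "D" = defect
PAYOFF = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}

def total_points(a_moves, b_moves):
    """Sum each player's points over a sequence of turns."""
    a_total = b_total = 0
    for a_move, b_move in zip(a_moves, b_moves):
        a_points, b_points = PAYOFF[(a_move, b_move)]
        a_total += a_points
        b_total += b_points
    return a_total, b_total

# In a single turn, defecting pays A more than cooperating, whatever B does.
for b_move in ("C", "D"):
    assert PAYOFF[("D", b_move)][0] > PAYOFF[("C", b_move)][0]

turns = 10  # hypothetical game length
print(total_points("D" * turns, "D" * turns))  # mutual defection   -> (10, 10)
print(total_points("C" * turns, "C" * turns))  # mutual cooperation -> (30, 30)
```

Over ten turns, constant mutual defection earns each player 10 points, while constant mutual cooperation earns each 30.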

Robert Axelrod, of the University of Michigan, is a political scientist who has extensively studied and written about the Prisoner’s Dilemma. His work on the Dilemma has been highly influential in many different disciplines, and has earned him several prestigious research prizes, including a MacArthur Foundation “genius” award.

Axelrod began studying the Dilemma during the Cold War as a result of his own concern over escalating arms races. His question was, “Under what conditions will cooperation emerge in a world of egoists without central authority?” Axelrod noted that the most famous historical answer to this question was given by the seventeenth-century philosopher Thomas Hobbes, who concluded that cooperation could develop only under the aegis of a central authority. Three hundred years (and countless wars) later, Albert Einstein similarly proposed that the only way to ensure peace in the nuclear age was to form an effective world government. The League of Nations, and later, the United Nations, were formed expressly for this purpose, but neither has been very successful in either establishing a world government or instilling peace between and within nations.

Robert Axelrod. (Photograph courtesy of the Center for the Study of Complex Systems, University of Michigan.)

Since an effective central government seems out of reach, Axelrod wondered if and how cooperation could come about without one. He believed that exploring strategies for playing the simple repeated Prisoner’s Dilemma game could give insight into this question. For Axelrod, “cooperation coming about” meant that cooperative strategies must, over time, receive higher total payoff than noncooperative ones, even in the face of any changes opponents make to their strategies as the repeated game is being played. Furthermore, if the players’ strategies are evolving under Darwinian selection, the fraction of cooperative strategies in the population should increase over time.

COMPUTER SIMULATIONS OF THE PRISONER’S DILEMMA

Axelrod’s interest in determining what makes for a good strategy led him to organize two Prisoner’s Dilemma tournaments. He asked researchers in several disciplines to submit computer programs that implemented particular strategies for playing the Prisoner’s Dilemma, and then had the programs play repeated games against one another.

Recall from my discussion of Robby the Robot in chapter 9 that a strategy is a set of rules that gives, for any situation, the action one should take in that situation. For the Prisoner’s Dilemma, a strategy consists of a rule for deciding whether to cooperate or defect on the next turn, depending on the opponent’s behavior on previous turns.

The first tournament received fourteen submitted programs; the second tournament jumped to sixty-three programs. Each program played with every other program for 200 turns, collecting points according to the payoff matrix in figure 14.3. The programs had some memory—each program could store the results of at least some of its previous turns against each opponent. Some of the strategies submitted were rather complicated, using statistical techniques to characterize other players’ “psychology.” However, in both tournaments the winner (the strategy with the highest average score over games with all other players) was the simplest of the submitted strategies: TIT FOR TAT. This strategy, submitted by mathematician Anatol Rapoport, cooperates on the first turn and then, on subsequent turns, does whatever the opponent did for its move on the previous turn. That is, TIT FOR TAT offers cooperation and reciprocates it. But if the other player defects, TIT FOR TAT punishes that defection with a defection of its own, and continues the punishment until the other player begins cooperating again.
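To make the tournament setup concrete, here is a minimal sketch of a 200-turn repeated game and a round-robin scorer. It is not Axelrod's code: the function names, the representation of a strategy as a function of the two move histories, and the two-entrant field are all assumptions of mine. With such a tiny hand-picked field, the ranking need not resemble the tournament results.

```python
from itertools import combinations

# Points for (first player, second player), as in figure 14.3
PAYOFF = {("C", "C"): (3, 3), ("C", "D"): (0, 5), ("D", "C"): (5, 0), ("D", "D"): (1, 1)}

def tit_for_tat(own_history, opp_history):
    """Cooperate on the first turn, then copy the opponent's previous move."""
    return "C" if not opp_history else opp_history[-1]

def always_defect(own_history, opp_history):
    """A hypothetical entrant that never cooperates."""
    return "D"

def play(strategy_a, strategy_b, turns=200):
    """Play one repeated game and return the two total scores."""
    hist_a, hist_b = [], []
    score_a = score_b = 0
    for _ in range(turns):
        move_a = strategy_a(hist_a, hist_b)
        move_b = strategy_b(hist_b, hist_a)
        points_a, points_b = PAYOFF[(move_a, move_b)]
        score_a += points_a
        score_b += points_b
        hist_a.append(move_a)
        hist_b.append(move_b)
    return score_a, score_b

def round_robin(entrants, turns=200):
    """Each entrant plays every other entrant once; return average score per game."""
    totals = {name: 0 for name in entrants}
    for (name_a, strat_a), (name_b, strat_b) in combinations(entrants.items(), 2):
        score_a, score_b = play(strat_a, strat_b, turns)
        totals[name_a] += score_a
        totals[name_b] += score_b
    return {name: total / (len(entrants) - 1) for name, total in totals.items()}

print(play(tit_for_tat, tit_for_tat))    # -> (600, 600)
print(play(tit_for_tat, always_defect))  # -> (199, 204)
print(round_robin({"TIT FOR TAT": tit_for_tat, "ALWAYS DEFECT": always_defect}))
```

Against a constant defector, TIT FOR TAT loses only the first turn and then matches defection with defection; against a copy of itself it cooperates throughout.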

It is surprising that such a simple strategy could beat out all others, especially in light of the fact that the entrants in the second tournament already knew about TIT FOR TAT and so could plan against it. However, out of the dozens of experts who participated, no one was able to design a better strategy.

Axelrod drew some general conclusions from the results of these tournaments. He noted that all of the top scoring strategies have the attribute of being nice—that is, they are never the first one to defect. The lowest scoring of all the nice programs was the “least forgiving” one: it starts out by cooperating but if its opponent defects even once, it defects against that opponent on every turn from then on. This contrasts with TIT FOR TAT, which will punish an opponent’s defection with a defection of its own, but will forgive that opponent by cooperating once the opponent starts to cooperate again.

Axelrod also noted that although the most successful strategies were nice and forgiving, they also were retaliatory—they punished defection soon after it occurred. TIT FOR TAT not only was nice, forgiving, and retaliatory, but it also had another important feature: clarity and predictability. An opponent can easily see what TIT FOR TAT’s strategy is and thus predict how it would respond to any of the opponent’s actions. Such predictability is important for fostering cooperation.
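The difference between being forgiving and being unforgiving shows up clearly in the move sequences themselves. The sketch below compares TIT FOR TAT with the "least forgiving" nice strategy against a fixed opponent script containing a single defection. The script, the function names, and the simplification that the opponent's moves are fixed in advance are assumptions made for illustration.

```python
def tit_for_tat(opp_history):
    """Cooperate first, then copy the opponent's previous move."""
    return "C" if not opp_history else opp_history[-1]

def least_forgiving(opp_history):
    """Cooperate until the opponent defects once, then defect forever."""
    return "D" if "D" in opp_history else "C"

def responses(strategy, opponent_script):
    """Moves the strategy makes, turn by turn, against a fixed opponent script."""
    return "".join(strategy(opponent_script[:t]) for t in range(len(opponent_script)))

def defects_first(strategy, opponent_script):
    """True if the strategy ever defects before the opponent has defected."""
    moves = responses(strategy, opponent_script)
    return any(m == "D" and "D" not in opponent_script[:t] for t, m in enumerate(moves))

script = "CCDCCC"  # hypothetical opponent: one defection on the third turn
print(responses(tit_for_tat, script))      # -> CCCDCC (retaliates once, then forgives)
print(responses(least_forgiving, script))  # -> CCCDDD (never forgives)
print(defects_first(tit_for_tat, script), defects_first(least_forgiving, script))  # -> False False
```

Both strategies are nice and retaliatory on this script; only TIT FOR TAT returns to cooperation.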

Interestingly, Axelrod followed up his tournaments by a set of experiments in which he used a genetic algorithm to evolve strategies for the Prisoner’s Dilemma. The fitness of an evolving strategy is its score after playing many repeated games with the other evolving strategies in the population. The genetic algorithm evolved strategies with the same or similar behavior as TIT FOR TAT.

EXTENSIONS OF THE PRISONER’S DILEMMA

Axelrod’s studies of the Prisoner’s Dilemma made a big splash starting in the 1980s, particularly in the social sciences. People have studied all kinds of variations on the game—different payoff matrices, different numbers of players, multiplayer games in which players can decide whom to play with, and so on. Two of the most interesting variations experimented with adding social norms and spatial structure, respectively.

Adding Social Norms

Axelrod experimented with adding norms to the Prisoner’s Dilemma, where norms correspond to social censure (in the form of negative points) for defecting when others catch the defector in the act. In Axelrod’s multiplayer game, every time a player defects, there is some probability that some other players will witness that defection. In addition to a strategy for playing a version of the Prisoner’s Dilemma, each player also has a strategy for deciding whether to punish (subtract points from) a defector if the punisher witnesses the defection.

In particular, each player’s strategies consist of two numbers: a probability of defecting (boldness) and a probability of punishing a defection that the player witnesses (vengefulness). In the initial population of players, these probability values are assigned at random to each individual.

At each generation, the population plays a round of the game: each player in the population plays a single game against all other players, and each time a player defects, there is some probability that the defection is witnessed by other population members. Each witness will punish the defector with a probability defined by the witness’s vengefulness value.

At the end of each round, an evolutionary process takes place: a new population of players is created from parent strategies that are selected based on fitness (number of points earned). The parents create offspring that are mutated copies of themselves: each child can have slightly different boldness and vengefulness numbers than its parent. If the population starts out with most players’ vengefulness set to zero (i.e., no social norms), then defectors will come to dominate the population. Axelrod initially expected to find that norms would facilitate the evolution of cooperation in the population—that is, vengefulness would evolve to counter boldness.
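The structure of the norms model described above can be sketched in a few dozen lines. This is a rough illustration rather than a reconstruction of Axelrod's program: the point values, the witnessing probability, the mutation step, and the rule that the top-scoring half of the population become parents are all placeholder assumptions, and every name in the code is hypothetical.

```python
import random

# Illustrative constants (assumptions, not taken from the text)
TEMPTATION = 3      # points gained by a defector
HURT = -1           # points lost by every other player when someone defects
PUNISHMENT = -9     # points lost by a defector each time it is punished
ENFORCEMENT = -2    # cost paid by a player who punishes
WITNESS_PROB = 0.5  # chance that a given player witnesses a defection

def play_round(players, rng):
    """players is a list of (boldness, vengefulness) pairs. Returns one score per player."""
    scores = [0.0] * len(players)
    for i, (boldness, _) in enumerate(players):
        if rng.random() >= boldness:
            continue  # player i cooperates this round
        scores[i] += TEMPTATION
        for j, (_, vengefulness) in enumerate(players):
            if j == i:
                continue
            scores[j] += HURT
            witnessed = rng.random() < WITNESS_PROB
            if witnessed and rng.random() < vengefulness:
                scores[i] += PUNISHMENT
                scores[j] += ENFORCEMENT
    return scores

def mutate(value, rng, step=0.05):
    """Nudge a probability slightly, keeping it between 0 and 1."""
    return min(1.0, max(0.0, value + rng.uniform(-step, step)))

def next_generation(players, scores, rng):
    """The top-scoring half become parents; children are mutated copies."""
    ranked = sorted(range(len(players)), key=lambda i: scores[i], reverse=True)
    parents = [players[i] for i in ranked[: (len(players) + 1) // 2]]
    children = []
    while len(children) < len(players):
        for boldness, vengefulness in parents:
            children.append((mutate(boldness, rng), mutate(vengefulness, rng)))
    return children[: len(players)]

rng = random.Random(0)  # fixed seed so that runs are repeatable
players = [(rng.random(), rng.random()) for _ in range(20)]
for _ in range(50):
    scores = play_round(players, rng)
    players = next_generation(players, scores, rng)
print("mean boldness:", round(sum(b for b, _ in players) / len(players), 2))
print("mean vengefulness:", round(sum(v for _, v in players) / len(players), 2))
```

What such a run shows will depend on the placeholder values chosen, so its output should be read as an exploration of the mechanism, not as a reproduction of any published result.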

However, it turned out that norms alone were not enough for cooperation to emerge reliably. In a second experiment, Axelrod added metanorms, in which there were punishers to punish the nonpunishers, if you know what I mean. Sort of like people in the supermarket who give me disapproving looks when I don’t discipline my children for chasing each other up the aisles and colliding with innocent shoppers. In my case the metanorm usually works. Axelrod also found that metanorms did the trick—if punishers of nonpunishers were around, the nonpunishers evolved to be more likely to punish, and the punished defectors evolved to be more likely to cooperate. In Axelrod’s words, “Meta-norms can promote and sustain cooperation in a population.”

Adding Spatial Structure

The second extension that I find particularly interesting is the work done by mathematical biologist Martin Nowak and collaborators on adding spatial structure to the Prisoner’s Dilemma. In Axelrod’s original simulations, there was no notion of space—it was equally likely for any player to encounter any other player, with no sense of distance between players.

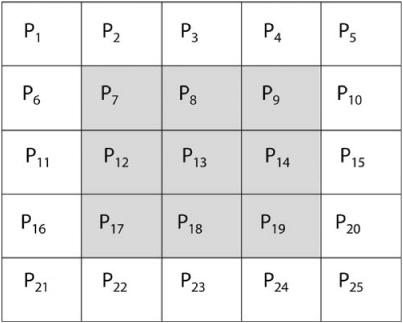

Nowak suspected that placing players on a spatial lattice, on which the notion of neighbor is well defined, would have a strong effect on the evolution of cooperation. With his postdoctoral mentor Robert May (whom I mentioned in chapter 2 in the context of the logistic map), Nowak performed computer simulations in which the players were placed in a two-dimensional array, and each player played only with its nearest neighbors. This is illustrated in figure 14.4, which shows a five by five grid with one player at each site (Nowak and May’s arrays were considerably larger). Each player has the simplest of strategies—it has no memory of previous turns; it either always cooperates or always defects.

The model runs in discrete time steps. At each time step, each player plays a single Prisoner’s Dilemma game against each of its eight nearest neighbors (like a cellular automaton, the grid wraps around at the edges) and its eight resulting scores are summed. This is followed by a selection step in which each player is replaced by the highest scoring player in its neighborhood (possibly itself); no mutation is done.
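One time step of this spatial model might be sketched as follows. The payoff values are borrowed from figure 14.3 purely as an assumption (Nowak and May varied them), ties are broken in favor of the current occupant (also an assumption), and all names are hypothetical.

```python
# Points earned by the first player of each pair (values assumed from figure 14.3)
PAYOFF = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}

NEIGHBOR_OFFSETS = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]

def neighbors(r, c, rows, cols):
    """The eight nearest neighbors of site (r, c); the grid wraps around at the edges."""
    return [((r + dr) % rows, (c + dc) % cols) for dr, dc in NEIGHBOR_OFFSETS]

def step(grid):
    """One synchronous time step: every site plays its neighbors, then selection occurs."""
    rows, cols = len(grid), len(grid[0])
    scores = [
        [sum(PAYOFF[(grid[r][c], grid[nr][nc])] for nr, nc in neighbors(r, c, rows, cols))
         for c in range(cols)]
        for r in range(rows)
    ]
    new_grid = []
    for r in range(rows):
        new_row = []
        for c in range(cols):
            best_r, best_c = r, c  # ties keep the current occupant (an assumption)
            for nr, nc in neighbors(r, c, rows, cols):
                if scores[nr][nc] > scores[best_r][best_c]:
                    best_r, best_c = nr, nc
            new_row.append(grid[best_r][best_c])
        new_grid.append(new_row)
    return new_grid

# A five by five grid of cooperators with a single defector in the middle
grid = [["C"] * 5 for _ in range(5)]
grid[2][2] = "D"
for row in step(grid):
    print("".join(row))
```

With these particular payoffs, the lone defector outscores its neighbors and takes over the three by three block around it after one step, while the outer ring of cooperators is untouched.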

The motivation for this work was biological. As stated by Nowak and May, “We believe that deterministically generated spatial structure within populations may often be crucial for the evolution of cooperation, whether it be among molecules, cells, or organisms.”

Nowak and May experimented by running this model with different initial configurations of cooperate and defect players and by varying the values in the payoff matrix. They found that depending on these settings, the spatial patterns of cooperate and defect players can either oscillate or be “chaotically changing,” in which both cooperators and defectors coexist indefinitely. These results contrast with results from the nonspatial multiplayer Prisoner’s Dilemma, in which, in the absence of metanorms as discussed above, defectors take over the population. In Nowak and May’s spatial case, cooperators can persist indefinitely without any special additions to the game, such as norms or metanorms.

FIGURE 14.4. Illustration of a spatial Prisoner’s Dilemma game. Each player interacts only with its nearest neighbors—e.g., player P13 plays against, and competes for selection against, only the players in its neighborhood (shaded).

Nowak and May believed that their result illustrated a feature of the real world—i.e., the existence of spatial neighborhoods fosters cooperation. In a commentary on this work, biologist Karl Sigmund put it this way: “That territoriality favours cooperation… is likely to remain valid for real-life communities.”

Prospects of Modeling

Computer simulations of idea models such as the Prisoner’s Dilemma, when done well, can be a powerful addition to experimental science and mathematical theory. Such models are sometimes the only available means of investigating complex systems when actual experiments are not feasible and when the math gets too hard, which is the case for almost all of the systems we are most interested in. The most significant contribution of idea models such as the Prisoner’s Dilemma is to provide a first hand-hold on a phenomenon—such as the evolution of cooperation—for which we don’t yet have precise scientific terminology and well-defined concepts.

The Prisoner’s Dilemma models play all the roles I listed above for idea models in science (and analogous contributions could be listed from many other complex-systems modeling efforts as well):

· Show that a proposed mechanism for a phenomenon is plausible or implausible. For example, the various Prisoner’s Dilemma and related models have shown what Thomas Hobbes might not have believed: that it is indeed possible for cooperation—albeit in an idealized form—to come about in leaderless populations of self-interested (but adaptive) individuals.

· Explore the effects of variations on a simple model and prime one’s intuitions about a complex phenomenon. The endless list of Prisoner’s Dilemma variations that people have studied has revealed much about the conditions under which cooperation can and cannot arise. You might ask, for example, what happens if, on occasion, people who want to cooperate make a mistake that accidentally signals noncooperation—an unfortunate mistranslation into Russian of a U.S. president’s comments, for instance? The Prisoner’s Dilemma gives an arena in which the effects of miscommunications can be explored. John Holland has likened such models to “flight simulators” for testing one’s ideas and for improving one’s intuitions.

· Inspire new technologies. Results from the Prisoner’s Dilemma modeling literature—namely, the conditions needed for cooperation to arise and persist—have been used in proposals for improving peer-to-peer networks and preventing fraud in electronic commerce, to name but two applications.

· Lead to mathematical theories. Several people have used the results from Prisoner’s Dilemma computer simulations to formulate general mathematical theories about the conditions needed for cooperation. A recent example is work by Martin Nowak, in a paper called “Five Rules for the Evolution of Cooperation.”

How should results from idea models such as the Prisoner’s Dilemma be used to inform policy decisions, such as the foreign relations strategies of governments or responses to global warming? The potential of idea models in predicting the results of different policies makes such models attractive, and, indeed, the influence of the Prisoner’s Dilemma and related models among policy analysts has been considerable.

As one example, New Energy Finance, a consulting firm specializing in solutions for global warming, recently put out a report called “How to Save the Planet: Be Nice, Retaliatory, Forgiving, and Clear.” The report argues that the problem of responding to climate change is best seen as a multi-player repeated Prisoner’s Dilemma in which countries can either cooperate (mitigate carbon output at some cost to their economies) or defect (do nothing, saving money in the short term). The game is repeated year after year as new agreements and treaties regulating carbon emissions are forged. The report recommends specific policies that countries and global organizations should adopt in order to implement the “nice, retaliatory, forgiving, and clear” characteristics Axelrod cited as requirements for success in the repeated Prisoner’s Dilemma.

Similarly, the results of the norms and metanorms models—namely, that not only norms but also metanorms can be important for sustaining cooperation—have had an impact on policy-making research regarding government response to terrorism, arms control, and environmental governance policies, among other areas. The results of Nowak and May’s spatial Prisoner’s Dilemma models have informed people’s thinking about the role of space and locality in fostering cooperation in areas ranging from the maintenance of biodiversity to the effectiveness of bacteria in producing new antibiotics. (See the notes for details on these various impacts.)

Computer Modeling Caveats

All models are wrong, but some are useful.

—George Box and Norman Draper

Indeed, the models I described above are highly simplified but have been useful for advancing science and policy in many contexts. They have led to new insights, new ways of thinking about complex systems, better models, and better understanding of how to build useful models. However, some very ambitious claims have been made about the models’ results and how they apply in the real world. Therefore, the right thing for scientists to do is to carefully scrutinize the models and ask how general their results actually are. The best way to do that is to try to replicate those results.

In an experimental science such as astronomy or chemistry, every important experiment is replicated, meaning that a different group of scientists does the same experiment from scratch to see whether they get the same results as the original group. No experimental result is (or should be) believed if no other group can replicate it in this way. The inability of others to replicate results has been the death knell for uncountable scientific claims.

Computer models also need to be replicated—that is, independent groups need to construct the proposed computer model from scratch and see whether it produces the same results as those originally reported. Axelrod, an outspoken advocate of this idea, writes: “Replication is one of the hallmarks of cumulative science. It is needed to confirm whether the claimed results of a given simulation are reliable in the sense that they can be reproduced by someone starting from scratch. Without this confirmation, it is possible that some published results are simply mistaken due to programming errors, misrepresentation of what was actually simulated, or errors in analyzing or reporting the results. Replication can also be useful for testing the robustness of inferences from models.”

Fortunately, many researchers have taken this advice to heart and have attempted to replicate some of the more famous Prisoner’s Dilemma simulations. Several interesting and sometimes unexpected results have come out of these attempts.

In 1995, Bernardo Huberman and Natalie Glance re-implemented Nowak and May’s spatial Prisoner’s Dilemma model. Huberman and Glance ran a simulation with only one change. In the original model, at each time step all games between players in the lattice were played simultaneously, followed by the simultaneous selection in all neighborhoods of the fittest neighborhood player. (This required Nowak and May to simulate parallelism on their nonparallel computer.) Huberman and Glance instead allowed some of the games to be played asynchronously: that is, some group of neighboring players would play games and carry out selection, then another group of neighboring players would do the same, and so on. They found that this simple change, arguably making the model more realistic, would typically result in complete replacement of cooperators by defectors over the entire lattice. A similar result was obtained independently by Arijit Mukherji, Vijay Rajan, and James Slagle, who in addition showed that cooperation would die out in the presence of small errors or cheating (e.g., a cooperator accidentally or purposefully defecting). Nowak, May, and their collaborator Sebastian Bonhoeffer replied that these changes did indeed lead to the extinction of all cooperators for some payoff-matrix values, but for others, cooperators were able to stay in the population, at least for long periods.
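To make the difference between the two update schemes concrete, here is a minimal Python sketch. It is not Nowak and May’s or Huberman and Glance’s actual code. It assumes a square lattice with wraparound edges, a neighborhood of the eight surrounding cells plus the player itself, and a simplified payoff in which a cooperator meeting a cooperator earns 1, a defector meeting a cooperator earns b, and every other game earns 0. The function names, the tie-breaking rule, and the choice to update one randomly ordered cell at a time in the asynchronous version are all illustrative assumptions.

```python
import random

def neighborhood(i, j, n):
    """Cells in the 3x3 block around (i, j) on an n x n torus, including (i, j)."""
    return [((i + di) % n, (j + dj) % n)
            for di in (-1, 0, 1) for dj in (-1, 0, 1)]

def score(grid, i, j, b):
    """Total payoff of the player at (i, j) against its neighborhood.

    Assumed payoffs: C against C earns 1, D against C earns b,
    and any game against a defector earns 0. 1 = cooperator, 0 = defector.
    """
    n = len(grid)
    cooperators = sum(grid[x][y] for x, y in neighborhood(i, j, n))
    return cooperators if grid[i][j] == 1 else b * cooperators

def imitate_best(grid, i, j, b):
    """Strategy of the highest-scoring player in the neighborhood of (i, j).

    Ties go to the first cell in scan order (an arbitrary choice made here).
    """
    n = len(grid)
    x, y = max(neighborhood(i, j, n), key=lambda c: score(grid, c[0], c[1], b))
    return grid[x][y]

def sync_step(grid, b):
    """Synchronous update: every cell chooses based on the same old lattice."""
    n = len(grid)
    return [[imitate_best(grid, i, j, b) for j in range(n)] for i in range(n)]

def async_step(grid, b, rng):
    """Asynchronous update: cells are visited one at a time in random order,
    and each change is visible to the cells visited after it."""
    n = len(grid)
    new = [row[:] for row in grid]  # leave the caller's lattice untouched
    cells = [(i, j) for i in range(n) for j in range(n)]
    rng.shuffle(cells)
    for i, j in cells:
        new[i][j] = imitate_best(new, i, j, b)
    return new

def cooperator_fraction(grid):
    """Fraction of lattice sites currently occupied by cooperators."""
    n = len(grid)
    return sum(map(sum, grid)) / (n * n)

if __name__ == "__main__":
    rng = random.Random(0)
    n, b = 20, 1.9  # lattice size and temptation payoff, chosen arbitrarily
    start = [[1 if rng.random() < 0.9 else 0 for _ in range(n)] for _ in range(n)]
    sync_grid, async_grid = start, start
    for _ in range(50):
        sync_grid = sync_step(sync_grid, b)
        async_grid = async_step(async_grid, b, rng)
    print("synchronous :", cooperator_fraction(sync_grid))
    print("asynchronous:", cooperator_fraction(async_grid))
```

The two step functions share the same game and the same selection rule; only the timing of the updates differs. That makes this the kind of small, isolated change a replication study can use to test how much a result depends on a modeling detail.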

In 2005 Jose Manuel Galan and Luis Izquierdo published results of their re-implementation of Axelrod’s Norms and Metanorms models. Given the increase in computer power over the twenty years that had passed since Axelrod’s work, they were able to run the simulation for a much longer period and do a more thorough investigation of the effects of varying certain model details, such as the payoff matrix values, the probabilities for mutating offspring, and so on. Their results matched well with Axelrod’s for some aspects of the simulation, but for others, the re-implementation produced quite different results. For example, they found that whereas metanorms can facilitate the evolution and persistence of cooperation in the short term, if the simulation is run for a long time, defectors end up taking over the population. They also found that the results were quite sensitive to the details of the model, such as the specific payoff values used.

What should we make of all this? I think the message is exactly as Box and Draper put it in the quotation I gave above: all models are wrong in some way, but some are very useful for beginning to address highly complex systems. Independent replication can uncover the hidden unrealistic assumptions and sensitivity to parameters that are part of any idealized model. And of course the replications themselves should be replicated, and so on, as is done in experimental science. Finally, modelers need above all to emphasize the limitations of their models, so that the results of such models are not misinterpreted, taken too literally, or hyped too much. I have used examples of models related to the Prisoner’s Dilemma to illustrate all these points, but my previous discussion could be equally applied to nearly all other simplified models of complex systems.

I will give the last word to physicist (and ahead-of-his-time model-building proponent) Philip Anderson, from his 1977 Nobel Prize acceptance speech:

The art of model-building is the exclusion of real but irrelevant parts of the problem, and entails hazards for the builder and the reader. The builder may leave out something genuinely relevant; the reader, armed with too sophisticated an experimental probe or too accurate a computation, may take literally a schematized model whose main aim is to be a demonstration of possibility.