Chaos: Making a New Science - James Gleick (1988)

Universality

The iterating of these lines brings gold;

The framing of this circle on the ground

Brings whirlwinds, tempests, thunder and lightning.

—MARLOWE, Dr. Faustus

A FEW DOZEN YARDS upstream from a waterfall, a smooth flowing stream seems to intuit the coming drop. The water begins to speed and shudder. Individual rivulets stand out like coarse, throbbing veins. Mitchell Feigenbaum stands at streamside. He is sweating slightly in sports coat and corduroys and puffing on a cigarette. He has been walking with friends, but they have gone on ahead to the quieter pools upstream. Suddenly, in what might be a demented high-speed parody of a tennis spectator, he starts turning his head from side to side. “You can focus on something, a bit of foam or something. If you move your head fast enough, you can all of a sudden discern the whole structure of the surface, and you can feel it in your stomach.” He draws in more smoke from his cigarette. “But for anyone with a mathematical background, if you look at this stuff, or you see clouds with all their puffs on top of puffs, or you stand at a sea wall in a storm, you know that you really don’t know anything.”

Order in chaos. It was science’s oldest cliché. The idea of hidden unity and common underlying form in nature had an intrinsic appeal, and it had an unfortunate history of inspiring pseudoscientists and cranks. When Feigenbaum came to Los Alamos National Laboratory in 1974, a year shy of his thirtieth birthday, he knew that if physicists were to make something of the idea now, they would need a practical framework, a way to turn ideas into calculations. It was far from obvious how to make a first approach to the problem.

Feigenbaum was hired by Peter Carruthers, a calm, deceptively genial physicist who came from Cornell in 1973 to take over the Theoretical Division. His first act was to dismiss a half-dozen senior scientists—Los Alamos provides its staff with no equivalent of university tenure—and to replace them with some bright young researchers of his own choosing. As a scientific manager, he had strong ambition, but he knew from experience that good science cannot always be planned.

“If you had set up a committee in the laboratory or in Washington and said, ‘Turbulence is really in our way, we’ve got to understand it, the lack of understanding really destroys our chance of making progress in a lot of fields,’ then, of course, you would hire a team. You’d get a giant computer. You’d start running big programs. And you would never get anywhere. Instead we have this smart guy, sitting quietly—talking to people, to be sure, but mostly working all by himself.” They had talked about turbulence, but time passed, and even Carruthers was no longer sure where Feigenbaum was headed. “I thought he had quit and found a different problem. Little did I know that this other problem was the same problem. It seems to have been the issue on which many different fields of science were stuck—they were stuck on this aspect of the nonlinear behavior of systems. Now, nobody would have thought that the right background for this problem was to know particle physics, to know something about quantum field theory, and to know that in quantum field theory you have these structures known as the renormalization group. Nobody knew that you would need to understand the general theory of stochastic processes, and also fractal structures.

“Mitchell had the right background. He did the right thing at the right time, and he did it very well. Nothing partial. He cleaned out the whole problem.”

Feigenbaum brought to Los Alamos a conviction that his science had failed to understand hard problems—nonlinear problems. Although he had produced almost nothing as a physicist, he had accumulated an unusual intellectual background. He had a sharp working knowledge of the most challenging mathematical analysis, new kinds of computational technique that pushed most scientists to their limits. He had managed not to purge himself of some seemingly unscientific ideas from eighteenth-century Romanticism. He wanted to do science that would be new. He began by putting aside any thought of understanding real complexity and instead turned to the simplest nonlinear equations he could find.

THE MYSTERY OF THE UNIVERSE first announced itself to the four-year-old Mitchell Feigenbaum through a Silvertone radio sitting in his parents’ living room in the Flatbush section of Brooklyn soon after the war. He was dizzy with the thought of music arriving from no tangible cause. The phonograph, on the other hand, he felt he understood. His grandmother had given him a special dispensation to put on the 78s.

His father was a chemist who worked for the Port of New York Authority and later for Clairol. His mother taught in the city’s public schools. Mitchell first decided to become an electrical engineer, a sort of professional known in Brooklyn to make a good living. Later he realized that what he wanted to know about a radio was more likely to be found in physics. He was one of a generation of scientists raised in the outer boroughs of New York who made their way to brilliant careers via the great public high schools—in his case, Samuel J. Tilden—and then City College.

Growing up smart in Brooklyn was in some measure a matter of steering an uneven course between the world of mind and the world of other people. He was immensely gregarious when very young, which he regarded as a key to not being beaten up. But something clicked when he realized he could learn things. He became more and more detached from his friends. Ordinary conversation could not hold his interest. Sometime in his last year of college, it struck him that he had missed his adolescence, and he made a deliberate project out of regaining touch with humanity. He would sit silently in the cafeteria, listening to students chatting about shaving or food, and gradually he relearned much of the science of talking to people.

He graduated in 1964 and went on to the Massachusetts Institute of Technology, where he got his doctorate in elementary particle physics in 1970. Then he spent a fruitless four years at Cornell and at the Virginia Polytechnic Institute—fruitless, that is, in terms of the steady publication of work on manageable problems that is essential for a young university scientist. Postdocs were supposed to produce papers. Occasionally an advisor would ask Feigenbaum what had happened to some problem, and he would say, “Oh, I understood it.”

Newly installed at Los Alamos, Carruthers, a formidable scientist in his own right, prided himself on his ability to spot talent. He looked not for intelligence but for a sort of creativity that seemed to flow from some magic gland. He always remembered the case of Kenneth Wilson, another soft-spoken Cornell physicist who seemed to be producing absolutely nothing. Anyone who talked to Wilson for long realized that he had a deep capacity for seeing into physics. So the question of Wilson’s tenure became a subject of serious debate. The physicists willing to gamble on his unproven potential prevailed—and it was as if a dam burst. Not one but a flood of papers came forth from Wilson’s desk drawers, including work that won him the Nobel Prize in 1982.

Wilson’s great contribution to physics, along with work by two other physicists, Leo Kadanoff and Michael Fisher, was an important ancestor of chaos theory. These men, working independently, were all thinking in different ways about what happened in phase transitions. They were studying the behavior of matter near the point where it changes from one state to another—from liquid to gas, or from unmagnetized to magnetized. As singular boundaries between two realms of existence, phase transitions tend to be highly nonlinear in their mathematics. The smooth and predictable behavior of matter in any one phase tends to be little help in understanding the transitions. A pot of water on the stove heats up in a regular way until it reaches the boiling point. But then the change in temperature pauses while something quite interesting happens at the molecular interface between liquid and gas.

As Kadanoff viewed the problem in the 1960s, phase transitions pose an intellectual puzzle. Think of a block of metal being magnetized. As it goes into an ordered state, it must make a decision. The magnet can be oriented one way or the other. It is free to choose. But each tiny piece of the metal must make the same choice. How?

Somehow, in the process of choosing, the atoms of the metal must communicate information to one another. Kadanoff’s insight was that the communication can be most simply described in terms of scaling. In effect, he imagined dividing the metal into boxes. Each box communicates with its immediate neighbors. The way to describe that communication is the same as the way to describe the communication of any atom with its neighbors. Hence the usefulness of scaling: the best way to think of the metal is in terms of a fractal-like model, with boxes of all different sizes.

Much mathematical analysis, and much experience with real systems, was needed to establish the power of the scaling idea. Kadanoff felt that he had taken an unwieldy business and created a world of extreme beauty and self-containedness. Part of the beauty lay in its universality. Kadanoff’s idea gave a backbone to the most striking fact about critical phenomena, namely that these seemingly unrelated transitions—the boiling of liquids, the magnetizing of metals—all follow the same rules.

Then Wilson did the work that brought the whole theory together under the rubric of renormalization group theory, providing a powerful way of carrying out real calculations about real systems. Renormalization had entered physics in the 1940s as a part of quantum theory that made it possible to calculate interactions of electrons and photons. A problem with such calculations, as with the calculations Kadanoff and Wilson worried about, was that some items seemed to require treatment as infinite quantities, a messy and unpleasant business. Renormalizing the system, in ways devised by Richard Feynman, Julian Schwinger, Freeman Dyson, and other physicists, got rid of the infinities.

Only much later, in the 1960s, did Wilson dig down to the underlying basis for renormalization’s success. Like Kadanoff, he thought about scaling principles. Certain quantities, such as the mass of a particle, had always been considered fixed—as the mass of any object in everyday experience is fixed. The renormalization shortcut succeeded by acting as though a quantity like mass were not fixed at all. Such quantities seemed to float up or down depending on the scale from which they were viewed. It seemed absurd. Yet it was an exact analogue of what Benoit Mandelbrot was realizing about geometrical shapes and the coastline of England. Their length could not be measured independent of scale. There was a kind of relativity in which the position of the observer, near or far, on the beach or in a satellite, affected the measurement. As Mandelbrot, too, had seen, the variation across scales was not arbitrary; it followed rules. Variability in the standard measures of mass or length meant that a different sort of quantity was remaining fixed. In the case of fractals, it was the fractional dimension—a constant that could be calculated and used as a tool for further calculations. Allowing mass to vary depending on scale meant that mathematicians could recognize similarity across scales.

So for the hard work of calculation, Wilson’s renormalization group theory provided a different route into infinitely dense problems. Until then the only way to approach highly nonlinear problems was with a device called perturbation theory. For purposes of calculation, you assume that the nonlinear problem is reasonably close to some solvable, linear problem—just a small perturbation away. You solve the linear problem and perform a complicated bit of trickery with the leftover part, expanding it into what are called Feynman diagrams. The more accuracy you need, the more of these agonizing diagrams you must produce. With luck, your calculations converge toward a solution. Luck has a way of vanishing, however, whenever a problem is especially interesting. Feigenbaum, like every other young particle physicist in the 1960s, found himself doing endless Feynman diagrams. He was left with the conviction that perturbation theory was tedious, nonilluminating, and stupid. So he loved Wilson’s new renormalization group theory. By acknowledging self-similarity, it gave a way of collapsing the complexity, one layer at a time.

In practice the renormalization group was far from foolproof. It required a good deal of ingenuity to choose just the right calculations to capture the self-similarity. However, it worked well enough and often enough to inspire some physicists, Feigenbaum included, to try it on the problem of turbulence. After all, self-similarity seemed to be the signature of turbulence, fluctuations upon fluctuations, whorls upon whorls. But what about the onset of turbulence—the mysterious moment when an orderly system turned chaotic? There was no evidence that the renormalization group had anything to say about this transition. There was no evidence, for example, that the transition obeyed laws of scaling.

AS A GRADUATE STUDENT at M.I.T., Feigenbaum had an experience that stayed with him for many years. He was walking with friends around the Lincoln Reservoir in Boston. He was developing a habit of taking four- and five-hour walks, attuning himself to the panoply of impressions and ideas that would flow through his mind. On this day he became detached from the group and walked alone. He passed some picnickers and, as he moved away, he glanced back every so often, hearing the sounds of their voices, watching the motions of hands gesticulating or reaching for food. Suddenly he felt that the tableau had crossed some threshold into incomprehensibility. The figures were too small to be made out. The actions seemed disconnected, arbitrary, random. What faint sounds reached him had lost meaning.

The ceaseless motion and incomprehensible bustle of life. Feigenbaum recalled the words of Gustav Mahler, describing a sensation that he tried to capture in the third movement of his Second Symphony. Like the motions of dancing figures in a brilliantly lit ballroom into which you look from the dark night outside and from such a distance that the music is inaudible…. Life may appear senseless to you.

Feigenbaum was listening to Mahler and reading Goethe, immersing himself in their high Romantic attitudes. Inevitably it was Goethe’s Faust he most reveled in, soaking up its combination of the most passionate ideas about the world with the most intellectual. Without some Romantic inclinations, he surely would have dismissed a sensation like his confusion at the reservoir. After all, why shouldn’t phenomena lose meaning as they are seen from greater distances? Physical laws provided a trivial explanation for their shrinking. On second thought the connection between shrinking and loss of meaning was not so obvious. Why should it be that as things become small they also become incomprehensible?

He tried quite seriously to analyze this experience in terms of the tools of theoretical physics, wondering what he could say about the brain’s machinery of perception. You see some human transactions and you make deductions about them. Given the vast amount of information available to your senses, how does your decoding apparatus sort it out? Clearly—or almost clearly—the brain does not own any direct copies of stuff in the world. There is no library of forms and ideas against which to compare the images of perception. Information is stored in a plastic way, allowing fantastic juxtapositions and leaps of imagination. Some chaos exists out there, and the brain seems to have more flexibility than classical physics in finding the order in it.

At the same time, Feigenbaum was thinking about color. One of the minor skirmishes of science in the first years of the nineteenth century was a difference of opinion between Newton’s followers in England and Goethe in Germany over the nature of color. To Newtonian physics, Goethe’s ideas were just so much pseudoscientific meandering. Goethe refused to view color as a static quantity, to be measured in a spectrometer and pinned down like a butterfly to cardboard. He argued that color is a matter of perception. “With light poise and counterpoise, Nature oscillates within her prescribed limits,” he wrote, “yet thus arise all the varieties and conditions of the phenomena which are presented to us in space and time.”

The touchstone of Newton’s theory was his famous experiment with a prism. A prism breaks a beam of white light into a rainbow of colors, spread across the whole visible spectrum, and Newton realized that those pure colors must be the elementary components that add to produce white. Further, with a leap of insight, he proposed that the colors corresponded to frequencies. He imagined that some vibrating bodies—corpuscles was the antique word—must be producing colors in proportion to the speed of the vibrations. Considering how little evidence supported this notion, it was as unjustifiable as it was brilliant. What is red? To a physicist, it is light radiating in waves between 620 and 800 billionths of a meter long. Newton’s optics proved themselves a thousand times over, while Goethe’s treatise on color faded into merciful obscurity. When Feigenbaum went looking for it, he discovered that the one copy in Harvard’s libraries had been removed.

He finally did track down a copy, and he found that Goethe had actually performed an extraordinary set of experiments in his investigation of colors. Goethe began as Newton had, with a prism. Newton had held a prism before a light, casting the divided beam onto a white surface. Goethe held the prism to his eye and looked through it. He perceived no color at all, neither a rainbow nor individual hues. Looking at a clear white surface or a clear blue sky through the prism produced the same effect: uniformity.

But if a slight spot interrupted the white surface or a cloud appeared in the sky, then he would see a burst of color. It is “the interchange of light and shadow,” Goethe concluded, that causes color. He went on to explore the way people perceive shadows cast by different sources of colored light. He used candles and pencils, mirrors and colored glass, moonlight and sunlight, crystals, liquids, and color wheels in a thorough range of experiments. For example, he lit a candle before a piece of white paper at twilight and held up a pencil. The shadow in the candlelight was a brilliant blue. Why? The white paper alone is perceived as white, either in the declining daylight or in the added light of the warmer candle. How does a shadow divide the white into a region of blue and a region of reddish-yellow? Color is “a degree of darkness,” Goethe argued, “allied to shadow.” Above all, in a more modern language, color comes from boundary conditions and singularities.

Where Newton was reductionist, Goethe was holistic. Newton broke light apart and found the most basic physical explanation for color. Goethe walked through flower gardens and studied paintings, looking for a grand, all-encompassing explanation. Newton made his theory of color fit a mathematical scheme for all of physics. Goethe, fortunately or unfortunately, abhorred mathematics.

Feigenbaum persuaded himself that Goethe had been right about color. Goethe’s ideas resemble a facile notion, popular among psychologists, that makes a distinction between hard physical reality and the variable subjective perception of it. The colors we perceive vary from time to time and from person to person—that much is easy to say. But as Feigenbaum understood them, Goethe’s ideas had more true science in them. They were hard and empirical. Over and over again, Goethe emphasized the repeatability of his experiments. It was the perception of color, to Goethe, that was universal and objective. What scientific evidence was there for a definable real-world quality of redness independent of our perception?

Feigenbaum found himself asking what sort of mathematical formalisms might correspond to human perception, particularly a perception that sifted the messy multiplicity of experience and found universal qualities. Redness is not necessarily a particular bandwidth of light, as the Newtonians would have it. It is a territory of a chaotic universe, and the boundaries of that territory are not so easy to describe—yet our minds find redness with regular and verifiable consistency. These were the thoughts of a young physicist, far removed, it seemed, from such problems as fluid turbulence. Still, to understand how the human mind sorts through the chaos of perception, surely one would need to understand how disorder can produce universality.

WHEN FEIGENBAUM BEGAN to think about nonlinearity at Los Alamos, he realized that his education had taught him nothing useful. To solve a system of nonlinear differential equations was impossible, notwithstanding the special examples constructed in textbooks. Perturbative technique, making successive corrections to a solvable problem that one hoped would lie somewhere nearby the real one, seemed foolish. He read through texts on nonlinear flows and oscillations and decided that little existed to help a reasonable physicist. His computational equipment consisting solely of pencil and paper, Feigenbaum decided to start with an analogue of the simple equation that Robert May studied in the context of population biology.

It happened to be the equation high school students use in geometry to graph a parabola. It can be written as y = r(x - x²). Every value of x produces a value of y, and the resulting curve expresses the relation of the two numbers for the range of values. If x (this year’s population) is small, then y (next year’s) is small, but larger than x; the curve is rising steeply. If x is in the middle of the range, then y is large. But the parabola levels off and falls, so that if x is large, then y will be small again. That is what produces the equivalent of population crashes in ecological modeling, preventing unrealistic unrestrained growth.

For May and then Feigenbaum, the point was to use this simple calculation not once, but repeated endlessly as a feedback loop. The output of one calculation was fed back in as input for the next. To see what happened graphically, the parabola helped enormously. Pick a starting value along the x axis. Draw a line up to where it meets the parabola. Read the resulting value off the y axis. And start all over with the new value. The sequence bounces from place to place on the parabola at first, and then, perhaps, homes in on a stable equilibrium, where x and y are equal and the value thus does not change.
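The feedback loop described above can be sketched in a few lines of Python. This is only an illustration: the function names `parabola` and `iterate`, the starting value 0.2, and the parameter r = 2.0 are assumptions chosen for the example, not values taken from May or Feigenbaum.

```python
def parabola(x, r):
    """One application of the rule y = r(x - x^2)."""
    return r * (x - x * x)


def iterate(x0, r, steps):
    """Feed each output back in as the next input; return every value visited."""
    values = [x0]
    for _ in range(steps):
        values.append(parabola(values[-1], r))
    return values


if __name__ == "__main__":
    # Illustrative (assumed) choices: r = 2.0, starting population 0.2.
    for n, x in enumerate(iterate(0.2, 2.0, 8)):
        print(n, x)
```

With these particular choices the sequence homes in on 0.5, the point where x and y are equal for r = 2. Other parameter values need not settle down at all.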

In spirit, nothing could have been further removed from the complex calculations of standard physics. Instead of a labyrinthine scheme to be solved one time, this was a simple calculation performed over and over again. The numerical experimenter would watch, like a chemist peering at a reaction bubbling away inside a beaker. Here the output was just a string of numbers, and it did not always converge to a steady final state. It could end up oscillating back and forth between two values. Or as May had explained to population biologists, it could keep on changing chaotically as long as anyone cared to watch. The choice among these different possible behaviors depended on the value of the tuning parameter.
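One way to watch the tuning parameter select among these behaviors is to throw away the early values and ask whether what remains repeats. The sketch below is a hypothetical helper, not a reconstruction of anyone's actual procedure: the name `settled_period`, the warm-up length, the tolerance, and the sample parameters are all assumptions.

```python
def settled_period(r, x0=0.2, transient=10000, max_period=64, tol=1e-9):
    """Return the period the iteration settles into, or None if none is found."""
    x = x0
    for _ in range(transient):           # discard the early bouncing
        x = r * (x - x * x)
    tail = [x]
    for _ in range(2 * max_period):      # record a stretch of settled values
        last = tail[-1]
        tail.append(r * (last - last * last))
    for p in range(1, max_period + 1):   # smallest p for which the tail repeats
        if all(abs(tail[i + p] - tail[i]) < tol for i in range(max_period)):
            return p
    return None


if __name__ == "__main__":
    # Assumed sample parameters: steady, two-valued, four-valued, irregular.
    for r in (2.8, 3.2, 3.5, 3.9):
        print(r, settled_period(r))
```

A result of `None` means only that no repetition of length up to `max_period` was detected within the tolerance. It is consistent with chaotic behavior but does not prove it.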

Feigenbaum carried out numerical work of this faintly experimental sort and, at the same time, tried more traditional theoretical ways of analyzing nonlinear functions. Even so, he could not see the whole picture of what this equation could do. But he could see that the possibilities were already so complicated that they would be viciously hard to analyze. He also knew that three Los Alamos mathematicians—Nicholas Metropolis, Paul Stein, and Myron Stein—had studied such “maps” in 1971, and now Paul Stein warned him that the complexity was frightening indeed. If this simplest of equations already proved intractable, what about the far more complicated equations that a scientist would write down for real systems? Feigenbaum put the whole problem on the shelf.

In the brief history of chaos, this one innocent-looking equation provides the most succinct example of how different sorts of scientists looked at one problem in many different ways. To the biologists, it was an equation with a message: Simple systems can do complicated things. To Metropolis, Stein, and Stein, the problem was to catalogue a collection of topological patterns without reference to any numerical values. They would begin the feedback process at a particular point and watch the succeeding values bounce from place to place on the parabola. As the values moved to the right or the left, they wrote down sequences of R’s and L’s. Pattern number one: R. Pattern number two: RLR. Pattern number 193: RLLLLLRRLL. These sequences had some interesting features to a mathematician—they always seemed to repeat in the same special order. But to a physicist they looked obscure and tedious.
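The bookkeeping of R's and L's can be imitated with a short sketch. The passage does not say where the feedback process began or which parameter was used, so the version below assumes the starting point is the peak of the parabola at x = 0.5 and uses r = 3.5 purely for illustration. The function name `itinerary` is likewise hypothetical.

```python
def itinerary(r, length, start=0.5):
    """Record whether each successive value lands right (R) or left (L) of the peak."""
    symbols = []
    x = start
    for _ in range(length):
        x = r * (x - x * x)
        # The peak of y = r(x - x^2) sits at x = 0.5; a value exactly on the
        # peak is labeled L here, which is an arbitrary tie-breaking choice.
        symbols.append("R" if x > 0.5 else "L")
    return "".join(symbols)


if __name__ == "__main__":
    print(itinerary(3.5, 3))  # assumed parameter, chosen only as an example
```

Only the left-or-right pattern is kept, not the numbers themselves. That is the sense in which such a catalogue makes no reference to numerical values.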

No one realized it then, but Lorenz had looked at the same equation in 1964, as a metaphor for a deep question about climate. The question was so deep that almost no one had thought to ask it before: Does a climate exist? That is, does the earth’s weather have a long-term average? Most meteorologists, then as now, took the answer for granted. Surely any measurable behavior, no matter how it fluctuates, must have an average. Yet on reflection, it is far from obvious. As Lorenz pointed out, the average weather for the last 12,000 years has been notably different than the average for the previous 12,000, when most of North America was covered by ice. Was there one climate that changed to another for some physical reason? Or is there an even longer-term climate within which those periods were just fluctuations? Or is it possible that a system like the weather may never converge to an average?

Lorenz asked a second question. Suppose you could actually write down the complete set of equations that govern the weather. In other words, suppose you had God’s own code. Could you then use the equations to calculate average statistics for temperature or rainfall? If the equations were linear, the answer would be an easy yes. But they are nonlinear. Since God has not made the actual equations available, Lorenz instead examined the quadratic difference equation.

Like May, Lorenz first examined what happened as the equation was iterated, given some parameter. With low parameters he saw the equation reaching a stable fixed point. There, certainly, the system produced a “climate” in the most trivial sense possible—the “weather” never changed. With higher parameters he saw the possibility of oscillation between two points, and there, too, the system converged to a simple average. But beyond a certain point, Lorenz saw that chaos ensues. Since he was thinking about climate, he asked not only whether continual feedback would produce periodic behavior, but also what the average output would be. And he recognized that the answer was that the average, too, fluctuated unstably. When the parameter value was changed ever so slightly, the average might change dramatically. By analogy, the earth’s climate might never settle reliably into an equilibrium with average long-term behavior.
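The question of an average output can be explored numerically along the following lines. This is a rough sketch under stated assumptions, not Lorenz's own calculation: the function name `long_run_average`, the warm-up length, the sample count, and every parameter value shown are illustrative choices.

```python
def long_run_average(r, x0=0.2, transient=1000, samples=100000):
    """Average the output of y = r(x - x^2) over many iterations after a warm-up."""
    x = x0
    for _ in range(transient):   # let the early behavior die away
        x = r * (x - x * x)
    total = 0.0
    for _ in range(samples):
        x = r * (x - x * x)
        total += x
    return total / samples


if __name__ == "__main__":
    # Assumed parameters: a fixed point, a two-point oscillation, then
    # three closely spaced values in the irregular range.
    for r in (2.5, 3.2, 3.82, 3.83, 3.84):
        print(r, long_run_average(r))
```

For the fixed point and the two-point oscillation the average is well defined: 0.6 at r = 2.5 and 0.65625 at r = 3.2. For the closely spaced higher parameters, nothing guarantees that the printed averages vary smoothly from one to the next.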

As a mathematics paper, Lorenz’s climate work would have been a failure—he proved nothing in the axiomatic sense. As a physics paper, too, it was seriously flawed, because he could not justify using such a simple equation to draw conclusions about the earth’s climate. Lorenz knew what he was saying, though. “The writer feels that this resemblance is no mere accident, but that the difference equation captures much of the mathematics, even if not the physics, of the transitions from one regime of flow to another, and, indeed, of the whole phenomenon of instability.” Even twenty years later, no one could understand what intuition justified such a bold claim, published in Tellus, a Swedish meteorology journal. (“Tellus! Nobody reads Tellus,” a physicist exclaimed bitterly.) Lorenz was coming to understand ever more deeply the peculiar possibilities of chaotic systems—more deeply than he could express in the language of meteorology.

As he continued to explore the changing masks of dynamical systems, Lorenz realized that systems slightly more complicated than the quadratic map could produce other kinds of unexpected patterns. Hiding within a particular system could be more than one stable solution. An observer might see one kind of behavior over a very long time, yet a completely different kind of behavior could be just as natural for the system. Such a system is called intransitive. It can stay in one equilibrium or the other, but not both. Only a kick from outside can force it to change states. In a trivial way, a standard pendulum clock is an intransitive system. A steady flow of energy comes in from a wind-up spring or a battery through an escapement mechanism. A steady flow of energy is drained out by friction. The obvious equilibrium state is a regular swinging motion. If a passerby bumps the clock, the pendulum might speed up or slow down from the momentary jolt but will quickly return to its equilibrium. But the clock has a second equilibrium as well—a second valid solution to its equations of motion—and that is the state in which the pendulum is hanging straight down and not moving. A less trivial intransitive system—perhaps with several distinct regions of utterly different behavior—could be climate itself.

Climatologists who use global computer models to simulate the long-term behavior of the earth’s atmosphere and oceans have known for several years that their models allow at least one dramatically different equilibrium. During the entire geological past, this alternative climate has never existed, but it could be an equally valid solution to the system of equations governing the earth. It is what some climatologists call the White Earth climate: an earth whose continents are covered by snow and whose oceans are covered by ice. A glaciated earth would reflect seventy percent of the incoming solar radiation and so would stay extremely cold. The lowest layer of the atmosphere, the troposphere, would be much thinner. The storms that would blow across the frozen surface would be much smaller than the storms we know. In general, the climate would be less hospitable to life as we know it. Computer models have such a strong tendency to fall into the White Earth equilibrium that climatologists find themselves wondering why it has never come about. It may simply be a matter of chance.

To push the earth’s climate into the glaciated state would require a huge kick from some external source. But Lorenz described yet another plausible kind of behavior called “almost-intransitivity.” An almost-intransitive system displays one sort of average behavior for a very long time, fluctuating within certain bounds. Then, for no reason whatsoever, it shifts into a different sort of behavior, still fluctuating but producing a different average. The people who design computer models are aware of Lorenz’s discovery, but they try at all costs to avoid almost-intransitivity. It is too unpredictable. Their natural bias is to make models with a strong tendency to return to the equilibrium we measure every day on the real planet. Then, to explain large changes in climate, they look for external causes—changes in the earth’s orbit around the sun, for example. Yet it takes no great imagination for a climatologist to see that almost-intransitivity might well explain why the earth’s climate has drifted in and out of long Ice Ages at mysterious, irregular intervals. If so, no physical cause need be found for the timing. The Ice Ages may simply be a byproduct of chaos.

LIKE A GUN COLLECTOR wistfully recalling the Colt .45 in the era of automatic weaponry, the modern scientist nurses a certain nostalgia for the HP-65 hand-held calculator. In the few years of its supremacy, this machine changed many scientists’ working habits forever. For Feigenbaum, it was the bridge between pencil-and-paper and a style of working with computers that had not yet been conceived.

He knew nothing of Lorenz, but in the summer of 1975, at a gathering in Aspen, Colorado, he heard Steve Smale talk about some of the mathematical qualities of the same quadratic difference equation. Smale seemed to think that there were some interesting open questions about the exact point at which the mapping changes from periodic to chaotic. As always, Smale had a sharp instinct for questions worth exploring. Feigenbaum decided to look into it once more. With his calculator he began to use a combination of analytic algebra and numerical exploration to piece together an understanding of the quadratic map, concentrating on the boundary region between order and chaos.

Metaphorically—but only metaphorically—he knew that this region was like the mysterious boundary between smooth flow and turbulence in a fluid. It was the region that Robert May had called to the attention of population biologists who had previously failed to notice the possibility of any but orderly cycles in changing animal populations. En route to chaos in this region was a cascade of period-doublings, the splitting of two-cycles into four-cycles, four-cycles into eight-cycles, and so on. These splittings made a fascinating pattern. They were the points at which a slight change in fecundity, for example, might lead a population of gypsy moths to change from a four-year cycle to an eight-year cycle. Feigenbaum decided to begin by calculating the exact parameter values that produced the splittings.
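The cascade described above can be watched directly by iterating the quadratic map y = rx(1-x) and asking how many steps the long-run orbit takes to repeat. The following is only a minimal sketch: the function names, the starting value 0.3, the transient length, and the tolerance are illustrative assumptions, not anything Feigenbaum used.

```python
def logistic(r, x):
    """One step of the quadratic (logistic) map y = r x (1 - x)."""
    return r * x * (1.0 - x)


def attractor_period(r, x0=0.3, transient=20000, max_period=64, tol=1e-9):
    """Smallest period p <= max_period of the long-run orbit, or None if none is found."""
    x = x0
    for _ in range(transient):       # discard the approach to the attractor
        x = logistic(r, x)
    start = x
    for p in range(1, max_period + 1):
        x = logistic(r, x)
        if abs(x - start) < tol:     # the orbit has come back to where it started
            return p
    return None                      # no short cycle detected at this r


for r in (2.8, 3.2, 3.5, 3.55):
    print(r, attractor_period(r))
```

With these hand-picked values of r, the sketch is expected to report periods 1, 2, 4, and 8 in turn. Close to a splitting the orbit settles very slowly, so a brute-force check like this one only locates the splittings roughly.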

In the end, it was the slowness of the calculator that led him to a discovery that August. It took ages—minutes, in fact—to calculate the exact parameter value of each period-doubling. The higher up the chain he went, the longer it took. With a fast computer, and with a printout, Feigenbaum might have observed no pattern. But he had to write the numbers down by hand, and then he had to think about them while he was waiting, and then, to save time, he had to guess where the next answer would be.

Yet all in an instant he saw that he did not have to guess. There was an unexpected regularity hidden in this system: the numbers were converging geometrically, the way a line of identical telephone poles converges toward the horizon in a perspective drawing. If you know how big to make any two telephone poles, you know all the rest; the ratio of the second to the first will also be the ratio of the third to the second, and so on. The period-doublings were not just coming faster and faster, but they were coming faster and faster at a constant rate.

Why should this be so? Ordinarily, the presence of geometric convergence suggests that something, somewhere, is repeating itself on different scales. But if there was a scaling pattern inside this equation, no one had ever seen it. Feigenbaum calculated the ratio of convergence to the finest precision possible on his machine—three decimal places—and came up with a number, 4.669. Did this particular ratio mean anything? Feigenbaum did what anyone would do who cared about numbers. He spent the rest of the day trying to fit the number to all the standard constants—π, e, and so forth. It was a variant of none.
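The ratio can be reproduced today in a few lines. The sketch below swaps in a different technique from the one in the narrative: instead of the parameter values at which each splitting occurs, it finds the "superstable" values of r, those at which x = 1/2 lies exactly on a cycle of period 1, 2, 4, 8, and so on, because Newton's method pins these down easily. The gaps between successive superstable values shrink by a ratio that tends to the same constant. The function names, the number of levels, and the starting guess of 4.7 are assumptions made for illustration.

```python
import math


def superstable_r(n, guess, newton_steps=20):
    """Newton's method for the r at which x = 1/2 lies on a cycle of period 2**n."""
    r = guess
    for _ in range(newton_steps):
        x, dx = 0.5, 0.0                      # dx tracks the derivative of x with respect to r
        for _ in range(2 ** n):
            dx = x * (1.0 - x) + r * (1.0 - 2.0 * x) * dx
            x = r * x * (1.0 - x)
        r -= (x - 0.5) / dx                   # Newton step on f^(2^n)(1/2) - 1/2 = 0
    return r


def convergence_ratios(levels=8):
    """Superstable parameters for periods 1, 2, ..., 2**levels and the ratios of successive gaps."""
    rs = [2.0, 1.0 + math.sqrt(5.0)]          # exact values for periods 1 and 2
    delta = 4.7                               # rough starting guess, refined at every level
    ratios = []
    for n in range(2, levels + 1):
        guess = rs[-1] + (rs[-1] - rs[-2]) / delta   # extrapolate geometrically
        rs.append(superstable_r(n, guess))
        delta = (rs[-2] - rs[-3]) / (rs[-1] - rs[-2])
        ratios.append(delta)
    return rs, ratios


rs, ratios = convergence_ratios()
for ratio in ratios:
    print(f"{ratio:.5f}")
```

The printed ratios should settle toward 4.669 as the period grows. The extrapolated guess is the telephone-pole shortcut in code form: once two gaps are known, the next parameter value can be predicted before it is computed.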

Oddly, Robert May realized later that he, too, had seen this geometric convergence. But he forgot it as quickly as he noted it. From May’s perspective in ecology, it was a numerical peculiarity and nothing more. In the real-world systems he was considering, systems of animal populations or even economic models, the inevitable noise would overwhelm any detail that precise. The very messiness that had led him so far stopped him at the crucial point. May was excited by the gross behavior of the equation. He never imagined that the numerical details would prove important.

Feigenbaum knew what he had, because geometric convergence meant that something in this equation was scaling, and he knew that scaling was important. All of renormalization theory depended on it. In an apparently unruly system, scaling meant that some quality was being preserved while everything else changed. Some regularity lay beneath the turbulent surface of the equation. But where? It was hard to see what to do next.

Summer turns rapidly to autumn in the rarefied Los Alamos air, and October had nearly ended when Feigenbaum was struck by an odd thought. He knew that Metropolis, Stein, and Stein had looked at other equations as well and had found that certain patterns carried over from one sort of function to another. The same combinations of R’s and L’s appeared, and they appeared in the same order. One function had involved the sine of a number, a twist that made Feigenbaum’s carefully worked-out approach to the parabola equation irrelevant. He would have to start over. So he took his HP-65 again and began to compute the period-doublings for x_{t+1} = r sin(π x_t). Calculating a trigonometric function made the process that much slower, and Feigenbaum wondered whether, as with the simpler version of the equation, he would be able to use a shortcut. Sure enough, scanning the numbers, he realized that they were again converging geometrically. It was simply a matter of calculating the convergence rate for this new equation. Again, his precision was limited, but he got a result to three decimal places: 4.669.

It was the same number. Incredibly, this trigonometric function was not just displaying a consistent, geometric regularity. It was displaying a regularity that was numerically identical to that of a much simpler function. No mathematical or physical theory existed to explain why two equations so different in form and meaning should lead to the same result.
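The coincidence can be checked for the sine map, in which each value x is replaced by r sin(πx). As a stand-in for Feigenbaum's hand calculation, the sketch below again uses Newton's method on "superstable" parameter values, those at which the peak x = 1/2 lies exactly on a cycle of period 1, 2, 4, and so on, and then takes ratios of successive gaps. The function names and the starting guesses of 0.78 and 4.0 are hand-picked assumptions for this particular map, not values from the source.

```python
import math


def sine_superstable_r(n, guess, newton_steps=20):
    """Newton's method for the r at which x = 1/2 has period 2**n under x -> r sin(pi x)."""
    r = guess
    for _ in range(newton_steps):
        x, dx = 0.5, 0.0                      # dx tracks the derivative of x with respect to r
        for _ in range(2 ** n):
            dx = math.sin(math.pi * x) + r * math.pi * math.cos(math.pi * x) * dx
            x = r * math.sin(math.pi * x)
        r -= (x - 0.5) / dx                   # Newton step toward f^(2^n)(1/2) = 1/2
    return r


def sine_convergence_ratios(levels=8):
    """Superstable parameters of the sine map and the ratios of successive gaps."""
    # r = 1/2 sends x = 1/2 to itself; 0.78 is a hand-picked guess for the period-2 value.
    rs = [0.5, sine_superstable_r(1, 0.78)]
    delta = 4.0                               # rough starting guess, refined at every level
    ratios = []
    for n in range(2, levels + 1):
        guess = rs[-1] + (rs[-1] - rs[-2]) / delta
        rs.append(sine_superstable_r(n, guess))
        delta = (rs[-2] - rs[-3]) / (rs[-1] - rs[-2])
        ratios.append(delta)
    return rs, ratios


sine_rs, sine_ratios = sine_convergence_ratios()
for ratio in sine_ratios:
    print(f"{ratio:.5f}")
```

The early ratios differ from those of the parabola, since they depend on the details of the function, but the later ones should drift toward the same 4.669.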

Feigenbaum called Paul Stein. Stein was not prepared to believe the coincidence on such scanty evidence. The precision was low, after all. Nevertheless, Feigenbaum also called his parents in New Jersey to tell them he had stumbled across something profound. He told his mother it was going to make him famous. Then he started trying other functions, anything he could think of that went through a sequence of bifurcations on the way to disorder. Every one produced the same number.

Feigenbaum had played with numbers all his life. When he was a teen-ager he knew how to calculate logarithms and sines that most people would look up in tables. But he had never learned to use any computer bigger than his hand calculator—and in this he was typical of physicists and mathematicians, who tended to disdain the mechanistic thinking that computer work implied. Now, though, it was time. He asked a colleague to teach him Fortran, and, by the end of the day, for a variety of functions, he had calculated his constant to five decimal places, 4.66920. That night he read about double precision in the manual, and the next day he got as far as 4.6692016090—enough precision to convince Stein. Feigenbaum wasn’t quite sure he had convinced himself, though. He had set out to look for regularity—that was what understanding mathematics meant—but he had also set out knowing that particular kinds of equations, just like particular physical systems, behave in special, characteristic ways. These equations were simple, after all. Feigenbaum understood the quadratic equation, he understood the sine equation—the mathematics was trivial. Yet something in the heart of these very different equations, repeating over and over again, created a single number. He had stumbled upon something: perhaps just a curiosity; perhaps a new law of nature.

Imagine that a prehistoric zoologist decides that some things are heavier than other things—they have some abstract quality he calls weight—and he wants to investigate this idea scientifically. He has never actually measured weight, but he thinks he has some understanding of the idea. He looks at big snakes and little snakes, big bears and little bears, and he guesses that the weight of these animals might have some relationship to their size. He builds a scale and starts weighing snakes. To his astonishment, every snake weighs the same. To his consternation, every bear weighs the same, too. And to his further amazement, bears weigh the same as snakes. They all weigh 4.6692016090. Clearly weight is not what he supposed. The whole concept requires rethinking.

Rolling streams, swinging pendulums, electronic oscillators—many physical systems went through a transition on the way to chaos, and those transitions had remained too complicated for analysis. These were all systems whose mechanics seemed perfectly well understood. Physicists knew all the right equations; yet moving from the equations to an understanding of global, long-term behavior seemed impossible. Unfortunately, equations for fluids, even pendulums, were far more challenging than the simple one-dimensional logistic map. But Feigenbaum’s discovery implied that those equations were beside the point. They were irrelevant. When order emerged, it suddenly seemed to have forgotten what the original equation was. Quadratic or trigonometric, the result was the same. “The whole tradition of physics is that you isolate the mechanisms and then all the rest flows,” he said. “That’s completely falling apart. Here you know the right equations but they’re just not helpful. You add up all the microscopic pieces and you find that you cannot extend them to the long term. They’re not what’s important in the problem. It completely changes what it means to know something.”

Although the connection between numerics and physics was faint, Feigenbaum had found evidence that he needed to work out a new way of calculating complex nonlinear problems. So far, all available techniques had depended on the details of the functions. If the function was a sine function, Feigenbaum’s carefully worked-out calculations were sine calculations. His discovery of universality meant that all those techniques would have to be thrown out. The regularity had nothing to do with sines. It had nothing to do with parabolas. It had nothing to do with any particular function. But why? It was frustrating. Nature had pulled back a curtain for an instant and offered a glimpse of unexpected order. What else was behind that curtain?

WHEN INSPIRATION CAME, it was in the form of a picture, a mental image of two small wavy forms and one big one. That was all—a bright, sharp image etched in his mind, no more, perhaps, than the visible top of a vast iceberg of mental processing that had taken place below the waterline of consciousness. It had to do with scaling, and it gave Feigenbaum the path he needed.

He was studying attractors. The steady equilibrium reached by his mappings is a fixed point that attracts all others—no matter what the starting “population,” it will bounce steadily in toward the attractor. Then, with the first period-doubling, the attractor splits in two, like a dividing cell. At first, these two points are practically together; then, as the parameter rises, they float apart. Then another period-doubling: each point of the attractor divides again, at the same moment. Feigenbaum’s number let him predict when the period-doublings would occur. Now he discovered that he could also predict the precise values of each point on this ever-more-complicated attractor—two points, four points, eight points…He could predict the actual populations reached in the year-to-year oscillations. There was yet another geometric convergence. These numbers, too, obeyed a law of scaling.

Feigenbaum was exploring a forgotten middle ground between mathematics and physics. His work was hard to classify. It was not mathematics; he was not proving anything. He was studying numbers, yes, but numbers are to a mathematician what bags of coins are to an investment banker: nominally the stuff of his profession, but actually too gritty and particular to waste time on. Ideas are the real currency of mathematicians. Feigenbaum was carrying out a program in physics, and, strange as it seemed, it was almost a kind of experimental physics.

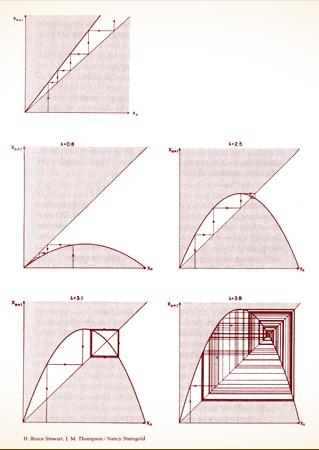

ZEROING IN ON CHAOS. A simple equation, repeated many times over: Mitchell Feigenbaum focused on straightforward functions, taking one number as input and producing another as output. For animal populations, a function might express the relationship between this year’s population and next year’s.

One way to visualize such functions is to make a graph, plotting input on the horizontal axis and output on the vertical axis. For each possible input, x, there is just one output, y, and these form a shape represented by the heavy line.

Then, to represent the long-term behavior of the system, Feigenbaum drew a trajectory that started with some arbitrary x. Because each y was then fed back into the same function as new input, he could use a sort of schematic shortcut: The trajectory would bounce off the 45-degree line, the line where x equals y.
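The schematic shortcut amounts to alternating two moves: go vertically to the curve, then horizontally back to the 45-degree line. A minimal sketch of that construction follows; it only lists the corner points of the trajectory and draws nothing. The function name, the choice to start on the 45-degree line, and the sample values r = 2.5 and x = 0.1 are assumptions for illustration.

```python
def cobweb_path(f, x0, steps):
    """Corner points of the bouncing trajectory for repeated application of f from x0."""
    path = [(x0, x0)]            # start on the 45-degree line, where x equals y
    x = x0
    for _ in range(steps):
        y = f(x)
        path.append((x, y))      # vertical move to the curve: the output for this input
        path.append((y, y))      # horizontal move to the line: output becomes the next input
        x = y
    return path


r = 2.5
path = cobweb_path(lambda x: r * x * (1.0 - x), 0.1, 50)
print(path[:5])                  # the first few corners of the staircase
print(path[-1])                  # where the trajectory ends up
```

For this value of r the corners spiral in toward the point where the parabola crosses the 45-degree line, at x = 1 - 1/r = 0.6, which is the steady equilibrium.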

For an ecologist, the most obvious sort of function for population growth is linear—the Malthusian scenario of steady, limitless growth by a fixed percentage each year (left). More realistic functions formed an arch, sending the population back downward when it became too high. Illustrated is the “logistic map,” a perfect parabola, defined by the function y = rx(1-x), where the value of r, from 0 to 4, determines the parabola’s steepness. But Feigenbaum discovered that it did not matter precisely what sort of arch he used; the details of the equation were beside the point. What mattered was that the function should have a “hump.”

The behavior depended sensitively, though, on the steepness—the degree of nonlinearity, or what Robert May called “boom-and-bustiness.” Too shallow a function would produce extinction: Any starting population would lead eventually to zero. Increasing the steepness produced the steady equilibrium that a traditional ecologist would expect; that point, drawing in all trajectories, was a one-dimensional “attractor.”

Beyond a certain point, a bifurcation produced an oscillating population with period two. Then more period-doublings would occur, and finally (bottom right) the trajectory would refuse to settle down at all.

Such images were a starting point for Feigenbaum when he tried to construct a theory. He began thinking in terms of recursion: functions of functions, and functions of functions of functions, and so on; maps with two humps, and then four….

Numbers and functions were his object of study, instead of mesons and quarks. They had trajectories and orbits. He needed to inquire into their behavior. He needed—in a phrase that later became a cliché of the new science—to create intuition. His accelerator and his cloud chamber were the computer. Along with his theory, he was building a methodology. Ordinarily a computer user would construct a problem, feed it in, and wait for the machine to calculate its solution—one problem, one solution. Feigenbaum and the chaos researchers who followed needed more. They needed to do what Lorenz had done, to create miniature universes and observe their evolution. Then they could change this feature or that and observe the changed paths that would result. They were armed with the new conviction, after all, that tiny changes in certain features could lead to remarkable changes in overall behavior.

Feigenbaum quickly discovered how ill-suited the computer facilities of Los Alamos were for the style of computing he wanted to develop. Despite enormous resources, far greater than at most universities, Los Alamos had few terminals capable of displaying graphs and pictures, and those few were in the Weapons Division. Feigenbaum wanted to take numbers and plot them as points on a map. He had to resort to the most primitive method conceivable: long rolls of printout paper with lines made by printing rows of spaces followed by an asterisk or a plus sign. The official policy at Los Alamos held that one big computer was worth far more than many little computers—a policy that went with the one problem, one solution tradition. Little computers were discouraged. Furthermore, any division’s purchase of a computer would have to meet stringent government guidelines and a formal review. Only later, with the budgetary complicity of the Theoretical Division, did Feigenbaum become the recipient of a $20,000 “desktop calculator.” Then he could change his equations and pictures on the run, tweaking them and tuning them, playing the computer like a musical instrument. For now, the only terminals capable of serious graphics were in high-security areas—behind the fence, in local parlance. Feigenbaum had to use a terminal hooked up by telephone lines to a central computer. The reality of working in such an arrangement made it hard to appreciate the raw power of the computer at the other end of the line. Even the simplest tasks took minutes. To edit a line of a program meant pressing Return and waiting while the terminal hummed incessantly and the central computer played its electronic round robin with other users across the laboratory.

While he was computing, he was thinking. What new mathematics could produce the multiple scaling patterns he was observing? Something about these functions must be recursive, he realized, self-referential, the behavior of one guided by the behavior of another hidden inside it. The wavy image that had come to him in a moment of inspiration expressed something about the way one function could be scaled to match another. He applied the mathematics of renormalization group theory, with its use of scaling to collapse infinities into manageable quantities. In the spring of 1976 he entered a mode of existence more intense than any he had lived through. He would concentrate as if in a trance, programming furiously, scribbling with his pencil, programming again. He could not call C division for help, because that would mean signing off the computer to use the telephone, and reconnection was chancy. He could not stop for more than five minutes’ thought, because the computer would automatically disconnect his line. Every so often the computer would go down anyway, leaving him shaking with adrenalin. He worked for two months without pause. His functional day was twenty-two hours. He would try to go to sleep in a kind of buzz, and awaken two hours later with his thoughts exactly where he had left them. His diet was strictly coffee. (Even when healthy and at peace, Feigenbaum subsisted exclusively on the reddest possible meat, coffee, and red wine. His friends speculated that he must be getting his vitamins from cigarettes.)

In the end, a doctor called it off. He prescribed a modest regimen of Valium and an enforced vacation. But by then Feigenbaum had created a universal theory.

UNIVERSALITY MADE THE DIFFERENCE between beautiful and useful. Mathematicians, beyond a certain point, care little whether they are providing a technique for calculation. Physicists, beyond a certain point, need numbers. Universality offered the hope that by solving an easy problem physicists could solve much harder problems. The answers would be the same. Further, by placing his theory in the framework of the renormalization group, Feigenbaum gave it a clothing that physicists would recognize as a tool for calculating, almost something standard.

But what made universality useful also made it hard for physicists to believe. Universality meant that different systems would behave identically. Of course, Feigenbaum was only studying simple numerical functions. But he believed that his theory expressed a natural law about systems at the point of transition between orderly and turbulent. Everyone knew that turbulence meant a continuous spectrum of different frequencies, and everyone had wondered where the different frequencies came from. Suddenly you could see the frequencies coming in sequentially. The physical implication was that real-world systems would behave in the same, recognizable way, and that furthermore it would be measurably the same. Feigenbaum’s universality was not just qualitative, it was quantitative; not just structural, but metrical. It extended not just to patterns, but to precise numbers. To a physicist, that strained credulity.

Years later Feigenbaum still kept in a desk drawer, where he could get at them quickly, his rejection letters. By then he had all the recognition he needed. His Los Alamos work had won him prizes and awards that brought prestige and money. But it still rankled that editors of the top academic journals had deemed his work unfit for publication for two years after he began submitting it. The notion of a scientific breakthrough so original and unexpected that it cannot be published seems a slightly tarnished myth. Modern science, with its vast flow of information and its impartial system of peer review, is not supposed to be a matter of taste. One editor who sent back a Feigenbaum manuscript recognized years later that he had rejected a paper that was a turning point for the field; yet he still argued that the paper had been unsuited to his journal’s audience of applied mathematicians. In the meantime, even without publication, Feigenbaum’s breakthrough became a superheated piece of news in certain circles of mathematics and physics. The kernel of theory was disseminated the way most science is now disseminated—through lectures and preprints. Feigenbaum described his work at conferences, and requests for photocopies of his papers came in by the score and then by the hundred.

MODERN ECONOMICS RELIES HEAVILY on the efficient market theory. Knowledge is assumed to flow freely from place to place. The people making important decisions are supposed to have access to more or less the same body of information. Of course, pockets of ignorance or inside information remain here and there, but on the whole, once knowledge is public, economists assume that it is known everywhere. Historians of science often take for granted an efficient market theory of their own. When a discovery is made, when an idea is expressed, it is assumed to become the common property of the scientific world. Each discovery and each new insight builds on the last. Science rises like a building, brick by brick. Intellectual chronicles can be, for all practical purposes, linear.

That view of science works best when a well-defined discipline awaits the resolution of a well-defined problem. No one misunderstood the discovery of the molecular structure of DNA, for example. But the history of ideas is not always so neat. As nonlinear science arose in odd corners of different disciplines, the flow of ideas failed to follow the standard logic of historians. The emergence of chaos as an entity unto itself was a story not only of new theories and new discoveries, but also of the belated understanding of old ideas. Many pieces of the puzzle had been seen long before—by Poincaré, by Maxwell, even by Einstein—and then forgotten. Many new pieces were understood at first only by a few insiders. A mathematical discovery was understood by mathematicians, a physics discovery by physicists, a meteorological discovery by no one. The way ideas spread became as important as the way they originated.

Each scientist had a private constellation of intellectual parents. Each had his own picture of the landscape of ideas, and each picture was limited in its own way. Knowledge was imperfect. Scientists were biased by the customs of their disciplines or by the accidental paths of their own educations. The scientific world can be surprisingly finite. No committee of scientists pushed history into a new channel—a handful of individuals did it, with individual perceptions and individual goals.

Afterwards, a consensus began to take shape about which innovations and which contributions had been most influential. But the consensus involved a certain element of revisionism. In the heat of discovery, particularly during the late 1970s, no two physicists, no two mathematicians understood chaos in exactly the same way. A scientist accustomed to classical systems without friction or dissipation would place himself in a lineage descending from Russians like A. N. Kolmogorov and V. I. Arnold. A mathematician accustomed to classical dynamical systems would envision a line from Poincaré to Birkhoff to Levinson to Smale. Later, a mathematician’s constellation might center on Smale, Guckenheimer, and Ruelle. Or it might emphasize a computationally inclined set of forebears associated with Los Alamos: Ulam, Metropolis, Stein. A theoretical physicist might think of Ruelle, Lorenz, Rössler, and Yorke. A biologist would think of Smale, Guckenheimer, May, and Yorke. The possible combinations were endless. A scientist working with materials—a geologist or a seismologist—would credit the direct influence of Mandelbrot; a theoretical physicist would barely acknowledge knowing the name.

Feigenbaum’s role would become a special source of contention. Much later, when he was riding a crest of semicelebrity, some physicists went out of their way to cite other people who had been working on the same problem at the same time, give or take a few years. Some accused him of focusing too narrowly on a small piece of the broad spectrum of chaotic behavior. “Feigenbaumology” was overrated, a physicist might say—a beautiful piece of work, to be sure, but not as broadly influential as Yorke’s work, for example. In 1984, Feigenbaum was invited to address the Nobel Symposium in Sweden, and there the controversy swirled. Benoit Mandelbrot gave a wickedly pointed talk that listeners later described as his “antifeigenbaum lecture.” Somehow Mandelbrot had exhumed a twenty-year-old paper on period-doubling by a Finnish mathematician named Myrberg, and he kept describing the Feigenbaum sequences as “Myrberg sequences.”

But Feigenbaum had discovered universality and created a theory to explain it. That was the pivot on which the new science swung. Unable to publish such an astonishing and counterintuitive result, he spread the word in a series of lectures at a New Hampshire conference in August 1976, an international mathematics meeting at Los Alamos in September, a set of talks at Brown University in November. The discovery and the theory met surprise, disbelief, and excitement. The more a scientist had thought about nonlinearity, the more he felt the force of Feigenbaum’s universality. One put it simply: “It was a very happy and shocking discovery that there were structures in nonlinear systems that are always the same if you looked at them the right way.” Some physicists picked up not just the ideas but also the techniques. Playing with these maps—just playing—gave them chills. With their own calculators, they could experience the surprise and satisfaction that had kept Feigenbaum going at Los Alamos. And they refined the theory. Hearing his talk at the Institute for Advanced Study in Princeton, Predrag Cvitanović, a particle physicist, helped Feigenbaum simplify his theory and extend its universality. But all the while, Cvitanović pretended it was just a pastime; he could not bring himself to admit to his colleagues what he was doing.

Among mathematicians, too, a reserved attitude prevailed, largely because Feigenbaum did not provide a rigorous proof. Indeed, not until 1979 did proof come on mathematicians’ terms, in work by Oscar E. Lanford III. Feigenbaum often recalled presenting his theory to a distinguished audience at the Los Alamos meeting in September. He had barely begun to describe the work when the eminent mathematician Mark Kac rose to ask: “Sir, do you mean to offer numerics or a proof?”

More than the one and less than the other, Feigenbaum replied.

“Is it what any reasonable man would call a proof?”

Feigenbaum said that the listeners would have to judge for themselves. After he was done speaking, he polled Kac, who responded, with a sardonically trilled r: “Yes, that’s indeed a reasonable man’s proof. The details can be left to the r-r-rigorous mathematicians.”

A movement had begun, and the discovery of universality spurred it forward. In the summer of 1977, two physicists, Joseph Ford and Giulio Casati, organized the first conference on a science called chaos. It was held in a gracious villa in Como, Italy, a tiny city at the southern foot of the lake of the same name, a stunningly deep blue catchbasin for the melting snow from the Italian Alps. One hundred people came—mostly physicists, but also curious scientists from other fields. “Mitch had seen universality and found out how it scaled and worked out a way of getting to chaos that was intuitively appealing,” Ford said. “It was the first time we had a clear model that everybody could understand.

“And it was one of those things whose time had come. In disciplines from astronomy to zoology, people were doing the same things, publishing in their narrow disciplinary journals, just totally unaware that the other people were around. They thought they were by themselves, and they were regarded as a bit eccentric in their own areas. They had exhausted the simple questions you could ask and begun to worry about phenomena that were a bit more complicated. And these people were just weepingly grateful to find out that everybody else was there, too.”

LATER, FEIGENBAUM LIVED in a bare space, a bed in one room, a computer in another, and, in the third, three black electronic towers for playing his solidly Germanic record collection. His one experiment in home furnishing, the purchase of an expensive marble coffee table while he was in Italy, had ended in failure; he received a parcel of marble chips. Piles of papers and books lined the walls. He talked rapidly, his long hair, gray now mixed with brown, sweeping back from his forehead. “Something dramatic happened in the twenties. For no good reason physicists stumbled upon an essentially correct description of the world around them—because the theory of quantum mechanics is in some sense essentially correct. It tells you how you can take dirt and make computers from it. It’s the way we’ve learned to manipulate our universe. It’s the way chemicals are made and plastics and what not. One knows how to compute with it. It’s an extravagantly good theory—except at some level it doesn’t make good sense.

“Some part of the imagery is missing. If you ask what the equations really mean and what is the description of the world according to this theory, it’s not a description that entails your intuition of the world. You can’t think of a particle moving as though it has a trajectory. You’re not allowed to visualize it that way. If you start asking more and more subtle questions—what does this theory tell you the world looks like?—in the end it’s so far out of your normal way of picturing things that you run into all sorts of conflicts. Now maybe that’s the way the world really is. But you don’t really know that there isn’t another way of assembling all this information that doesn’t demand so radical a departure from the way in which you intuit things.

“There’s a fundamental presumption in physics that the way you understand the world is that you keep isolating its ingredients until you understand the stuff that you think is truly fundamental. Then you presume that the other things you don’t understand are details. The assumption is that there are a small number of principles that you can discern by looking at things in their pure state—this is the true analytic notion—and then somehow you put these together in more complicated ways when you want to solve more dirty problems. If you can.

“In the end, to understand you have to change gears. You have to reassemble how you conceive of the important things that are going on. You could have tried to simulate a model fluid system on a computer. It’s just beginning to be possible. But it would have been a waste of effort, because what really happens has nothing to do with a fluid or a particular equation. It’s a general description of what happens in a large variety of systems when things work on themselves again and again. It requires a different way of thinking about the problem.

“When you look at this room—you see junk sitting over there and a person sitting over here and doors over there—you’re supposed to take the elementary principles of matter and write down the wave functions to describe them. Well, this is not a feasible thought. Maybe God could do it, but no analytic thought exists for understanding such a problem.

“It’s not an academic question any more to ask what’s going to happen to a cloud. People very much want to know—and that means there’s money available for it. That problem is very much within the realm of physics and it’s a problem very much of the same caliber. You’re looking at something complicated, and the present way of solving it is to try to look at as many points as you can, enough stuff to say where the cloud is, where the warm air is, what its velocity is, and so forth. Then you stick it into the biggest machine you can afford and you try to get an estimate of what it’s going to do next. But this is not very realistic.”

He stubbed out one cigarette and lit another. “One has to look for different ways. One has to look for scaling structures—how do big details relate to little details. You look at fluid disturbances, complicated structures in which the complexity has come about by a persistent process. At some level they don’t care very much what the size of the process is—it could be the size of a pea or the size of a basketball. The process doesn’t care where it is, and moreover it doesn’t care how long it’s been going. The only things that can ever be universal, in a sense, are scaling things.

“In a way, art is a theory about the way the world looks to human beings. It’s abundantly obvious that one doesn’t know the world around us in detail. What artists have accomplished is realizing that there’s only a small amount of stuff that’s important, and then seeing what it was. So they can do some of my research for me. When you look at early stuff of Van Gogh there are zillions of details that are put into it, there’s always an immense amount of information in his paintings. It obviously occurred to him, what is the irreducible amount of this stuff that you have to put in. Or you can study the horizons in Dutch ink drawings from around 1600, with tiny trees and cows that look very real. If you look closely, the trees have sort of leafy boundaries, but it doesn’t work if that’s all it is—there are also, sticking in it, little pieces of twiglike stuff. There’s a definite interplay between the softer textures and the things with more definite lines. Somehow the combination gives the correct perception. With Ruysdael and Turner, if you look at the way they construct complicated water, it is clearly done in an iterative way. There’s some level of stuff, and then stuff painted on top of that, and then corrections to that. Turbulent fluids for those painters is always something with a scale idea in it.

“I truly do want to know how to describe clouds. But to say there’s a piece over here with that much density, and next to it a piece with this much density—to accumulate that much detailed information, I think is wrong. It’s certainly not how a human being perceives those things, and it’s not how an artist perceives them. Somewhere the business of writing down partial differential equations is not to have done the work on the problem.

“Somehow the wondrous promise of the earth is that there are things beautiful in it, things wondrous and alluring, and by virtue of your trade you want to understand them.” He put the cigarette down. Smoke rose from the ashtray, first in a thin column and then (with a nod to universality) in broken tendrils that swirled upward to the ceiling.