Denying to the Grave: Why We Ignore the Facts That Will Save Us - Sara E Gorman, Jack M Gorman (2016)

Introduction

ON OCTOBER 8, 2014, THOMAS ERIC DUNCAN DIED IN A Dallas hospital from Ebola virus infection. From the moment he was diagnosed with Ebola newspapers all over the country blared the news that the first case of Ebola in the United States had occurred. Blame for his death was immediately assigned to the hospital that cared for him and to the U.S. Centers for Disease Control and Prevention (CDC).1 By the end of the month a total of four cases had developed in the United States: two in people—including Duncan—who acquired it in African countries where the disease was epidemic and two in nurses who cared for Duncan. Headlines about Ebola continued to dominate the pages of American newspapers throughout the month, warning of the risk we now faced of this deadly disease. These media reports were frightening and caused some people to wonder if it was safe to send their children to school or ride on public transportation—even if they lived miles from any of the four cases. “There are reports of kids being pulled out of schools and even some school closings,” reported Dean Baker in the Huffington Post. “People in many areas are not going to work and others are driving cars rather than taking mass transit because they fear catching Ebola from fellow passengers. There are also reports of people staying away from stores, restaurants, and other public places.”2 An elementary school in Maine suspended a teacher because she stayed in a hotel in Dallas that is 9.5 miles away from the hospital where two nurses contracted the virus.3 As Charles Blow put it in his New York Times column, “We aren’t battling a virus in this country as much as a mania, one whipped up by reactionary politicians and irresponsible media.”4

It turns out that Thomas Duncan was not the only person who died in the United States on October 8, 2014. If we extrapolate from national annual figures, we can say that on that day almost 7,000 people died in the United States. About half of them died of either heart disease or cancer; 331 from accidents, of which automobile accidents are the most common; and 105 by suicide.5 About 80 people were killed by gunshot wounds the day that Thomas Eric Duncan died, two thirds of which were self-inflicted. NPR correspondent Michaeleen Doucleff made a rough calculation and determined that the risk of contracting Ebola in the United States was 1 in 13.3 million, far less than the risk of dying in a plane crash, from a bee sting, by being struck by lightning, or being attacked by a shark.6 The chance of being killed in a car crash is about 1,500 times greater than the risk of getting infected with the Ebola virus in the United States.
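The comparison above can be turned into an implied figure for car crashes. The short Python sketch below is only an illustration: it takes the two numbers quoted in the text (a 1 in 13.3 million Ebola risk and a factor of about 1,500) at face value, and the function and variable names are hypothetical, not drawn from any cited source.

```python
# Figures quoted in the text; treated here as given, not independently verified.
EBOLA_ONE_IN = 13_300_000      # risk of contracting Ebola in the U.S.: 1 in 13.3 million
CAR_CRASH_MULTIPLIER = 1_500   # car-crash death risk is "about 1,500 times greater"


def implied_one_in(base_one_in: float, multiplier: float) -> float:
    """Convert a '1 in N' risk that is `multiplier` times larger into its own '1 in M' form."""
    return base_one_in / multiplier


car_crash_one_in = implied_one_in(EBOLA_ONE_IN, CAR_CRASH_MULTIPLIER)
print(f"Implied car-crash risk: about 1 in {car_crash_one_in:,.0f}")
```

Expressed this way, a risk that sounds abstract as a multiplier becomes a number in the thousands rather than the millions.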

It probably comes as no surprise that there were very few front-page headlines about bee stings or shark attacks on October 8, 2014, let alone about car crashes and gun violence. Perhaps this seems natural. After all, Ebola virus infection, the cause of Ebola hemorrhagic fever (EHF), is unusual in the United States, whereas heart attacks, cancer, car crashes, suicide, and murder happen on a regular basis. The problem, however, with the media emphasizing (and ordinary people fearing) Ebola over other far more prevalent health threats is that it shapes our behavior, sometimes in dangerous ways. How many people smoked a cigarette or consumed a sugary breakfast cereal while reading about the threat they faced in catching Ebola?

Some Americans jumped on the blame game bandwagon, attacking the CDC, insisting that politicians and hospitals had dropped the ball, and accusing African countries of ignorant, superstitious behavior. In Washington, DC, one-quarter of the members of Trinity Episcopal Church stopped attending services because they feared that some fellow parishioners might have traveled to West Africa.7 An Ohio bridal shop owner reported losing tens of thousands of dollars because Amber Vinson, one of two Dallas-based nurses who tested positive for Ebola, had visited her shop. The owner closed the store during the incubation period and had the store professionally cleaned, but “a string of frightened customers cancelled their orders.”8 According to a presidential commission report, “Americans focused on their own almost nonexistent risk of catching Ebola from travelers instead of pressing to help the truly affected nations.”9 It is quite likely that most of these frightened Americans did not increase their exercise, cut down on eating processed foods, fasten their seat belts, quit smoking, make sure guns in the home were unloaded in locked cases, or get help for depression and alcoholism. The novelty of Ebola was exciting, like watching a horror movie. Those other causes of death are familiar and boring. In other words, Americans acted in an irrational way, overestimating the risk of Ebola and underestimating the risk of the life-threatening phenomena about which they might be able to do something. As Sara pointed out in an article she wrote that month, “What is the most effective treatment for Americans to protect themselves from early death by an infectious pathogen? A flu shot.”10

Fear of Ebola “gripped the country” after Dr. Craig Spencer was diagnosed with Ebola and was admitted to New York City’s Bellevue Hospital.11 Comparing this dramatic fear about something that is not a real health threat—Ebola infection in the United States—to the more muted concern about things that really threaten our health—lack of exercise, smoking, eating sugary foods, drinking too much alcohol, not wearing seat belts, owning guns—illustrates our persistent failure to use scientific evidence in making decisions about what we do to maintain and improve our health and our lives. This drives scientists, healthcare experts, and public health officials nuts. When an educated parent refuses to vaccinate her child because she fears the vaccination will do more harm than good, these experts decry the “ignorance” of that stance and cite the reams of evidence that prove the contrary is the case. Time after time, we make decisions about the health of ourselves and our families based on emotion rather than on analysis of the scientific data. The government uses taxpayer money to mount hugely expensive studies in order to prove that the science is correct, but people come up with more and more reasons to insist that the research is incomplete, biased, or simply wrong. Opposing camps accuse each other of deliberately plotting to harm the American public, of being bought off by special interests, and of stupidity. The data may be clear about who is right and who is wrong, but people make decisions that ignore or deny the evidence. This book is an attempt to elucidate why we do this and what we can do to make better decisions about our health and the health of our loved ones.

Why Do We Ignore or Deny Scientific Evidence?

It turns out that there are many reasons for refusing to acknowledge scientific evidence when making health decisions, but stupidity is not one of them. Very smart people take positions that are not remotely based on evidence. There is a direct relationship, for example, between intelligence and refusing to vaccinate a child: this unfortunate behavior is championed mainly by very well-educated and affluent people.12 What then causes smart people to make decisions and adopt positions that have no factual basis? That is the question with which this book wrestles.

The idea for this book took shape as Sara became increasingly involved in the world of public health. She was particularly mystified by the “anti-vaxxers,” people who promulgate the notion that immunizations are harmful, causing, among other things, autism. Nothing could be further from the truth. Immunization is one of the triumphs of modern medicine, having eliminated from our lives deadly diseases such as smallpox, measles, polio, and diphtheria. It is based on elegant scientific principles, has a remarkable safety record, and absolutely does not cause autism. How could anyone, Sara wondered, refuse to vaccinate a child?

At the same time Jack, who trained as a psychiatrist and worked as a scientist most of his career, was increasingly interested in the reasons people own guns. He thought at first it was for hunting and decided that just because it was a hobby that neither he nor any of his New York City friends pursued, it would be wrong to advocate that all the people who enjoy shooting animals in the woods be prevented from doing so. But a look at the data showed that a relatively small number of Americans are hunters and that most gun owners have weapons for “protection.” Yet studies show over and over again that a gun in the house is far more likely to be used to injure or kill someone who lives there than to kill an intruder. Gun availability is clearly linked to elevated risk for both homicide and suicide.13 Statistically speaking, the risks of having a gun at home are far greater than any possible benefits. Consider the tragic case of 26-year-old St. Louis resident Becca Campbell, who bought a gun reportedly to protect herself and her two daughters. Instead of protecting herself and her family from risks such as the Ferguson riots, she wound up accidentally shooting and killing herself with it.14

In both cases scientific evidence strongly suggests a position—vaccinate your child and don’t keep a gun at home—that many of us choose to ignore or deny. We make a distinction between “ignore” and “deny,” the former indicating that a person does not know the scientific evidence and the latter that he or she does but actively disagrees with it. We quickly generated a list of several other health and healthcare beliefs that fly directly in the face of scientific evidence and that are supported by at least a substantial minority of people:

✵Vaccination is harmful.

✵Guns in the house will protect residents from armed intruders.

✵Foods containing genetically modified organisms (GMOs) are dangerous to human health.

✵The human immunodeficiency virus (HIV) is not the cause of AIDS.

✵Nuclear power is more dangerous than power derived from burning fossil fuels.

✵Antibiotics are effective in treating viral infections.

✵Unpasteurized milk is safe and contains beneficial nutrients that are destroyed by the pasteurization process.

✵Electroconvulsive therapy (ECT, or shock treatment) causes brain damage and is ineffective.

Throughout this book we present evidence that none of these positions is correct. But our aim is not to exhaustively analyze scientific data and studies. Many other sources review in rigorous detail the evidence that counters each of these eight beliefs. Rather, our task is to try to understand why reasonably intelligent and well-meaning people believe them.

Many of the thought processes that allow us to be human, to have empathy, to function in society, and to survive as a species from an evolutionary standpoint can lead us astray when applied to what scientists refer to as scientific reasoning. Why? For starters, scientific reasoning is difficult for many people to accept because it precludes the ability to make positive statements with certainty. Scientific reasoning, rather, works by setting up hypotheses to be knocked down, makes great demands before causality can be demonstrated, and involves populations instead of individuals. In other words, in science we can never be 100% sure. We can only be very close to totally sure. This runs counter to the ways we humans are accustomed to thinking. Moreover, science works through a series of negations and disproving, while we are wired to resist changing our minds too easily. As a result, a multitude of completely healthy and normal psychological processes can conspire to make us prone to errors in scientific and medical thinking, leading to decisions that adversely affect our health.

These poor health decisions often involve adopting risky behaviors, including refusal to be vaccinated, consumption of unhealthy food, cigarette smoking, failure to adhere to a medication regimen, and failure to practice safe sex. We believe that these risk-taking behaviors stem in large part from complex psychological factors that often have known biological underpinnings. In this book we explore the psychology and neurobiology of poor health decisions and irrational health beliefs, arguing that in many cases the psychological impulses under discussion are adaptive (meaning, they evolved to keep us safe and healthy), but are often applied in a maladaptive way. We also argue that without proper knowledge of the psychological and biological underpinnings of irrational health decisions and beliefs, we as a society cannot design any strategy that will alleviate the problem. We therefore conclude by offering our own method of combatting poor health decision making, a method that takes into account psychology and neurobiology and that offers guidance on how to encourage people to adopt a more scientific viewpoint without discounting or trying to eliminate their valuable emotional responses.

We will assert many times that the problem is not simply lack of information, although that can be a factor. Irrational behavior occurs even when we know and understand all the facts. Given what we now understand about brain function, it is probably not even appropriate to label science denial as, strictly speaking, “irrational.” Rather, it is for the most part a product of the way our minds work. This means that simple education is not going to be sufficient to reverse science denial. Certainly, angry harangues at the “stupidity” of science denialists will only reify these attitudes. Take, for example, a recent article in The Atlantic that discussed affluent Los Angeles parents who refuse to have their children vaccinated, concluding, “Wealth enables these people to hire fringe pediatricians who will coddle their irrational beliefs. But it doesn’t entitle them to threaten an entire city’s children with terrifying, 19th-century diseases for no reason.”15 This stance is unhelpful because it attempts to shame people into changing their beliefs and behaviors, a strategy that rarely works when it comes to health and medicine. As Canadian scientists Chantal Pouliot and Julie Godbout point out in their excellent article on public science education, when scientists think about communicating with nonscientists, they generally operate from the “knowledge deficit” model, the idea that nonscientists simply lack the facts. Evidence shows, these authors argue instead, that nonscientists are in fact capable of understanding “both the complexity of research and the uncertainties accompanying many technological and scientific developments.”16 Pouliot and Godbout call for educating scientists about social scientists’ research findings that show the public is indeed capable of grasping scientific concepts.

Each of the six chapters of this book examines a single key driver of science denial. Each one of them rests on a combination of psychological, behavioral, sociological, political, and neurobiological components. We do not insist that these are the only reasons for science denial, but through our own work and research we have come to believe that they are among the most important and the most prominent.

Just Because You’re Paranoid Doesn’t Mean People Aren’t Conspiring Against You

In chapter 1, “Conspiracy Theories,” we address the complicated topic of conspiracy theories. Complicated because a conspiracy theory is not prima facie wrong and indeed there have been many conspiracies that we would have been better off uncovering when they first occurred. Yet one of the hallmarks of false scientific beliefs is the claim by their adherents that they are the victims of profiteering, deceit, and cover-ups by conglomerates variously composed of large corporations, government regulatory agencies, the media, and professional medical societies. The trick is to figure out if the false ones can be readily separated from those in which there may be some truth.

Only by carefully analyzing a number of such conspiracy theories and their adherents does it become possible to offer some guidelines as to which are most obviously incorrect. More important for our purposes, we explore the psychology of conspiracy theory adherence. What do people get out of believing in false conspiracy theories? How does membership in a group of like-minded conspiracy theorists develop, and why is it so hard to persuade people that they are wrong? As is the case with every reason we give in this book for adherence to false scientific ideas, belittling people who come to believe in false conspiracy theories as ignorant or mean-spirited is perhaps the surest route to reinforcing an anti-science position.

Charismatic Leaders

Perhaps the biggest and most formidable opponents to rational understanding and acceptance of scientific evidence in the health field are what are known as charismatic leaders, our second factor in promoting science denial. In chapter 2, “Charismatic Leaders,” we give several profiles of leaders of anti-science movements and try to locate common denominators among them. We stress that most people who hold incorrect ideas about health and deny scientific evidence are well-meaning individuals who believe that they are doing the right thing for themselves and their families. We also stress that the very same mental mechanisms that we use to generate incorrect ideas about scientific issues often have an evolutionary basis, can be helpful and healthy in many circumstances, and may even make us empathic individuals.

When we deal with charismatic figures, however, we are often dealing with people for whom it is difficult to generate kind words. Although they may be true believers, most of them are people who should know better, who often distort the truth, and who may masquerade as selfless but in fact gain considerable personal benefit from promulgating false ideas. And, most important, what they do harms people.

There is a fascinating, albeit at times a bit frightening, psychology behind the appeal of these charismatic leaders that may help us be more successful in neutralizing their hold over others. Although many of them have impressive-sounding academic credentials, the factors that can make them seem believable may be as trivial as having a deep voice.17 Chapter 2 marks the end of a two-chapter focus on the psychology of groups and how it fosters anti-science beliefs.

If I Thought It Once, It Must Be True

Everyone looks for patterns in the environment. Once we find them, and if they seem to explain things, we become more and more convinced we need them. The ancients who thought the sun revolved around the earth were not stupid, nor were they entirely ruled by religious dogma that humans must be at the center of the universe. In fact, if you look up in the sky, that is at first what seems to be happening. After years of observation and calculation, the Greek astronomer Ptolemy devised a complicated mathematical system to explain the rotation of stars around the earth. It is not a simple explanation but rather the work of a brilliant mind using all of the tools available to him. Of course, it happens to be wrong. But once it was written down and taught to other astronomers it became hard to give up. Rather, as new observations came in that may have challenged the “geocentric” model, astronomers were apt to do everything possible to fit them into the “earth as the center of the universe” idea. That is, they saw what they believed.

It took another set of geniuses—Copernicus, Kepler, and Galileo—and new inventions like the telescope to finally dislodge the Ptolemaic model.

Once any such belief system is in place, it becomes the beneficiary of what is known as the confirmation bias. Paul Slovic captured the essence of confirmation bias, the subject of chapter 3, very well:

It would be comforting to believe that polarized positions would respond to informational and educational programs. Unfortunately, psychological research demonstrates that people’s beliefs change slowly and are extraordinarily persistent in the face of contrary evidence (Nisbett & Ross, 1980). Once formed, initial impressions tend to structure the way that subsequent evidence is interpreted. New evidence appears reliable and informative if it is consistent with one’s initial beliefs; contrary evidence is dismissed as unreliable, erroneous or unrepresentative.18

Does this sound familiar? We can probably all think of examples in our lives in which we saw things the way we believed they should be rather than as they really were. For many years, for example, Jack believed that antidepressant medications were superior to cognitive behavioral therapy (CBT) in the treatment of an anxiety condition called panic disorder. That is what his professors insisted was true and it was part of being an obedient student to believe them. When studies were published that showed that CBT worked, he searched hard for flaws in the experimental methods or interpretation of those studies in order to convince himself that the new findings were not sufficient to dislodge his belief in medication’s superiority. Finally, he challenged a CBT expert, David Barlow, now of Boston University, to do a study comparing the two forms of treatment and, to his surprise, CBT worked better than medication.19

Fortunately, Jack let the data have its way and changed his mind, but until that point he worked hard to confirm his prior beliefs. This happens all the time in science and is the source of some very passionate scientific debates and conflicts in every field. It also turns out that neuroscientists, using sophisticated brain imaging techniques, have been able to show the ways in which different parts of the brain respond to challenges to fixed ideas with either bias or open-mindedness. The challenge for those of us who favor adherence to scientific evidence is to support the latter. In this effort we are too often opposed by anti-science charismatic leaders.

If A Came Before B, Then A Caused B

Uncertainty, as we explain in chapter 4, “Causality and Filling the Ignorance Gap,” is uncomfortable and even frightening to many people. It is a natural tendency to want to know why things are as they are, and this often entails figuring out what caused what. Once again, this tendency is almost certainly an evolutionarily conserved, protective feature—when you smell smoke in your cave you assume there is a fire causing it and run; you do not first consider alternative explanations or you might not survive. We are forced to assign causal significance to things we observe every day. If you hear the crash of a broken glass and see your dog running out of the room, you reach the conclusion immediately that your dog has knocked a glass off a tabletop. Of course, it is possible that something else knocked the glass off and frightened the dog into running, what epidemiologists might call reverse causation, but that would be an incorrect conclusion most of the time. Without the propensity to assign causality we would once again get bogged down in all kinds of useless considerations and lose our ability to act.

In many ways, however, the causality assumption will fail us when we are considering more complicated systems and issues. This is not a “chicken and egg” problem; that is a philosophical and linguistic conundrum that has no scientific significance. We know that chickens hatch from eggs and also lay eggs so that neither the chicken nor egg itself actually came first but rather evolutionarily more primitive life forms preceded both. By causality we are talking here instead about the way in which the scientific method permits us to conclude that something is the cause of something else. If you look again at our list of examples of anti-science views, you can see that a number of them are the result of disputes over causality. There is no question, for example, that HIV causes AIDS, and we are similarly certain that vaccines do not cause autism. Yet there are individuals and groups that dispute these clear scientific facts about causality.

If one has a child with autism the temptation to find a cause for it is perfectly understandable. The uncertainty of why a child has autism is undoubtedly part of the heartbreak faced by parents. Many initially blame themselves. Perhaps the mom should not have eaten so much fried food during pregnancy. Or maybe it is because the dad has a bad gene or was too old to father a child. Guilt sets in, adding to the anguish of having a child who will struggle throughout his or her life to fit in. Such parents in distress become highly prone to leap to a causality conclusion if one becomes available, especially if it allows them to be angry at someone else instead of themselves. Once an idea like “vaccines cause autism” is circulated and supported by advocacy groups it becomes very hard for scientists to dislodge it by citing data from all the studies showing that the conclusion is wrong. So the tendency to want or need to find causal links will often lead us to incorrect or even dangerous scientific conclusions.

It’s Complicated

What is it about Ebola that makes it an epidemic in some West African countries like Liberia, Guinea, and Sierra Leone but a very small threat in America? Why, on the other hand, is the flu virus a major threat to the health of Americans, responsible for thousands of deaths in the last 30 years? The answer lies in the biology of these viruses. The Ebola virus, like HIV and influenza, is an RNA virus. Once inside a human cell, it takes over the host cell’s machinery to replicate and then moves on to infect more host cells. Its main reservoir is the fruit bat. Most important, it is spread by contact with bodily fluids of an infected individual;20 it is not, like flu or chicken pox, an airborne virus. This is a very important point: one can catch the flu from someone merely by sitting nearby and inhaling the air into which he or she coughs or sneezes. A person can infect another person with the flu from as far as six feet away. Ebola does not spread that way. One has to handle the blood, urine, feces, or vomit of someone with Ebola in order to get it. Hence, the chance of spreading flu is much greater than the chance of spreading Ebola. The people most at risk for contracting Ebola are healthcare workers and family members who care for infected people. The incubation period for the Ebola virus is between 2 and 21 days, and an individual is not contagious until he or she becomes symptomatic. This is also different from the flu, which can be spread a day before a person becomes symptomatic. Thus, we know when a person with Ebola is contagious; we may not if the person has the flu.

Did you get all of that? Those are the facts. Do you now understand the difference between RNA and DNA viruses or the mechanisms by which viruses infect cells and replicate, or even exactly what replication is? Is the difference between bodily fluids and airborne infection completely clear, and do you understand what we mean by incubation period? Don’t be surprised if you don’t. These terms are second nature to doctors and most scientists, but other educated people probably don’t think about them very much. Just as most doctors do not understand what cost-based accounting entails, most accountants are not expert virologists. In other words, the details of what Ebola is and does are complicated. Despite the common refrain that we are in a new era of information brought on by the World Wide Web, our brains are still not programmed to welcome technical and complex descriptions. You may have read the paragraph before this one quickly and committed to memory only words like HIV, bats, infect, symptomatic, and spread. Thus, if you are like most people you can read that paragraph and walk away only thinking, incorrectly, that “Ebola is infectious and you can get it from another person sitting next to you on the train.”

Chapter 5, “Avoidance of Complexity,” deals with another reason people succumb to unscientific notions—the discomfort we all have with complexity. It isn’t that we are incapable of learning the facts but rather we are reluctant to put in the time and effort to do so. This may especially be the case when science and math are involved because many people believe, often incorrectly, that they are incapable of understanding those disciplines except in their most basic aspects. As we explain when we discuss some aspects of brain function, our minds gravitate naturally to clear and simple explanations of things, especially when they are laced with emotional rhetoric. We are actually afraid of complexity. Our natural instinct is to try to get our arms around an issue quickly, not to “obsess” or get caught in the weeds about it. As we indicate throughout this book, this retreat from complexity is similar to the other reasons for science denial in that it is in many ways a useful and adaptive stance. We would be paralyzed if we insisted on understanding the technical details of everything in our lives. One need have very little idea about how a car engine works in order to get in and drive; music can be enjoyed without fully understanding the relationships among major and minor keys and the harmonics of stringed instruments; a garden can be planted without an ability to write down all the chemical steps involved in photosynthesis and plant oxygen exchange. Furthermore, when we have to make urgent decisions it is often best not to dwell on the details. Emergencies are best handled by people who can act, not deliberate.

But when making health decisions, the inability to tackle scientific details can leave us prone to accepting craftily packaged inaccuracies and slogans. Scientists, doctors, and public health experts are often not helpful in this regard because they frequently refuse to explain things clearly and interestingly. Scientists seem to waver between overly complicated explanations that only they can fathom and overly simplistic explanations that feel patronizing and convey minimal useful information. Experts are also prone to adhering to simplistic and incorrect ideas when the truth is complicated.

In fact, as we argue in chapter 5, scientists need to work much harder on figuring out the best ways to communicate facts to nonscientists. This must begin by changing the way we teach science to children, from one of pure memorization to an appreciation of the way science actually works. By focusing on the scientific method, we can begin to educate people about how to accept complexity and uncertainty, how to be skeptical, and how to ask the right questions.

Who’s Afraid of the Shower?

Chapter 6, “Risk Perception and Probability,” focuses on something that is highlighted by the puzzle described in the opening passages of this book, about Ebola virus infection: an inability to appreciate true risk. The chance of getting Ebola virus in America is very small, but the attention it got and the fear it generated in this country have been enormous. On the one hand, then, we know the true risk of contracting Ebola, somewhere around 1 in several million. On the other hand, we perceive the risk as something approaching enormous. Thanks largely to the work of Daniel Kahneman and other behavioral economists, we now have a substantial amount of empirical evidence about how people form and hang on to their ideas about risk. For the most part, they do it very differently from how statisticians do it. A public health statistician might tell us that the risk of dying from a fall is 1 in 152 whereas the risk of dying in an airplane crash is 1 in 8,321.21 That is a relative risk of about 0.018, meaning that the risk of dying from a fall is roughly 55 times greater than the risk of perishing in an airplane disaster. But psychologists and behavioral economists tell us that our understanding of what these numbers mean is not straightforward. Sure, everyone can understand from this that a person is more likely to be killed slipping in the shower or falling down the stairs than flying in an airplane. But numbers like 152 and 8,321 do not have much significance for us other than to signify that one is bigger than the other.
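For readers who want to check the arithmetic, the ratio of the two quoted risks (1 in 152 for a fall, 1 in 8,321 for an airplane crash) can be computed directly. This is a minimal sketch; the function name is hypothetical, and "relative risk" is used here simply as the ratio of one probability to the other.

```python
def relative_risk(one_in_a: float, one_in_b: float) -> float:
    """Ratio of risk A to risk B, where each risk is given as '1 in N'."""
    return (1 / one_in_a) / (1 / one_in_b)


# Airplane-crash risk relative to fall risk, using the figures quoted in the text.
plane_vs_fall = relative_risk(8_321, 152)

# The inverse says how many times more likely a fatal fall is.
fall_vs_plane = 1 / plane_vs_fall

print(f"Relative risk (plane vs. fall): {plane_vs_fall:.3f}")
print(f"A fatal fall is about {fall_vs_plane:.0f} times more likely")
```

The two printed values are reciprocals of each other, which is a quick consistency check on any pair of "relative risk" and "times greater" figures.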

A lot more goes into our perception of risk than the numbers that describe the actual threat. As we mentioned earlier, the human mind is naturally biased in favor of overestimating small risks and underestimating large risks. The former is the reason we buy lottery tickets and buy insurance. The latter is the reason we neglect getting a flu shot or fastening our seat belt. The decision to keep a gun in the house is another example. As Daniel Kahneman recently wrote to us, “The gun safety issue is straightforward. When you think of the safety of your home, you assume an attack. Conditional on being an attack, the gun makes you safe. No one multiplies that conditional probability by the very low probability of an attack, to compare that with the probability of an accident, which is clearly low.”22 Refusing to vaccinate a child is a classic example of this: those who fear immunization exaggerate the very, very small risk of an adverse side effect and underestimate the devastation that occurs during a measles outbreak or just how deadly whooping cough (pertussis) can be. We also get fooled by randomness—believing that something that happened by mere chance is actually a real effect.23 Thus, if a child is vaccinated one day and gets a severe viral illness three days later we are prone to think the vaccine caused the illness, even though the two events probably happened in close proximity purely by chance. Everyone makes this mistake.

Convincing people about the relative risks of things without making them experts in biostatistics is a difficult task. And here, a little learning can be a dangerous thing. Jack recently asked a group of very educated and successful people whether the fact that there is a 1 in 1,000 chance of falling in the shower made them feel reassured or nervous. Most of them said reassured because they thought that, like a coin flip, each time they get into the shower they have the same very small risk of falling. They had absorbed the classic explanation of independent events, taught on the first day of every statistics class, represented by the odds of getting a heads or tails each time we flip a coin. But they were actually wrong. If we ask not what are the odds of tossing heads on each coin flip, which is always 1 in 2 (50%) because each toss is an independent event, but rather what are the odds of flipping at least 1 head in 100 coin flips, we get a very different answer: virtually 100%. Similarly, if the risk of falling in the shower is 1/1,000 each time and you take a shower every day, you have about a two-in-three chance of falling at least once within 3 years. So please be careful. And clearly, trying to help people understand what is and isn’t really a risk to their health requires considerable effort and novel approaches.
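For readers who want to check the arithmetic, here is a minimal sketch of the calculation. It assumes, as the coin-flip analogy does, that every shower is an independent event with the same 1 in 1,000 risk; the function name `prob_at_least_once` is ours and purely illustrative.

```python
def prob_at_least_once(p_single: float, n_trials: int) -> float:
    """Chance that an event with per-trial probability p_single
    happens at least once in n_trials independent trials."""
    # The event fails to happen on every trial with probability
    # (1 - p_single) ** n_trials; "at least once" is the complement.
    return 1.0 - (1.0 - p_single) ** n_trials


# At least one head in 100 fair coin flips.
coin = prob_at_least_once(0.5, 100)

# At least one fall in 3 years of daily showers (assumed 1/1,000 risk each).
shower = prob_at_least_once(1 / 1000, 3 * 365)

print(f"At least one head in 100 flips: {coin:.6f}")
print(f"At least one fall in 3 years:   {shower:.3f}")
```

The per-event risk never changes, yet the cumulative risk grows with every repetition, which is the distinction the group missed.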

The last chapter in this book, “Conclusion,” presents some of our recommendations for improving the recognition and use of scientific information to make health decisions. These follow naturally from our understanding of the factors that we argue contribute so much to science denial. But there is another set of overarching recommendations that we think best presented at the outset. These have to do with the need for vastly improved science education, science journalism, research into what will help nonscientists understand the nature of scientific evidence, and the recognition of all aspects of the conflict of interest conundrum. Although we will touch on these topics at many points throughout the book, we will advance our ideas on them now.

Our Desperate Need for Scientific First Responders

Analyzing the reasons for science denial in detail leads to the question of what can be done to reverse it. Clearly, new and research-proven approaches to educating the public are urgently needed if we are going to accomplish this. We have used the African Ebola epidemic (which did not, and will not, become a North American epidemic) as a recent example of public misperception about scientific evidence that ultimately proved harmful. Because of public hysteria, African Americans and Africans visiting the United States were shunned, abused, and even beaten.24 It was initially proposed that in return for their brave service in Africa treating the victims of Ebola, healthcare workers returning home to the United States should be quarantined for a minimum of 21 days. When Dr. Craig Spencer returned to New York City after treating Ebola patients in Guinea, his fiancée and two friends with whom he had had contact were ordered into quarantine for 21 days, his apartment was sealed off, and a bowling alley he had been to in Brooklyn was shuttered. Much of this was an overreaction based on fear rather than science. Fortunately, Dr. Spencer survived.25

Ebola is not a public health crisis in the United States, but it does represent a public relations disaster for public health, medicine, and science. Because scientists understood that it would be highly unlikely for Ebola to get a foothold here given its mode of transmission, which makes it a not very contagious organism, they knew that the proper response was to fight the infection where it was really an epidemic, in Africa. Craig Spencer, in a very moving article he wrote after his discharge from the hospital, laments the fact that our attention was misplaced when media and politicians focused on the nonexistent threat to Americans. “Instead of being welcomed as respected humanitarians,” he wrote, “my U.S. colleagues who have returned home from battling Ebola have been treated as pariahs.”26 That was wrong from two points of view. The first is, of course, a moral one—America has the most advanced medical technology in the world and we have an ethical obligation to use what we know to save the lives of people who are unable to access the benefits of modern medical science. Fulfilling this obligation is of course a complex endeavor and beyond the scope of this book, but we cannot avoid being painfully aware that Americans did not care very much about Ebola when it was limited to Liberia, Sierra Leone, Guinea, and other West African countries.

The second reason it was wrong to ignore the Ebola epidemic in Africa is more germane to our concerns here. It was always clear that a few cases of Ebola would show up in this country. Remember that we have been fed a steady diet of movies, books, and television programs in recent years in which lethal viruses suddenly emerge that kill millions of people in days and threaten to eliminate humanity. In those dramatic depictions (which usually have only a tenuous relationship to actual biology and epidemiology), a handful of very physically attractive scientists, who know better than the rest of the scientific community, courageously intervene to figure out how to stop the viral plague and save the human race. These are the kinds of things that scientists tend not to read or watch or even know about, but they should, because the rest of us see them all the time.

Therefore, given the fact that Ebola was going to crop up somewhere in the United States and that people are programmed to be frightened of it, the CDC should have initiated a public education campaign as soon as the African epidemic became known. Perhaps the organization did not do so because public health officials feared that acknowledging that Ebola would almost certainly come here would frighten people and cause hysteria. If so, it was a bad decision because fear and hysteria are exactly what emerged when Thomas Duncan got sick. Hence, weeks that could have been devoted to preparing Americans to make a sensible response were wasted. Craig Spencer recognized this when he wrote, “At times of threat to our public health, we need one pragmatic response, not 50 viewpoints that shift with the proximity of the next election.”27

An even more likely reason behind the failure of the CDC and other public health and medical agencies to be proactive in dealing with America’s response to Ebola is the sad fact that these groups generally do not see public education as their job. Over and over again we see the public health and scientific communities ignoring events that are guaranteed to frighten people. The scientists decide, usually correctly, that the popular explanations of such events are wrong (there is no danger) and therefore not worthy of their attention. Only after the false notions become embedded in many people’s minds does the scientific community mount any effort, usually a meager one, to counteract them.

A good example of this occurred when Brian Hooker published an online article on August 27, 2014. In this paper, which had no coauthors, Hooker took data assembled by the CDC and previously published28 that addressed the purported relationship between autism and vaccination and “re-analyzed” them. He used a very basic statistical test called the chi-square and concluded that African American males who had been given the MMR vaccine before age 36 months were more than 3 times more likely to have autism than African American males who received the vaccination after 36 months.

This is a shocking conclusion. Every piece of evidence previously reported failed to find any association between vaccinations and autism. Hooker claimed that “for genetic subpopulations” the MMR vaccine “may be associated with adverse events.” The story went viral on the Internet and throughout social media. Almost immediately the “news” that Hooker had discovered an association between an immunization and autism in African American males led to accusations that the CDC knew about this from the beginning and deliberately chose to hide it. So-called anti-vaxxers who had been maintaining all along that vaccinations cause autism and weaken the immune system of children felt they had finally gotten the scientific confirmation for which they had been longing. Moreover, a conspiracy theory was born and the generally admired CDC plastered with scorn.

The Hooker “analysis” is actually close to nonsensical. For a variety of technical reasons having to do with study methodology and statistical inference, some of which we will mention shortly, it has absolutely no scientific validity. Indeed, the journal editors who published it retracted the article on October 3, 2014, with the following explanation:

The Editor and Publisher regretfully retract the article as there were undeclared competing interests on the part of the author which compromised the peer review process. Furthermore, post-publication peer review raised concerns about the validity of the methods and statistical analysis, therefore the Editors no longer have confidence in the soundness of the findings. We apologize to all affected parties for the inconvenience caused.29

The “undeclared” conflict of interest is the well-known fact that Hooker, the father of a child with autism, is a board member of an organization called Focus Autism, which has campaigned incessantly to assert that vaccines cause autism. Many critics rushed to point out that Hooker has spent years addressing this topic but is not himself an expert in immunology, infectious diseases, autism, or any relevant discipline. He is a chemical engineer.

Importantly, the Hooker paper was ignored by the medical, scientific, and public health communities for days. The paper itself was accessed online frequently and became the subject of reports all over the media. Journalists debated its merits and tried to get comments from scientists at the CDC. Anti-vaxxer groups went wild with self-righteous glee. Here was the smoking gun they knew all along existed. And in the midst of this was virtual silence from the experts who knew immediately the paper by Hooker was simply incorrect.

The problem is that explaining why Hooker’s paper is wrong and should never have been published in the first place is a complicated task full of technical points. The original paper by DeStefano and colleagues in which these data were first reported in 2004 is not easy reading. In fact, nowhere in the paper do the exact words “We found that there is no relationship between the MMR vaccine and autism” occur.

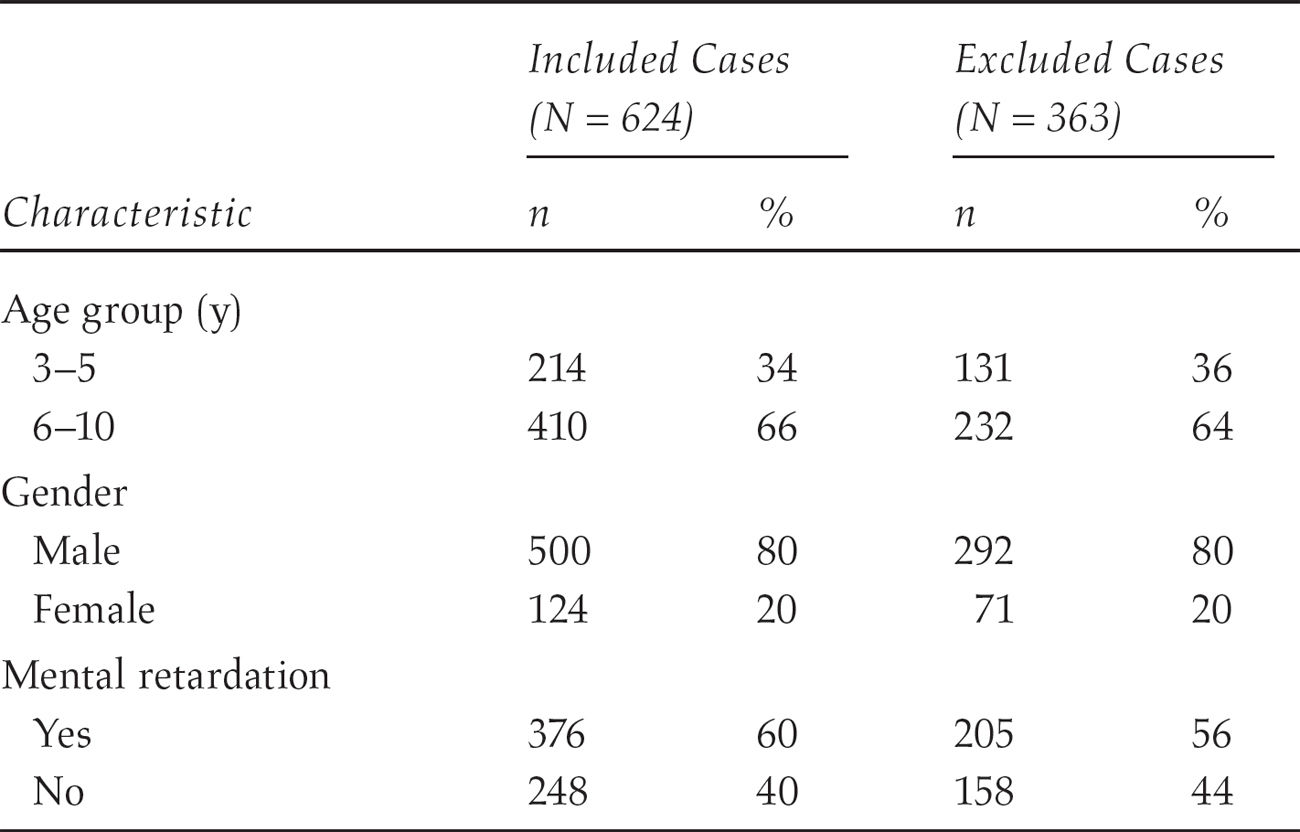

The original investigators’ hypothesis was that if MMR vaccinations caused autism, then children with autism should have received the MMR vaccine before the age when autism developed. Clearly, a vaccination given after a child has received a diagnosis of autism cannot be its cause. Therefore, more children with autism should have received the vaccine before age 36 months than children without autism. The results are therefore analyzed in terms of ages at which first vaccination was given, and according to figure 1 in the paper it is clear that there is no difference between the groups (see table 1). Thus the authors concluded, “In this population-based study in a large U.S. metropolitan area [Atlanta, Georgia], we found that the overall distribution of ages at first MMR vaccination among children with autism was similar to that of school-matched control children who did not have autism.” In other words, there was no evidence of any relationship between MMR vaccine and autism.

TABLE 1 Comparison of Demographic Characteristics and Cognitive Levels Between Included and Excluded Autism Case Children*

* Reproduced with permission from Pediatrics, 113, 259-266, Copyright © 2004, by the AAP.

To anyone with an advanced understanding of case-control study methods, hypothesis testing, and regression analysis, the DeStefano paper is very reassuring. To most people, however, it is probably opaque. There are multiple points at which a charlatan could seize upon the authors’ honest explanations of what they did and did not do, take them out of context, and distort the meaning of the paper. Hooker went even beyond this: he took the data and did what scientists and statisticians call “data massage.” If you take a big data set and query it enough times, for mathematical reasons it will sooner or later give a spurious result by chance. That is why it is improper to take a data set, chop it up into subgroups, and redo the analyses without correcting for the number of comparisons made. But that is essentially what Hooker did by looking separately at boys and girls and various ethnic groups. He also applied inappropriate statistical methods: simple chi-square tests are not the correct way to analyze data from a large case-control study like DeStefano’s.
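The “mathematical reasons” can be made concrete with a short sketch. It is not a reanalysis of the DeStefano data or of Hooker’s paper. It assumes a conventional significance threshold of 0.05, assumes there is no real effect in any subgroup, and treats the subgroup tests as independent, which real subgroups of one data set are not. All names in it are hypothetical.

```python
import random


def family_wise_error_rate(alpha: float, n_tests: int) -> float:
    """Chance of at least one false positive across n_tests
    independent tests when no real effect exists anywhere."""
    return 1.0 - (1.0 - alpha) ** n_tests


def simulate_spurious_findings(alpha: float, n_tests: int,
                               n_studies: int, seed: int = 0) -> float:
    """Fraction of simulated no-effect studies in which at least
    one subgroup test comes out 'significant' purely by chance."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        # With no real effect, each test's p-value is uniform on [0, 1).
        if any(rng.random() < alpha for _ in range(n_tests)):
            hits += 1
    return hits / n_studies


for k in (1, 5, 10, 14, 20):
    print(f"{k:2d} subgroup tests -> chance of a spurious 'finding': "
          f"{family_wise_error_rate(0.05, k):.2f}")

simulated = simulate_spurious_findings(0.05, 10, 20_000)
print(f"Simulated rate for 10 tests: {simulated:.2f}")
```

Under these simplified assumptions, slicing a data set into 14 or more subgroups makes a chance “finding” more likely than not, even when nothing real is there.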

It was not particularly difficult for us to explain these shortcomings in Hooker’s analysis. We could, if asked, make them simpler or more complex. Nothing would make us happier than to have the opportunity to explain technical aspects of the DeStefano paper over and over again until nonscientists understand what is going on here. But sadly, no one from the pediatric, epidemiology, or statistics communities jumped on the opportunity the minute the Hooker paper appeared. It is uncertain how many mothers and fathers of 2- and 3-year-old children who had been on the fence about vaccinating their children decided not to do so in the days after Hooker’s paper appeared online.

It has been customary for scientists, physicians, and public health experts to blame people when they follow anti-science notions. When the culprit is a charismatic leader who—with the stakes for the public so high—fails to adhere meticulously to accepted scientific norms, we agree that blame is exactly what needs to be assigned. But most people who believe science denialist ideas truly think they are protecting their and their children’s health. Clearly what is desperately needed is rigorously conducted research into the best methods to educate people about scientific issues. The results may be surprising, but waiting until we are gripped with fear and swayed by incorrect ideas is obviously not an option. We need a corps of “scientific first responders” to address science denialism the minute it appears.

But Science Is Boring … Right?

How can we make sure everyone is equipped to make decisions based on science? It is becoming abundantly apparent which actions do not work to improve everyone’s understanding of scientific evidence, and chief among them are lecturing, haranguing, and telling people not to be so emotional. Emotion is what makes us loving, empathic human beings, capable of working together in social groups, maintaining family units, and being altruistic and charitable. It is also what drives a great deal of our daily activity and powerfully influences how we make decisions. Turning it off is both unpleasant (sports fans know that in-depth analyses of every technicality during a basketball game become tedious and ruin the thrill of watching great athletes compete) and nearly impossible. Further, we are not interested in advocating that everyone become an automaton who obsesses over every decision and every action.

Rather, as we assert, it will be necessary to study and develop new strategies at two levels: one, to help school-age children actually like science and enjoy thinking like scientists, and two, to initiate serious research on the best way to convince adults that following scientific evidence in making choices is in their best interests. In chapter 5 on complexity we make clear that the current method of teaching science, which largely relies on forcing children to memorize facts and perform boring cookbook-style “experiments,” is guaranteed to make most of them hate the subject. Furthermore, the present pedagogical approach does not give children a sense of how scientists work, what the scientific method looks like in action, or how to understand what constitutes scientific evidence. Teaching children what it means to make a scientific hypothesis, to test it, and to decide if it is correct or not, we argue, may be far more likely to both increase the number of children who actually like science and create a citizenry that can sort out scientific facts from hype.

There is already robust evidence that hands-on learning in science, in which young students do rather than simply absorb science, enhances both the enjoyment students get from science education and their proficiency with scientific concepts.30 Two graduate students in the University of Pennsylvania chemistry department recently founded a program called Activities for Community Education in Science (ACES) in which local Philadelphia schoolchildren perform a variety of experiments that are designed to be fun.31 This approach is very different from typical lab classes that use a cookbook-style approach in which students follow a series of prescribed steps in order to get, if they do each step exactly correctly, a preordained result. A few students find the typical approach challenging, but many others get bored and frustrated. Most important, the usual lab class in high school and college science courses does not mimic the excitement of laboratory research. There is no “cookbook” for guidance when one is proceeding through a new experiment. Rather, scientists are constantly figuring out whether what they are doing is leading in the right direction and whether an intermediary result makes it more or less likely that the whole study will provide a meaningful result.

There are signs that some work is now being done to rectify this situation. Peter J. Alaimo and colleagues at Seattle University developed a new approach to undergraduate organic chemistry lab class in which students must “think, perform, and behave more like professional scientists.”32 In the ACES program just mentioned students and their mentors similarly focus on the concepts guiding the experiments. This restores the sense of discovery that can make otherwise tedious lab work exciting. Similar efforts are being made in colleges across the country like the Universities of California, Davis; Colorado; and North Carolina.33 As we always stress, and as the students who designed the program well understand, we will know how successful these programs are only when they are subjected to serious evaluation research themselves.

There is even less reliable scientific information on the best way to influence adults. A large part of the problem is the persistent refusal of scientific organizations to get involved in public education. As we saw with the Ebola crisis, even otherwise impressive agencies like the CDC wait far too long to recognize when people need to be provided correct information in a formulation that can be understood and that is emotionally persuasive. We surely need public health officials who are prepared to take to the airwaves—perhaps social media—on a regular basis and who anticipate public concerns and fears. To take another example, it was recently announced that scientists determined that the radiation reaching the U.S. Pacific coast 2 years after the Japanese Fukushima meltdown is of much too low a concentration to be a health threat. It is not clear whether this information calmed the perpetrators of terrifying stories of the threat of radiation to Californians.34 But what is needed to be sure that such frightening claims are rejected by the public are proactive campaigns carefully addressing understandable fears and explaining in clear terms that the reassuring information was gathered via extensive, exhaustive monitoring and measurement using sophisticated technology and not as part of government cover-ups.

Dan Kahan, Donald Braman, and Hank Jenkins-Smith, of Yale and Georgetown Universities and the University of Oklahoma, respectively, conducted a series of experiments that demonstrated how cultural values and predispositions influence whom we see as a scientific “expert.”35 They concluded from their work:

It is not enough to assure that scientifically sound information—including evidence of what scientists themselves believe—is widely disseminated: cultural cognition strongly motivates individuals—of all worldviews—to recognize such information as sound in a selective pattern that reinforces their cultural predispositions. To overcome this effect, communicators must attend to the cultural meaning as well as the scientific content of information.

Culture in this regard includes those that are created around an anti-science cause. People who oppose GMOs, nuclear power, or vaccinations often turn these ideas into a way of life in which a culture of opposition is created. Disrupting even one tenet of this culture is experienced by its adherents as a mortal blow to their very raison d’être.

At present, we know very little about the best ways to educate adults about science. Some of our assumptions turn out to be wrong. For example, it has long been known that the perception of risk is heightened when a warning about a potential danger is accompanied by highly emotional content. Those who oppose vaccination pepper their outcries with appeals to our most basic human sympathies by detailing cases of children who were supposedly harmed. It is a laudable human emotion that we are instantly moved by a child in distress: while other species sometimes even eat their young, humans have developed a basic instinct to do almost anything to protect or save the life of an unrelated young boy or girl. No wonder, then, that anti-vaxxer advertisements are so compelling. With this knowledge in hand, a group of scientists devised an experiment to see if confronting parents who are ambivalent about vaccination with information about the ravages of measles and other preventable infectious diseases would push them toward vaccination approval. The researchers were surprised to find that their emotionally laden messages actually made things worse: parents who were already scared of the alleged adverse side effects of immunization became more so when they were given these frightening messages.36 We will delve a bit more into the possible reasons for this later in this book.

Similarly, inclusion of more graphic warnings about the dangers of cigarette smoking on cigarette packaging and advertisements was mandated by the Food and Drug Administration in 2012. There is some evidence that this is more effective in motivating people to stop smoking than the previous, more benign health warnings.37 There has been a dramatic decrease in the number of Americans who smoke since the surgeon general issued the first report detailing the risks of nicotine. Nevertheless, after more than 50 years of warning the public that cigarettes kill, it is entirely unclear to what extent educational efforts contributed to that decline. All that is certain is that increasing taxes on cigarettes and enforcing rules about where smoking is allowed have been effective.38 Scare tactics can easily backfire, as has been observed by climate scientists trying to warn us about the severe implications of global warming. Ted Nordhaus and Michael Shellenberger of the environmental research group Breakthrough Institute summarized this issue: “There is every reason to believe that efforts to raise public concern about climate change by linking it to natural disasters will backfire. More than a decade’s worth of research suggests that fear-based appeals about climate change inspire denial, fatalism and polarization.”39 What is needed instead, they argue, are educational messages that stress goals that people will recognize they can actually accomplish. Scaring people may make them pray for deliverance, but it does not make them take scientifically driven action.

These educational efforts must be extended to our legislators, who often claim ignorance about anything scientific. Politics frequently invades scientific decision making, and here our legislators must be far more sophisticated in understanding the difference between legitimate debates over policy and misleading ones over scientific evidence. That the earth is warming due to human-driven carbon emissions is not debatable. How to solve this problem—for example, with what combination of solar, wind, and nuclear power—is a legitimate topic of public debate. As David Shiffman once complained:

When politicians say “I’m not a scientist,” it is an exasperating evasion… . This response raises lots of other important questions about their decision-making processes. Do they have opinions about how best to maintain our nation’s highways, bridges, and tunnels—or do they not because they’re not civil engineers? Do they refuse to talk about agriculture policy on the grounds that they’re not farmers?40

Educating politicians about science can work, as was recently shown with a project in the African country Botswana. Hoping to improve the quality of discussion, debate, and lawmaking about HIV and AIDS, members of the Botswana parliament were contacted by a research group and asked if they felt they needed more education. As reported in Science, the MPs “cited jargon and unexplained technical language and statistical terms as key blocks to their understanding and use of evidence.”41 They were then provided hands-on training that touched on key concepts of science like control groups, bias, and evidence. The experiment in legislator education seems to have been successful:

The feedback from the Botswana legislators was very favorable; they asked for further session to cover the topics in more detail and for the training to be offered to other decision-makers. After the training … a senior parliamentarian noted that parliamentary debate—for example around the updated national HIV policy—was more sophisticated and focused on evidence.

The researchers involved in the Botswana project are quick to caution, however, that its true value will not be known until the long-term impact is formally measured. Testimonials from people involved in a project like this are a kind of one-way street. Had the legislators given an unambiguously unfavorable assessment of their experience, they would have clearly indicated that the program in its present state is unlikely to be worthwhile. A positive response, however, is less clear. It could be that the program will prove effective or that the people enrolled in it were grateful and therefore biased to give favorable responses. Even when studying science education the usual principles of the scientific method apply and uncertainty is the required initial pose.

Indeed, at present we are not certain how best to convince people that there are scientific facts involved in making health decisions and that ignoring them has terrible consequences. Hence, one of the most important things we will recommend is increased funding by the National Institutes of Health, National Science Foundation, Department of Education, CDC, FDA, and other relevant public and private agencies for science communication research. This is not the same as research on science education for youth, although that too needs a great deal more funding and attention. By science communication, we mean the ways in which scientific facts are presented so that they are convincing. Attention must also be paid to the wide variety of information sources. Traditional journalism—print, radio, and television—has sometimes fallen short in communicating science to the public. In part this is because very few journalists have more than a rudimentary understanding of scientific principles and methods. One study showed that much of the information offered in medical talk shows has no basis in fact.42 But perhaps a bigger problem rests with the perception that people are interested only in controversies. Journalists might try to excuse the dissemination of sensationalistic versions of science by insisting that they are providing only what people want to hear. After all, no one is forcing the public to pay for and read their publications. But the result of this kind of reporting is the fabrication of scientific controversies when in fact none exist. Journalists must learn that the notion of “fair balance” in science does not mean that outliers should get the same level of attention as mainstream scientists. These outliers are not lonely outcasts crying out the truth from the desert but rather simply wrong. Science and politics are not the same in this regard.

Another mistake found in some science journalism is accepting the absence of evidence as signifying the evidence of absence. Let us say for example that a study is published in which a much touted, promising new treatment for cancer is found to work no better than an already available medication. The conclusion of the scientific article is “We failed to find evidence that ‘new drug’ is better than ‘old drug.’ ” The headline of the article in the newspaper, however, is “New Drug Does Not Work for Cancer.” Demonstrating that one drug is better than another involves showing that the rate of response to the first drug is not only larger than to the second drug but that it is statistically significantly larger. Let’s say for example that 20 patients with cancer are randomized so that 10 get the new drug and 10 get the old drug. The people who get the new drug survive 9 months after starting treatment and the people who get the old drug survive for only 3 months. That difference of 6 months may seem important to us, but because there are so few people in the trial and given the technical details of statistical analysis it is quite likely that the difference won’t be statistically significant. Hence, the researchers are obligated to say they can’t detect a difference. But in the next sentence of their conclusion they will certainly state that “a future study with a larger number of patients may well show that ‘new drug’ offers a survival advantage.”

In this case, however, the journalist, unlike the scientist, cannot accept uncertainty about whether the drug does or doesn’t work. A story must be written with a firm conclusion. Hence, the incorrect conclusion that the drug doesn’t work, period. When further research later on finds that the drug does work to significantly improve survival, perhaps for a subgroup of people with upper respiratory cancer, the journalist has moved on to a different story and the news does not see the light of day. Journalists need education in the proper communication of science and the scientific method.

Today, of course, we get most of our information from all kinds of sources. Here again, public education is vitally needed. The idea that the first result that pops up in a Google search is necessarily the best information must be systematically eradicated from people’s minds. The Internet is a fabulous development that facilitates, among other things, writing books like this one. But it is also loaded with polemical content, misinformation, and distortions of the truth when it comes to science. Gregory Poland and Robert Jacobson at the Mayo Clinic did a simple search 15 years ago and found 300 anti-vaccine Internet sites.43 Helping people avoid merely getting confirmation for their incorrect beliefs must be the topic of extensive research.

The Conflict of Interest Conflict

Deciding whom to select to carry out this education will also be tricky. The charismatic leaders of science denialist groups often have impressive scientific credentials, although they are usually not in the field most relevant to the area of science under discussion. Still, simply having an MD, MPH, or PhD in a scientific field is not sufficient to distinguish a science denialist from a scientist. It is clear that the people who carry out this education should themselves be charismatic in the sense that they can speak authoritatively and with emotion, that they truly care about educating the public, and that they are not angry at “ignorance,” arrogant, or condescending.

One of the most controversial areas, of course, will be “purity,” by which we mean a near complete absence of potential conflicts of interest. A very prominent rallying cry by science denialists is the charge that our health and well-being are threatened by corporations and their paid lackeys. Whoever disagrees with a science denialist is frequently accused of being “bought and paid for” by a drug company, an energy company, a company that makes genetically modified seeds, and so forth.

There is no question that money is a powerful motivator to ignore bias in scientific assessments. Although pharmaceutical companies insist otherwise, it is clear that their promotional and marketing activity directed at doctors influences prescribing behavior.44 Doctors and scientists on expert panels that design professional guidelines for treatment of many medical disorders often receive funding for their research or for service on advisory boards from drug companies that make the same drugs that are recommended for use in those guidelines. Studies of medications that are funded by pharmaceutical companies are more likely to show positive results than are those funded by nonprofit sources like the NIH. As someone who once accepted considerable amounts of money from pharmaceutical companies for serving on advisory boards and giving lectures, Jack can attest to the sometimes subtle but unmistakable impact such funding has on a doctor’s or scientist’s perspective. It is not a matter of consciously distorting the truth, but rather being less likely to see the flaw in a drug made by a company that is providing funding.45

Scientific journals now require extensive disclosure of any such relationships before accepting a paper. Sunshine laws are currently being passed that require drug companies to make known all the physicians to whom they give money. Other scientists and healthcare professionals are rightly suspicious of studies funded by pharmaceutical companies; studies that result in a negative outcome for the company’s product are generally not published, and sometimes negative aspects of a drug are obscured in what otherwise seems a positive report.

The issue is not, of course, restricted to scientists and doctors. A recent article in the Harvard Business Review noted that “Luigi Zingales, a professor at Chicago’s Booth School of Business, found that economists who work at business schools or serve on corporate boards are more supportive of high levels of executive pay than their colleagues.”46 An important aspect to this finding is that the economists, just like doctors in a similar position, truly believed that they were immune to such influence. People who take money from corporations have the same “I am in control” bias that we see when they insist they are safer driving a car than flying in a plane because they are in control of only the former: “Money may influence other people’s opinions, but I am fully in control of this and completely objective.”

Corporate support for science is always going to run the risk of at least creating the impression of bias if not actually causing it outright. Elizabeth Whelan, who founded the American Council on Science and Health, was an important figure in demanding that public policy involving health be based only on sound science. She decried regulations that lacked firm scientific support and correctly challenged the notion that a substance that causes an occasional cancer in a cancer-prone rat should automatically be labeled a human toxin. She famously decried the banning of foods “at the drop of a rat.” Yet despite all of the important advocacy she did on behalf of science in the public square, her work was always criticized because it received major corporate financing. As noted in her New York Times obituary, “Critics say that corporations have donated precisely because the [American Council on Science and Health’s] reports often support industry positions.”47 The fact that Whelan and her council accepted corporate money does not, of course, automatically invalidate their opinions; and even when they made a wrong call, as they did in supporting artificial sweeteners,48 it is not necessarily because manufacturers of artificial sweeteners paid for that support. Corporate support, however, will forever cast a shadow over Whelan’s scientific legacy.

The problem of obscure financial incentives also infects the anti-science side, although this is rarely acknowledged. Figure 1 is used by a group called Credo Action as part of its campaign against GMOs. Credo Action describes itself as “a social change organization that supports activism and funds progressive nonprofits—efforts made possible by the revenues from our mobile phone company, CREDO Mobile.” But what is Credo Mobile? It is a wireless phone company that markets cell phones and wireless phone plans, offers a credit card, and asks potential customers to choose it over “big telecom companies.” In other words, it uses social action to advertise its products. Customers might think it is a nonprofit organization, but it is not. We agree with some of the positions Credo takes and disagree with others, but that is beside the point. In its anti-GMO work, Credo is a for-profit cell phone company opposing a for-profit agriculture company. It takes a fair amount of digging to find that out.

So far, we have focused on conflicts of interest that involve money, but there are a number of problems with the exclusive focus on financial conflicts of interest. First of all, the fact that a corporation paid for a study or makes a product does not automatically make it a tainted study or a dangerous product. Most of the medications we take are in fact safe and effective. Cases of acute food toxicity are fortunately infrequent. Some pesticides and herbicides are not harmful to humans, only to insects, rodents, and invasive weeds. The involvement of corporate money rightly makes us suspicious and insistent on safeguards to ensure that finances have not led to shortcuts or unethical behavior, but it does not justify a reflexive conclusion that corruption is involved.

FIGURE 1 Advertisement from Credo Mobile, a for-profit company that uses social action to promote its products.

Source: From http://act.credoaction.com/sign/monsanto_protection_act

Another problem is that focusing only on financial conflicts of interest ignores the many other factors that can cause bias in science. In the Brian Hooker example we discussed earlier in this chapter, the bias took the form of belonging to an organization with an agenda that does not permit dissent. Hooker’s views are suspect not because he is getting money from a large corporation but because he belongs to what appears to be a cult of believers who steadfastly refuse to acknowledge anything that contradicts their views. Deep emotional reasons for being biased can be just as strong as a financial incentive.

We are strong proponents of complete disclosure of all competing interests.

In that spirit, we will make our disclosures. Jack worked as a consultant to almost all the major pharmaceutical companies and some minor ones for many years. He was frequently paid by drug companies to give lectures, and a small percentage of his research was also funded by drug companies. He stopped taking money from drug companies in 2003. He was funded for his research by the National Institutes of Health from 1982 until 2006. He has been an employee of private medical schools, of a private healthcare company, and of the New York State government.

Sara is an employee of a large, multinational healthcare and pharmaceutical company. She works for the global public health division, which is involved in improving global public health in resource-limited settings. The vast majority of her work does not involve pharmaceutical products at all. Her company does manufacture a few vaccines and antibiotics, but this is a relatively small part of its business, and Sara does not work in it. She developed her views on the safety of vaccinations before joining the company.

We are also clear about our positions on the topics we cover. We think every child should receive all of the vaccinations recommended by the American Academy of Pediatrics, that no one should have a gun in his or her home, that antibiotics are overprescribed, that nuclear energy and genetically modified foods are safe and necessary when the appropriate safeguards are in place and carefully maintained, that ECT is a safe and effective treatment for severe depression, and that unpasteurized milk should be outlawed. We hope our readers do not hold these opinions against us. Our opinions are based on our reading of the scientific literature. Many of them are unpopular among our closest friends and family. We also hope our readers will always bear in mind that the main focus of this book is not to argue back and forth about vaccinations, GMOs, or nuclear power but rather to present a framework to understand why people deny scientific evidence and how this situation can be improved.

We believe that without the capacity to make decisions based on science, we are doomed to waste time and precious resources and to place ourselves and our children at great risk. This is why we believe the time has come to more fully explore the intricacies of irrational health beliefs and the responses the medical and health communities have employed. This is why we have undertaken to write this book. Elucidating causes of irrational health decisions and what to do about them is not easy, and we certainly do not claim to have all the answers. But it is essential that we try, since we can say, without exaggeration, that these decisions frequently mean the difference between life and death.

Just a word on the title: Denying to the Grave signifies two themes that are central to this book. The first highlights the potential consequences of denying what science tells us. If we refuse to vaccinate our children, some of them will become very sick with otherwise preventable illnesses. If we take antibiotics unnecessarily, we will promote the spread of resistant bacterial strains capable of causing severe disease and even death. In each case, refusing to accept sound scientific evidence can produce dire outcomes. But we want to make clear once more that this first point, as important as it is to our work, is not where we spend most of our time. The data are overwhelming for each of the scientific issues we use as examples in Denying to the Grave, and extensive reviews of the evidence are not our central purpose. Rather, it is on the second meaning of Denying to the Grave that we focus most of our attention: the willingness of people to “take to the grave” their scientific misconceptions in the face of abundant evidence that they are wrong and that they are placing themselves in danger by holding on to them. We are less concerned with convincing our readers that they should vaccinate their children (of course they should) than with trying to understand why some intelligent, well-meaning people hold tenaciously to the notion that vaccines are dangerous. The same principle holds for GMOs, nuclear power, antibiotics, pasteurization, electroconvulsive therapy, and all the other examples we call upon to illustrate this puzzling phenomenon.

We do not claim to be complete experts on all of the topics discussed in the book, but we are expert at discerning the difference between good and bad evidence. This is a skill we hope many of our readers will strive to attain. And of course, we do not claim to be immune from the psychological tricks that can sometimes cause any one of us to disbelieve evidence. No one is. We humbly offer what we do know to improve the way we all think about denial of evidence. As such, this book is aimed at a variety of audiences, from public health and medical professionals to science journalists to scientists to politicians and policymakers. Most important, we hope this book will be of use to everyone who is or has ever been confused about scientific debates they have read about in the news or heard about from friends. We count ourselves, as well as many of our close friends and family, in this group, and we hope you will join us in this journey to try to understand where this confusion comes from and what to do about it.