An Introduction to Applied Cognitive Psychology - David Groome, Anthony Esgate, Michael W. Eysenck (2016)

Chapter 13. Music and cognition

Catherine Loveday

13.1 INTRODUCTION

‘Without music, life would be a mistake,’ said the nineteenth-century German philosopher, Friedrich Nietzsche (1888; see Nietzsche et al., 2015). Long before this, the ancient Chinese teacher Confucius (551-479 BC) acknowledged the fundamental importance of music, saying, ‘Music produces a kind of pleasure which human nature cannot do without.’ Few of us would disagree that music plays a powerful part in our lives, with research suggesting that we listen to music nearly 40 per cent of the time (e.g. North et al., 2004). It helps us relax, gees us up, motivates us to exercise, supports learning, facilitates socialising, provides therapy, defines our identity and unites us in common purposes, such as chanting on the football terraces or praying. And music is big business. A report published in 2013 estimated that the UK music industry alone was worth £3.5 billion, with exports worth £1.4 billion (UK Music, 2013).

But can this tell us anything about the human mind? Storr (1992) points out that music is a primal and fundamental human activity, with ancient cave paintings suggesting that it was always a central part of human life. Most would also argue that music is unique to humans, at least in the way that we produce and respond to it. Music is also regarded as a universal human activity, in the sense that all those with a typically developed brain and no neurological injury have a natural ability to acquire basic musical skills. In fact, some would argue that humans have as much of an instinct for music as they do for language (Panksepp and Bernatzky, 2002).

As well as being a unique, universal and fundamental skill, the sheer ubiquity of music demonstrates its social and cultural significance (e.g. Boer et al., 2012), and there is no doubt that it can be enormously emotionally arousing (Sloboda and Juslin, 2010), which has led some to suggest that it may have some evolutionary purpose (Miller, 2000; Panksepp and Bernatzky, 2002). It is easy to see the evolutionary benefit of most of our cognitive and social behaviours, but it is less immediately obvious why music seems to be so powerful and valuable for us, and this alone makes it an important and interesting thing to study. This chapter will explain what music is, how we process and make sense of music and how we develop musical skills. It will also discuss some of the parallels between music and language and will finish by looking at the importance of musical memories.

13.2 MAKING SENSE OF MUSIC

What is music? How are we able to recognise the difference between non-musical sounds, such as traffic noise or a vacuum cleaner, and a nice piece by Mozart or a song by our favourite band? The Oxford English Dictionary defines music as ‘An art form consisting of sequences of sounds in time, esp. tones of a definite pitch organized melodically, harmonically, and rhythmically.’ Aniruddh Patel (2010, p. 12) uses a similar definition but specifies that the organised sequence of sounds must be ‘intended for, or perceived as, aesthetic experience’. Using these definitions, we can work on the basis that sounds must be deliberately organised and that they must have some aesthetic value.

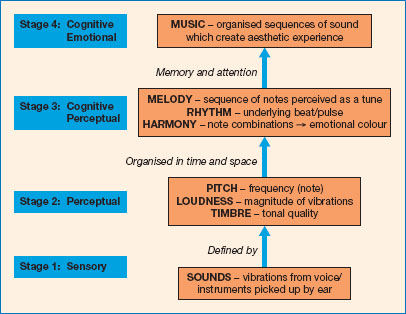

Figure 13.1 gives an overview of the processes involved in understanding music. First, our ears have to detect sounds and send this information to our brain (stage 1). We then process the characteristics of these sounds (stage 2) and establish how they relate to each other (stage 3). Finally, a range of other cognitive and emotional processes are brought in, which allow us to understand, interpret and respond to this collection of sounds and recognise it as music (stage 4). This section will examine each of these stages in turn and explain the overall sensory and cognitive stages involved in the perception of music. The section takes a bottom-up approach to explaining music perception, but it should be noted that top-down processes are also important (e.g. Iversen et al., 2009; Tervaniemi et al., 2009).

Figure 13.1 Stages involved in music perception.

THE SENSORY BUILDING BLOCKS OF MUSIC

Let us look first at what we actually mean by sound. In simple terms, a sound is produced when something causes air molecules to vibrate, for example when a guitar string is plucked, a drum is banged, a hi-fi speaker vibrates or when someone coughs or speaks. These vibrations propagate through space as sound waves, much like the ripples you see when you throw a stone into a pond. When the sound waves reach our ear, the vibrations are transferred to the ear drum, then to the bones in the middle ear and finally to the fluid in the inner ear where the mechanical vibrations get transformed (i.e. ‘transduced’) into nerve impulses, which then travel to the primary auditory cortex in the brain (see Chapter 4).

Our world consists of an infinite array of sounds but they are characterised by three basic elements: pitch, loudness and timbre. Pitch is what a musician might call the ‘note’ (e.g. middle C or F sharp) and this is essentially how high or low it sounds. The pitch is determined by the frequency of the air vibrations, so the voice of a screaming child will cause very fast vibrations of air molecules (i.e. high frequency), and the deep rumble of thunder will cause very slow vibrations (i.e. low frequency).
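The link between pitch and frequency can be made concrete with a short sketch. It assumes twelve-tone equal temperament with the A above middle C tuned to 440 Hz, a common modern convention that this chapter does not itself specify. The function name `note_frequency` and the semitone numbering are illustrative only.

```python
# Assumption: equal temperament with the A above middle C at 440 Hz.
# This tuning convention is not stated in the chapter.
A4_HZ = 440.0

# Semitone offsets from that A for a few named notes.
SEMITONES_FROM_A4 = {
    "middle C": -9,
    "F sharp": -3,   # the F sharp just above middle C
    "A": 0,
    "high C": 3,     # the C one octave above middle C
}


def note_frequency(semitones_from_a4: int) -> float:
    """Frequency in Hz of the note a given number of semitones from A4."""
    return A4_HZ * 2 ** (semitones_from_a4 / 12)


for name, offset in SEMITONES_FROM_A4.items():
    print(f"{name}: {note_frequency(offset):.1f} Hz")
```

Under these assumptions, moving up one octave (twelve semitones) doubles the frequency, which is why a higher note corresponds to faster vibrations.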

Loudness, as the name suggests, describes how loud a sound is and is determined by how big the vibrations of air molecules are. So a scream will cause bigger vibrations than a flute, for example, and therefore have a louder volume. The timbre of a sound is essentially the tonal quality that enables us to identify it, so for example a flute has a very different sound from an electric guitar even when they are playing the same note. The reason that different instruments and different voices have different timbres is because of additional ‘harmonic’ vibrations that occur when the sound is produced (see Chapter 4 for more detail).
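As a rough illustration of loudness and timbre, the sketch below builds tones by adding sine waves. Loudness is modelled as the overall amplitude, and timbre as the mix of harmonics above the fundamental. The sample rate, the 200 Hz pitch and the harmonic weights are all made-up values chosen for convenience, not measurements of real instruments.

```python
import math

SAMPLE_RATE = 8000  # samples per second (illustrative value)


def tone(frequency_hz, amplitude, harmonic_weights, n_samples=800):
    """Sum a fundamental and its harmonics into a list of samples.

    harmonic_weights[k] is the relative strength of the component at
    (k + 1) * frequency_hz, so [1.0] is a pure sine wave.
    """
    samples = []
    for n in range(n_samples):
        t = n / SAMPLE_RATE
        value = sum(
            weight * math.sin(2 * math.pi * (k + 1) * frequency_hz * t)
            for k, weight in enumerate(harmonic_weights)
        )
        samples.append(amplitude * value)
    return samples


def peak(samples):
    """Largest absolute sample value, a crude stand-in for loudness."""
    return max(abs(s) for s in samples)


# Same pitch, different loudness: only the amplitude changes.
quiet = tone(200.0, 0.2, [1.0])
loud = tone(200.0, 0.8, [1.0])

# Same pitch, different timbre: extra harmonics change the wave's shape.
pure = tone(200.0, 0.5, [1.0])
rich = tone(200.0, 0.5, [1.0, 0.5, 0.33, 0.25])

print(peak(quiet) < peak(loud))
print(pure[:5] != rich[:5])
```

Both `pure` and `rich` repeat at the same rate, so they would be heard at the same pitch, but the shape of each cycle differs, which is the sense in which harmonics give an instrument its characteristic sound.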

NATURAL GROUPING OF SOUNDS

In the first sensory step, the ear collects the sounds around us and the auditory nerve carries information about the pitch, loudness and timbre of sounds to the auditory cortex. This is still a long way from explaining that shiver down the spine we get when we hear our favourite song though, so how do we turn all these sounds into something meaningful? The next stage requires us to identify and process a stream of skilfully coordinated sounds from many different instruments. Take, as an example, the song ‘Happy’ by Pharrell Williams, best-selling song of 2014 in both the UK and the US (Official Charts). When we hear this song, we are able to process and combine the sounds from a set of drums and other percussive instruments, a guitar and bass guitar, a keyboard, lead vocal and backing vocals, clapping and so on.

A critical feature of music is that sounds stand in significant relation to one another: the first note of any well-known nursery rhyme means nothing on its own but most of us would be able to identify the tune by the time we hear the first four or five notes. Likewise, a single bass drum beat cannot be described as music but a regular beat from a bass drum combined with alternating strikes on a snare drum creates a familiar basic rock or pop beat. Loudness, timbre and pitch all play a key role as well, with certain regular beats being played louder and the snare drum having a different timbre and pitch from the bass drum (see exercise, Box 13.1). This process of grouping and organising sounds in terms of time, pitch, timbre and loudness continues, as each new instrument or voice is added and the relationships range from very simple to very complex.

So in order to hear music, we need to be able to identify and recognise patterns of sounds. This seems to rely to a large extent on the ‘Gestalt principles of perception’ (explained in detail in Chapter 4). These primitive perceptual tendencies mean that when we are listening to a complex piece of music, we are able to group together the elements of sound that come from the same source (e.g. the bass drum) and separate those that don’t (e.g. the guitar).

Box 13.1 Exercise

1 Find a hard surface such as a table and tap a regular beat with your left hand at a pace of roughly one a second.

2 Now start counting in time with the tapping in cycles of four: ‘1, 2, 3, 4, 1, 2, 3, 4’ etc.

3 Try putting an emphasis on the beat, each time you say the number 1: ‘ONE, 2, 3, 4, ONE, 2, 3, 4’ etc. You should now hear a regular beat, which musicians refer to as ‘common time’. Notice the sense that the beats are in groups of four.

4 Now try this: keep your left hand going as above but start tapping your right hand in between each left-hand beat. If you can manage this, you have the basis of a simple pop beat.

The two Gestalt rules that seem particularly important in music perception are proximity - sounds that seem to be close together in space - and similarity - sounds that seem to be close in pitch, timbre or loudness. Often these will be mutually reinforcing, so if you are sitting in your garden you may hear birdsong, a sequence of sounds that are similar in pitch, timbre and loudness but also coming from the same location. These clues help to separate the birdsong from the sounds of people talking, a dog barking or an aeroplane flying overhead, despite the fact that the ears are receiving all this information at once. A similar process happens when listening to a band, a choir or an orchestra.

These principles of perceptual grouping play an important role in how music is composed, performed or produced. With a pop record, a good mix engineer will make sure that there is clear perceptual distinction between individual instruments by (i) separating them out spatially, (ii) ensuring that the volume of specific instruments is generally consistent and (iii) reducing overlapping frequencies or timbres. This same approach has been used by classical composers for centuries, and these techniques applied effectively can make a huge difference to the clarity and meaning of a piece of music.

Early experimental evidence for these Gestalt perceptual tendencies in music came from a study by Dowling (1973), who found that it was difficult for listeners to identify two simultaneous melodies if they were close in pitch. However, if the melodies were separated in pitch (e.g. one performed in a high register and one in a low register), the task became much easier. This effect has been described as both ‘melodic fission’ and ‘pitch streaming’ - the tendency for us to group sounds that are close in pitch - and explains how we are able to distinguish, for example, a bass guitar from the singer. The task is also made easier if participants are given prior information about what to listen out for (e.g. Deutsch, 1972) - an indication that top-down processing is an important part of the process.

Another early study by Deutsch (1975) showed how powerful ‘pitch streaming’ can be. She used ascending notes of a major scale (e.g. C-D-E-F etc.) and descending notes of the same major scale (e.g. C′-B-A-G etc.), but instead of playing each of these as sequences, she broke them up and interspersed them so that the right ear could hear C-B-E-G etc. and the left ear C′-D-A-F etc. (see Figure 13.2). Note that C′ denotes a C that is an octave higher than middle C. This means that the ascending scale starts on a low C and the descending scale starts on a high C.

Rather than hearing two random sequences of notes jumping about in pitch, the listener hears them as two distinct but concurrent ascending and descending scales. Despite the fact that these sequences of notes were separated in location, the auditory system grouped each note with the one that was closest in pitch rather than the one that was closest in location. Deutsch found that even if she used different instruments for each sequence, people would still hear this ‘scale illusion’, leading her to conclude that pitch grouping is a more powerful perceptual clue than spatial location or timbre.
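The grouping described here can be mimicked with a toy sketch. It takes only the four notes per ear that the text spells out and, at each time step, shares the two simultaneous notes between two streams so that the total jump in pitch is as small as possible, ignoring which ear each note arrived at. The helper `group_by_pitch` and the greedy rule are assumptions made for illustration; this is not Deutsch's own model or analysis.

```python
# Pitches as semitones above middle C; C' is the C an octave higher.
PITCH = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11, "C'": 12}

# Only the notes of each ear's sequence that the text spells out.
right_ear = ["C", "B", "E", "G"]
left_ear = ["C'", "D", "A", "F"]


def group_by_pitch(right, left):
    """Greedy pitch-proximity grouping (hypothetical helper).

    At every time step the two simultaneous notes are divided between
    two streams so that the summed pitch jump from each stream's
    previous note is minimised. Ear of origin plays no part.
    """
    stream_a, stream_b = [right[0]], [left[0]]
    for r, l in zip(right[1:], left[1:]):
        prev_a, prev_b = PITCH[stream_a[-1]], PITCH[stream_b[-1]]
        keep = abs(PITCH[r] - prev_a) + abs(PITCH[l] - prev_b)
        swap = abs(PITCH[l] - prev_a) + abs(PITCH[r] - prev_b)
        if keep <= swap:
            stream_a.append(r)
            stream_b.append(l)
        else:
            stream_a.append(l)
            stream_b.append(r)
    return stream_a, stream_b


low_stream, high_stream = group_by_pitch(right_ear, left_ear)
print(low_stream)   # ['C', 'D', 'E', 'F']
print(high_stream)  # ["C'", 'B', 'A', 'G']
```

Grouping by nearest pitch recovers the ascending and descending runs even though each ear received a jumpy sequence, which is the pattern listeners report.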

It seems, then, that we have mechanisms for being able to identify different instruments or voices from within a complex piece of music, much like we distinguish between the different sounds around us in our everyday lives. Of course, the clusters of sounds we might hear in our environment are random and independent; as an ensemble they do not generate any specific meaning. In contrast, music is created when sequences of sounds are deliberately and carefully organised so that together they create an aesthetic, meaningful experience, for example a tune sung by a voice alongside a rhythmic backdrop of other voices or instruments.

Figure 13.2 Example of stimuli that create the scale illusion.

MELODY, HARMONY AND RHYTHM

In order to understand the next stage in the perception of music, we need to consider three more important terms: ‘melody’, ‘harmony’ and ‘rhythm’, each of which emerges from the perceptual grouping of sounds according to pitch, timbre and loudness. Rhythm refers to the underlying pulse of a piece of music - the thing that makes you want to tap your feet - and in psychological terms this emerges when we hear a regular identifiable pattern of strong and weak beats. Rhythms may be very simple, like the basic pop drumbeat described earlier, or more complex, such as you might hear in African drumming or salsa music. A melody is essentially a tune, i.e. a sequence of notes that we perceive as a single entity, almost like a musical sentence. Harmony refers to the effect that occurs when notes are played or sung at the same time, for example a chord on a guitar. In a typical pop song the melody is often marked by the lead singer, but at any point in time each note of the melody is accompanied by a different set of notes from additional voices or instruments, and this is the harmony. Melody is often described as the horizontal element of music and harmony the vertical element. These three elements - melody, harmony and rhythm - form the basis of music and give rise to a sense of meaning and emotional character.

THE ROLE OF ATTENTION IN MUSIC LISTENING

In order to derive meaning and emotion from the overall listening experience, another important cognitive skill comes into play. This is the need to identify and attend to different elements of the music and be able to switch our attention between them accordingly. It is well established that the human brain has a limited attentional capacity (Allport et al., 1972), meaning that we cannot process and interpret more than one stream of similar information at the same time; hence we are not very good at listening to two people speaking simultaneously. In music this means that we can only attend to part of the overall musical picture at once, so for example if two or three people are singing together in harmony (think of The Beatles), we can only give our full attention to one voice at a time. In that early Dowling study (1973), where participants were asked to identify two simultaneous melodies, it became clear that even when the task was made easier by separating the melodies in pitch or timbre, it was only possible for participants to identify one melody at a time. I have repeated this experiment in my lectures over many years and even the best musicians need a minimum of two hearings to identify two short melodies played simultaneously.

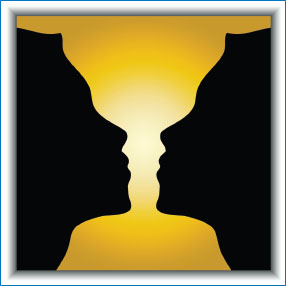

This does not mean that the ‘background’ sounds are not important, though. Far from it! As the very famous cocktail party effect illustrates (see Chapter 1), even though we can only fully focus on one auditory stream at a time, we are still able to hear and monitor all the other streams of sound. In fact, as explained above, the harmonic and rhythmic backdrop provides a crucial context and much of the emotional character of a piece of music. Some people have described polyphonic music (where there is more than one voice or instrument) as an ambiguous pattern capable of figure-ground reversal (see Figure 13.3). So at any given time we may focus on one line of music, but our focus can very quickly shift to another part. A good analogy is to imagine looking around a woodland. We can only cognitively process one part of that visual scene at a time - a few trees maybe - yet we maintain a sense that these are part of a bigger collection of trees. This happens because our focal point constantly shifts, allowing working memory to temporarily store details and fill in any gaps, thus giving our conscious mind a sense of the whole picture. To some extent we choose what we want to look at, but at other times our attention might be drawn in a particular direction because there is movement or change. All of this is true of listening to music (see Box 13.2).

A series of studies by Sloboda and Edworthy (1981) have shown that various things affect how easily we shift our attention around the musical scene. They used an elegant experimental paradigm to test this. First, participants were asked to listen to three different melodies until they were able to recognise them reliably. Then they heard these melodies played simultaneously but, critically, in some trials there was an error in one of these melodies. The rationale is that if the participant happened to be focusing on the line with the error, they would be able to identify where the mistake lay, but otherwise they would be unable to locate the source of the error, even though they might often be able to tell that something didn’t sound right. We can explain this using our earlier woodland analogy: if something unexpected happened in the tree you were looking at, you would be able to see and report it, but if something unexpected happened in a different part of the scene, you might be aware that something had changed but you would be unable to describe exactly what happened.

Figure 13.3 An example of figure-ground switching: a vase or two faces?

Source: copyright Peteri/Shutterstock.com.

Using this paradigm, Sloboda and Edworthy (1981) showed that greater musical experience led to better performance, presumably because experienced musicians can learn the melodies quicker and are generally more adept at moving their attention from one part to another. Conductors and sound engineers often say that they can listen to every part at once and indeed they are often very good at identifying where an error lies. However, this sense of being able to process holistically comes from being generally more skilled at moving their attention around and is also affected by how well they know the piece of music they are working on. In reality, when they spot a mistake, conductors or musical directors often have to ask different sections of voices or instruments to replay their parts individually so that they can identify exactly where the error lies.

How and where we focus our attention is therefore an important part of music cognition, and Sloboda (1985) has pointed out that good composers are very skilful at directing listeners around different parts of the musical scene. Our natural tendency is to focus on the upper line, so in classical and jazz music, principal themes will often be carried by high-pitch instruments, such as a violin or trumpet. There is also a natural pull towards the human voice, particularly if there is lyrical content, so for pop and folk music our attention may tend towards a singer and in particular the lead singer who will often be louder - volume being another important factor. So in general, composers can use pitch, loudness and the innate significance of the human voice to lure us towards particular lines within the whole musical scene.

Box 13.2 Attention switching in music listening

First of all, take a moment to listen to all the different sounds around you. Focus for a short while on one particular sound, but notice how other sounds may draw your attention. Now actively move your attention to different sounds and see how many you can identify.

Try doing this same exercise with a piece of music, if possible with headphones. You may have to listen a few times to be able to do this properly. Notice what grabs your attention first of all - a voice maybe, or a drum beat? Listen carefully to the pitch and timbre of that instrument and try to stay focused on it for as long as possible. Next, take time to observe the other instruments and see how many different ones you can pick out. Once you have done this, allow yourself to listen more naturally to the music. Notice where your focus is, and when it moves from one voice or instrument to another, try to identify what it is about it that has attracted your attention. The more you do this exercise, the better your musical listening skills will become.

Our natural propensity for ‘attentional conservatism’ means that we will tend to stick with the same line unless something entices us away; however, if our attention is held in one place for too long, the music may start to become boring (a bit like listening to one person speaking for a long time!), so an important part of the compositional process is to periodically direct attention elsewhere. Luckily, human beings have an excellent innate ‘orienting response’, which means that if we sense change in any part of our environment we will quickly direct our attention towards it. Within music, any change in the quality of a given instrument - timbre, texture, volume - will make our ears prick up. A very good example in pop music is a lead guitar solo, where the performer uses all of these techniques to attract attention towards their melody.

Of course, it is not only the composer who manages and directs the listener’s attention. In classical music, the conductor and performers play a critical role by maintaining or changing volume, timbre and texture, and in modern recorded music, these characteristics are also hugely influenced by the person recording or mixing the overall sound.

THE ROLE OF MEMORY IN MUSIC LISTENING

Given that the meaning of every musical sound relies on the context of what has come before, we cannot complete a discussion on hearing and understanding music without considering the role of short-term memory. Music perception relies heavily on working memory, since we need to be able to temporarily store patterns of sound activity and then pull them together to form a coherent whole. Long-term musical memory is also highly significant, but this will be covered later in the chapter and here we will focus on the memory mechanisms relevant for music perception.

Much of the work on musical memory is based on recognition tests, but these only really give us half the story. Testing recall for music, however, is tricky for a number of reasons (see Müllensiefen and Wiggins, 2011 for an in-depth discussion). Unlike recalling a piece of linguistic text, many people may find it difficult to reproduce what they’ve heard even if they do remember it. It is possible to ask people to hum or play back short sequences of notes, but only for very simple melodies or rhythms. Additionally, in a traditional word recall test, the order is usually not important, whereas in music this is critical. Another problem is that all errors are not equal - some wrong notes are more wrong than others - so issues of accuracy become much harder to assess.

The most rigorous work on melody recall comes from Sloboda and Parker (1985), who devised a paradigm where participants were played short, unfamiliar folk melodies that they were asked to sing back. This stimulus plus recall was repeated six times with the same tune and a complex scoring method was devised that included measures of melodic contour, timing and phrase structure. They found that all participants produced valid and measurable recall responses but, interestingly, not one single recall attempt over all trials was note perfect. Despite this, their results did show that participants demonstrated real memory for the music, that they retained the underlying timing structure and that musicians were better at retaining the harmonic structure. Their scoring inevitably involved a degree of subjectivity, but a more recent paper by Müllensiefen and Wiggins (2011) has been able to confirm almost all of their findings using a thorough computational analysis.

Given that experimental work shows such limitations when it comes to precise recall of music, how can we explain why a good pop or folk musician can hear a song once or twice and then produce a relatively accurate copy? And how do we account for the case of Mozart, who is famously cited as having written down a complete 11-minute piece of music - Allegri’s Miserere - at the age of 14 (Sloboda, 1985), having heard it just twice? Sloboda (2005) draws on his own results and those of others to suggest that there are two important cognitive mechanisms that allow us to build up a memory for music. First, he hypothesises that we construct mental models of the underlying structure without remembering all the surface details. This has some parallels with Bartlett’s notion of schemata (see Chapter 1) and is much like remembering a story we’ve been told - we can store the overall meaning and the basic order of events, even if we don’t remember the precise wording. Recognition memory studies support the idea of abstract representation, showing that music can still be recognised, despite changes in instrumentation, loudness, tempo and register (Jäncke, 2008). The evidence from Sloboda and Parker’s study suggests that this ability to form a mental model improves with greater musical experience and knowledge of genre-specific rules.

Sloboda has also argued that musical memory is enhanced through ‘chunking’, a technique that hugely increases our short-term memory capacity (see Chapter 6). He suggests that we do this by segmenting music into meaningful chunks - using structural clues such as pauses, changes in instrumentation and rhythmic markers - and that these chunks are then retained in order (Williamon and Valentine, 2002, offer good support for this). We can boost our chunking ability through a number of additional cognitive tricks, such as identifying sequences that are the same or have slight variations (think of the first two lines of ‘Happy Birthday’, for example), or using musical knowledge or experience to label a particular chunk that is used commonly in other pieces of music.
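A minimal sketch of this kind of chunking is given below, using the first two lines of ‘Happy Birthday’ written out in C major. It splits the notes at the pause between the lines and then counts how many opening notes the two chunks share. The representation (note names only, with `None` standing for a pause) and both helper functions are assumptions for illustration, not a procedure taken from Sloboda or from Williamon and Valentine.

```python
# First two lines of 'Happy Birthday' in C major. None marks the pause
# between lines; rhythm is left out to keep the example short.
melody = ["G", "G", "A", "G", "C'", "B", None,
          "G", "G", "A", "G", "D'", "C'"]


def split_at_pauses(notes):
    """Segment a note list into chunks, using pauses (None) as boundaries."""
    chunks, current = [], []
    for note in notes:
        if note is None:
            if current:
                chunks.append(current)
            current = []
        else:
            current.append(note)
    if current:
        chunks.append(current)
    return chunks


def shared_opening(chunk_a, chunk_b):
    """Count how many notes two chunks have in common from the start."""
    count = 0
    for a, b in zip(chunk_a, chunk_b):
        if a != b:
            break
        count += 1
    return count


chunks = split_at_pauses(melody)
overlap = shared_opening(chunks[0], chunks[1])
print(len(chunks), "chunks; first", overlap, "notes are identical")
```

Storing the second line as ‘the first line with a different ending’ takes far less effort than storing six new notes, which is the sort of saving that chunking is thought to provide.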

The ability to store a mental model and use chunking may go a long way to explaining Mozart’s prodigious memory: Allegri’s Miserere consists of seven almost identical musical sequences, conforms to the musical rules that Mozart was already very familiar with and is based on a religious text that was easily available to him. Clearly the accuracy of his memory goes way beyond most of our capabilities, but it is likely that Mozart was just particularly good at using the same two basic memory techniques that all of us use every day. As Williamson (2014) so neatly puts it, ‘musical memory is a skill, not a gift, one that develops with practice and relies on the types of techniques that just about any memory expert will use’.

13.3 DEVELOPMENT OF MUSICAL SKILL

I would challenge any person leading a relatively typical life to go for more than a week without hearing the music of, or at least coming across a reference to, either Mozart or The Beatles. In my life, I’ve been lucky enough to watch many brilliant musicians perform, and my own music collection is a testament to many excellent composers of all genres from across the centuries. But every now and then, there are musicians that stand out from the crowd, those that seem not just to be brilliant but somehow extraordinary. What is it about the music of Mozart and The Beatles that has made it so enormously successful and ubiquitous? Is there such a thing as innate musical talent, or does anyone have the potential to be the next Wolfgang Amadeus Mozart or Paul McCartney?

A well-accepted theory of musical development comes from Sloboda (1985), who identified two separate but overlapping stages of musical skill acquisition. He suggests that from birth until the age of about 10, the dominant developmental process is enculturation. During this stage, musical skills are acquired passively and without self-conscious effort or instruction, simply through natural environmental exposure to music. Sloboda suggests that during enculturation, children will achieve basic musical skills in the same order and at roughly the same ages and that this occurs because of the specific combination of (i) a rapidly changing cognitive system, (ii) a shared set of primitive capacities and (iii) a shared set of cultural experiences. It is worth noting that Sloboda confines his theory to Western culture, since this is where the bulk of the research has been carried out.

Figure 13.4 Mozart and The Beatles are among the most successful musicians ever.

Source: copyright (a) Everett Historical/ Shutterstock.com, (b) Andy Lidstone/ Shutterstock.com.

From the age of 10 onwards, Sloboda argues that the dominant process is training, which relates to the active and deliberate development of specialised musical skills through conscious effort and often instruction from an expert. Training is built on the foundations of enculturation but requires specific experiences that are not chosen by, or available to, all members of the culture.

EARLY MUSICAL DEVELOPMENT

Measuring musical ability in a young infant is quite difficult for obvious reasons, but musical knowledge can to some extent be inferred by observing behaviour or measuring physiological changes. A huge early landmark study by Moog (1976) assessed musical behaviour, specifically movement and vocalisations, in 500 children between the ages of 0 and 5. He played six different sets of sounds: (i) nursery rhymes, (ii) instrumental music, (iii) pure rhythms, (iv) words spoken to a rhythm but without pitch, (v) dissonant music (i.e. music with notes that ‘clash’ and sound uncomfortable) and (vi) non-musical sounds such as traffic. Results from this study still form the foundations for our knowledge of early musical development. For example, he found that 6-month-old babies responded more to musical sequences, regardless of whether they were consonant or dissonant, than they did to purely rhythmic sounds.

He also demonstrated other aspects of musical development that have since been supported by more recent studies. When it comes to singing, children typically show the first signs of spontaneity around 12-18 months, although tunes are simple and the intervals between the notes are small. Ability develops quickly though, and by the age of 2 or 3, children are able to create songs that are longer and show more organisation. Within another year they are starting to make songs using parts of melodies they have heard elsewhere. In fact, spontaneity decreases quite rapidly over the ages of 4-5 and children replace their own creative song-writing with imitation of songs they have heard or been taught by adults and older children. We must bear in mind that these findings are based on research with Western children and it is important to question to what extent this favouring of imitation over creativity is shaped by our environment.

Moog’s study also examined rhythmic behaviour and he showed that children tend to move to music from quite an early age, swaying rhythmically from about 6 months and more obviously ‘dancing’ or ‘conducting’ by 18 months. Interestingly, in all but 10 per cent of 2-year-olds the movements are not synchronised to the music; in other words, the dancing is not in time. This does not get much better until after the age of 5, but once again, as ability increases, spontaneity decreases: most 1-year-olds will start dancing as soon as they hear any kind of beat, but by the time they reach school age, the vast majority of children have to be actively asked to dance.

But how early does musical awareness kick in? Sarah Trehub has spent over 40 years investigating development of musical skills in young infants. One of her first studies (Chang and Trehub, 1977a) used a clever experiment to investigate whether infants could learn melodies. They created a novel six-tone melody and played it fifteen times to a group of 5-month-old infants while measuring heart rate. When the infants first heard the melody their heart rate would rise, indicating that they were experiencing a novel event, but after a while the heart rate settled to a stable lower rate, showing that the babies had habituated to the melody. The infants were then given one of three different melodies to listen to: (i) the control melody, which was exactly the same as the one they had habituated to, (ii) the same melody but played in a different key, i.e. exactly the same pattern of notes but starting on a higher or lower pitch, and (iii) the same set of notes but sequenced differently to make a new melody. They found that the novel melody caused a change in heart rate, whereas the other two melodies didn’t. From this, Chang and Trehub were able to show that the infants had learnt the melody and were able to recognise it when it changed. Crucially, the babies were responding to changed relationships between the notes, as opposed to any absolute change in pitch, a sign that this was a genuine musical recognition.
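The distinction between a change of key and a change of melody can be made concrete with a minimal sketch. It assumes notes are written as MIDI note numbers (one unit per semitone); the six-note melody and the helper names `intervals` and `transpose` are hypothetical illustrations, not the stimuli or analysis used by Chang and Trehub.

```python
def intervals(melody):
    """Return the semitone steps between successive notes."""
    return [b - a for a, b in zip(melody, melody[1:])]


def transpose(melody, semitones):
    """Shift every note by the same number of semitones (a change of key)."""
    return [note + semitones for note in melody]


# Hypothetical six-tone melody (MIDI note numbers), for illustration only.
original = [60, 64, 62, 67, 65, 69]

# Condition (ii): same pattern of notes, starting on a higher pitch.
transposed = transpose(original, 3)

# Condition (iii): the same set of notes, sequenced differently.
reordered = [60, 62, 64, 65, 67, 69]

# Every absolute pitch changes under transposition, but the relationships
# between successive notes do not; reordering changes those relationships.
same_relations_new_key = intervals(transposed) == intervals(original)
same_relations_reordered = intervals(reordered) == intervals(original)
```

In this sketch the transposed version shares its interval pattern with the original even though no individual pitch is the same, whereas the reordered version uses identical pitches but a different interval pattern. This mirrors the logic of the experiment: a response to the reordered melody but not to the transposed one points to sensitivity to relationships between notes.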

Chang and Trehub (1977b) used the same technique to show that babies of a similar age were also able to learn basic six-note rhythms. Later work by Trehub’s team investigated musical knowledge by applying a listening behaviour paradigm, where babies and children were trained to respond to new musical sequences using reward schedules. Using this technique she has been able to show, not only that we are able to learn melodies and rhythms at a very young age, but also that ‘good’ melodies that fit the rules of typical Western music are easier for infants to learn than ‘bad’ melodies (Trehub et al., 1990).

More recent studies have suggested that even newborns are able to recognise melodies and rhythm. Granier-Deferre et al. (2011) carried out a controlled study where foetuses were exposed to a descending piano melody twice a day during the 35th, 36th and 37th weeks of pregnancy. Six weeks later they played this melody back to the babies when they were asleep, as well as a matched ascending melody. Heart rate measurements were significantly different for the melody they had heard in the womb compared with the new melody, whereas control infants did not show this distinction. Similarly, Winkler and his colleagues (2009) were able to use an EEG paradigm to show that newborns can detect rhythm.

There is little doubt, then, that musical skills kick in at a very young age and seem to be instinctive, which is not surprising when we consider how much of our linguistic communication depends on recognition of patterns in pitch, timbre and loudness. Panksepp and others argue that music is a vital element of pre- and para-linguistic communication (Panksepp and Bernatzky, 2002): think of the soothing musical sounds a parent uses to calm a distressed child, or the contrasting sounds that are used when a child is misbehaving, not to mention other obvious musical vocalisations such as screaming, laughing and crying.

Figure 13.5 Babies are able to learn rhythms and melodies at an early age.

Source: copyright Ipatov/Shutterstock.com.

It is outside the scope of this chapter to provide a comprehensive review of musical development, but overall, findings suggest that during the enculturation period, awareness of emotional content, style and genre becomes increasingly sophisticated (Sloboda, 1985). Importantly, it seems that, as with language, children are able to use musical rules about harmony and structure in producing and perceiving music before they are able to explicitly recognise when these rules are violated, but that by the age of 12-13 they are as good as adults at detecting when something doesn’t sound right (Sloboda, 1985).

DEVELOPING MUSICAL EXPERTISE

During our childhood, we all acquire the basic musical skills needed to recognise songs. We can sing ‘Happy Birthday’, dance or tap our feet in time, or indeed be emotionally moved by a piece of music - we become enculturated. However, as with many other activities, such as football, drawing, mathematics, chess, even computer games, a subset of the population will go on to develop more specific skills and indeed some will become world-leading experts. In music, we tend to think of the real stars as being brilliant performers - the Michael Jacksons and Nigel Kennedys of this world - or even brilliant composers such as Ludwig van Beethoven or Hans Zimmer. But music is a multidimensional skill and there is just as much musical expertise in many of our best conductors, sound engineers, music producers and music critics. However, musical proficiency doesn’t come easily or quickly to anyone. A famous landmark study by Ericsson et al. (1993) found that it took a minimum of 10,000 hours of practice over 10 years to become an expert violinist or pianist.

So what is the secret recipe to becoming a brilliant musician, and is it something anyone can achieve with enough practice? This is a matter of huge debate, with some (e.g. Sloboda, 2005) arguing vehemently that there is no such thing as musical genius, and that anyone can become a great master given the right conditions and opportunities. Others (e.g. Mosing et al., 2014) offer evidence that refutes this, suggesting instead that innate talent is a prerequisite.

In my experience, everyone has quite a strong opinion on this, but what none of the theorists would contest is the fact that musical brilliance requires time and effort - this is Sloboda’s training period, where a subset of the enculturated individuals purposefully work on developing their musical expertise. Over the years, many different learning theories have been applied to the development of musical expertise. Each of these offers relevant and helpful perspectives, but from a practical psychological point of view they all boil down to proficiency in two key domains: listening and technical skill.

Let us look first at what is entailed in developing the physical skills needed to be a first-class electric guitar player or a brilliant pianist. Just like mastering any other coordinated movement - learning to walk, drive a car, play tennis - this requires the strengthening of specific muscle groups and the encoding of complex motor sequences in the brain. There are three vital prerequisites for this type of learning: ‘motivation’, ‘repetition’ and ‘feedback’. So first, an individual must have motivation, i.e. they must feel a desire to play an instrument and want to engage with the activity. This may be an intrinsic motivation, where someone is internally driven, maybe because of an inherent love of music, or it may be more of an extrinsic motivation where they want to learn because they anticipate that it may lead to some material or social reward.

Repetition, or practice, is probably the most obvious aspect of any learning. For the muscles to get stronger they need to be worked, and for the brain to store the memory of specific motor sequences the relevant neuronal pathways need to be stimulated over and over again. One of the big questions in music education is how to practise effectively, and this is where a good teacher can be helpful. For example, it is very common for a beginner to go back to the start of a piece each time they make a mistake. The trouble is that, more often than not, they will make the same mistake and then repeat the cycle of going back to the beginning and making the same mistake again. In other words, they are effectively encoding the mistake! A far better strategy is to play through the notes in a slow but error-free way so that the correct neural pathways are being stimulated. The pace can then be increased as the task gets easier. A good teacher will also make sure that a student can play from different starting points and not just the beginning; this is a sign that they have developed multiple representations of the music and makes for a more secure performance.

As Sloboda (1985) points out, the ultimate goal may be to be a brilliant player, but this will only be attained via many subgoals, starting with the simple goal of playing a single note and gradually building up to higher-level goals, such as playing sequences of notes, then being able to play the same sequence in different styles etc. In psychological terms, much of this process is about turning conscious declarative memories (knowing how to perform these actions) into automatic procedural memories (being able to perform these actions), which then frees up working memory to deal with higher goals that give the music more finesse. The same memory tricks we use in perception are also relevant here, because we learn how to chunk particular motor sequences together and these can then be stored and retrieved more efficiently, hence the value of scales and exercises.

The final, but equally vital, component of skill learning is accurate and timely feedback. Given that learning happens by repeating an action, or sequence of actions, it is important to know if it has been performed correctly. This can be difficult for the inexperienced performer, especially when they are focusing so heavily on the mechanics of creating the sound, and once again this is where a teacher may help, as they are able to provide useful feedback about errors and can also boost motivation through fitting praise. To become an expert performer, though, it is essential that a person learns how to listen to themselves, to know whether what they are doing is in tune and in time, has a nice timbre, and importantly whether it expresses the music in a way that is moving and connects with the listener.

So, we have seen that many hours of practice - of motivation, repetition and feedback - are needed for someone to master an instrument, but this is still only half the story. Equally important is the development of listening, or aural, skills, not only for that vital self-feedback but also because this enables an individual to recognise and learn the ‘dictionary of expressions’ used by performers and composers to ensure that the music communicates effectively (Sloboda, 2005). This includes devices such as slowing down/speeding up, changing volume, emphasising particular notes or altering the timbre, all of which provide meaning about structure and are a vital part of making music emotive and meaningful (Bhatara et al., 2011). Music played without these nuances sounds very unemotional and is often described as ‘robotic’ or ‘wooden’, in much the way that language does when speech lacks prosody.

An aspiring performer therefore needs to listen to enough music to learn the rules of expression, but must also practise enough to acquire the technical dexterity to realise these aims. A final skill that can be useful and is sometimes essential, particularly for classical performers, is that of reading notation. Unlike language, where we learn to speak a good few years before we learn to read, most musicians are taught to read music at the same time as they are taught to play, and this can create a heavy load on working memory, which often means that note-reading skills suffer (Sloboda, 1985). As with technical and listening skills, most studies show that repetition and the use of chunking and other cognitive strategies are important aspects of successful sight-reading (e.g. Pike and Carter, 2010). It has also been shown that sight-reading expertise is predicted by someone’s ability to hear the music inwardly, as well as generic cognitive skills such as processing speed (Kopiez and In Lee, 2008).

Of course, it is possible to become an expert musician with strong development in one but not all of these areas. A neuroimaging study by Münte et al. (2006) demonstrated very clearly that different types of musicians develop different strengths and use different areas of their brain. Our best music producers and conductors will not necessarily be performers, but their aural skills will be extremely well developed, and some of our greatest pop stars, and indeed musicians from other cultures, may not be able to read music at all but are still able to put on a magical performance. Composing is another distinct area of expertise, and while it requires many of the above skills and many hours of practice, there are other important factors that have been identified, including ‘belief in self’, ‘unwillingness to accept the first solution as the best’ and the presence of ‘supportive but searching critics’.

Let us return briefly to that thorny question of whether absolutely anyone has the potential to become a brilliant musician. We have seen that motivation is a key factor, and there is good evidence that some people are genetically more driven to engage with music (e.g. Mosing et al., 2014; Hambrick and Tucker-Drob, 2014); likewise, maybe some people have a more highly developed auditory cortex or a better working memory (Janata et al., 2002), and there is good evidence that pitch perception and musical aptitude have a genetic basis (Gingras et al., 2015). But as with all nature-nurture debates, it is virtually impossible to separate environment from genetic predisposition (although see Levitin, 2012 for an excellent discussion). Undoubtedly, some physical, genetically defined characteristics are likely to have an impact, especially on performance, but the more intangible characteristics such as engagement and auditory perceptual acuity are so susceptible to plasticity, even from before birth, that their origins are incredibly difficult to determine empirically.

13.4 MUSIC AND LANGUAGE

The opening lines of ‘Sir Duke’ by Stevie Wonder claim that music is a language that we can all understand, but is this really true? Most people would agree that music is a powerful form of communication, and indeed, this is the reason that music is used so successfully as a therapy in children with autism and other communication disorders (see Geretsegger et al., 2014 for a review). But what does music communicate? And does it communicate the same thing to all of us? In this section we will consider these two basic questions: whether music can be described as a language and whether it communicates universally.

It is not difficult to see the many similarities between music and language. At a very fundamental level, both language and music are produced and received via the auditory-vocal systems, meaning that they use shared physical structures such as the ears and vocal cords, and there is significant overlap in the neural mechanisms involved in processing music and speech (e.g. Sammler et al., 2009; Peretz et al., 2015). As we have seen in the previous section, there are also a number of developmental parallels, for example the natural ability to learn simply through passive exposure and the fact that our receptive skills precede our productive skills, i.e. we are able to understand the rules of music and language long before we are able to apply them. As Sloboda (1985) points out, another similarity lies in our ability to continually generate novel sequences of both notes and words. He also highlights the fact that most cultures have developed methods for recording both speech and music in a written form, using symbolic representation that denotes not just the notes and words but many other elements of expression, such as pauses, boundaries and grouping markers.

Probably the most complex but important parallel between music and language is the notion that they both have an underlying grammar (Lerdahl and Jackendoff, 1983) and they rely on internal psychological representations that we can describe in terms of ‘phonology’, ‘syntax’ and ‘semantics’. Sloboda provides an in-depth discussion on this (Sloboda, 1985, 2005), but it is worth summarising here. Phonology relates to the way in which we categorise sounds into discrete, identifiable, units. In language these phonemes refer to the building blocks we use to make words, e.g. ‘t’, ‘ea’, ‘ch’ etc., while in music these are the notes. Studies have shown that we use very similar perceptual processes to distinguish between different sounds, e.g. ‘ch’ and ‘sh’, and different notes, e.g. C and C sharp (Locke and Kellar, 1973).

Syntax concerns the rules that govern how these sounds are put together so that they can effectively convey an intended meaning, such as the construction of sentences or melodies. In both cases, it seems that it is easier to remember sequences that follow convention, i.e. conform to the syntactic rules (Sloboda, 2005, p. 179). Finally, semantics is a term used to describe the meaning of a musical or linguistic form - the underlying thought, object or event that is being represented. Despite these many parallels between music and language, this is one domain where there may be a fundamental difference. While music may communicate something and can do so very powerfully, the meaning is intangible, ambiguous and difficult to pass on to someone else. Language, on the other hand, has very specific and concrete meaning, and we would have little problem passing on the general gist of something that has been spoken or read.

Sloboda (2005) notes another key difference between language and music: speech tends to be asynchronous and alternating - one person says something and another replies and so it goes on - while music, on the whole, is a synchronised activity with performers making sounds together, in time with each other. The extent of this synchronicity was illustrated in a fascinating study by Lindenberger et al. (2009), who used EEG measurements to show that the neural activity of two guitarists became coordinated when they played a duet together.

So, to come back to our original question, can we describe music as a language? Clearly there are many parallels in the way they are learnt, produced, received and represented, and there is no doubt that both are used as a way of communicating and connecting with other human beings. It is also indisputable that music and language are inextricably linked, in that melody, pitch and rhythm are an important aspect of speech, and music is often accompanied by words. However, there are many theories, definitions and philosophies of language, and whether music can truly be described as a language ultimately depends on from which of these perspectives the question is viewed (see Sawyer, 2005 for a detailed discussion on this).

So there seems to be a good consensus that music does communicate, albeit in a different way from language, but can it transcend cultural and generational boundaries? Music differs drastically from age to age, culture to culture and subculture to subculture. In fact, it is often a key feature by which a group defines itself, so at first glance the obvious answer to this would be ‘no’! Someone who has spent their life listening to Beethoven and Bach may find it hard to relate to a 70s punk band or a piece of Indian Raga - not only are the melodies, harmonies and rhythms worlds apart, they also use different instruments, scales, voices etc. There are some musical universals, which include the tendency for a regular beat and the fact that most music takes place within a fixed set of reference pitches. There also seems to be an innate tendency to respond to changes in volume and tempo (Dalla Bella et al., 2001). Most work relating to music and emotion has focused on Western music, but there is a recent move to consider other cultures and importantly to look at whether music communicates the same emotions to different cultures (see Mathur et al., 2015). A useful parallel might be comedy: some actions and sounds will be universally funny to most people (e.g. slapstick comedy), but as soon as the humour relies on knowledge of the culture or language, it will have limited appeal. The same principle could be said to be true of music.

13.5 MUSIC AND LONG-TERM MEMORY

Many things can take us back to an earlier point in our lives - particular smells, photos, conversations with our friends or relatives and so on - but music seems to be a particularly powerful cue, flooding our minds with feelings and thoughts that are sometimes vivid and clear, other times intangible and indescribable, but nearly always hugely moving. Song writers and poets also capture the special resonance and sentimentality of songs from our teenage and early adult lives.

There are many reasons why the empirical study of musical memories is important, not least because it provides an interesting and accessible way of investigating the fundamental cognitive and neural mechanisms of memory. For example, we can learn a lot about the nature of involuntary memories as well as the ruminative cognitions that underlie earworms, not to mention memory across the lifespan, associative and cue-based memory, the effects of implicit memories and the overlap between remembering and imagining. There are also a number of very practical applications, with music increasingly being used as a reminiscence tool in various therapies, and the commercial and cultural value it has when used in advertisements and films.

REMEMBERING AND IMAGINING MUSIC

Let me ask you to pause for a moment and reflect on whether you have music in your head right now. If so, what is it and do you know why it is there? An experience-sampling study we have just carried out (Loveday and Conway, in prep.) suggests that on average people have what we call ‘inner music’ around 45 per cent of the time! Studies using alternative paradigms have found this to be a little lower (Bailes, 2006) and there are many influencing factors. Nevertheless, it is a reflection of how strong and powerful musical memory can be. Research on inner music has really taken off in the past 10 years, but the main focus has been on the phenomenon of ‘earworms’ (e.g. Halpern and Bartlett, 2011). This is where people get a song, or more likely a short section of a song, stuck in their head, going round on an endless loop. Around 90 per cent of people experience this, and, contrary to popular belief, diary studies find that most of the time people are not bothered by it (Williamson et al., 2014), although they are more likely to notice, report and complain about the moments when it becomes annoying.

The fact that we can easily imagine our favourite tunes suggests that we are good at storing them, but as Williamson (2014, p. 179) points out, it takes time to build up a musical memory. Halpern and Müllensiefen (2008) played forty new tunes to a group of sixty-three undergraduates and found that their recognition memory was not much above chance; and as you may recall from earlier in this chapter, Sloboda and Parker’s study (1985) found that no one was able to exactly recall a tune even after they had heard it six times.

However, some things seem to make music stick more easily, for example we are better at remembering music we like (Eschrich et al., 2008) as well as music that contains the human voice (Weiss et al., 2012). And once we do form a musical memory it seems to be very powerful and robust. Krumhansl (2010) played her participants exceptionally short, 400 ms, snippets of popular music and found that they were able to identify the artist and title on more than 25 per cent of the clips. Where they were not able to recognise the song, they often reported a consistent emotional response and/or were able to ascertain the style or decade. A well-known study by Levitin (1994) has also found that we store long-term memories of music so accurately that even non-musicians can reliably sing the starting note at exactly the right pitch, and this has subsequently been supported by other work (Frieler et al., 2013).

Before considering the value and purpose of long-term musical memories, it is worth noting that most music memory research uses measures of explicit memory, where participants are asked to consciously identify a piece of music or the circumstances in which it was previously encountered. However, a number of researchers have stressed the importance of studying implicit musical memory, which has been shown to be distinct from explicit musical memory and is dependent on different brain structures (Samson and Peretz, 2005). This has implications for people with amnesia and dementia, as well as being relevant for people who may use music to influence behaviour and perception, for example in films, advertising and commercial settings. It is also particularly relevant for theories of music and emotion (see Jäncke, 2008 for a review).

THE MUSICAL REMINISCENCE BUMP

In 1941, Roy Plomley, a broadcaster for BBC Radio, had what turned out to be a brilliant idea for a new programme. It involved interviewing celebrities and asking them to choose eight pieces of music they would take with them if they were stranded on an island. ‘Desert Island Discs’ was first transmitted in 1942 and, with the exception of a break between 1946 and 1951, it has been running ever since. It is regarded as one of Radio 4’s most successful programmes, with around 3 million listeners a week (Hodgson, 2014). What makes this programme so appealing is the way in which the music choices provide such a natural and personal insight into the lives of those being interviewed. When asked why they have chosen a piece of music, interviewees very often cite a significant moment or period of time in their lives, or they may say it reminds them of a particular place or person.

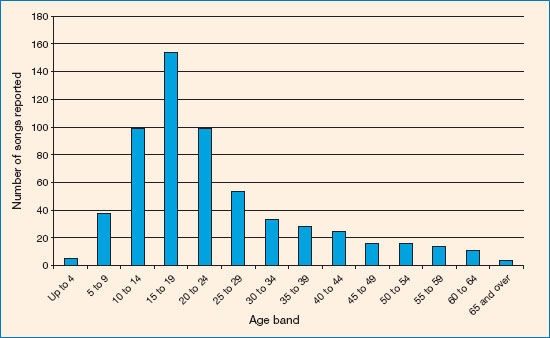

What is also interesting is that when older people are asked to choose music that is important or significant for them, they have a bias towards choosing music from their adolescence or early 20s. In autobiographical memory research, this period is commonly referred to as the reminiscence bump, the period of our past lives for which we seem to have the richest and most emotionally powerful memories (see Chapter 7).

Figure 13.6 The reminiscence bump for music.

Source: data from Loveday and Conway (in prep.).

We have investigated the reminiscence bump for music, in a study where we asked sixty participants over the age of 35 to select ten pieces of music that they felt were particularly important to them (Loveday et al., in prep.). We also asked people to state when they had first been listening to that piece of music and why they had chosen it. We found a clear reminiscence bump (see Figure 13.6), although interestingly the peak was at 15-19, which is younger than has been found for other stimuli, such as books, films or footballers (Janssen et al., 2012). Furthermore, when we compared the musicians and non-musicians in this group, the reminiscence bump for musicians was significantly larger. Given that the reminiscence bump has been strongly linked with developing a sense of self, these findings suggest to us that music plays an early role in identity formation and that this effect is particularly strong for people who become musicians.
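The way such a bump is identified can be sketched as a simple tally, assuming each chosen piece is coded by the participant's age when they first listened to it and that ages are grouped into 5-year bands (consistent with the 15-19 peak reported above). The function names and the toy ages below are hypothetical and are not data from the study.

```python
from collections import Counter


def age_band(age, width=5):
    """Label the band containing `age`, e.g. 17 -> '15-19' for width 5."""
    start = (age // width) * width
    return f"{start}-{start + width - 1}"


def bump_profile(ages_at_first_listening, width=5):
    """Count how many chosen pieces fall into each age band."""
    return Counter(age_band(age, width) for age in ages_at_first_listening)


def peak_band(profile):
    """Return the age band with the most chosen pieces."""
    return max(profile, key=profile.get)


# Toy input, invented purely to illustrate the tally (not study data).
toy_ages = [8, 14, 15, 16, 17, 17, 18, 19, 22, 24, 31, 45]
profile = bump_profile(toy_ages)
peak = peak_band(profile)
```

Plotting the counts in `profile` against age band would give a curve of the kind shown in Figure 13.6; comparing profiles computed separately for two groups is one simple way to ask whether the bump is larger in one group than the other, although the published comparison may have used a different method.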

A number of other studies have looked at the reminiscence bump for music using methodologies where, instead of asking people to select songs, participants are provided with songs from different periods and asked to recognise or make decisions about them. Holbrook and Schindler (1989) found that we have lifelong preferences for songs from the reminiscence period, while Cady et al. (2008) found that memories stimulated by music from childhood and the reminiscence period were higher in specificity, vividness and emotionality. A particularly fascinating recent study by Krumhansl and Zupnick (2013) looked at music preferences in 20-year-olds and found what they called ‘cascading reminiscence bumps’, where music preferences peaked for the period of time that matched their parents’ reminiscence period and even that of their grandparents.

MUSIC IN MEMORY-IMPAIRED GROUPS

While there is a lot more research still needed on why music is so strongly linked with our most significant lifetime memories, it seems that there is potential for applying these findings to real-life applications, such as reminiscence therapy in people with dementia and amnesia. There is some evidence that musical memory may be disproportionately spared in people with amnesia. For example, Baur et al. (2000) describe an amnesic patient who was able to learn a new instrument and to learn tunes from memory, as well as being able to name them. Other people have reported similar case studies (e.g. Haslam and Cook, 2002; Fornazzari et al., 2006), and probably the most well-known example is Clive Wearing, a professional musician with an exceptionally dense amnesia, who is nevertheless able to play and enjoy the music he loved and to conduct his choir. I had the great pleasure of meeting and singing with Clive a few years ago, and his passion for music and ability to engage with it was very evident.

There is also a popular belief that music is preserved in people with severe dementia, although, as Baird and Samson (2015) point out, there has been limited rigorous empirical investigation to examine whether this is true. An interesting case study by Cuddy and Duffin (2005) showed that a woman, EN, with very severe dementia, sang along to familiar tunes and ignored novel tunes. She was also able to start humming a tune after she had heard familiar lyrics spoken. Despite having very little language, EN also gave non-verbal indications that she was uncomfortable listening to music that had wrong notes in it. There are also a number of studies that have shown that patients with mild-moderate Alzheimer’s disease perform better on tests of recall and autobiographical memory when they are listening to music, compared with sitting in silence (Foster and Valentine, 2001; El Haj et al., 2012), although it is unclear whether this is because the music stimulates their memory or simply relaxes them. Given that music is a powerful modulator of emotion, it is important to exercise some caution in the use of music with people who have memory difficulties, especially those who have lost the ability to express their views and preferences. Nevertheless, music may provide some general cognitive enhancement, and more importantly does seem to provide a means to connect people with their past and a communicative channel with their carers and loved ones.

Figure 13.7 Can music stimulate memory in patients with dementia?

Source: copyright GWImages/Shutterstock.com.

SUMMARY

✵ We hear notes that differ in pitch, timbre and volume and can be spatially located. We then use these clues to tie groups of notes together into coherent instruments and sequences, which give rise to rhythm, melody and harmony.

✵ Our attention tends to be drawn to a melodic line but the harmonic structure provides context and emotional colouring.

✵ Every moment in music only becomes meaningful in relation to what has come before, so memory is crucial. We are able to store the general gist of a piece of music and, in addition, melodic, harmonic and rhythmic sequences become chunked together into meaningful units, which are then held in mind or stored using tricks such as repetition and labelling.

✵ Musical development can be divided into two distinct phases, which may overlap to some extent. Enculturation is the passive and spontaneous acquisition of musical skill that occurs during the first 10 years of life; training is the self-conscious development of specialised musical skills.

✵ There is much debate about the extent to which musical expertise depends on nature or nurture.

✵ Music has a lot in common with language and is widely accepted as a form of communication, but it does not convey concrete, specific meaning.

✵ Musical memories are not formed as quickly as many believe, but once formed, these memories are very robust and emotionally powerful. They are often strongly linked to our autobiographical memories and may be useful cues for people with amnesia or dementia.

FURTHER READING

✵ Ball, P. (2010). The music instinct: How music works and why we can’t do without it. New York: Random House.

✵ Levitin, D. J. (2011). This is your brain on music: Understanding a human obsession. New York: Atlantic Books.

✵ Sloboda, J. (2005). Exploring the musical mind: Cognition, emotion, ability, function. Oxford: Oxford University Press.