Superintelligence: Paths, Dangers, Strategies - Nick Bostrom (2014)

Chapter 14. The strategic picture

It is now time to consider the challenge of superintelligence in a broader context. We would like to orient ourselves in the strategic landscape sufficiently to know at least which general direction we should be heading. This, it turns out, is not at all easy. Here in the penultimate chapter, we introduce some general analytical concepts that help us think about long-term science and technology policy issues. We then apply them to the issue of machine intelligence.

It can be illuminating to make a rough distinction between two different normative stances from which a proposed policy may be evaluated. The person-affecting perspective asks whether a proposed change would be in “our interest”—that is to say, whether it would (on balance, and in expectation) be in the interest of those morally considerable creatures who either already exist or will come into existence independently of whether the proposed change occurs or not. The impersonal perspective, in contrast, gives no special consideration to currently existing people, or to those who will come to exist independently of whether the proposed change occurs. Instead, it counts everybody equally, independently of their temporal location. The impersonal perspective sees great value in bringing new people into existence, provided they have lives worth living: the more happy lives created, the better.

This distinction, although it barely hints at the moral complexities associated with a machine intelligence revolution, can be useful in a first-cut analysis. Here we will first examine matters from the impersonal perspective. We will later see what changes if person-affecting considerations are given weight in our deliberations.

Science and technology strategy

Before we zoom in on issues specific to machine superintelligence, we must introduce some strategic concepts and considerations that pertain to scientific and technological development more generally.

Differential technological development

Suppose that a policymaker proposes to cut funding for a certain research field, out of concern for the risks or long-term consequences of some hypothetical technology that might eventually grow from its soil. She can then expect a howl of opposition from the research community.

Scientists and their public advocates often say that it is futile to try to control the evolution of technology by blocking research. If some technology is feasible (the argument goes) it will be developed regardless of any particular policymaker’s scruples about speculative future risks. Indeed, the more powerful the capabilities that a line of development promises to produce, the surer we can be that somebody, somewhere, will be motivated to pursue it. Funding cuts will not stop progress or forestall its concomitant dangers.

Interestingly, this futility objection is almost never raised when a policymaker proposes to increase funding to some area of research, even though the argument would seem to cut both ways. One rarely hears indignant voices protest: “Please do not increase our funding. Rather, make some cuts. Researchers in other countries will surely pick up the slack; the same work will get done anyway. Don’t squander the public’s treasure on domestic scientific research!”

What accounts for this apparent doublethink? One plausible explanation, of course, is that members of the research community have a self-serving bias which leads us to believe that research is always good and tempts us to embrace almost any argument that supports our demand for more funding. However, it is also possible that the double standard can be justified in terms of national self-interest. Suppose that the development of a technology has two effects: giving a small benefit B to its inventors and the country that sponsors them, while imposing an aggregately larger harm H, which could be a risk externality, on everybody. Even somebody who is largely altruistic might then choose to develop the overall harmful technology. They might reason that the harm H will result no matter what they do, since if they refrain somebody else will develop the technology anyway; and given that total welfare cannot be affected, they might as well grab the benefit B for themselves and their nation. (“Unfortunately, there will soon be a device that will destroy the world. Fortunately, we got the grant to build it!”)
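The altruist's reasoning can be made concrete with a small sketch. The function name and the magnitudes chosen for B and H below are illustrative assumptions, not figures from the text; the only structure taken from the argument is that B accrues to the developer alone while H falls on everybody if anyone develops the technology.

```python
def total_welfare(we_develop: bool, others_develop: bool,
                  benefit: float, harm: float) -> float:
    """Welfare as the altruist sees it: B accrues only if we develop,
    while H falls on everybody if anyone at all develops."""
    harm_occurs = we_develop or others_develop
    return (benefit if we_develop else 0.0) - (harm if harm_occurs else 0.0)


# Hypothetical magnitudes: a small benefit B and a much larger harm H.
B, H = 1.0, 10.0

for others_develop in (True, False):
    develop = total_welfare(True, others_develop, B, H)
    refrain = total_welfare(False, others_develop, B, H)
    print(f"others develop={others_develop}: develop={develop}, refrain={refrain}")
```

If others are certain to develop the technology, developing yields -9.0 against -10.0 for refraining, so grabbing B looks rational. If others would in fact refrain, developing yields -9.0 against 0.0. The conclusion therefore rests entirely on the belief that somebody else will build it anyway.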

Whatever the explanation for the futility objection’s appeal, it fails to show that there is in general no impersonal reason for trying to steer technological development. It fails even if we concede the motivating idea that with continued scientific and technological development efforts, all relevant technologies will eventually be developed—that is, even if we concede the following:

Technological completion conjecture

If scientific and technological development efforts do not effectively cease, then all important basic capabilities that could be obtained through some possible technology will be obtained.1

There are at least two reasons why the technological completion conjecture does not imply the futility objection. First, the antecedent might not hold, because it is not in fact a given that scientific and technological development efforts will not effectively cease (before the attainment of technological maturity). This reservation is especially pertinent in a context that involves existential risk. Second, even if we could be certain that all important basic capabilities that could be obtained through some possible technology will be obtained, it could still make sense to attempt to influence the direction of technological research. What matters is not only whether a technology is developed, but also when it is developed, by whom, and in what context. These circumstances of birth of a new technology, which shape its impact, can be affected by turning funding spigots on or off (and by wielding other policy instruments).

These reflections suggest a principle that would have us attend to the relative speed with which different technologies are developed:2

The principle of differential technological development

Retard the development of dangerous and harmful technologies, especially ones that raise the level of existential risk; and accelerate the development of beneficial technologies, especially those that reduce the existential risks posed by nature or by other technologies.

A policy could thus be evaluated on the basis of how much of a differential advantage it gives to desired forms of technological development over undesired forms.3

Preferred order of arrival

Some technologies have an ambivalent effect on existential risks, increasing some existential risks while decreasing others. Superintelligence is one such technology.

We have seen in earlier chapters that the introduction of machine superintelligence would create a substantial existential risk. But it would reduce many other existential risks. Risks from nature—such as asteroid impacts, supervolcanoes, and natural pandemics—would be virtually eliminated, since superintelligence could deploy countermeasures against most such hazards, or at least demote them to the non-existential category (for instance, via space colonization).

These existential risks from nature are comparatively small over the relevant timescales. But superintelligence would also eliminate or reduce many anthropogenic risks. In particular, it would reduce risks of accidental destruction, including risk of accidents related to new technologies. Being generally more capable than humans, a superintelligence would be less likely to make mistakes, and more likely to recognize when precautions are needed, and to implement precautions competently. A well-constructed superintelligence might sometimes take a risk, but only when doing so is wise. Furthermore, at least in scenarios where the superintelligence forms a singleton, many non-accidental anthropogenic existential risks deriving from global coordination problems would be eliminated. These include risks of wars, technology races, undesirable forms of competition and evolution, and tragedies of the commons.

Since substantial peril would be associated with human beings developing synthetic biology, molecular nanotechnology, climate engineering, instruments for biomedical enhancement and neuropsychological manipulation, tools for social control that may facilitate totalitarianism or tyranny, and other technologies as-yet unimagined, eliminating these types of risk would be a great boon. An argument could therefore be mounted that earlier arrival dates of superintelligence are preferable. However, if risks from nature and from other hazards unrelated to future technology are small, then this argument could be refined: what matters is that we get superintelligence before other dangerous technologies, such as advanced nanotechnology. Whether it happens sooner or later may not be so important (from an impersonal perspective) so long as the order of arrival is right.

The ground for preferring superintelligence to come before other potentially dangerous technologies, such as nanotechnology, is that superintelligence would reduce the existential risks from nanotechnology but not vice versa.4 Hence, if we create superintelligence first, we will face only those existential risks that are associated with superintelligence; whereas if we create nanotechnology first, we will face the risks of nanotechnology and then, additionally, the risks of superintelligence.5 Even if the existential risks from superintelligence are very large, and even if superintelligence is the riskiest of all technologies, there could thus be a case for hastening its arrival.
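The order-of-arrival argument can be stated in two lines. This is a simplified sketch: it assumes the two risks are independent, that each is a fixed probability, and that a superintelligence arriving first removes the nanotechnology risk entirely. The symbols r_S and r_N are introduced here for illustration.

```latex
% r_S: existential risk of the superintelligence transition (assumed constant)
% r_N: existential risk from nanotechnology arriving without superintelligence
\begin{align*}
P(\text{survive} \mid \text{superintelligence first}) &= 1 - r_S \\
P(\text{survive} \mid \text{nanotechnology first})    &= (1 - r_N)(1 - r_S) \;\le\; 1 - r_S
\end{align*}
```

The inequality holds for any value of r_S, which is why the case for superintelligence first does not depend on superintelligence being the less risky technology.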

These “sooner-is-better” arguments, however, presuppose that the riskiness of creating superintelligence is the same regardless of when it is created. If, instead, its riskiness declines over time, it might be better to delay the machine intelligence revolution. While a later arrival would leave more time for other existential catastrophes to intercede, it could still be preferable to slow the development of superintelligence. This would be especially plausible if the existential risks associated with superintelligence are much larger than those associated with other disruptive technologies.
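Under simplifying assumptions, the condition for delay to be worthwhile can be written down directly. Let r_S^early and r_S^late be the risk of the superintelligence transition at an early and a late date, and let r_O be the chance that some other existential catastrophe intercedes during the wait. These symbols, and the assumption that the risks are independent, are ours.

```latex
% Delay is preferable when survival via the late path exceeds survival via the early path.
\begin{align*}
(1 - r_O)\,\bigl(1 - r_S^{\text{late}}\bigr) &> 1 - r_S^{\text{early}} \\
\Longleftrightarrow\quad
r_S^{\text{early}} - r_S^{\text{late}} &> r_O\,\bigl(1 - r_S^{\text{late}}\bigr)
\end{align*}
```

In words: the reduction in superintelligence risk bought by waiting must exceed the risk incurred while waiting, discounted by the chance of surviving the later transition.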

There are several quite strong reasons to believe that the riskiness of an intelligence explosion will decline significantly over a multidecadal timeframe. One reason is that a later date leaves more time for the development of solutions to the control problem. The control problem has only recently been recognized, and most of the current best ideas for how to approach it were discovered only within the past decade or so (and in several cases during the time that this book was being written). It is plausible that the state of the art will advance greatly over the next several decades; and if the problem turns out to be very difficult, a significant rate of progress might continue for a century or more. The longer it takes for superintelligence to arrive, the more such progress will have been made when it does. This is an important consideration in favor of later arrival dates—and a very strong consideration against extremely early arrival dates.

Another reason why superintelligence later might be safer is that this would allow more time for various beneficial background trends of human civilization to play themselves out. How much weight one attaches to this consideration will depend on how optimistic one is about these trends.

An optimist could certainly point to a number of encouraging indicators and hopeful possibilities. People might learn to get along better, leading to reductions in violence, war, and cruelty; and global coordination and the scope of political integration might increase, making it easier to escape undesirable technology races (more on this below) and to work out an arrangement whereby the hoped-for gains from an intelligence explosion would be widely shared. There appear to be long-term historical trends in these directions.6

Further, an optimist could expect that the “sanity level” of humanity will rise over the course of this century—that prejudices will (on balance) recede, that insights will accumulate, and that people will become more accustomed to thinking about abstract future probabilities and global risks. With luck, we could see a general uplift of epistemic standards in both individual and collective cognition. Again, there are trends pushing in these directions. Scientific progress means that more will be known. Economic growth may give a greater portion of the world’s population adequate nutrition (particularly during the early years of life that are important for brain development) and access to quality education. Advances in information technology will make it easier to find, integrate, evaluate, and communicate data and ideas. Furthermore, by the century’s end, humanity will have made an additional hundred years’ worth of mistakes, from which something might have been learned.

Many potential developments are ambivalent in the abovementioned sense—increasing some existential risks and decreasing others. For example, advances in surveillance, data mining, lie detection, biometrics, and psychological or neurochemical means of manipulating beliefs and desires could reduce some existential risks by making it easier to coordinate internationally or to suppress terrorists and renegades at home. These same advances, however, might also increase some existential risks by amplifying undesirable social dynamics or by enabling the formation of permanently stable totalitarian regimes.

One important frontier is the enhancement of biological cognition, such as through genetic selection. When we discussed this in Chapters 2 and 3, we concluded that the most radical forms of superintelligence would be more likely to arise in the form of machine intelligence. That claim is consistent with cognitive enhancement playing an important role in the lead-up to, and creation of, machine superintelligence. Cognitive enhancement might seem obviously risk-reducing: the smarter the people working on the control problem, the more likely they are to find a solution. However, cognitive enhancement could also hasten the development of machine intelligence, thus reducing the time available to work on the problem. Cognitive enhancement would also have many other relevant consequences. These issues deserve a closer look. (Most of the following remarks about “cognitive enhancement” apply equally to non-biological means of increasing our individual or collective epistemic effectiveness.)

Rates of change and cognitive enhancement

An increase in either the mean or the upper range of human intellectual ability would likely accelerate technological progress across the board, including progress toward various forms of machine intelligence, progress on the control problem, and progress on a wide swath of other technical and economic objectives. What would be the net effect of such acceleration?

Consider the limiting case of a “universal accelerator,” an imaginary intervention that accelerates literally everything. The action of such a universal accelerator would correspond merely to an arbitrary rescaling of the time metric, producing no qualitative change in observed outcomes.7

If we are to make sense of the idea that cognitive enhancement might generally speed things up, we clearly need some other concept than that of universal acceleration. A more promising approach is to focus on how cognitive enhancement might increase the rate of change in one type of process relative to the rate of change in some other type of process. Such differential acceleration could affect a system’s dynamics. Thus, consider the following concept:

Macro-structural development accelerator—A lever that accelerates the rate at which macro-structural features of the human condition develop, while leaving unchanged the rate at which micro-level human affairs unfold.

Imagine pulling this lever in the decelerating direction. A brake pad is lowered onto the great wheel of world history; sparks fly and metal screeches. After the wheel has settled into a more leisurely pace, the result is a world in which technological innovation occurs more slowly and in which fundamental or globally significant change in political structure and culture happens less frequently and less abruptly. A greater number of generations come and go before one era gives way to another. During the course of a lifespan, a person sees little change in the basic structure of the human condition.

For most of our species’ existence, macro-structural development was slower than it is now. Fifty thousand years ago, an entire millennium might have elapsed without a single significant technological invention, without any noticeable increase in human knowledge and understanding, and without any globally meaningful political change. On a micro-level, however, the kaleidoscope of human affairs churned at a reasonable rate, with births, deaths, and other personally and locally significant events. The average person’s day might have been more action-packed in the Pleistocene than it is today.

If you came upon a magic lever that would let you change the rate of macro-structural development, what should you do? Ought you to accelerate, decelerate, or leave things as they are?

Assuming the impersonal standpoint, this question requires us to consider the effects on existential risk. Let us distinguish between two kinds of risk: “state risks” and “step risks.” A state risk is one that is associated with being in a certain state, and the total amount of state risk to which a system is exposed is a direct function of how long the system remains in that state. Risks from nature are typically state risks: the longer we remain exposed, the greater the chance that we will get struck by an asteroid, supervolcanic eruption, gamma ray burst, naturally arising pandemic, or some other slash of the cosmic scythe. Some anthropogenic risks are also state risks. At the level of an individual, the longer a soldier pokes his head up above the parapet, the greater the cumulative chance he will be shot by an enemy sniper. There are anthropogenic state risks at the existential level as well: the longer we live in an internationally anarchic system, the greater the cumulative chance of a thermonuclear Armageddon or of a great war fought with other kinds of weapons of mass destruction, laying waste to civilization.

A step risk, by contrast, is a discrete risk associated with some necessary or desirable transition. Once the transition is completed, the risk vanishes. The amount of step risk associated with a transition is usually not a simple function of how long the transition takes. One does not halve the risk of traversing a minefield by running twice as fast. Conditional on a fast takeoff, the creation of superintelligence might be a step risk: there would be a certain risk associated with the takeoff, the magnitude of which would depend on what preparations had been made; but the amount of risk might not depend much on whether the takeoff takes twenty milliseconds or twenty hours.
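The contrast between the two kinds of risk can be sketched numerically. The constant hazard rate used for the state risk is a modeling assumption (the text says only that state risk grows with time spent in the state), and all numbers are hypothetical.

```python
import math


def state_risk(hazard_rate: float, years: float) -> float:
    """Cumulative chance of catastrophe after `years` in a state,
    assuming a constant hazard rate per year (an assumption of this sketch)."""
    return 1.0 - math.exp(-hazard_rate * years)


def step_risk(transition_risk: float, duration: float) -> float:
    """Chance of catastrophe from a one-off transition.
    `duration` is deliberately ignored: running faster does not clear the minefield."""
    return transition_risk


HAZARD_RATE = 0.001      # hypothetical annual hazard of the state
TRANSITION_RISK = 0.2    # hypothetical risk of the transition itself

for years in (10, 20):
    print(years,
          round(state_risk(HAZARD_RATE, years), 4),
          step_risk(TRANSITION_RISK, years))
```

Doubling the exposure time roughly doubles a small state risk, while the step risk is unchanged.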

We can then say the following regarding a hypothetical macro-structural development accelerator:

✵ Insofar as we are concerned with existential state risks, we should favor acceleration—provided we think we have a realistic prospect of making it through to a post-transition era in which any further existential risks are greatly reduced.

✵ If it were known that there is some step ahead destined to cause an existential catastrophe, then we ought to reduce the rate of macro-structural development (or even put it in reverse) in order to give more generations a chance to exist before the curtain is rung down. But, in fact, it would be overly pessimistic to be so confident that humanity is doomed.

✵ At present, the level of existential state risk appears to be relatively low. If we imagine the technological macro-conditions for humanity frozen in their current state, it seems very unlikely that an existential catastrophe would occur on a timescale of, say, a decade. So a delay of one decade—provided it occurred at our current stage of development or at some other time when state risk is low—would incur only a very minor existential state risk, whereas a postponement by one decade of subsequent technological developments might well have a significant beneficial impact on later existential step risks, for example by allowing more time for preparation.

Upshot: the main way that the speed of macro-structural development is important is by affecting how well prepared humanity is when the time comes to confront the key step risks.8
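The trade-off in the third point can be illustrated with a toy model. Everything quantitative here is an assumption made for the sketch: a constant hazard rate for the state risk, and a step risk that decays exponentially with years of preparation. Neither functional form comes from the text.

```python
import math


def total_risk(delay_years: float, hazard_rate: float,
               base_step_risk: float, prep_rate: float) -> float:
    """Chance of existential catastrophe if the critical step is postponed.

    State risk accumulates during the delay (constant hazard, assumed);
    step risk shrinks with preparation time (exponential decay, assumed).
    """
    state = 1.0 - math.exp(-hazard_rate * delay_years)
    step = base_step_risk * math.exp(-prep_rate * delay_years)
    return 1.0 - (1.0 - state) * (1.0 - step)


# Hypothetical parameters: low state risk, substantial step risk.
low_hazard = dict(hazard_rate=0.0001, base_step_risk=0.3, prep_rate=0.02)
print(total_risk(0, **low_hazard), total_risk(10, **low_hazard))

# The same delay when state risk is high instead.
high_hazard = dict(hazard_rate=0.05, base_step_risk=0.3, prep_rate=0.02)
print(total_risk(0, **high_hazard), total_risk(10, **high_hazard))
```

With the low hazard rate, a ten-year delay lowers the total risk; with the high hazard rate, the same delay raises it. This matches the proviso that postponement is cheap only when state risk is low.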

So the question we must ask is how cognitive enhancement (and concomitant acceleration of macro-structural development) would affect the expected level of preparedness at the critical juncture. Should we prefer a shorter period of preparation with higher intelligence? With higher intelligence, the preparation time could be used more effectively, and the final critical step would be taken by a more intelligent humanity. Or should we prefer to operate with closer to current levels of intelligence if that gives us more time to prepare?

Which option is better depends on the nature of the challenge being prepared for. If the challenge were to solve a problem for which learning from experience is key, then the chronological length of the preparation period might be the determining factor, since time is needed for the requisite experience to accumulate. What would such a challenge look like? One hypothetical example would be a new weapons technology that we could predict would be developed at some point in the future and that would make it the case that any subsequent war would have, let us say, a one-in-ten chance of causing an existential catastrophe. If such were the nature of the challenge facing us, then we might wish the rate of macro-structural development to be slow, so that our species would have more time to get its act together before the critical step when the new weapons technology is invented. One could hope that during the grace period secured through the deceleration, our species might learn to avoid war—that international relations around the globe might come to resemble those between the countries of the European Union, which, having fought one another ferociously for centuries, now coexist in peace and relative harmony. The pacification might occur as a result of the gentle edification from various civilizing processes or through the shock therapy of sub-existential blows (e.g. small nuclear conflagrations, and the recoil and resolve they might engender to finally create the global institutions necessary for the abolishment of interstate wars). If this kind of learning or adjusting would not be much accelerated by increased intelligence, then cognitive enhancement would be undesirable, serving merely to burn the fuse faster.

A prospective intelligence explosion, however, may present a challenge of a different kind. The control problem calls for foresight, reasoning, and theoretical insight. It is less clear how increased historical experience would help. Direct experience of the intelligence explosion is not possible (until too late), and many features conspire to make the control problem unique and lacking in relevant historical precedent. For these reasons, the amount of time that will elapse before the intelligence explosion may not matter much per se. Perhaps what matters, instead, is (a) the amount of intellectual progress on the control problem achieved by the time of the detonation; and (b) the amount of skill and intelligence available at the time to implement the best available solutions (and to improvise what is missing).9 That this latter factor should respond positively to cognitive enhancement is obvious. How cognitive enhancement would affect factor (a) is a somewhat subtler matter.

Suppose, as suggested earlier, that cognitive enhancement would be a general macro-structural development accelerator. This would hasten the arrival of the intelligence explosion, thus reducing the amount of time available for preparation and for making progress on the control problem. Normally this would be a bad thing. However, if the only reason why there is less time available for intellectual progress is that intellectual progress is speeded up, then there need be no net reduction in the amount of intellectual progress that will have taken place by the time the intelligence explosion occurs.

At this point, cognitive enhancement might appear to be neutral with respect to factor (a): the same intellectual progress that would otherwise have been made prior to the intelligence explosion—including progress on the control problem—still gets made, only compressed within a shorter time interval. In actuality, however, cognitive enhancement may well prove a positive influence on (a).

One reason why cognitive enhancement might cause more progress to have been made on the control problem by the time the intelligence explosion occurs is that progress on the control problem may be especially contingent on extreme levels of intellectual performance—even more so than the kind of work necessary to create machine intelligence. The role for trial and error and accumulation of experimental results seems quite limited in relation to the control problem, whereas experimental learning will probably play a large role in the development of artificial intelligence or whole brain emulation. The extent to which time can substitute for wit may therefore vary between tasks in a way that should make cognitive enhancement promote progress on the control problem more than it would promote progress on the problem of how to create machine intelligence.
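A back-of-the-envelope formulation may help separate the neutral case from the differential one. Suppose, purely for illustration, that progress on the control problem accrues at a constant rate ρ and that the intelligence explosion would otherwise arrive after time T. Let cognitive enhancement speed up control-problem work by a factor k_C and machine-intelligence development by a factor k_M. All of these symbols are assumptions of the sketch.

```latex
% P: control-problem progress achieved by the time of the intelligence explosion
\begin{align*}
P_{\text{baseline}} &= \rho\, T \\
P_{\text{enhanced}} &= (k_C\, \rho)\,\frac{T}{k_M} \;=\; \frac{k_C}{k_M}\; P_{\text{baseline}}
\end{align*}
```

If enhancement accelerates both kinds of work equally (k_C = k_M), progress at the critical moment is unchanged. If control-problem work depends more on wit than on accumulated experiment, so that k_C > k_M, enhancement leaves us better prepared despite the shorter calendar time.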

Another reason why cognitive enhancement should differentially promote progress on the control problem is that the very need for such progress is more likely to be appreciated by cognitively more capable societies and individuals. It requires foresight and reasoning to realize why the control problem is important and to make it a priority.10 It may also require uncommon sagacity to find promising ways of approaching such an unfamiliar problem.

From these reflections we might tentatively conclude that cognitive enhancement is desirable, at least insofar as the focus is on the existential risks of an intelligence explosion. Parallel lines of thinking apply to other existential risks arising from challenges that require foresight and reliable abstract reasoning (as opposed to, e.g., incremental adaptation to experienced changes in the environment or a multigenerational process of cultural maturation and institution-building).

Technology couplings

Suppose that one thinks that solving the control problem for artificial intelligence is very difficult, that solving it for whole brain emulations is much easier, and that it would therefore be preferable that machine intelligence be reached via the whole brain emulation path. We will return later to the question of whether whole brain emulation would be safer than artificial intelligence. But for now we want to make the point that even if we accept this premiss, it would not follow that we ought to promote whole brain emulation technology. One reason, discussed earlier, is that a later arrival of superintelligence may be preferable, in order to allow more time for progress on the control problem and for other favorable background trends to culminate—and thus, if one were confident that whole brain emulation would precede AI anyway, it would be counterproductive to further hasten the arrival of whole brain emulation.

But even if it were the case that it would be best for whole brain emulation to arrive as soon as possible, it still would not follow that we ought to favor progress toward whole brain emulation. For it is possible that progress toward whole brain emulation will not yield whole brain emulation. It may instead yield neuromorphic artificial intelligence—forms of AI that mimic some aspects of cortical organization but do not replicate neuronal functionality with sufficient fidelity to constitute a proper emulation. If—as there is reason to believe—such neuromorphic AI is worse than the kind of AI that would otherwise have been built, and if by promoting whole brain emulation we would make neuromorphic AI arrive first, then our pursuit of the supposed best outcome (whole brain emulation) would lead to the worst outcome (neuromorphic AI); whereas if we had pursued the second-best outcome (synthetic AI) we might actually have attained the second-best (synthetic AI).

We have just described a (hypothetical) instance of what we might term a “technology coupling.”11 This refers to a condition in which two technologies have a predictable timing relationship, such that developing one of the technologies has a robust tendency to lead to the development of the other, either as a necessary precursor or as an obvious and irresistible application or subsequent step. Technology couplings must be taken into account when we use the principle of differential technological development: it is no good accelerating the development of a desirable technology Y if the only way of getting Y is by developing an extremely undesirable precursor technology X, or if getting Y would immediately produce an extremely undesirable related technology Z. Before you marry your sweetheart, consider the prospective in-laws.

In the case of whole brain emulation, the degree of technology coupling is debatable. We noted in Chapter 2 that while whole brain emulation would require massive progress in various enabling technologies, it might not require any major new theoretical insight. In particular, it does not require that we understand how human cognition works, only that we know how to build computational models of small parts of the brain, such as different species of neuron. Nevertheless, in the course of developing the ability to emulate human brains, a wealth of neuroanatomical data would be collected, and functional models of cortical networks would surely be greatly improved. Such progress would seem to have a good chance of enabling neuromorphic AI before full-blown whole brain emulation.12 Historically, there are quite a few examples of AI techniques gleaned from neuroscience or biology. (For example: the McCulloch-Pitts neuron, perceptrons, and other artificial neurons and neural networks, inspired by neuroanatomical work; reinforcement learning, inspired by behaviorist psychology; genetic algorithms, inspired by evolution theory; subsumption architectures and perceptual hierarchies, inspired by cognitive science theories about motor planning and sensory perception; artificial immune systems, inspired by theoretical immunology; swarm intelligence, inspired by the ecology of insect colonies and other self-organizing systems; and reactive and behavior-based control in robotics, inspired by the study of animal locomotion.) Perhaps more significantly, there are plenty of important AI-relevant questions that could potentially be answered through further study of the brain. (For example: How does the brain store structured representations in working memory and long-term memory? How is the binding problem solved? What is the neural code? How are concepts represented? Is there some standard unit of cortical processing machinery, such as the cortical column, and if so how is it wired and how does its functionality depend on the wiring? How can such columns be linked up, and how can they learn?)

We will shortly have more to say about the relative danger of whole brain emulation, neuromorphic AI, and synthetic AI, but we can already flag another important technology coupling: that between whole brain emulation and AI. Even if a push toward whole brain emulation actually resulted in whole brain emulation (as opposed to neuromorphic AI), and even if the arrival of whole brain emulation could be safely handled, a further risk would still remain: the risk associated with a second transition, a transition from whole brain emulation to AI, which is an ultimately more powerful form of machine intelligence.

There are many other technology couplings, which could be considered in a more comprehensive analysis. For instance, a push toward whole brain emulation would boost neuroscience progress more generally.13 That might produce various effects, such as faster progress toward lie detection, neuropsychological manipulation techniques, cognitive enhancement, and assorted medical advances. Likewise, a push toward cognitive enhancement might (depending on the specific path pursued) create spillovers such as faster development of genetic selection and genetic engineering methods not only for enhancing cognition but for modifying other traits as well.

Second-guessing

We encounter another layer of strategic complexity if we take into account that there is no perfectly benevolent, rational, and unified world controller who simply implements what has been discovered to be the best option. Any abstract point about “what should be done” must be embodied in the form of a concrete message, which is entered into the arena of rhetorical and political reality. There it will be ignored, misunderstood, distorted, or appropriated for various conflicting purposes; it will bounce around like a pinball, causing actions and reactions, ushering in a cascade of consequences, the upshot of which need bear no straightforward relationship to the intentions of the original sender.

A sophisticated operator might try to anticipate these kinds of effect. Consider, for example, the following argument template for proceeding with research to develop a dangerous technology X. (One argument fitting this template can be found in the writings of Eric Drexler. In Drexler’s case, X = molecular nanotechnology.14)

1 The risks of X are great.

2 Reducing these risks will require a period of serious preparation.

3 Serious preparation will begin only once the prospect of X is taken seriously by broad sectors of society.

4 Broad sectors of society will take the prospect of X seriously only once a large research effort to develop X is underway.

5 The earlier a serious research effort is initiated, the longer it will take to deliver X (because it starts from a lower level of pre-existing enabling technologies).

6 Therefore, the earlier a serious research effort is initiated, the longer the period during which serious preparation will be taking place, and the greater the reduction of the risks.

7 Therefore, a serious research effort toward X should be initiated immediately.

What initially looks like a reason for going slow or stopping—the risks of X being great—ends up, on this line of thinking, as a reason for the opposite conclusion.

A related type of argument is that we ought—rather callously—to welcome small and medium-scale catastrophes on grounds that they make us aware of our vulnerabilities and spur us into taking precautions that reduce the probability of an existential catastrophe. The idea is that a small or medium-scale catastrophe acts like an inoculation, challenging civilization with a relatively survivable form of a threat and stimulating an immune response that readies the world to deal with the existential variety of the threat.15

These “shock’em-into-reacting” arguments advocate letting something bad happen in the hope that it will galvanize a public reaction. We mention them here not to endorse them, but as a way to introduce the idea of (what we will term) “second-guessing arguments.” Such arguments maintain that by treating others as irrational and playing to their biases and misconceptions it is possible to elicit a response from them that is more competent than if a case had been presented honestly and forthrightly to their rational faculties.

It may seem unfeasibly difficult to use the kind of stratagems recommended by second-guessing arguments to achieve long-term global goals. How could anybody predict the final course of a message after it has been jolted hither and thither in the pinball machine of public discourse? Doing so would seem to require predicting the rhetorical effects on myriad constituents with varied idiosyncrasies and fluctuating levels of influence over long periods of time during which the system may be perturbed by unanticipated events from the outside while its topology is also undergoing a continuous endogenous reorganization: surely an impossible task!16 However, it may not be necessary to make detailed predictions about the system’s entire future trajectory in order to identify an intervention that can be reasonably expected to increase the chances of a certain long-term outcome. One might, for example, consider only the relatively near-term and predictable effects in a detailed way, selecting an action that does well in regard to those, while modeling the system’s behavior beyond the predictability horizon as a random walk.

There may, however, be a moral case for de-emphasizing or refraining from second-guessing moves. Trying to outwit one another looks like a zero-sum game—or negative-sum, when one considers the time and energy that would be dissipated by the practice as well as the likelihood that it would make it generally harder for anybody to discover what others truly think and to be trusted when expressing their own opinions.17 A full-throttled deployment of the practices of strategic communication would kill candor and leave truth bereft to fend for herself in the backstabbing night of political bogeys.

Pathways and enablers

Should we celebrate advances in computer hardware? What about advances on the path toward whole brain emulation? We will look at these two questions in turn.

Effects of hardware progress

Faster computers make it easier to create machine intelligence. One effect of accelerating progress in hardware, therefore, is to hasten the arrival of machine intelligence. As discussed earlier, this is probably a bad thing from the impersonal perspective, since it reduces the amount of time available for solving the control problem and for humanity to reach a more mature stage of civilization. The case is not a slam dunk, though. Since superintelligence would eliminate many other existential risks, there could be reason to prefer earlier development if the level of these other existential risks were very high.18

Hastening or delaying the onset of the intelligence explosion is not the only channel through which the rate of hardware progress can affect existential risk. Another channel is that hardware can to some extent substitute for software; thus, better hardware reduces the minimum skill required to code a seed AI. Fast computers might also encourage the use of approaches that rely more heavily on brute-force techniques (such as genetic algorithms and other generate-evaluate-discard methods) and less on techniques that require deep understanding to use. If brute-force techniques lend themselves to more anarchic or imprecise system designs, where the control problem is harder to solve than in more precisely engineered and theoretically controlled systems, this would be another way in which faster computers would increase the existential risk.

Another consideration is that rapid hardware progress increases the likelihood of a fast takeoff. The more rapidly the state of the art advances in the semiconductor industry, the fewer the person-hours of programmers’ time spent exploiting the capabilities of computers at any given performance level. This means that an intelligence explosion is less likely to be initiated at the lowest level of hardware performance at which it is feasible. An intelligence explosion is thus more likely to be initiated when hardware has advanced significantly beyond the minimum level at which the eventually successful programming approach could first have succeeded. There is then a hardware overhang when the takeoff eventually does occur. As we saw in Chapter 4, hardware overhang is one of the main factors that reduce recalcitrance during the takeoff. Rapid hardware progress, therefore, will tend to make the transition to superintelligence faster and more explosive.

A faster takeoff via a hardware overhang can affect the risks of the transition in several ways. The most obvious is that a faster takeoff offers less opportunity to respond and make adjustments whilst the transition is in progress, which would tend to increase risk. A related consideration is that a hardware overhang would reduce the chances that a dangerously self-improving seed AI could be contained by limiting its ability to colonize sufficient hardware: the faster each processor is, the fewer processors would be needed for the AI to quickly bootstrap itself to superintelligence. Yet another effect of a hardware overhang is to level the playing field between big and small projects by reducing the importance of one of the advantages of larger projects—the ability to afford more powerful computers. This effect, too, might increase existential risk, if larger projects are more likely to solve the control problem and to be pursuing morally acceptable objectives.19

There are also advantages to a faster takeoff. A faster takeoff would increase the likelihood that a singleton will form. If establishing a singleton is sufficiently important for solving the post-transition coordination problems, it might be worth accepting a greater risk during the intelligence explosion in order to mitigate the risk of catastrophic coordination failures in its aftermath.

Developments in computing can affect the outcome of a machine intelligence revolution not only by playing a direct role in the construction of machine intelligence but also by having diffuse effects on society that indirectly help shape the initial conditions of the intelligence explosion. The Internet, which required hardware to be good enough to enable personal computers to be mass produced at low cost, is now influencing human activity in many areas, including work in artificial intelligence and research on the control problem. (This book might not have been written, and you might not have found it, without the Internet.) However, hardware is already good enough for a great many applications that could facilitate human communication and deliberation, and it is not clear that the pace of progress in these areas is strongly bottlenecked by the rate of hardware improvement.20

On balance, it appears that faster progress in computing hardware is undesirable from the impersonal evaluative standpoint. This tentative conclusion could be overturned, for example if the threats from other existential risks or from post-transition coordination failures turn out to be extremely large. In any case, it seems difficult to have much leverage on the rate of hardware advancement. Our efforts to improve the initial conditions for the intelligence explosion should therefore probably focus on other parameters.

Note that even when we cannot see how to influence some parameter, it can be useful to determine its “sign” (i.e. whether an increase or decrease in that parameter would be desirable) as a preliminary step in mapping the strategic lay of the land. We might later discover a new leverage point that does enable us to manipulate the parameter more easily. Or we might discover that the parameter’s sign correlates with the sign of some other more manipulable parameter, so that our initial analysis helps us decide what to do with this other parameter.

Should whole brain emulation research be promoted?

The harder it seems to solve the control problem for artificial intelligence, the more tempting it is to promote the whole brain emulation path as a less risky alternative. There are several issues, however, that must be analyzed before one can arrive at a well-considered judgment.21

First, there is the issue of technology coupling, already discussed earlier. We pointed out that an effort to develop whole brain emulation could result in neuromorphic AI instead, a form of machine intelligence that may be especially unsafe.

But let us assume, for the sake of argument, that we actually achieve whole brain emulation (WBE). Would this be safer than AI? This, itself, is a complicated issue. There are at least three putative advantages of WBE: (i) that its performance characteristics would be better understood than those of AI; (ii) that it would inherit human motives; and (iii) that it would result in a slower takeoff. Let us very briefly reflect on each.

i That it should be easier to understand the intellectual performance characteristics of an emulation than of an AI sounds plausible. We have abundant experience with the strengths and weaknesses of human intelligence but no experience with human-level artificial intelligence. However, to understand what a snapshot of a digitized human intellect can and cannot do is not the same as to understand how such an intellect will respond to modifications aimed at enhancing its performance. An artificial intellect, by contrast, might be carefully designed to be understandable, in both its static and dynamic dispositions. So while whole brain emulation may be more predictable in its intellectual performance than a generic AI at a comparable stage of development, it is unclear whether whole brain emulation would be dynamically more predictable than an AI engineered by competent safety-conscious programmers.

ii As for an emulation inheriting the motivations of its human template, this is far from guaranteed. Capturing human evaluative dispositions might require a very high-fidelity emulation. Even if some individual’s motivations were perfectly captured, it is unclear how much safety would be purchased. Humans can be untrustworthy, selfish, and cruel. While templates would hopefully be selected for exceptional virtue, it may be hard to foretell how someone will act when transplanted into radically alien circumstances, superhumanly enhanced in intelligence, and tempted with an opportunity for world domination. It is true that emulations would at least be more likely to have human-like motivations (as opposed to valuing only paperclips or discovering digits of pi). Depending on one’s views on human nature, this might or might not be reassuring.22

iii It is not clear why whole brain emulation should result in a slower takeoff than artificial intelligence. Perhaps with whole brain emulation one should expect less hardware overhang, since whole brain emulation is less computationally efficient than artificial intelligence can be. Perhaps, also, an AI system could more easily absorb all available computing power into one giant integrated intellect, whereas whole brain emulation would forgo quality superintelligence and pull ahead of humanity only in speed and size of population. If whole brain emulation does lead to a slower takeoff, this could have benefits in terms of alleviating the control problem. A slower takeoff would also make a multipolar outcome more likely. But whether a multipolar outcome is desirable is very doubtful.

There is another important complication with the general idea that getting whole brain emulation first is safer: the need to cope with a second transition. Even if the first form of human-level machine intelligence is emulation-based, it would still remain feasible to develop artificial intelligence. AI in its mature form has important advantages over WBE, making AI the ultimately more powerful technology.23 While mature AI would render WBE obsolete (except for the special purpose of preserving individual human minds), the reverse does not hold.

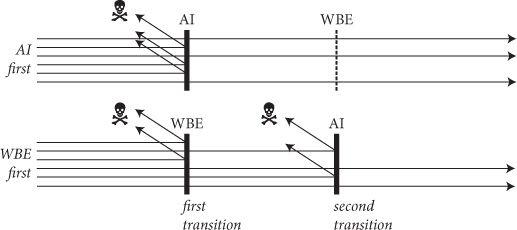

What this means is that if AI is developed first, there might be a single wave of the intelligence explosion. But if WBE is developed first, there may be two waves: first, the arrival of WBE; and later, the arrival of AI. The total existential risk along the WBE-first path is the sum of the risk in the first transition and the risk in the second transition (conditional on having made it through the first); see Figure 13.24
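One way to read this sum, offered here only as a sketch, is that the second-transition risk counts only in those worlds that survive the first transition. The function name and all probabilities below are hypothetical placeholders chosen for illustration; they are not estimates drawn from the text.

```python
def wbe_first_total_risk(risk_wbe: float, risk_ai_after_wbe: float) -> float:
    """Total existential risk along a WBE-first path.

    risk_wbe: probability of catastrophe in the WBE transition.
    risk_ai_after_wbe: probability of catastrophe in the later AI
        transition, conditional on having survived the WBE transition.
    """
    for p in (risk_wbe, risk_ai_after_wbe):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    # The second risk is weighted by the chance of reaching that stage.
    return risk_wbe + (1.0 - risk_wbe) * risk_ai_after_wbe


# Hypothetical numbers, for illustration only.
risk_ai_first = 0.30
total_wbe_first = wbe_first_total_risk(risk_wbe=0.10, risk_ai_after_wbe=0.20)
print(f"AI-first: {risk_ai_first:.2f}  WBE-first: {total_wbe_first:.2f}")
```

On this reading, the WBE-first path comes out ahead only when the reduction in the conditional AI risk more than offsets the added risk of the WBE transition itself.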

How much safer would the AI transition be in a WBE world? One consideration is that the AI transition would be less explosive if it occurs after some form of machine intelligence has already been realized. Emulations, running at digital speeds and in numbers that might far exceed the biological human population, would reduce the cognitive differential, making it easier for emulations to control the AI. This consideration is not too weighty, since the gap between AI and WBE could still be wide. However, if the emulations were not just faster and more numerous but also somewhat qualitatively smarter than biological humans (or at least drawn from the top end of the human distribution) then the WBE-first scenario would have advantages paralleling those of human cognitive enhancement, which we discussed above.

Figure 13 Artificial intelligence or whole brain emulation first? In an AI-first scenario, there is one transition that creates an existential risk. In a WBE-first scenario, there are two risky transitions, first the development of WBE and then the development of AI. The total existential risk along the WBE-first scenario is the sum of these. However, the risk of an AI transition might be lower if it occurs in a world where WBE has already been successfully introduced.

Another consideration is that the transition to WBE would extend the lead of the frontrunner. Consider a scenario in which the frontrunner has a six-month lead over the closest follower in developing whole brain emulation technology. Suppose that the first emulations to be created are cooperative, safety-focused, and patient. If they run on fast hardware, these emulations could spend subjective eons pondering how to create safe AI. For example, if they run at a speedup of 100,000× and are able to work on the control problem undisturbed for six months of sidereal time, they could hammer away at the control problem for fifty millennia before facing competition from other emulations. Given sufficient hardware, they could hasten their progress by fanning out myriad copies to work independently on subproblems. If the frontrunner uses its six-month lead to form a singleton, it could buy its emulation AI-development team an unlimited amount of time to work on the control problem.25
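The fifty-millennia figure follows from multiplying the speedup by the length of the lead. A minimal check of that arithmetic, with a hypothetical helper name, might look like this:

```python
def subjective_years(speedup: float, wall_clock_years: float) -> float:
    """Subjective working time available to minds running at `speedup`."""
    return speedup * wall_clock_years


# Figures from the text: a 100,000x speedup sustained for six months.
years = subjective_years(speedup=100_000, wall_clock_years=0.5)
print(f"{years:,.0f} subjective years = {years / 1000:.0f} millennia")
```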

On balance, it looks like the risk of the AI transition would be reduced if WBE comes before AI. However, when we combine the residual risk in the AI transition with the risk of an antecedent WBE transition, it becomes very unclear how the total existential risk along the WBE-first path stacks up against the risk along the AI-first path. Only if one is quite pessimistic about biological humanity’s ability to manage an AI transition—after taking into account that human nature or civilization might have improved by the time we confront this challenge—should the WBE-first path seem attractive.

To figure out whether whole brain emulation technology should be promoted, there are some further important points to place in the balance. Most significantly, there is the technology coupling mentioned earlier: a push toward WBE could instead produce neuromorphic AI. This is a reason against pushing for WBE.26 No doubt, there are some synthetic AI designs that are less safe than some neuromorphic designs. In expectation, however, it seems that neuromorphic designs are less safe. One ground for this is that imitation can substitute for understanding. To build something from the ground up one must usually have a reasonably good understanding of how the system will work. Such understanding may not be necessary to merely copy features of an existing system. Whole brain emulation relies on wholesale copying of biology, which may not require a comprehensive computational systems-level understanding of cognition (though a large amount of component-level understanding would undoubtedly be needed). Neuromorphic AI may be like whole brain emulation in this regard: it would be achieved by cobbling together pieces plagiarized from biology without the engineers necessarily having a deep mathematical understanding of how the system works. But neuromorphic AI would be unlike whole brain emulation in another regard: it would not have human motivations by default.27 This consideration argues against pursuing the whole brain emulation approach to the extent that it would likely produce neuromorphic AI.

A second point to put in the balance is that WBE is more likely to give us advance notice of its arrival. With AI it is always possible that somebody will make an unexpected conceptual breakthrough. WBE, by contrast, will require many laborious precursor steps—high-throughput scanning facilities, image processing software, detailed neural modeling work. We can therefore be confident that WBE is not imminent (not less than, say, fifteen or twenty years away). This means that efforts to accelerate WBE will make a difference mainly in scenarios in which machine intelligence is developed comparatively late. This could make WBE investments attractive to somebody who wants the intelligence explosion to preempt other existential risks but is wary of supporting AI for fear of triggering an intelligence explosion prematurely, before the control problem has been solved. However, the uncertainty over the relevant timescales is probably currently too large to enable this consideration to carry much weight.28

A strategy of promoting WBE is thus most attractive if (a) one is very pessimistic about humans solving the control problem for AI, (b) one is not too worried about neuromorphic AI, multipolar outcomes, or the risks of a second transition, (c) one thinks that the default timing of WBE and AI is close, and (d) one prefers superintelligence to be developed neither very late nor very early.

The person-affecting perspective favors speed

I fear the blog commenter “washbash” may speak for many when he or she writes:

I instinctively think go faster. Not because I think this is better for the world. Why should I care about the world when I am dead and gone? I want it to go fast, damn it! This increases the chance I have of experiencing a more technologically advanced future.29

From the person-affecting standpoint, we have greater reason to rush forward with all manner of radical technologies that could pose existential risks. This is because the default outcome is that almost everyone who now exists is dead within a century.

The case for rushing is especially strong with regard to technologies that could extend our lives and thereby increase the expected fraction of the currently existing population that may still be around for the intelligence explosion. If the machine intelligence revolution goes well, the resulting superintelligence could almost certainly devise means to indefinitely prolong the lives of the then still-existing humans, not only keeping them alive but restoring them to health and youthful vigor, and enhancing their capacities well beyond what we currently think of as the human range; or helping them shuffle off their mortal coils altogether by uploading their minds to a digital substrate and endowing their liberated spirits with exquisitely good-feeling virtual embodiments. With regard to technologies that do not promise to save lives, the case for rushing is weaker, though perhaps still sufficiently supported by the hope of raised standards of living.30

The same line of reasoning makes the person-affecting perspective favor many risky technological innovations that promise to hasten the onset of the intelligence explosion, even when those innovations are disfavored in the impersonal perspective. Such innovations could shorten the wolf hours during which we individually must hang on to our perch if we are to live to see the daybreak of the posthuman age. From the person-affecting standpoint, faster hardware progress thus seems desirable, as does faster progress toward WBE. Any adverse effect on existential risk is probably outweighed by the personal benefit of an increased chance of the intelligence explosion happening in the lifetime of currently existing people.31

Collaboration

One important parameter is the degree to which the world will manage to coordinate and collaborate in the development of machine intelligence. Collaboration would bring many benefits. Let us take a look at how this parameter might affect the outcome and what levers we might have for increasing the extent and intensity of collaboration.

The race dynamic and its perils

A race dynamic exists when one project fears being overtaken by another. This does not require the actual existence of multiple projects. A situation with only one project could exhibit a race dynamic if that project is unaware of its lack of competitors. The Allies would probably not have developed the atomic bomb as quickly as they did had they not believed (erroneously) that the Germans might be close to the same goal.

The severity of a race dynamic (that is, the extent to which competitors prioritize speed over safety) depends on several factors, such as the closeness of the race, the relative importance of capability and luck, the number of competitors, whether competing teams are pursuing different approaches, and the degree to which projects share the same aims. Competitors’ beliefs about these factors are also relevant. (See Box 13.)

In the development of machine superintelligence, it seems likely that there will be at least a mild race dynamic, and it is possible that there will be a severe race dynamic. The race dynamic has important consequences for how we should think about the strategic challenge posed by the possibility of an intelligence explosion.

The race dynamic could spur projects to move faster toward superintelligence while reducing investment in solving the control problem. Additional detrimental effects of the race dynamic are also possible, such as direct hostilities between competitors. Suppose that two nations are racing to develop the first superintelligence, and that one of them is seen to be pulling ahead. In a winner-takes-all situation, a lagging project might be tempted to launch a desperate strike against its rival rather than passively await defeat. Anticipating this possibility, the frontrunner might be tempted to strike preemptively. If the antagonists are powerful states, the clash could be bloody.34 (A “surgical strike” against the rival’s AI project might risk triggering a larger confrontation and might in any case not be feasible if the host country has taken precautions.35)

Box 13 A risk-race to the bottom

Consider a hypothetical AI arms race in which several teams compete to develop superintelligence.32 Each team decides how much to invest in safety—knowing that resources spent on developing safety precautions are resources not spent on developing the AI. Absent a deal between all the competitors (which might be stymied by bargaining or enforcement difficulties), there might then be a risk-race to the bottom, driving each team to take only a minimum of precautions.

One can model each team’s performance as a function of its capability (measuring its raw ability and luck) and a penalty term corresponding to the cost of its safety precautions. The team with the highest performance builds the first AI. The riskiness of that AI is determined by how much its creators invested in safety. In the worst-case scenario, all teams have equal levels of capability. The winner is then determined exclusively by investment in safety: the team that took the fewest safety precautions wins. The Nash equilibrium for this game is for every team to spend nothing on safety. In the real world, such a situation might arise via a risk ratchet: some team, fearful of falling behind, increments its risk-taking to catch up with its competitors—who respond in kind, until the maximum level of risk is reached.
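The ratchet in the equal-capability case can be illustrated with a toy best-response loop. This is only a sketch under assumptions that are not part of the text: safety levels sit on an integer grid (0 is no precautions, 10 is maximal precautions), the team with strictly the lowest safety level wins, and each team cares only about winning. The function name `risk_ratchet` is hypothetical, and the sketch is not the model behind Figure 14.

```python
def risk_ratchet(initial_safety: list[int]) -> list[int]:
    """Let equal-capability teams undercut one another until nobody moves.

    Safety levels are integers on a grid from 0 (none) upward. A team that
    is not strictly ahead (that is, not strictly below every rival's safety
    level) drops one grid step below its least cautious rival.
    """
    if len(initial_safety) < 2:
        raise ValueError("a race needs at least two teams")
    safety = list(initial_safety)
    changed = True
    while changed:
        changed = False
        for i in range(len(safety)):
            lowest_rival = min(s for j, s in enumerate(safety) if j != i)
            if safety[i] >= lowest_rival and safety[i] > 0:
                # Undercut the least cautious rival by one step.
                safety[i] = max(0, lowest_rival - 1)
                changed = True
    return safety


final = risk_ratchet([10, 8, 9])
print(final)
```

Every undercut strictly lowers some team's safety level, so the loop terminates, and it can only stop once no team has any precautions left to cut.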

Capability versus risk

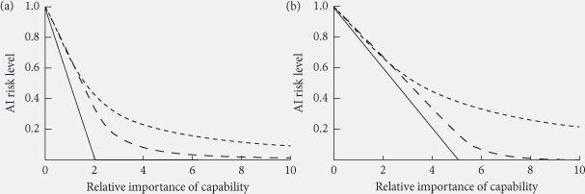

The situation changes when there are variations in capability. As variations in capability become more important relative to the cost of safety precautions, the risk ratchet weakens: there is less incentive to incur an extra bit of risk if doing so is unlikely to change the order of the race. This is illustrated under various scenarios in Figure 14, which plots how the riskiness of the AI depends on the importance of capability. Safety investment ranges from 1 (resulting in perfectly safe AI) to 0 (completely unsafe AI). The x-axis represents the relative importance of capability versus safety investment in determining the speed of a team’s progress toward AI. (At 0.5, the safety investment level is twice as important as capability; at 1, the two are equal; at 2, capability is twice as important as safety level; and so forth.) The y-axis represents the level of AI risk (the expected fraction of their maximum utility that the winner of the race gets).

Figure 14 Risk levels in AI technology races. Levels of risk of dangerous AI in a simple model of a technology race involving either (a) two teams or (b) five teams, plotted against the relative importance of capability (as opposed to investment in safety) in determining which project wins the race. The graphs show three information-level scenarios: no capability information (straight), private capability information (dashed), and full capability information (dotted).

We see that, under all scenarios, the dangerousness of the resultant AI is maximal when capability plays no role, gradually decreasing as capability grows in importance.

Compatible goals

Another way of reducing the risk is by giving teams more of a stake in each other’s success. If competitors are convinced that coming second means the total loss of everything they care about, they will take whatever risk necessary to bypass their rivals. Conversely, teams will invest more in safety if less depends on winning the race. This suggests that we should encourage various forms of cross-investment.

The number of competitors

The greater the number of competing teams, the more dangerous the race becomes: each team, having less chance of coming first, is more willing to throw caution to the wind. This can be seen by contrasting Figure 14a (two teams) with Figure 14b (five teams). In every scenario, more competitors means more risk. Risk would be reduced if teams coalesce into a smaller number of competing coalitions.

The curse of too much information

Is it good if teams know about their positions in the race (knowing their capability scores, for instance)? Here, opposing factors are at play. It is desirable that a leader knows it is leading (so that it knows it has some margin for additional safety precautions). Yet it is undesirable that a laggard knows it has fallen behind (since this would confirm that it must cut back on safety to have any hope of catching up). While intuitively it may seem this trade-off could go either way, the models are unequivocal: information is (in expectation) bad.33 Figures 14a and 14b each plot three scenarios: the straight lines correspond to situations in which no team knows any of the capability scores, its own included. The dashed lines show situations where each team knows its own capability only. (In those situations, a team takes extra risk only if its capability is low.) And the dotted lines show what happens when all teams know each other’s capabilities. (They take extra risks if their capability scores are close to one another.) With each increase in information level, the race dynamic becomes worse.

Scenarios in which the rival developers are not states but smaller entities, such as corporate labs or academic teams, would probably feature much less direct destruction from conflict. Yet the overall consequences of competition may be almost as bad. This is because the main part of the expected harm from competition stems not from the smashup of battle but from the downgrade of precaution. A race dynamic would, as we saw, reduce investment in safety; and conflict, even if nonviolent, would tend to scotch opportunities for collaboration, since projects would be less likely to share ideas for solving the control problem in a climate of hostility and mistrust.36

On the benefits of collaboration

Collaboration thus offers many benefits. It reduces the haste in developing machine intelligence. It allows for greater investment in safety. It avoids violent conflicts. And it facilitates the sharing of ideas about how to solve the control problem. To these benefits we can add another: collaboration would tend to produce outcomes in which the fruits of a successfully controlled intelligence explosion get distributed more equitably.

That broader collaboration should result in wider sharing of gains is not axiomatic. In principle, a small project run by an altruist could lead to an outcome where the benefits are shared evenly or equitably among all morally considerable beings. Nevertheless, there are several reasons to suppose that broader collaborations, involving a greater number of sponsors, are (in expectation) distributionally superior. One such reason is that sponsors presumably prefer an outcome in which they themselves get (at least) their fair share. A broad collaboration then means that relatively many individuals get at least their fair share, assuming the project is successful. Another reason is that a broad collaboration also seems likelier to benefit people outside the collaboration. A broader collaboration contains more members, so more outsiders would have personal ties to somebody on the inside looking out for their interests. A broader collaboration is also more likely to include at least some altruist who wants to benefit everyone. Furthermore, a broader collaboration is more likely to operate under public oversight, which might reduce the risk of the entire pie being captured by a clique of programmers or private investors.37 Note also that the larger the successful collaboration is, the lower the costs to it of extending the benefits to all outsiders. (For instance, if 90% of all people were already inside the collaboration, it would cost them no more than 10% of their holdings to bring all outsiders up to their own level.)
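The arithmetic behind the parenthetical example can be sketched in a few lines. This is a minimal illustration, not a model from the text: the function name and the `outsider_relative_wealth` parameter are hypothetical, and it assumes that insiders all hold the same amount and that outsiders all hold the same (smaller or equal) amount.

```python
def insider_cost_fraction(inside_share: float,
                          outsider_relative_wealth: float = 0.0) -> float:
    """Fraction of holdings each insider gives up so that everyone ends equal.

    inside_share: fraction of the population inside the collaboration.
    outsider_relative_wealth: an outsider's holdings as a fraction of an
        insider's holdings before redistribution (0.0 means outsiders
        hold nothing, which is the worst case for insiders).
    Both parameters are illustrative assumptions, not quantities from the text.
    """
    if not 0.0 < inside_share <= 1.0:
        raise ValueError("inside_share must be in (0, 1]")
    if not 0.0 <= outsider_relative_wealth <= 1.0:
        raise ValueError("outsider_relative_wealth must be in [0, 1]")
    # Normalize an insider's initial holdings to 1. After equalization,
    # everyone holds the population average:
    #   inside_share * 1 + (1 - inside_share) * outsider_relative_wealth
    # The insider's loss is 1 minus that average, which simplifies to:
    return (1.0 - inside_share) * (1.0 - outsider_relative_wealth)


# Worst case from the text: 90% inside, outsiders start with nothing.
print(insider_cost_fraction(0.9))
# If outsiders already hold half of what insiders hold, the cost is lower.
print(insider_cost_fraction(0.9, 0.5))
```

Under these assumptions the cost is largest when outsiders start with nothing, which is why the text can say "no more than 10%".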

It is thus plausible that broader collaborations would tend to lead to a wider distribution of the gains (though some projects with few sponsors might also have distributionally excellent aims). But why is a wide distribution of gains desirable?

There are both moral and prudential reasons for favoring outcomes in which everybody gets a share of the bounty. We will not say much about the moral case, except to note that it need not rest on any egalitarian principle. The case might be made, for example, on grounds of fairness. A project that creates machine superintelligence imposes a global risk externality. Everybody on the planet is placed in jeopardy, including those who do not consent to having their own lives and those of their family imperiled in this way. Since everybody shares the risk, it would seem to be a minimal requirement of fairness that everybody also gets a share of the upside.

The fact that the total (expected) amount of good seems greater in collaboration scenarios is another important reason such scenarios are morally preferable.

The prudential case for favoring a wide distribution of gains is two-pronged. One prong is that wide distribution should promote collaboration, thereby mitigating the negative consequences of the race dynamic. There is less incentive to fight over who gets to build the first superintelligence if everybody stands to benefit equally from any project’s success. The sponsors of a particular project might also benefit from credibly signaling their commitment to distributing the spoils universally, a certifiably altruistic project being likely to attract more supporters and fewer enemies.38

The other prong of the prudential case for favoring a wide distribution of gains has to do with whether agents are risk-averse or have utility functions that are sublinear in resources. The central fact here is the enormousness of the potential resource pie. Assuming the observable universe is as uninhabited as it looks, it contains more than one vacant galaxy for each human being alive. Most people would much rather have certain access to one galaxy’s worth of resources than a lottery ticket offering a one-in-a-billion chance of owning a billion galaxies.39 Given the astronomical size of humanity’s cosmic endowment, it seems that self-interest should generally favor deals that would guarantee each person a share, even if each share corresponded to a small fraction of the total. The important thing, when such an extravagant bonanza is in the offing, is to not be left out in the cold.

This argument from the enormousness of the resource pie presupposes that preferences are resource-satiable.40 That supposition does not necessarily hold. For instance, several prominent ethical theories—including especially aggregative consequentialist theories—correspond to utility functions that are risk-neutral and linear in resources. A billion galaxies could be used to create a billion times more happy lives than a single galaxy. They are thus, to a utilitarian, worth a billion times as much.41 Ordinary selfish human preference functions, however, appear to be relatively resource-satiable.
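The contrast between resource-linear and resource-satiable preferences can be made concrete with a small expected-utility calculation. The sketch below is illustrative only: the logarithmic utility function is an assumed stand-in for "resource-satiable" preferences, chosen for simplicity, and the names are hypothetical.

```python
import math

JACKPOT_GALAXIES = 1e9   # prize in the lottery described in the text
WIN_PROBABILITY = 1e-9   # one-in-a-billion chance of winning


def expected_utility(utility, outcomes):
    """Probability-weighted average utility over (probability, galaxies) pairs."""
    return sum(p * utility(x) for p, x in outcomes)


def linear(x):
    """Risk-neutral utility, linear in resources (e.g. aggregative views)."""
    return x


def satiable(x):
    """Assumed concave utility: log(1 + x), so extra galaxies matter less and less."""
    return math.log1p(x)


certain_galaxy = [(1.0, 1.0)]
lottery = [(WIN_PROBABILITY, JACKPOT_GALAXIES), (1.0 - WIN_PROBABILITY, 0.0)]

linear_certain = expected_utility(linear, certain_galaxy)
linear_lottery = expected_utility(linear, lottery)
satiable_certain = expected_utility(satiable, certain_galaxy)
satiable_lottery = expected_utility(satiable, lottery)

# A linear agent is indifferent: both options are worth one galaxy in expectation.
print(math.isclose(linear_certain, linear_lottery))
# A satiable agent strongly prefers the guaranteed galaxy.
print(satiable_certain > satiable_lottery)
```

Any strictly concave utility function would give the same ordering; the logarithm is just one convenient choice.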

This last statement must be flanked by two important qualifications. The first is that many people care about rank. If multiple agents each want to top the Forbes rich list, then no resource pie is large enough to give everybody full satisfaction.

The second qualification is that the post-transition technology base would enable material resources to be converted into an unprecedented range of products, including some goods that are not currently available at any price even though they are highly valued by many humans. A billionaire does not live a thousand times longer than a millionaire. In the era of digital minds, however, the billionaire could afford a thousandfold more computing power and could thus enjoy a thousandfold longer subjective lifespan. Mental capacity, likewise, could be for sale. In such circumstances, with economic capital convertible into vital goods at a constant rate even for great levels of wealth, unbounded greed would make more sense than it does in today’s world where the affluent (those among them lacking a philanthropic heart) are reduced to spending their riches on airplanes, boats, art collections, or a fourth and a fifth residence.

Does this mean that an egoist should be risk-neutral with respect to his or her post-transition resource endowment? Not quite. Physical resources may not be convertible into lifespan or mental performance at arbitrary scales. If a life must be lived sequentially, so that observer moments can remember earlier events and be affected by prior choices, then the life of a digital mind cannot be extended arbitrarily without utilizing an increasing number of sequential computational operations. But physics limits the extent to which resources can be transformed into sequential computations.42 The limits on sequential computation may also constrain some aspects of cognitive performance to scale radically sublinearly beyond a relatively modest resource endowment. Furthermore, it is not obvious that an egoist would or should be risk-neutral even with regard to highly normatively relevant outcome metrics such as number of quality-adjusted subjective life years. If offered the choice between an extra 2,000 years of life for certain and a one-in-ten chance of an extra 30,000 years of life, I think most people would select the former (even under the stipulation that each life year would be of equal quality).43
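The life-years example can be checked with a short calculation. The gamble has the higher expected value, so preferring the certain option implies risk aversion over life years. The square-root utility below is an assumption made purely for illustration, as is the stipulation that losing the gamble yields no extra years and that zero extra years has zero utility.

```latex
\begin{align*}
\mathbb{E}[\text{gamble}] &= 0.1 \times 30{,}000 = 3{,}000 \text{ years} \;>\; 2{,}000 \text{ years}, \\
\text{yet with } u(t) = \sqrt{t}: \quad
u(2{,}000) &\approx 44.7 \;>\; 0.1 \times u(30{,}000) \approx 17.3 .
\end{align*}
```

A risk-neutral agent would take the gamble; an agent with a sufficiently concave utility over life years would not.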

In reality, the prudential case for favoring a wide distribution of gains is presumably subject-relative and situation-dependent. Yet, on the whole, people would be more likely to get (almost all of) what they want if a way is found to achieve a wide distribution—and this holds even before taking into account that a commitment to a wider distribution would tend to foster collaboration and thereby increase the chances of avoiding existential catastrophe. Favoring a broad distribution, therefore, appears to be not only morally mandated but also prudentially advisable.

There is a further set of consequences to collaboration that should be given at least some shrift: the possibility that pre-transition collaboration influences the level of post-transition collaboration. Assume humanity solves the control problem. (If the control problem is not solved, it may scarcely matter how much collaboration there is post transition.) There are two cases to consider. The first is that the intelligence explosion does not create a winner-takes-all dynamic (presumably because the takeoff is relatively slow). In this case it is plausible that if pre-transition collaboration has any systematic effect on post-transition collaboration, it has a positive effect, tending to promote subsequent collaboration. The original collaborative relationships may endure and continue beyond the transition; also, pre-transition collaboration may offer more opportunity for people to steer developments in desirable (and, presumably, more collaborative) post-transition directions.

The second case is that the nature of the intelligence explosion does encourage a winner-takes-all dynamic (presumably because the takeoff is relatively fast). In this case, if there is no extensive collaboration before the takeoff, a singleton is likely to emerge—a single project would undergo the transition alone, at some point obtaining a decisive strategic advantage combined with superintelligence. A singleton, by definition, is a highly collaborative social order.44 The absence of extensive collaboration pre-transition would thus lead to an extreme degree of collaboration post-transition. By contrast, a somewhat higher level of collaboration in the run-up to the intelligence explosion opens up a wider variety of possible outcomes. Collaborating projects could synchronize their ascent to ensure they transition in tandem without any of them getting a decisive strategic advantage. Or different sponsor groups might merge their efforts into a single project, while refusing to give that project a mandate to form a singleton. For example, one could imagine a consortium of nations forming a joint scientific project to develop machine superintelligence, yet not authorizing this project to evolve into anything like a supercharged United Nations, electing instead to maintain the factious world order that existed before.

Particularly in the case of a fast takeoff, therefore, the possibility exists that greater pre-transition collaboration would result in less post-transition collaboration. However, to the extent that collaborating entities are able to shape the outcome, they may allow the emergence or continuation of non-collaboration only if they foresee that no catastrophic consequences would follow from post-transition factiousness. Scenarios in which pre-transition collaboration leads to reduced post-transition collaboration may therefore mostly be ones in which reduced post-transition collaboration is innocuous.

In general, greater post-transition collaboration appears desirable. It would reduce the risk of dystopian dynamics in which economic competition and a rapidly expanding population lead to a Malthusian condition, or in which evolutionary selection erodes human values and selects for non-eudaemonic forms, or in which rival powers suffer other coordination failures such as wars and technology races. The last of these issues, the prospect of technology races, may be particularly problematic if the transition is to an intermediary form of machine intelligence (whole brain emulation) since it would create a new race dynamic that would harm the chances of the control problem being solved for the subsequent second transition to a more advanced form of machine intelligence (artificial intelligence).

We described earlier how collaboration can reduce conflict in the run-up to the intelligence explosion, increasing the chances that the control problem will be solved, and improve both the moral legitimacy and the prudential desirability of the resulting resource allocation. To these benefits of collaboration it may thus be possible to add one more: that broader collaboration pre-transition could help with important coordination problems in the post-transition era.

Working together

Collaboration can take different forms depending on the scale of the collaborating entities. At a small scale, individual AI teams who believe themselves to be in competition with one another could choose to pool their efforts.45 Corporations could merge or cross-invest. At a larger scale, states could join in a big international project. There are precedents to large-scale international collaboration in science and technology (such as CERN, the Human Genome Project, and the International Space Station), but an international project to develop safe superintelligence would pose a different order of challenge because of the security implications of the work. It would have to be constituted not as an open academic collaboration but as an extremely tightly controlled joint enterprise. Perhaps the scientists involved would have to be physically isolated and prevented from communicating with the rest of the world for the duration of the project, except through a single carefully vetted communication channel. The required level of security might be nearly unattainable at present, but advances in lie detection and surveillance technology could make it feasible later this century. It is also worth bearing in mind that broad collaboration does not necessarily mean that large numbers of researchers would be involved in the project; it simply means that many people would have a say in the project’s aims. In principle, a project could involve a maximally broad collaboration comprising all of humanity as sponsors (represented, let us say, by the General Assembly of the United Nations), yet employ only a single scientist to carry out the work.46