A World Without Ice - Henry N. Pollack (2009)

Chapter 6. HUMAN FOOTPRINTS

I will bless you … and multiply your descendants into countless thousands and millions, like the stars above you in the sky, and like the sands along the seashore.

—GENESIS 22:17

IPCC scientists in their 2007 Assessment Report concluded that “most of the observed increase in globally averaged temperature since the mid-20th century is very likely (90% probability) due to the observed increase in anthropogenic greenhouse gas concentrations.” In other words, according to the IPCC scientists, there are nine chances out of ten that we humans, through our burning of fossil fuels, have been the dominant factor in the warming of the last half century. Ninety percent certainty is an extraordinary statement of confidence in the conclusion—were you to go into a casino and be offered the opportunity to win at any game nine times out of ten, you would surely play with great confidence, and very likely leave with a bundle of cash.

But as certain as the scientists are about the role of humans in the recent climate change, the American public remains less persuaded. In a 2008 Gallup poll, only three out of five Americans believed that the climate was changing, let alone that humans had anything to do with it. The reasons why the American public has been slow to grasp the realities of climate change are many and complex, but certainly include the decades of disinformation and propaganda put out by the fossil fuel industry. Add to that eight years of the George W. Bush administration in Washington, which deliberately fostered additional doubts about climate change by exaggerating the scientific uncertainty and discouraging government climate scientists from speaking out about the causes and consequences of climate change. And there are a number of people simply distrustful of scientists because of the widespread scientific embrace of biological evolution that conflicts with their religious beliefs. So when scientists make pronouncements about Earth’s changing climate, these same people dismiss the climate science because they don’t trust scientists in general. They dismiss the message because of the messenger.

Certainly these industrial, governmental, and philosophical impediments have made it hard to persuade people that we humans have become big players in the climate system. However, other reasons also make it difficult for some people to recognize that the large human population has been driving Earth’s climate away from the environmental background in which human society developed and thrived over the past ten thousand years.

Some find it hard to grasp the very concept that the global average temperature of Earth has changed over the past century. Most of us are unaccustomed to thinking at global spatial scales and intergenerational time scales. Whatever setting we are born into is imprinted upon us as normal and unchanging, even if it has experienced something different from the worldwide average and may be in the middle of rapid social, economic, and environmental change. We are not born with global vision or a sense of history. Sensing change over a time interval longer than the characteristic human lifetime requires a well-honed historical awareness and memory, attributes that no one is born with, and which therefore must be acquired.

A second reason why some people have difficulty recognizing a human role in climate change is that in our daily lives we tend to focus on contemporary local concerns. Some of this narrow focus stems directly from our evolutionary history. Only a thousand generations ago, our ancestors’ chief occupation was the daily business of finding food in their immediate environment. Successful hunting of wild game and harvesting of natural fruits and grains were skills rewarded by natural selection. Humans did not have the need to know what the local climate would be like a century into the future, or whether there might be an intense drought developing halfway around the world due to an El Niño event in the Pacific Ocean. They were much more concerned with the necessities of the here and now, and had little time or inclination to ponder the abstract world.

Yet another reason why many people do not recognize their role in climate change is that their daily activities are separated from the subsequent effects those activities have on the climate, by both space and time. It is an abstraction to connect the simple act of increasing the setting of a thermostat in one’s home, or driving alone to work each day, to the reality that these activities slowly but steadily increase the absorption of infrared radiation in the atmosphere and warm the planet.

But there is an even more fundamental reason that impedes recognition of the human role in climate change. In the face of hurricanes, tornadoes, tsunamis, earthquakes, and volcanic eruptions, all natural phenomena that can kill thousands of people very quickly, people feel very insignificant and powerless compared to the forces of nature. And indeed, as individuals, we do have very little power. But what people do not appreciate is that, collectively, the almost seven billion people on Earth today, with millions of big machines, are staggeringly powerful and becoming more so every year. It is the sum of activities of billions of individuals—a collective human force far greater than Earth has previously experienced—that is indeed changing Earth’s climate.58 Robert F. Kennedy understood this collective power when, in a social context, he said, “Few will have the greatness to bend history itself, but each of us can work to change a small portion of events and in the total of all those acts will be written the history of this generation.”

In the remainder of this chapter, I guide you on a tour of our planet, and show you how completely we humans have taken control of Earth—its land, oceans, ecosystems, and, most certainly, its climate.

WHAT DO PEOPLE DO?

What on Earth have people been doing to push the climate out of equilibrium? One answer can be found in the way humans alter the land they live on, and in so doing change the planetary albedo—the amount of sunshine that is reflected back to space from Earth’s surface. These changes to the land began long before the twentieth century.

The principal human activity that has directly led to changes in reflected sunlight is deforestation, whereby dark forest canopy is replaced by more open, lighter-colored, more reflective agricultural lands. Deforestation had a big head start over other human activities as a climate factor—with a beginning traceable to the human use of fire.

Fire was, and remains, nature’s own agent of deforestation. Long before humans appeared on Earth, lightning strikes routinely set forests afire, and the flames burned until a lack of fuel or natural extinguishers, principally rainfall, eventually limited their spread. The arrival of humans did nothing to slow the burning; quite to the contrary, early humans valued fire as a mechanism to drive and concentrate game, as a way to produce clear space where they could more easily become aware of nearby predators, and eventually as a tool for agriculture. Once early humans discovered the advantages of fire for light, warmth, cooking, and protection, they worked hard to maintain and preserve fire rather than extinguish it. Fire was a friend, not foe, of the early hunter-gatherers.

Humans later “domesticated” fire—they learned to make fire with tools, to have fire when and where they wanted it. Fire, or the heat it generated, found new uses that eventually powered the industrial revolution. Controlled fires boiled water to produce steam for engines, and fires in confined spaces gave rise to the internal combustion engine—the burning of fuels within closed cylinders that made gas expand and push pistons to produce mechanical energy. Eventually, however, people began to think of fire not only as a friend, but also as a hazard, to the cities in which they lived and to the forest resource that provided construction material and fuel. Since about the middle of the nineteenth century, our attention has turned to extinguishing fire wherever it occurs unintentionally.

As Earth warmed following the end of the last ice age, the global population was much smaller than today. An estimated few million people were scattered over all the habitable continents, with a population density less than one person per square mile, only 10 percent of the density of present-day Alaska. But farmers these people were not. Everywhere, they remained dependent on hunting skills. And so formidable were these hunting skills that even the small human numbers were able to push the giant woolly mammoths, the mastodons, and the great Irish elk toward extinction.

The warming that followed the Last Glacial Maximum was episodic, but by ten thousand years ago the climate had become similar to what we, the present-day representatives of the human race, have known. Over the next ten millennia, almost to the present day, the climate remained remarkably stable at this new level, an equable condition that fostered fundamental changes in the way of life of humans. Climatic stability enabled the establishment of sustainable agriculture, which in turn provided sufficient food to allow population growth and urbanization, along with the specialized skills that develop in the urban setting. When a subset of the population can produce more food than they personally require, not everyone needs to be a hunter, or gatherer, or farmer.

USING THE LAND

Following the retreat of the continental glaciers, forests reoccupied the newly exposed terrain, eventually covering about one third of Earth’s land area. With the establishment of agriculture, humans began to leave another big footprint on the landscape, cutting or burning the forests, plowing soil, and diverting water.

As successful agriculture supported a larger population, the many uses of timber accelerated deforestation. Not only did forests succumb to land clearing for agriculture, but increasingly timber also became an industrial commodity used in dwellings, urban construction, and even for roadbeds. There are still places in the world today where wheeled vehicles, motorized or otherwise, roll across the washboard-like surface of tree trunks laid side by side on the ground, mile after mile. Just a few years ago, in a visit to the temperate rain forest of Chile, I experienced such a roadbed, with its rhythmic staccato vibrations similar to those that accompany travel on a corrugated gravel road.

Another use of timber led to equally dramatic deforestation. The recognition of the simple fact that wood floated on water stimulated the large-scale building of sailing ships for exploration, colonization, trade, piracy, and political and military advantage. The Phoenicians, Romans, and Vikings sailed long distances in substantial wooden ships. The European powers of the Middle Ages became the first practitioners of globalization, sending vessels around the world to disseminate Christianity and accumulate wealth. The 1571 eastern Mediterranean battle of Lepanto, between the European Holy League and the Ottoman Turks, and the failed invasion of England by the Spanish Armada near the end of the sixteenth century both involved hundreds of naval vessels constructed of prime timber, each requiring thousands of mature trees. The forests of Europe no longer seemed infinite.

When the Europeans arrived in North America, about 70 percent of the land east of the Mississippi River was forested. By the end of the nineteenth century that had been reduced to around 25 percent. Much of the landscape was literally stripped naked. Wood was used for nearly every endeavor in the growing nation—for the barges and channels and locks of the inland canal system; for the ties, trestles, and rolling stock of the national railways; for the fences that demarked property; for the telegraph and telephone poles that enabled early telecommunication; and eventually for paper. Photos of the state capital in Vermont toward the end of the nineteenth century show the extent that the hills surrounding Montpelier had been denuded. Such scenes were widespread across North America—almost the entire forest cover of Michigan succumbed to logging, and to fires that often followed close on the heels of careless lumbering practices.

Today, deforestation remains very active in many parts of the world. The tropics are being particularly hard hit, with large areas of Brazil, Indonesia, and Madagascar being subjected to relentless clear-cutting. Half of the world’s tropical and temperate rain forests are now gone, and the current rate of deforestation exceeds one acre per second. That is equivalent to cutting down an area the size of the state of Mississippi each year. But in places such as the eastern states of America, where deforestation was rampant in the nineteenth century, the forests are returning, as other materials have replaced wood in much of the modern economy of the region. The recovery in the eastern states, however, is far from complete—today’s second-growth forests cover only 70 percent of the pre-colonial distribution.
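The comparison between one acre per second and the area of Mississippi is easy to verify. The short Python sketch below does the arithmetic; the figure for Mississippi's area (roughly 48,400 square miles) is an assumption supplied here, not a number given in the text.

```python
# Rough check: does one acre per second add up to a Mississippi per year?

SECONDS_PER_YEAR = 365.25 * 24 * 3600  # about 31.6 million seconds
ACRES_PER_SQUARE_MILE = 640            # exact, by definition

# Assumption (not from the text): total area of Mississippi in square miles.
MISSISSIPPI_SQUARE_MILES = 48_400


def square_miles_cleared_per_year(acres_per_second=1.0):
    """Convert a clearing rate in acres per second to square miles per year."""
    acres_per_year = acres_per_second * SECONDS_PER_YEAR
    return acres_per_year / ACRES_PER_SQUARE_MILE


cleared = square_miles_cleared_per_year(1.0)
print(f"Cleared per year: {cleared:,.0f} square miles")
print(f"Ratio to Mississippi: {cleared / MISSISSIPPI_SQUARE_MILES:.2f}")
```

At one acre per second the yearly total comes to a little over 49,000 square miles, so the comparison holds to within a few percent.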

How do changes in the forest cover affect climate? Deforestation generally changes the color of Earth’s surface from dark green to lighter brown, thus causing more sunshine to be reflected back to space rather than warming the planet’s surface. Countering this slight cooling, however, is the much more significant effect of the cutting and burning of trees itself. In the natural state of affairs, living trees pull the greenhouse gas carbon dioxide (CO2) from the atmosphere in the process of photosynthesis, and dead trees decay, liberating CO2 and returning it to the atmosphere—an atmospheric equilibrium established by pulling out and pumping back equal amounts of CO2. But rapid and large-scale deforestation upsets that equilibrium—the loss of trees decreases photosynthesis, leaving more CO2 in the atmosphere. And when deforestation occurs by burning, it returns CO2 to the atmosphere far faster than new trees can grow and remove it. In sum, deforestation leads to warming of the atmosphere.

HUMAN NUMBERS GROW

The past ten millennia have generally been good times for us humans, and we have multiplied at a breathtaking pace. At 6.8 billion and growing, the human population today is more than a thousand times bigger than it was at the end of the last ice age, some 10,000 years ago. But the growth of population has not been steady over that time—it has accelerated dramatically in recent centuries.

Multiplying a number by one thousand is almost the same as doubling that number ten times. The concept of ten doublings is a good approximation of the growth of Earth’s human population from the last gasps of the ice age, when the population was around four million people, up to almost the present day. The growth began slowly—the first, second, and third doublings together required more than six thousand years, an interval of time that began when humans first began to congregate in villages and ended not long after the construction of the Great Pyramids of Egypt. The fourth doubling required a thousand years, and the fifth, only five hundred. The sixth doubling began when Rome ruled the West and the Han Dynasty the East, and ended as Europe entered the Dark Age. The seventh doubling took place in the seven hundred years between 900 and 1600, slowed by the Black Plague, which killed a quarter of the global population in the fourteenth century. The seventh doubling ended just as European explorers were circumnavigating the globe and claiming colonial territory in the New World. The eighth doubling, occurring in the two hundred years between 1600 and 1800, encompassed the creation of the United States of America, and carried the global population to the landmark statistic of one billion human inhabitants.

An extraordinary change in technology also occurred during the eighth doubling: the discovery of how to access the fossil energy contained in coal. No longer would humans rely solely on wood for heat or flowing water for industrial power. Spurred on by the abundant energy in coal, the world population underwent its ninth doubling in only 130 years, to reach two billion by 1930, in spite of the Napoleonic Wars, World War I, and a virulent flu pandemic. The tenth doubling occurred between 1930 and 1975, overcoming the effects of World War II and three subsequent Asian wars. Those ten doublings took Earth’s population from four million around ten thousand years ago to four billion in 1975, and the doubling interval shrank from twenty or thirty centuries to fewer than five decades. The eleventh doubling, now under way, from four to eight billion, will be achieved around 2025.
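The arithmetic of the ten doublings can be sketched in a few lines of Python. The starting population and the date ranges are taken from the text; the conversion of a doubling time into an average annual growth rate assumes steady exponential growth within each interval, which is a simplification introduced here for illustration.

```python
# Ten doublings from four million people, and the growth rates they imply.

START_POPULATION = 4_000_000  # the text's estimate for the end of the ice age

# Date ranges stated in the text for the later doublings.
DOUBLING_SPANS = {
    "seventh": (900, 1600),
    "eighth": (1600, 1800),
    "ninth": (1800, 1930),
    "tenth": (1930, 1975),
}


def population_after(doublings, start=START_POPULATION):
    """Population after a whole number of doublings."""
    return start * 2 ** doublings


def annual_growth_percent(years_to_double):
    """Average yearly growth (percent) that doubles a population in the given
    number of years, assuming steady exponential growth (a simplification)."""
    return (2 ** (1 / years_to_double) - 1) * 100


print(f"After 8 doublings:  {population_after(8):,}")
print(f"After 10 doublings: {population_after(10):,}")
for name, (begin, end) in DOUBLING_SPANS.items():
    years = end - begin
    print(f"{name:>8}: {years:4d} years, about {annual_growth_percent(years):.2f}% per year")
```

Eight doublings from four million give just over one billion, and ten give just over four billion, matching the milestones of 1800 and 1975. The implied growth rate rises from about a tenth of a percent per year during the seventh doubling to roughly one and a half percent per year during the tenth.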

As I write in early 2009, the global population is at 6.8 billion. Were a person to be born each second, and if no one ever died, it would take more than 215 years to populate Earth with 6.8 billion people. The current rate of population growth is more than a million people each week, the result of more than 4 births, offset by fewer than 2 deaths, each second. At that rate, Earth’s population grows by the addition of a Philadelphia or a Phoenix each week, a Rio de Janeiro each month, and an Egypt each year.
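These rates are simple to check. The Python sketch below uses the text's own numbers; because the text gives births as "more than 4" and deaths as "fewer than 2" per second, the values of exactly 4 and 2 used here are lower-bound assumptions, so the computed growth is a floor rather than an estimate.

```python
# Checking the one-birth-per-second thought experiment and the weekly growth.

SECONDS_PER_YEAR = 365.25 * 24 * 3600
SECONDS_PER_WEEK = 7 * 24 * 3600

POPULATION_2009 = 6.8e9

# Lower-bound assumptions: the text says "more than 4" and "fewer than 2".
BIRTHS_PER_SECOND = 4.0
DEATHS_PER_SECOND = 2.0


def years_to_reach(population, births_per_second=1.0):
    """Years needed to reach a population at a fixed birth rate with no deaths."""
    return population / births_per_second / SECONDS_PER_YEAR


def net_growth(births_per_second, deaths_per_second, seconds):
    """Net number of people added over a span of time."""
    return (births_per_second - deaths_per_second) * seconds


print(f"Years at one birth per second: {years_to_reach(POPULATION_2009):.1f}")
weekly = net_growth(BIRTHS_PER_SECOND, DEATHS_PER_SECOND, SECONDS_PER_WEEK)
print(f"Net growth per week (lower bound): {weekly:,.0f}")
```

With exactly 4 births and 2 deaths each second, the net gain is about 1.2 million people a week; the actual figure is somewhat higher, since both rates are stated as bounds.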

A doubling of Earth’s population, a process that once required a few thousand years, today takes place in less than fifty. The human footprint on the planet is increasingly apparent simply due to the sheer number of people on Earth today. One cannot fully understand the changes in the global environment under way outside the context of the dramatic population growth of the last few centuries.

PEOPLE AND MACHINES

Since the end of the last ice age, humans have grown not only in numbers, but also in technological skill and resource consumption. In only a thousand generations, they have moved from human power to horsepower, at first literally and later with machines that amplified the strength of humans and their domesticated beasts of burden. These machines have enabled us to travel far faster than we or horses can run, carry far more than the capacity of backpacks or saddlebags, dig far deeper in the soil than shovels, hoes, or plows can reach, and kill far more people faster than clubs, spears, or arrows could ever accomplish.

For much of the industrial revolution, the rate at which humans used energy was measured in horsepower, a throwback to one of the animals that humans domesticated for agriculture and transportation. That unit of power remains in common use in the automobile industry, where engines are still rated in horsepower. James Watt, the developer of one of the first commercial steam engines, wanted a way to compare the work his engine could accomplish to the power output of the more familiar workhorse. Watt estimated the lifting that one horse could accomplish in bringing coal out of a mine. He determined that a horse could, using ropes and pulleys, lift a ton of coal up a mineshaft fifteen feet each minute, which, when expressed in the more common terms for the rate of energy use, is about 750 watts.59 This is about the power required for a small microwave oven or space heater. The kilowatt-hour is the common unit of electricity consumption; it corresponds to using electricity at a rate of a thousand watts for one hour, or a little more than one horsepower for an hour. In my home, my family and I consume around twenty-four kilowatt-hours of electrical energy each day, which is the equivalent of having a horse working around the clock.
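The conversion from a horse lifting coal to watts can be worked through explicitly. The Python sketch below is a rough check, not a reconstruction of Watt's own calculation: the text does not say which "ton" is meant, so both the British long ton (2,240 pounds) and the American short ton (2,000 pounds) are tried as assumptions, alongside the modern definition of one horsepower as 33,000 foot-pounds per minute.

```python
# Power needed to lift a ton of coal fifteen feet each minute, in watts.

JOULES_PER_FOOT_POUND = 1.3558179483  # one foot-pound of work in joules
STANDARD_HP_FOOT_POUNDS_PER_MINUTE = 33_000  # modern definition of 1 horsepower

# Assumptions: the text does not specify which ton is meant.
LONG_TON_POUNDS = 2_240
SHORT_TON_POUNDS = 2_000


def lifting_power_watts(weight_pounds, feet_per_minute):
    """Power in watts to raise a weight at a steady rate."""
    foot_pounds_per_minute = weight_pounds * feet_per_minute
    return foot_pounds_per_minute * JOULES_PER_FOOT_POUND / 60


def daily_kwh_to_watts(kwh_per_day):
    """Average power in watts for a given daily energy use."""
    return kwh_per_day * 1000 / 24


HORSEPOWER_WATTS = STANDARD_HP_FOOT_POUNDS_PER_MINUTE * JOULES_PER_FOOT_POUND / 60

print(f"Long ton, 15 ft/min:  {lifting_power_watts(LONG_TON_POUNDS, 15):.0f} W")
print(f"Short ton, 15 ft/min: {lifting_power_watts(SHORT_TON_POUNDS, 15):.0f} W")
print(f"One horsepower:       {HORSEPOWER_WATTS:.1f} W")
print(f"24 kWh per day:       {daily_kwh_to_watts(24) / HORSEPOWER_WATTS:.2f} hp")
```

The long-ton reading lands close to the 750 watts quoted, while the short-ton reading comes out nearer 680 watts. Either way, twenty-four kilowatt-hours a day averages out to a steady thousand watts, a little more than one horsepower.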

Of course, we use much more energy in our daily lives than just electrical energy. There is natural gas used to heat my home, gasoline used in the car I drive to and from work, and energy used in my workplace. Energy is also used for manufacturing, bulk transport of goods, agriculture, and much more. Effectively we all have many more horses working for us. Worldwide, the per capita rate of energy consumption is about 2,600 watts—that is, about 3.5 horsepower for every man, woman, and child on the planet, or the energy equivalent of a global population of workhorses numbering almost 25 billion. And the rate of energy consumption is hardly stable—to the contrary, it increased sixteenfold during the twentieth century alone.

Surely many people in developing countries would welcome the news that they have three and a half horses working for them. But of course the global average rate of energy consumption is deceiving—many people have no horses at all working for them, and some others have a stable full. In the United States, the 300 million residents, about 4 percent of the world’s population, account for 20 percent of the global energy expenditure. That amounts to more than fifteen horses working for every single American.
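The horse counts follow directly from the figures quoted. The Python sketch below reproduces them using the text's numbers for population and energy use; the conversion factor of about 745.7 watts per horsepower is the standard one, supplied here rather than taken from the text.

```python
# Horses per person, worldwide and in the United States.

WATTS_PER_HORSEPOWER = 745.7   # standard mechanical horsepower

WORLD_POPULATION = 6.8e9       # early 2009, from the text
US_POPULATION = 300e6          # from the text
WORLD_WATTS_PER_PERSON = 2600  # from the text
US_SHARE_OF_ENERGY = 0.20      # from the text


def to_horsepower(watts):
    """Convert a power in watts to horsepower."""
    return watts / WATTS_PER_HORSEPOWER


world_hp_per_person = to_horsepower(WORLD_WATTS_PER_PERSON)
global_horses = world_hp_per_person * WORLD_POPULATION

# The United States uses a fixed share of the world total, spread over its residents.
world_total_watts = WORLD_WATTS_PER_PERSON * WORLD_POPULATION
us_watts_per_person = world_total_watts * US_SHARE_OF_ENERGY / US_POPULATION
us_hp_per_person = to_horsepower(us_watts_per_person)

print(f"World: {world_hp_per_person:.1f} hp per person")
print(f"Global herd: {global_horses / 1e9:.1f} billion horses")
print(f"United States: {us_hp_per_person:.1f} hp per person")
```

The result is about 3.5 horsepower per person worldwide, a global herd of nearly 24 billion, and close to sixteen horses for each American. Note that 300 million is about 4.4 percent of 6.8 billion; rounding that share down to 4 percent would raise the American figure slightly.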

Richard Alley, a well-known climate scientist at Penn State University, has carried the horse analogy further. He points out that the carbon emitted from the combustion of fossil fuels is in the form of the greenhouse gas carbon dioxide (CO2). This gas is colorless, odorless, and tasteless, and so its presence in the atmosphere is not easily detected with our human sensory organs. But Alley asks us to imagine how different our attitude toward this important source of global warming would be if the carbon were emitted not as an invisible gas but rather as horse manure that accumulated ankle deep over the entire land surface. That would certainly get our attention in ways that CO2 in the atmosphere does not.

PLOWING AND BUILDING

Deforestation was only the beginning of human interactions with the natural Earth. Once people cleared the land for agriculture and towns, they put sticks, bones, spades, plows—and later tractors, bulldozers, steam shovels, and massive excavators—to work. As earth-movers, humans showed what they could do, and they could do a lot,60 century after century.

The seeds of agriculture were first sown some nine thousand years ago, as villages became established and nomadic life gave way to a more rooted, sedentary social structure. About 2.5 acres of crop and pasture-land were required to feed a person for a year then, and it is not much less even today. Every year, the loss of topsoil associated with tilling the land, at least until the adoption of soil conservation measures in the mid-twentieth century, amounted to about ten tons for each person, or about the volume of ten human graves for each person fed by agriculture. What has changed dramatically, of course, is the number of people to feed. With the global population nearing seven billion people, we lose on average about three inches of soil to erosion every century over all the farm and pasture land of the world, an area close to 40 percent of Earth’s ice-free land surface.

As people developed quarrying and mining, both for raw materials and energy, they dislodged more and more earth. With urbanization also came the need for water for the growing population, thus leading to the excavation of canals and the construction of aqueducts. Political and economic control required road and wall building—the Romans paved nearly two hundred thousand miles of roads and highways, and built Hadrian’s Wall seventy-five miles across the north of England as a defense against the unwilling-to-be-governed Scots. The Chinese built the Great Wall—actually a series of walls—stretching for some four thousand miles across northern China to defend against Mongol raiders. Great monuments, such as the pyramids of Egypt, and less grandiose but widespread burial mounds constituted massive construction projects.

In the modern world, the scale of our human assault on the landscape is no less profound. Coal mining, always a hazardous operation underground, surfaced with the discovery of widespread coal deposits with just a thin veneer of soil covering them. Surface strip-mining increased the volume of coal, rock, and soil moved by a factor of ten or more compared with underground operations. Today in the Powder River Basin of Wyoming, gigantic machines claw into the thick coal seams, delivering load after load of coal to waiting railway hopper cars. Mile-long railway trains leave the mines every twenty minutes. Over a year the trains could form a belt that completely encircles Earth. These trains snake across the prairies of Nebraska in an unending stream, slowly diverging to other tracks that fan the delivery of coal to electrical power plants in the eastern and southern states. And the pits of Wyoming—the residual scars of strip mining—grow larger.
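The image of a belt of coal trains encircling Earth can be checked with simple arithmetic. The Python sketch below assumes that trains leave around the clock, every day of the year, and uses an equatorial circumference of about 24,901 miles; both are assumptions made here for illustration.

```python
# Do a year's worth of mile-long coal trains reach around the planet?

TRAIN_LENGTH_MILES = 1.0       # from the text
MINUTES_BETWEEN_TRAINS = 20    # from the text

# Assumption: Earth's equatorial circumference in miles.
EARTH_CIRCUMFERENCE_MILES = 24_901


def miles_of_train_per_year(train_length_miles, minutes_between_trains):
    """Total length of all trains dispatched in a 365-day year,
    assuming continuous round-the-clock departures."""
    trains_per_year = 365 * 24 * 60 / minutes_between_trains
    return trains_per_year * train_length_miles


total_miles = miles_of_train_per_year(TRAIN_LENGTH_MILES, MINUTES_BETWEEN_TRAINS)
print(f"Train length per year: {total_miles:,.0f} miles")
print(f"Times around Earth: {total_miles / EARTH_CIRCUMFERENCE_MILES:.2f}")
```

Three trains an hour add up to 26,280 miles of train in a year, slightly more than one full circuit of the equator.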

In the east, coal mining continues in Pennsylvania and West Virginia. The long-ago geological collision of Africa with North America folded the coal seams, along with the other sedimentary layers, into the beautiful valley-and-ridge topography of the Appalachians. Erosion along the crests of the ridges has brought the coal seams closer to the surface, but not quite unburdened them to full exposure. But that is no insurmountable problem to coal mining today—just move in with dynamite and earthmovers, scrape off the mountaintops until the coal is exposed, and strip the coal away.61 The overburden, as geologists call the rock that sits between the coal and the surface, is unceremoniously dumped into the adjacent valleys, where it destroys forests on the mountain slopes and causes flooding in the streams occupying the valleys below. The very name of this process—mountaintop removal—expresses both the power and hubris of this human endeavor.

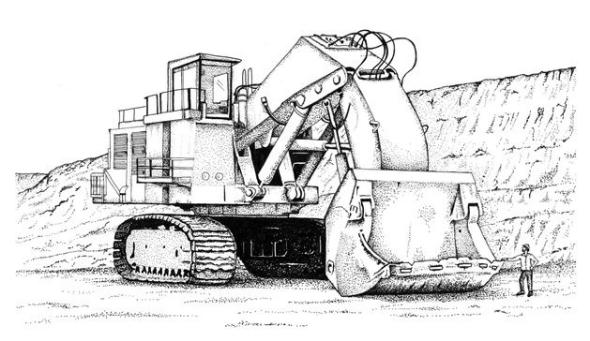

Giant earth excavator62

ALL OF THESE changes brought to the landscape by industrious people are best understood and more fully appreciated only when they are compared with natural processes that move earth around. When compared to nature’s ability to move sediment and rock, is the human impact trivial or enormous? This question fascinated Bruce Wilkinson, one of my geology colleagues at the University of Michigan for many years. Bruce is not a dapper tweed-attired professor—he is a gritty field geologist, always in Levi’s; with his big voice, he is never afraid to speak truth to power or call attention to a naked emperor. Not surprisingly, Bruce approached the question of whether human earth-moving was significant with geological logic. He reasoned that the long-term record of sediment erosion and transport can be found in the sediment deposits that have accumulated on the ocean floor, and in the sedimentary rocks of past eras on the continents. He calls this the “deep-time perspective.”63 It involves the careful estimation of sediment volumes: in the deltas of all the rivers of the world, on the continental shelves, on the deep ocean floor, and in the ancient sedimentary rocks now stranded on the continents.

Wilkinson’s calculations showed that over the past five hundred million years, natural processes of erosion have on average lowered Earth’s land surface by several tens of feet each million years. When he next calculated the present-day rate of erosion, the result was startling—humans are moving earth today at ten times the rate that nature eroded the planetary surface over the past five hundred million years. Perhaps more alarming is the erosion rate in the places where the erosion is actually occurring. On land used for agriculture, soil loss is progressing at a rate almost thirty times greater than the long-term worldwide average of natural erosion.

Not only is soil erosion much more rapid than in the geological past, but the rate of soil loss also far exceeds the rate at which new soil is produced.64 In the same way that our consumption of petroleum far exceeds the pace at which nature makes it, and our withdrawal of groundwater far exceeds the rate at which nature recharges aquifers, the human practices that lead to the loss of agricultural soil are effectively “mining” the soil, using up a resource of finite extent. As humorist Will Rogers once noted, “They’re making more people every day, but they ain’t makin’ any more dirt.” David Montgomery of the University of Washington estimates that almost a third of the soil capable of supporting farming worldwide has been lost to erosion since the dawn of agriculture, with much of it occurring in the past half century.65

Once the land surface is plowed for agriculture, or opened to livestock grazing, wind has easier access to dust to blow around. The blowing dust is dropped eventually, and some falls into lakes and the ocean. The amount of dust accumulating in lakes of the western United States has increased by 500 percent during the past two centuries, an increase attributable to the expansion of livestock grazing following the settlement of the American West.66

Blowing dust travels the world. Satellite photos show huge clouds of dust billowing out of the Sahara Desert in northern Africa, in giant plumes that spread westward over the Atlantic Ocean. From China, similar clouds head eastward across the Pacific, but unlike the clouds from the uninhabited Sahara, the clouds emanating in China are not just dust—they include industrial pollution that the winds carry all the way to the western coast of North America. The atmosphere, by distributing industrial waste and unintentional erosion from agriculture, is an effective agent of globalization—the globalization of pollution.

Blowing dust and soot from diesel engines, cooking fires in rural undeveloped areas, and the burning of grasslands and forests are also having their effects on climate, both regionally and globally. In the same way that ash from large volcanic eruptions blocks sunshine from reaching Earth’s surface by making the atmosphere less transparent, dust and soot also dim the Sun, at least as it is seen from Earth. But the dark soot particles in the atmosphere also absorb some of the sunlight reflected back to space from Earth’s surface, thereby trapping energy in the visible wavelengths of the solar spectrum just as greenhouse gases absorb some of the infrared wavelengths. The dust and soot also lead to accelerated melting of snow and ice around the world, by darkening the white surface ever so slightly. The darkening causes less sunlight to be reflected back to space and more solar heat to be absorbed by the dust and soot, thereby further increasing the melting of the snow and ice.

FLOWING WATER

It is not just the land that people have changed—they have had equally dramatic effects on the water. The development of agriculture and urbanization could not have proceeded without the parallel development of water resources. The roots of hydraulic engineering date back almost six thousand years, and these special skills developed independently in many locations. In ancient Persia, large underground conduits called qanats carried water from the highlands to the arid plains. People built levees to stabilize river channels, and canals to carry water to fields for irrigation along the banks of the Nile in Egypt, the Indus in Pakistan, and the Yellow River in China. In the Fertile Crescent of Mesopotamia, water management along the Tigris and Euphrates reached high levels of sophistication thousands of years ago.

Following the retreat of the last continental ice sheets, many areas of North America and Europe were dotted with small lakes, marshes, and swamps. And as sea level rose following deglaciation, the estuaries of rivers extended farther inland, creating additional wetlands. Agricultural demands, land development pressures, and public health concerns led to the draining of wetlands. The District of Columbia, seat of the United States government, was originally a malarial swamp, as was much of southern Florida. The eradication of malaria in the United States was a singular public health achievement resulting from the draining of wetlands.

Today, half of the wetlands that existed around the world only ten thousand years ago are gone. Although there have been some genuine benefits associated with wetland drainage, there have also been losses. When wetland drainage began in earnest, little was known of the many services wetlands provided—their importance in the ecology of wildlife and the role they played in water purification and as a buffer against storm surges in coastal areas. And the assault on wetlands is not over—in the United States, wetland loss continues at a rate of one hundred thousand acres every year.

We have left our imprint on the lakes and rivers of the world as well. The Aral Sea, a once huge inland body of water situated along the border between Kazakhstan and Uzbekistan, in central Asia, was only half a century ago high on the list of the world’s largest lakes, surpassed in area only by the upper Great Lakes of North America and Lake Victoria in Africa. Today the Aral Sea has almost disappeared, reduced to a scant 10 percent of its former area.67 The shrinking has not been due to long-term climatic cycles that sometimes lead to lake fluctuations elsewhere. No, the Aral Sea has been the victim of water diversion from the two principal rivers that feed it, water diverted to irrigate cotton planted in the desert of Uzbekistan. Diversion canals began taking water away from the Aral Sea following World War II, and by 1960 the lake level began to fall—almost a foot each year in the 1960s, but tripling by the end of the century as withdrawals grew.

As the lake level fell and the water volume diminished, the remaining water became more saline, in much the same way that Great Salt Lake of Utah became saltier as it shrank from its glacial meltwater maximal extent. Today what remains of the Aral Sea has a salt concentration ten times its pre-diversion salinity, and three times the salinity of ocean water. The fishing industry of the Aral Sea, formerly producing one sixth of the fish of the entire Soviet Union and employing tens of thousands of people, disappeared with the water. Abandoned fishing boats now sit motionless on a sea of sand.68

RIVERS HAVE FROM the earliest days of human settlement been favored places for villages. They provided domestic and agricultural water, avenues of transportation, and power for industrialization. The human tendency to “control and improve” nature led to the construction of dams on many rivers. Today, some fifty thousand large dams and many smaller ones have altered the natural flows of rivers virtually everywhere. No major river in the United States has escaped damming somewhere along its course; not a single one flows unimpeded from headwaters to the sea.

In the spring of 1953, when I was in high school in Omaha, a major flood began to build farther up the Missouri River as heavy snow melt and ice dams raised this great river to flood stage. As the crest approached Omaha, it became apparent that it might top the levees and floodwall that protected the lower levels of the city, including the airport. The call went out for volunteer manpower to sandbag the levees. Classes be damned—this was an opportunity for students to get involved in some excitement, and so many of my friends and I were soon patrolling and reinforcing the levees as the water crept upward. When the crest was only hours away, it became obvious that it might top the floodwall, so emergency construction began to place flashboards atop the floodwall—and they proved essential. The river crested two feet above the floodwall, but was held back by the flashboarding. Omaha was saved from a major flood, ironically the last flood ever to roll down the upper Missouri River Valley. Soon after, six large dams were constructed upriver, in the Dakotas and Montana, that have ended the Missouri River’s free flowing days.

Later in my professional career as a geologist, I rafted the length of the Grand Canyon of Arizona, a chasm cut by millions of years of erosion by a turbulent Colorado River. To every serious geologist, the Grand Canyon is an obligatory pilgrimage, a geological Mecca. When first explored by John Wesley Powell, an early director of the U.S. Geological Survey, in the 1860s, the Colorado River was free-flowing, with treacherous rapids and large seasonal changes in volume. Today the river that carved the greatest canyon in the world is just a controlled-flow connection between the huge Glen Canyon and Hoover dams, two of the five large dams on the Colorado. In the Grand Canyon, the Colorado River predictably rises and falls on a daily basis, as water is released from the Glen Canyon Dam each day. Ironically, even as the flow has steadied, some of the rapids have become even fiercer. Within the canyon, where tributaries join the Colorado, they continue to deliver debris that no longer is moved downstream by an annual spring flood on the Colorado. The result is a growing fan of boulders spilling into a narrowing river channel—a sure recipe for enhancement of rapids.

A few years ago I traveled on a small boat down the Douro River in Portugal.69 The Douro arises in northern Spain (where it is called the Duero) before crossing into Portugal on its way to the Atlantic. The stories of the early navigators of the Douro tell of cataracts, turbulence, and danger. Much to my surprise, the Douro today is little more than five narrow flat lakes behind five large dams. Whatever current exists today is due to controlled flow through the locks and spillways of the dams.

Dam construction is hardly a thing of the past. The Itaipu Dam, on the Paraná River of South America, became operational in 1984. When it opened it was the largest in the world in terms of electrical generating capacity. Itaipu’s electricity output has since been overtaken by the massive Three Gorges Dam on the Yangtze River in China, already impounding water and scheduled for full operations in 2012. Three Gorges is only the latest of major dam construction projects on all the principal rivers of southern Asia, and China has plans for another dozen in the upper reaches of the Yangtze.

Dams and diversions so diminish the flow of major rivers that some rivers barely reach the sea. The apportionment of Colorado River water between the United States and Mexico (the river’s mouth is in Mexican territory) was decided on the basis of measured river volumes of the early 1920s. Unappreciated at the time was the fact that the river volumes then were at historic highs, not to be seen again in the rest of the twentieth century. Today, after the allotted withdrawals from the Colorado River in the United States, there is little water left for Mexico, and in some years not a drop of water flows out of the mouth of the Colorado into the Gulf of California.

The situation is not very different on the Ganges or the Nile, where the flows at the river mouths have been reduced to just a trickle. The fertile Nile Delta, long the principal source of food for much of Egypt, is being slowly reclaimed by the Mediterranean Sea because so little sediment is carried by the weakened Nile to replenish the soil of the delta. Farther upstream, where the Nile now flows slowly, and in the giant reservoir behind the great dam at Aswan, where it hardly flows at all, quiet backwaters have been created that have allowed schistosomiasis, a debilitating parasitic infection, to flourish in places it never appeared before. Schistosomiasis is second only to malaria in terms of tropical diseases that afflict humans.

The sediment load is not the only burden moved along by rivers. They also carry heavy chemical loads, picked up from industrial pollution, inadequate sewage treatment systems, increased runoff from the impervious surfaces of urban areas, and the fertilizers and pesticides used in agriculture. More manufactured nitrogen fertilizer is spread over agricultural lands than is provided by the entire natural ecosystem. Although the sediment load is slowed in its passage to the sea by the presence of dams, the chemical load carried by rivers is virtually unaffected by those barriers. Effectively, the chemical waste streams of entire continents are flushed into the sea.

This flux of chemicals to the sea is increasingly producing “dead zones” in the oceans, regions of the ocean floor devoid of all but microbial life forms. The delivery of fertilizers to the sea promotes the growth of algae in the surface waters, but when these organisms die, they fall to the seafloor, where they fuel microbial respiration. The dissolved oxygen in the bottom water is depleted by the microbes, and is therefore unavailable for other bottom-dwelling marine creatures, principally fish and mollusks, that need oxygen.

Dead zones are appearing all over the globe—in Chesapeake Bay; the Gulf of Mexico; the Adriatic, Baltic, and Black seas of Europe; and along the coast of China. The number of dead zones, now in excess of four hundred, has roughly doubled every decade since the 1960s. In aggregate they now cover an area approaching one hundred thousand square miles,70 about the size of the state of Michigan.
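The doubling claim lends itself to a rough back-of-the-envelope check. The sketch below assumes a clean doubling every ten years and anchors the count of four hundred in the mid-2000s; the function name, the anchor year, and the idea of a perfectly smooth exponential are illustrative assumptions, not part of the underlying survey.

```python
def dead_zone_count(year, anchor_year=2005, anchor_count=400, doubling_years=10):
    """Estimate the number of dead zones in a given year.

    Assumes pure exponential growth that doubles every `doubling_years`,
    pinned to `anchor_count` zones in `anchor_year` (both are assumptions).
    """
    return anchor_count * 2 ** ((year - anchor_year) / doubling_years)


# Walk back one decade at a time from the assumed anchor year.
for year in (1965, 1975, 1985, 1995, 2005):
    print(year, round(dead_zone_count(year)))
```

Under these assumptions, four doublings separate the mid-1960s from the mid-2000s, so the model implies only a few dozen dead zones at the start of the period.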

WATER UNDERGROUND

Large numbers of people in the United States drink water that is pumped from underground. And not all of these people live in rural areas—many cities also draw some of their municipal water from wells. Almost 40 percent of the public water supply in the United States is pumped from subsurface aquifers, and nearly all residential water in rural areas is drawn from a well. But domestic use of groundwater represents the lesser call on underground water—twice as much is pumped for agricultural irrigation in the areas of the United States where precipitation is inadequate, at least for the crops selected for cultivation. The regions most dependent on groundwater for agriculture are in California and the Southwest (where there is a great use of the surface water as well) and in the vast Great Plains of Montana, the Dakotas, Nebraska, Kansas, Oklahoma, Texas, New Mexico, and Colorado.

On the Great Plains, the 100° meridian of west longitude is the dividing line for irrigation—to the east there is usually sufficient winter snow and summer rain to keep the soil moist enough to support agriculture naturally. West of longitude 100° the crops need some help from irrigation. Fortunately, thick layers of sand and gravel, representing millions of years of waste and wash from the eroding Rocky Mountains, lie beneath the surface of the Great Plains. The never-ending competition between tectonic uplift of the mountains and the erosive power of rain, snow, and ice produces vast amounts of debris, carried eastward by streams and rivers meandering across the plains. And within the buried deposits of sand and gravel, water fills the pore spaces between the grains, water that had not seen the light of day for many thousands of years—until mechanized agriculture came to the Great Plains in the twentieth century.

The prairie pioneers overturned the sod and dug wells to withdraw water from the saturated aquifers beneath them. The first mechanical boost to pumps came from windmills, which took advantage of the wind that came “sweeping down the plain.” Rural electrification provided steady energy independent of the vagaries of the wind, and soon pumps of higher capacity were pulling more water from the aquifers below.71 The best known of these buried aquifers is called the Ogallala, the name of a small town in western Nebraska. More than a quarter of the irrigated land in the United States sits atop this aquifer.

Withdrawals of water from the Ogallala aquifer have been at a far faster rate than nature has been able to recharge it—leading to a drop in the subsurface water table. In areas of intense irrigation, such as in southwestern Kansas and west Texas, the water table has been dropping several feet each year, requiring wells to be deepened just to reach the water. In some places the water has been exhausted. Effectively, the water that had been in place since the end of the last ice age has been “mined out.” With the draining of this aquifer at a rate equal to some fifteen Colorado Rivers each year, natural recharge cannot keep pace—the groundwater in reality is a non-renewable resource.

WILL WE TAKE IT ALL?

Yet another measure of our footprint on Earth is the fraction of the total plant growth on the continents that we have appropriated to serve our needs for food, clothing, shelter, and other necessities and amenities of life. Before humans became a presence on Earth, the fraction appropriated was zero. But today, with more than 6.8 billion people occupying the continents, the fraction is certainly not zero. This measure of the human dominance of the land-based ecosystems is variously estimated at between 20 and 40 percent of net primary production,72 an astounding number in spite of the uncertainty.

The appropriation of resources for human use is not restricted to the land. In the oceans, an estimated 90 percent of the large predator fish present in the oceans a half century ago are gone. Although there are ample dry statistics to make this point, none is quite so visual as an archive of tourist photos from Florida showing the size of the catch worth bragging about, progressing through the twentieth century. Decades ago the catch worth posing for was as big as the fisherman, but through the years photos reveal smaller and smaller fish, with today’s trophy catch seldom exceeding three feet.

Three quarters of the marine fisheries around the world are now fished to capacity or overfished. Thirty percent of the fisheries have collapsed, a term defined to indicate a depletion greater than 90 percent of the recorded maximum abundance.73 Cumulative catches of the global fishing fleet reached a maximum in 1994, and since have declined by 13 percent, despite large increases in the fleets and their range of operations. A well-recognized indicator of marine resource depletion is the fact that the fish catch is declining despite many efforts to increase it.

Human depletion of other resources can be recognized in the same way. Petroleum production in the United States reached a peak in the mid-1970s and has declined ever since, in spite of the fact that the petroleum industry has intensified exploration and has been motivated by substantial increases in the price of oil and natural gas. Worldwide, the production of petroleum will likely reach a peak in the next decade or two.

LEAVE ONLY FOOTPRINTS

As tourists, we have been urged to “take only pictures, leave only footprints” in order to preserve the natural and historical sites we visit. But the societal footprint we are leaving on Earth will win us no prizes for environmental stewardship. Species are disappearing from the global ecosphere at rates about a thousand times faster than only a millennium ago. A 2008 status report on the world’s 5,487 known species of mammals showed that more than one fifth face extinction, and more than half show declining populations, largely because of declining habitat and hunting on the land, and overfishing, collisions with boats and ships, and pollution in the sea.74 Gus Speth, the dean of the Yale School of Forestry and Environmental Studies, says that “Earth has not seen such a spasm of extinction since 65 million years ago,” when the dinosaurs and many other species disappeared following the collision of an asteroid with Earth.75

We humans, in addition to manufacturing chemicals that impact the land, air, and waters of Earth, also produce heat, sound, and light, each with its own environmental consequences. It is well known that the buildings, roads, and roofs of a city absorb the heat of the day, and reradiate it through the night, in amounts greater than do the surrounding natural areas. Even the ground beneath the city has warmed substantially, as the warm footprint of heated buildings has replaced the cold air of winter at the ground surface. I recall that when I first started research into underground temperatures, my colleagues and I drilled a borehole near the laboratory for testing our instruments. The very first measurements of the temperatures down this hole surprised us—they showed that the soil next to the building had warmed almost ten Fahrenheit degrees since the building was constructed many decades earlier.

If the gradual warming of cities is a subtle manifestation of urbanization, the generation of light is an obvious one. To anyone driving at night on the highways of America, cities glow on the horizon like giant navigational beacons. And air passengers flying cross-country on a clear night see an illuminated panorama of the cities, towns, and isolated rural homes. The activities of people extend well into the hours of natural darkness, all made possible by our relatively newfound ability to “domesticate” light, just as we learned to domesticate fire thousands of years ago. The increase in urban illumination has long been known to impair the work of astronomers, who find once-visible stars gradually disappearing in an ever-brighter sky. And it deprives urban dwellers of one of the most beautiful experiences of all—viewing the millions of stars in the nighttime sky, visible only in areas free of light pollution.

I recall camping in the middle of the Kalahari Desert while engaged in some geophysical fieldwork in Botswana. In the dark of night—and it was truly dark—I found nothing so exquisite as gazing upward to see the majesty of a sky filled with uncountable stars, distant points of illumination in every direction, as far as the eye could see. In the Book of Genesis, God declares that he will “multiply your descendants into countless thousands and millions, like the stars above you in the sky.” What a tragedy that so many people live their entire lives without ever experiencing this image.

But light pollution affects more than astronomers and city dwellers.76 The other living creatures of the night, those we call nocturnal, display evolutionary behavior keyed to the existence of darkness. Some bats have become urbanized because their favorite insects swarm around urban light sources. And nocturnal mammals—many rodents, badgers, and possums—have become more vulnerable to predators because of their increased visibility at night. Some species are tuned to the longer-term variations in light and darkness that accompany seasonality. Many birds breed when daylight reaches a certain duration. If the nights are shortened artificially, and the days thereby apparently lengthened, the birds mistakenly think it is time to breed. Unfortunately, many of the grubs and insects that will nourish the hatchlings have not received the message that they should also advance their breeding cycle.

The biological rhythms of many species are tied to the daily cycles of light and darkness—these are called circadian rhythms. Because daylight provides the setting for work, the human body itself has evolved to use nighttime for sleeping. Alaskan, Scandinavian, and Russian hotels provide dark window shades to simulate darkness for summer tourists. Some geologists working summers at polar latitudes with twenty-four hours of daylight find that there is a real tendency to work too long, which leads to inadvertent fatigue and a greater susceptibility to accidents and illness.

No one needs to be reminded that populated areas are noisy areas: the sounds of vehicles throughout the day and night; of construction equipment digging holes, driving piles, and moving earth; of airplanes passing overhead; of motorboats, jet skis, and snowmobiles; of loudspeakers, radios, and televisions blaring. We go into the woods or the countryside for “a little peace and quiet,” to leave behind the noisy urban environment.

But it may surprise you to learn that the waters of the oceans are not quiet sanctuaries free of anthropogenic noise. To be sure, the cacophony of the cities is absent, but sound travels very efficiently through ocean water, so that no place beneath the surface of the oceans is free of industrial sound. The cranes and conveyors that load and unload maritime cargo, the clanking of anchor chains dropping or lifting, the hum of diesel engines, the slow churning of massive propellers pushing ships through the sea, the pinging of sonar depth-finder systems, the underwater air guns and explosives used in seismic exploration of the sea bottom, naval training exercises with depth charges—the list of man-made sounds in the ocean is virtually endless. There is no place in the oceans—no place at all—where a sensitive hydrophone on the seafloor will not detect sounds of human origin.

Because so much of the ocean below the surface is dark, marine creatures often rely on sound for communication and navigation. The human noises have become distracting and disorienting, and in some cases of nearby noise, physically debilitating. Migration routes and breeding habits of marine mammals have changed in response to the geography and intensity of the noise—they, too, seek peace and quiet in the ocean. The mass grounding of whales and dolphins has on occasion been linked to high-intensity military sonar exercises in the vicinity. The U.S. Navy has acknowledged the “side effects” of this activity, but when challenged in court to end the noise-making, the navy argued that the necessity of conducting such exercises overrode the damage to the marine mammal population. In late 2008, the United States Supreme Court decided in favor of the navy.77

A BLANKET IN THE ATMOSPHERE

The parade of human effects on the land and water that we have just viewed certainly has left big footprints. But the biggest driver of climate change today, without question, is the impact of human industrial activity on the chemistry of the atmosphere. Since the beginning of the industrial revolution in the eighteenth century, there has been an ever-increasing extraction and combustion of coal, petroleum, and natural gas. The burning of these carbon-based fuels, previously sequestered in geological formations for millions of years, has rapidly pumped great quantities of greenhouse gases into the atmosphere, gases that affect Earth’s climate by absorbing infrared radiation trying to escape from Earth’s surface, thereby warming the atmosphere.

The industrial pollution of the atmosphere actually began long before the combustion of fossil fuels. Ice cores in Greenland show deposition of lead during Roman times, when that metal was used widely in the plumbing systems of the Roman Empire. The word plumbing derives from the Latin word for lead—plumbum—and Pb is the symbol for lead in the periodic table of elements. The smelting of lead and its use in manufacturing created lead dust that circulated widely in the atmosphere, some of which fell on Greenland and was incorporated into the accumulating ice. The deposition of Roman lead in Greenland came and went in tandem with the rise and fall of the Roman Empire. Lead reappeared in the Greenland ice when leaded gasoline made its debut as an automobile fuel, and largely disappeared when it was phased out as a fuel additive.

Another example of industrial atmospheric pollution is tied to the chemicals known as chlorofluorocarbons (CFCs). These inert nontoxic synthetic chemicals were first developed in 1927 as refrigerants, to replace the more combustible and toxic chemicals such as ammonia then in common use in refrigerators. In the decades following World War II, more and more uses for CFCs were discovered—for air-conditioning in homes, commercial buildings, and autos, and in the fabrication of electronics, foam insulation, and aerosol propellants. But the very property that made the CFCs attractive, their relative lack of reactivity with other chemicals, also made them very durable. Over decades they accumulated in the atmosphere, where they played an important role in the destruction of stratospheric ozone and the opening of the ozone hole in the last two decades of the twentieth century.

Ever since the beginning of the industrial revolution, air pollution has had deleterious environmental impacts, not the least of which have been the effects on public health. In 1948, a five-day-long incident befell the industrial town of Donora, Pennsylvania, on the Monongahela River, some twenty miles southeast of Pittsburgh. In what has been described as “one of the worst air pollution disasters in the nation’s history,”78 a combination of unusual atmospheric conditions compounded by smoke-stack discharges of sulfuric acid, nitrogen dioxide, and fluorine from two large steel production facilities led to a stagnant yellowish acrid smog. Respiratory distress for several thousand residents, and death for at least twenty, ensued.

In 1980, well before the fall of the Iron Curtain that separated the socialist countries of Eastern Europe from their Western European neighbors, I was invited to lecture in Czechoslovakia and East Germany about my geothermal research. On the highway from Prague to Leipzig through some nicely forested areas, I encountered a ten-to-fifteen-mile-long stretch of dead trees—defoliated gray matchsticks pointing mutely to the sky. Soon the cause became apparent: a massive chemical factory spewing great clouds of toxic pollution into the atmosphere, snuffing the life out of the forest downwind. The death of the forest was apparently considered acceptable collateral damage. One can only imagine what the airborne pollution did to the people and wildlife living nearby.

The combustion of coal has long been tied to health problems. In the United Kingdom, well known for dense, damp, chilly fogs, the addition of coal dust and tars to the fog forms a toxic brew associated with dramatic increases in pulmonary disorders. In the last decades of the nineteenth century, several “smog events” were accompanied by death rates as much as 40 percent higher than the seasonal norms. The infamous five-day London smog in December of 1952, an event that darkened the city at midday, was the product of a cold, dense fog made worse by increased burning of high-sulfur coal by London’s chilly residents. It led to more than four thousand coincident fatalities, with another eight thousand to follow in the weeks and months thereafter.

Industrialization and atmospheric pollution usually grow together. Following the rapid industrialization of Asia in the past few decades, severe atmospheric pollution is now commonplace over large parts of Asia. Dense brown clouds of industrial haze regularly blanket many large Asian cities, where automobiles and coal-fired power plants have grown, on a per capita basis, even faster than the population. In rural areas where consumerism has yet to penetrate, the simple aspects of daily living, such as cooking fires fueled by wood or dung, or the seasonal burning of the fields to prepare them for the next planting, also contribute to the haze that dims the Sun, retards agricultural production, and burns the lungs of millions of urban dwellers. The non-Asian world became aware of this acute pollution as China attempted to “clear the air” for the 2008 Olympic Games in Beijing, by closing factories and restricting automobile usage for weeks in advance of the Olympics.

The rapid post-World War II growth of coal-fired electric power plants in the central industrial states of America—Illinois, Indiana, Michigan, Wisconsin, and Ohio—was also followed by widespread atmospheric pollution that produced what came to be known as acid rain. The combustion of coal with high sulfur content yielded oxides of both sulfur and nitrogen, which reacted with water vapor in the atmosphere to produce potent acids. These acids were then deposited by rain and snowfall on the forests, lakes, and soil hundreds of miles downwind of the midwestern power plants, principally in the northeastern states and adjacent Canada.

In only a half century, the acid rain and snow led to a decline in the pine forests of the Northeast, and increased the acidity of some lakes in the Adirondacks to a level where fish eggs could not hatch. In the late 1960s and early 1970s, dead lakes surrounded by declining forests and acidic soils appeared with increasing frequency. When the cause of the acid rain was recognized, it provided some of the motivation for passage of the Clean Air Act in 1970, which, among other things, led to installation of scrubbers on smokestacks to capture the sulfur and nitrogen compounds that caused the downwind acid rain. The authors of the Clean Air Act, however, did not foresee a more dramatic environmental consequence of the burning of coal—the production of CO2 that would accumulate in the atmosphere and dissolve in the oceans, altering Earth’s climate and producing a more acidic ocean. Let us now take a close look at this unanticipated world-changing pollutant.

CO2

In 1958, Charles David Keeling of the Scripps Institution of Oceanography in California began making measurements of the CO2 in the atmosphere atop Mauna Loa, in Hawaii. These daily measurements, which have continued to the present day, provide the world’s longest instrumental record of the direct consequence of burning carbon-based fossil fuels. This continued monitoring of carbon dioxide levels in the atmosphere is another example of the important Cinderella science that I describe in chapter 4.

These measurements show an increase in CO2 concentrations in the atmosphere from 315 parts per million (ppm)79 in 1958 to 390 ppm in 2009, an increase of nearly 24 percent over just the past half century.

Riding along on this upward trend of CO2 in the atmosphere is a fascinating annual oscillation—a small seasonal up-and-down that is the signature of photosynthesis occurring in the plant life of Earth each year. Most of the land area on Earth, and therefore most of the land plants, are in the Northern Hemisphere. During each Northern Hemisphere summer the growth of plant life draws CO2 out of the atmosphere; in winter, when much of the vegetation is dormant, the CO2 resumes its upward climb. This oscillation represents the actual metabolism of plant life on our planet, the annual “breathing” of Earth’s green vegetation.

Carbon dioxide concentrations in the atmosphere over Mauna Loa from 1958 to 2008. Data from Scripps Institution of Oceanography

During the 1990s, CO2 continued its climb above preindustrial levels at a rate of 2.7 percent per year, a rate more than twice as great as when Keeling began making measurements in 1958. In the first decade of the twenty-first century the rate has increased to 3.5 percent. Now, more than a half century long, the Keeling graph is the iconic signature of human energy consumption,80 acknowledged even by skeptics.

The growth of CO2 in the atmosphere is only part of the story—more than a third of all the CO2 emitted by the global industrial economy enters the ocean, with effects that we are only beginning to understand. Oceanic uptake of CO2 leads to a progressive decline in the pH of the water in the oceans, an indicator that the water is trending toward becoming a weak acid, a process sometimes referred to as ocean acidification, although the seawater is not yet an acid. A lowering of the pH does result in a decline in the production and stability of minerals that calcifying organisms use to build reefs, shells, and skeletons, an effect likened to the onset of osteoporosis in the marine environment. This is already being observed along the Great Barrier Reef of Australia, where the calcification rate has decreased by about 14 percent since 1990, the largest decline in the past four hundred years.81
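Because pH is a logarithmic scale, a small decline in pH corresponds to a disproportionately large rise in hydrogen-ion concentration, even while the water stays above the neutral value of 7. The sketch below illustrates that relationship; the before-and-after values of 8.2 and 8.1 are commonly cited round figures for surface seawater and are used here as illustrative assumptions, not as numbers from this chapter.

```python
def hydrogen_ion_increase(ph_before, ph_after):
    """Fractional rise in hydrogen-ion concentration when pH falls.

    Uses the definition pH = -log10([H+]), so the concentration ratio
    is 10 ** (ph_before - ph_after).
    """
    return 10 ** (ph_before - ph_after) - 1


# Illustrative (assumed) surface-ocean values, not taken from the text.
PH_BEFORE = 8.2
PH_AFTER = 8.1
NEUTRAL_PH = 7.0

rise = hydrogen_ion_increase(PH_BEFORE, PH_AFTER)
print(f"Hydrogen-ion concentration rises by about {rise:.0%}")
print("Still alkaline:", PH_AFTER > NEUTRAL_PH)
```

With these assumed values, a drop of only a tenth of a pH unit means roughly a quarter more hydrogen ions in the water, while the seawater itself remains on the alkaline side of neutral.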

IN PERSPECTIVE

We can place the Keeling measurements into a much longer temporal context provided by microscopic bubbles of air trapped in ice. In chapter 3, while discussing the rhythms and orbital pacemakers of the last several ice ages, I mention that the deep ice core drilled at the Russian Vostok Station in East Antarctica revealed a 100,000-year periodicity in the temperature of precipitation of the annual snowfall. This same ice core, which at its bottom comprises ice as old as 450,000 years, also gives us a remarkable historical record of atmospheric carbon dioxide and methane.

The mechanism of record-keeping in the ice is fascinating—when snowflakes fall they accumulate into a very fluffy layer of flakes and air, which gets compressed into ice by the weight of subsequent snowfalls accumulating above. The air that is present becomes trapped as microscopic bubbles in the ice layer, and those bubbles constitute samples of the atmosphere at the time the snow recrystallized into an ice layer. The gases contained in the bubbles can be extracted and chemically analyzed to reveal the year-by-year greenhouse gas concentrations in the atmosphere over the complete time span represented in the ice core.

Changes in temperature and atmospheric carbon dioxide at the Vostok site in Antarctica over the past four hundred thousand years. Data from the Carbon Dioxide Information Analysis Center (CDIAC) of the Oak Ridge National Laboratory

These remarkable graphs show not only that the temperature went up and down with a 100,000-year periodicity, but that CO2 did as well, in a pattern that is almost a direct overlay of the temperature record. The fundamental lesson of this overlay is that temperature and CO2 are closely related in the processes that produce ice ages. A second observation drawn from these graphs is that atmospheric CO2, over the course of the four complete ice age cycles shown, ranged roughly between 200 and 300 parts per million (ppm), from the cold of a glacial maximum when ice sheets covered vast expanses of the northern continents, to the briefer warm times that separated glaciations. Ice cores from elsewhere in Antarctica have extended the history of temperature and CO2 back some 800,000 years, but throughout this long record CO2 did not move out of the 200- to 300-ppm range.

But no longer. At the beginning of the industrial revolution in the middle of the eighteenth century, ice bubbles showed a concentration of CO2 at around 280 ppm, near the upper value of 300 ppm that characterized the earlier interglacial periods. By 1958, when Dave Keeling began his measurements of atmospheric CO2 atop Mauna Loa in Hawaii, it had already reached 315 ppm, well beyond the upper limit of the past 800,000 years. The concentration in 2009, a half century after Keeling began these measurements, reached 390 ppm, and is increasing by 2 to 3 ppm each year. It will likely cross the 400 ppm threshold in just a few more years. In the absence of any effective mitigation of greenhouse gas emissions in the near future, CO2 in the atmosphere will reach the 450 ppm mark by 2030, a level that most climate scientists think will be accompanied by increasingly dangerous changes in our climate, which I describe in the next chapter.
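The arithmetic behind these threshold dates can be checked with a short sketch. It assumes a constant yearly increase starting from the 390 ppm quoted for 2009, which is a simplification, since the growth rate itself has been rising. The function name and its parameters are hypothetical.

```python
import math


def year_reaching(target_ppm, rate_ppm_per_year,
                  start_ppm=390.0, start_year=2009):
    """Calendar year in which a constant linear trend first reaches target_ppm.

    Assumes a fixed increase each year; a real trend that accelerates
    would reach the target sooner.
    """
    years_needed = (target_ppm - start_ppm) / rate_ppm_per_year
    return start_year + math.ceil(years_needed)


# The text quotes an increase of 2 to 3 ppm per year.
for rate in (2.0, 3.0):
    print(f"{rate} ppm/yr: 400 ppm in {year_reaching(400, rate)}, "
          f"450 ppm in {year_reaching(450, rate)}")
```

Under this simplification, the 400 ppm threshold falls in 2013 or 2014, consistent with "just a few more years." The 450 ppm mark arrives around 2029 at 3 ppm per year but not until about 2039 at 2 ppm per year, so a date of 2030 implies growth at the upper end of the quoted range or a continued acceleration.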

In retrospect, a landmark point was reached in the mid-twentieth century when the concentration of CO2 and other greenhouse gases moved out of the range of natural variability displayed over the previous 800,000 years. And it has become clear that yet another tipping point had been crossed, a point in time when the human influence on climate has overtaken the natural factors that had previously governed climate. Since then, population growth and increasing technological prowess have made the human influence increasingly dominant. Today the atmospheric concentrations of carbon dioxide and methane are substantially above their respective preindustrial levels, due to the contributions by humans and our many machines. This change in atmospheric chemistry is the real signature of human industrial activity, and the most important driver of contemporary global warming.

THE PRINCIPAL DIVISIONS of the geological time scale—such as the Paleozoic, Mesozoic, and Cenozoic eras, which together span the last 570 million years or so of Earth’s history—have beginnings and ends marked by major changes in the character and distribution of life on Earth. Major extinctions on many branches of the tree of life mark the boundaries between these eras. Trilobites and many other marine invertebrates thrived in the Paleozoic, but did not survive into the Mesozoic. The Mesozoic era was the age of reptiles, with the dinosaurs the prime megafauna. But the saurians and many other taxa met their demise as the result of an asteroid impact sixty-five million years ago.

The Cenozoic is known as the mammalian era, and many of its subdivisions are associated with major climatic events: the temporal boundary between the Paleocene and Eocene was a very warm interval, likely caused by releases of the strong greenhouse gas methane from the ocean floor into the atmosphere. The Pleistocene is the period encompassing the most recent ice ages, followed by the Holocene, representing the eleven thousand years since the end of the last ice age. During the Holocene the climate has been remarkably steady, and Homo sapiens, that amazingly capable mammal, has flourished.

For nearly three million years, representatives of the genus Homo, both ancient and modern, have been passengers aboard Earth on its annual journey around the Sun. For most of that time, humans were but pawns—albeit increasingly clever ones—in the hands of nature, adapting to changes in climate and food and water as best they could. But in the past few centuries, after we domesticated fossil energy to amplify our personal strength, we humans are no longer simply passive passengers on the planet—we now are the dominant species of the planet. Unwittingly, we have become the managers of the intricately intertwined Earth system of rock, water, air, and life—the lithosphere, hydrosphere, atmosphere, and biosphere. And we are belatedly discovering that we are clumsy managers, woefully unprepared for this endeavor, and undergoing rough-and-tumble on-the-job training.

As recounted in this chapter, humans are having a profound impact on the landscape, on the waters, and on the living things of Earth. People have plowed up large areas of the land surface, have moved soil and rock at a rate much greater than natural erosion processes do, have controlled the flow of nearly every major river in the world, and have significantly altered the chemistry of lakes, rivers, the atmosphere, and oceans. We humans have wrested control of the carbon cycle, the nitrogen cycle, and the hydrological cycle away from nature, and have overpowered all other life forms on Earth, driving many to extinction. These clear and deep human footprints signal a major change in life on Earth, a reshuffling of the deck of life, with us humans moving to the top of the deck in only the eleven thousand years of the Holocene. Might not this reordering of life on Earth, driven by human fecundity and ingenuity, qualify for a new epoch in the geological time scale?

THE ANTHROPOCENE

Some say yes, and have given this epoch82 a provisional name: the Anthropocene.83 This name was first proposed informally by Paul Crutzen and Eugene Stoermer,84 although several earlier authors had elaborated on the concept of a human-dominated period in Earth’s history. In a short essay titled “Geology of Mankind,”85 Crutzen, a co-winner of the 1995 Nobel Prize in chemistry for his work on the chemistry of ozone depletion, again argued that humans have become the dominant species of the planet and that, by the usual standards for defining a new unit of the geological time scale, a new subdivision was warranted. Crutzen points to the late eighteenth century as the start of the Anthropocene, when fossil fuels began to drive James Watt’s new steam engine and the concentration of carbon dioxide in the atmosphere began to increase. William Ruddiman, a climate scientist at the University of Virginia, places the beginning of the human influence on the terrestrial environment five thousand years earlier, when agriculture began to generate the greenhouse gas methane, and deforestation led to more carbon dioxide remaining in the atmosphere.86

By any measure, humans have moved to center stage. We have placed our footprints indelibly upon Earth, and by changing the chemistry of Earth’s atmosphere, we have inadvertently begun a planet-wide experiment with the global climate. In the next chapter I examine the consequences of this experiment, in terms both of changes that have already taken place on Earth, and of projected changes that we will face in the future. Today ice is already retreating because of human activities on Earth, and is perhaps on a trajectory to disappearance. Will future generations live on a world without ice?