Fukushima: The Story of a Nuclear Disaster (2015)

12

A RAPIDLY CLOSING WINDOW OF OPPORTUNITY

The accusations began flying almost immediately. Over the ensuing weeks and months, everyone, it seemed, was looking for something or someone to blame for the disaster at Fukushima Daiichi. Antinuclear activists around the globe looked at the accident and saw irredeemable technical and institutional failures, reinforcing their conviction that nuclear energy is inherently unsafe. Nuclear power supporters indicted the user of the technology—in this instance TEPCO—instead of the technology itself, and thus avoided answering larger safety questions.

TEPCO initially pointed fingers at Mother Nature, asserting that the event was unavoidable. But the utility also blamed Japanese government regulators for not forcing the company to meet sufficiently stringent safety standards.

For its part, the Japanese government blamed the “unprecedented” accident on what it called “an extremely massive earthquake and tsunami rarely seen in history,” suggesting that there was no way authorities could have anticipated such an event. By invoking forces beyond their control, TEPCO and the Japanese government absolved themselves of responsibility.

Some experts in the United States and Japan decided the culprit was the deficient Mark I reactor design, contending that advanced reactor designs, such as the Westinghouse AP1000, would not have suffered the same fate. In response, the Mark I’s designer, GE, asserted that no nuclear plant design could have survived the flooding and blackout conditions that Fukushima Daiichi experienced.

Then there were those who argued precisely the opposite: that the Fukushima disaster was clearly preventable. The Japanese Diet Independent Investigation Commission laid blame at the feet of both TEPCO and Japanese regulators for their incompetence, lack of foresight, and even corruption. The utility and government should have anticipated and prepared for larger earthquakes and tsunamis, and should have had more robust accident management measures in place, the commission concluded.

It is too simplistic to say either that the accident was fully preventable or that it was impossible to foresee. The truth lies in between, and there is plenty of blame to go around.

NEW REACTOR DESIGNS: SAFER OR MORE OF THE SAME?

The accident at Fukushima provided nuclear advocates with a fresh argument to bolster their support for construction of a new generation of reactors. Certain new designs, they claimed, could have withstood a catastrophic event such as the disaster at Fukushima Daiichi.

Among those designs is the AP1000, built by Westinghouse Electric Company. (AP stands for “advanced passive,” and one thousand is its approximate electrical generation output in megawatts.) The reactor utilizes passive safety features to reduce the need for machinery, such as motor-driven pumps, to provide coolant in the event of an accident. Westinghouse advertises the AP1000 as capable of withstanding a seventy-two-hour station blackout. Soon after Fukushima, Aris Candris, then CEO of Westinghouse, said that the Fukushima accident “would not have happened” had an AP1000 been on the site.

The design received a significant boost in February 2012 when the NRC commissioners voted 4-1 to approve licenses to construct two AP1000 reactors at the Vogtle nuclear complex, about 170 miles east of Atlanta. NRC chairman Gregory Jaczko cast the lone “no” vote, saying, “I cannot support issuing this license as if Fukushima had never happened.” Jaczko anticipated that the lessons learned from the Japanese disaster would lead the NRC to adopt new requirements for U.S. plants, and he argued that any new licenses should be conditioned on plant owners’ agreement to incorporate all safety upgrades that the NRC might require in the future.

Other new reactor designs, such as the GE Hitachi ESBWR (“economic simplified boiling water reactor”), also boast passive systems. In contrast, the French company Areva’s EPR (“evolutionary power reactor” in the United States, “European pressurized water reactor” elsewhere) adds redundant active safety systems as well as new features to cope with severe accidents. One such feature is a “core catcher,” intended to capture and contain a damaged core if it melts through the reactor vessel. Both designs await NRC certification in the United States.

Advocates are also promoting development of small modular reactors, those generating less than three hundred megawatts of electricity. In principle, the units can be built on assembly lines and would allow utilities to augment their generating output in smaller increments to meet fluctuating demand.

Backers argue that because the small units could be constructed underground, they would be safer from terrorist attacks or natural events such as earthquakes. On the other hand, critics argue that in the case of a serious accident, emergency crews would have difficulty accessing the below-grade reactors, and multiple units at one site may compound the challenges for emergency response efforts, as was seen at Fukushima.

There is no question that nuclear safety can be improved through thoughtful design of new reactors. However, nuclear power’s safety problems cannot be solved through good design alone. Any reactor, regardless of design, is only as robust as the standards it is required to meet.

Unless regulators expand the spectrum of accidents that plants are designed to withstand, even enhanced safety systems could prove of little value in the face of Fukushima-scale events such as an extended station blackout or a massive earthquake.

In the case of the AP1000, for example, Aris Candris was wrong. Even if an AP1000 had been at the Fukushima site, it would have become endangered after three days of blackout when it would have needed AC power to refill the overhead tank that supplies the emergency cooling water. And if the plant had experienced an earthquake larger than it was designed to withstand, the tank itself might have been rendered unusable.

Perhaps the strongest vote of no confidence comes from the reactor vendors themselves. Even as they heavily promoted the new designs in the United States, the vendors in 2005 successfully lobbied Congress to reauthorize federal liability protection for all reactors, new and old, under the Price-Anderson Act for another twenty years. While they asserted that the next generation of plants would pose an infinitesimally small risk to the public, they wanted to make sure there would be limits on the damage claims they would have to pay if they were wrong.

Those with an interest in advancing nuclear safety need to look past their own biases and recognize the root causes of the accident, wherever they may be. Absent such a clear-eyed appraisal, the opportunity to identify and correct past mistakes will be lost. With it will go the opportunity to prevent the next severe accident. The legacy of Fukushima Daiichi will then be revealed as a tragic fiasco.

There’s no question that TEPCO and the Japanese regulatory system bear much responsibility. Each clearly could have done more to prevent the disaster (like erecting a taller seawall when information about a larger tsunami threat emerged) or to lessen its severity (like equipping the plant with more reliable containment vent valves). Such change could have been accomplished through new regulations or voluntary initiatives, neither of which was forthcoming.

But that is too narrow a focus. TEPCO and government regulators were merely the Japanese affiliate of a global nuclear establishment of power companies, vendors, regulators, and supporters, all of whom share the complacent attitude that made an accident like Fukushima possible.

The safety philosophy and regulatory process that governed Fukushima were not fundamentally different from those that exist elsewhere, including the United States. The reactor technology was nearly identical. The reality is that any nuclear plant facing conditions as far beyond its design basis as those at Fukushima would be likely to suffer an equivalent fate. The story line would differ, but the outcome would be much the same—wrecked reactors, off-site radioactive contamination, social disruption, and massive economic cost.

The catastrophe at Fukushima should not have been a surprise to anyone familiar with the vulnerabilities of today’s global reactor fleet. If those vulnerabilities are not addressed, the next accident won’t be a surprise, either.

Fukushima triggered extensive “lessons learned” reviews in Japan, France, the United States, and elsewhere. Many lessons have indeed been learned, but to date few have been promptly and adequately addressed—at least in the United States. The reason, of course, is the prevailing mind-set.

“It Can’t Happen Here”

That mind-set underlies all that went wrong at Fukushima Daiichi. While numerous technological and regulatory failures contributed to the events that began on March 11, 2011, that pervasive belief stands as the root cause of the accident.

In the United States, “it can’t happen here” was a common refrain while details of the Fukushima accident were still unfolding. In June 2011, for example, Senator Al Franken joked that “the chances of an earthquake of that level in Minnesota are very low, but if we had a tsunami in Minnesota, we’d have bigger problems than even the reactor.”

Senator Franken’s casual attitude illustrates the problem. Yes, it is unlikely that a tsunami will sweep into the northern plains. But serious potential threats to reactors do exist in his home state, as well as the states of many other members of Congress.

Two pressurized-water reactors at the Prairie Island nuclear plant, southeast of Minneapolis, are among the thirty-four reactors at twenty sites around the United States downstream from large dams. A dam failure could rapidly inundate a nuclear plant, disabling its power supplies and cooling systems, not unlike the impact of a tsunami.1

Nor is a dam failure the only type of accident that could create Fukushima-scale challenges at a U.S. nuclear plant. Fire is another. A fire could damage electric cabling and circuit boards, cutting off electricity from multiple backup safety systems as flooding did at Fukushima. The NRC adopted fire-protection regulations in 1980 following a very serious fire in March 1975 at the Browns Ferry nuclear plant in Alabama. A worker using a lit candle to check for air leaks accidentally started a fire in a space below the control room. The fire damaged electrical cables that disabled all of the emergency core cooling systems for the Unit 1 reactor and most of those systems for the Unit 2 reactor. Only heroic actions by workers prevented dual meltdowns that day at Browns Ferry.

The threat of fires remains a major contributor to the risk of core damage at nuclear plants. Decades later, fire safety regulations imposed by the NRC in the wake of Browns Ferry have not been met at roughly half the reactors operating in the United States—including the three reactors at Browns Ferry.

So, lesson one: “It can happen here.” And that reality leads directly, once again, to the most critical question.

“How Safe Is Safe Enough?”

Fukushima and other nuclear plants are not houses of cards waiting for the first gust of wind or ground tremor to collapse. They are generally robust facilities that require many things to go wrong before disaster occurs. Over the years, many things have, mostly without serious consequences.

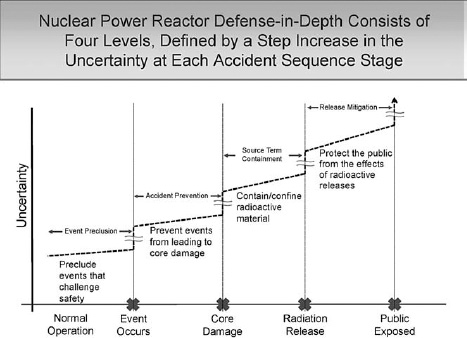

Key to safety at a nuclear plant are multiple barriers engineered to protect the public from radiation releases—the so-called defense-in-depth approach. Each barrier should be independent of the others and provide a safety margin exceeding the worst conditions it might have to endure in any accident envisioned in the design basis of the plant. Regulators regard defense-in-depth as a hedge against uncertainty in the performance of any one barrier.

The design and operation of the damaged reactors at Fukushima Daiichi were consistent with the defense-in-depth principle as commonly applied around the world. Multiple and diverse cooling systems existed to forestall damage to the reactor cores. When cooling was interrupted and core damage did occur, leak-tight containment buildings limited the escape of radioactivity. When containments leaked and a large amount of radioactivity escaped, emergency plans were invoked to evacuate or shelter people.

U.S. Nuclear Regulatory Commission

Defense-in-depth works well—as far as it goes. The concept has succeeded in limiting the frequency of nuclear disasters. Since the 1970s, three nuclear plant accidents have drawn international attention: Three Mile Island in 1979, Chernobyl in 1986, and Fukushima in 2011. Were it not for defense-in-depth, the list could be larger.

Hurricane Andrew, which pummeled Florida in 1992, extensively damaged the Turkey Point nuclear plant near Miami, but defense-in-depth prevented disaster. The plant lost access to its off-site electric power grid for several days, but emergency diesel generators powered essential equipment in the meantime. And while winds knocked over a water tower and extensively damaged a warehouse and training building on site, more robust structures protected other water supplies and essential equipment.

A month after Fukushima, a severe tornado disconnected the Browns Ferry plant from the electrical grid. That June, the Fort Calhoun nuclear plant in Nebraska experienced flooding that temporarily made it an island in the Missouri River, and in August the North Anna nuclear plant in Virginia was shaken by an earthquake of larger magnitude than it was designed to withstand. In each case, the conditions caused by the event (a loss of off-site power at Browns Ferry, a flood at Fort Calhoun, an earthquake at North Anna) did not exceed the safety margins to failure.

But did these events prove the inherent safety of nuclear plants, as the U.S. industry and regulators claim? Or did they constitute accidents avoided not by good foresight, but rather by good fortune?

For all its virtues, defense-in-depth has an Achilles’ heel, one rarely mentioned in safety pep talks. It is known as the common-mode failure. That happens when a single event results in conditions exceeding the safety margins of all the defense-in-depth barriers, cutting through them like a hatchet through a layer cake.

Common-mode failure is what flooding caused at Fukushima and what fire caused at Browns Ferry. Flooding or fire took out all the redundant systems needed to cool the reactor cores, the systems needed to keep the containments from overheating and leaking, and the systems needed to help predict the path and extent of the radioactive plumes. At Browns Ferry, workers managed to employ ad hoc measures in time to prevent disaster. At Fukushima, time ran out.

Defense-in-depth is both a blessing and a curse. It allows many things to go wrong before a nuclear plant disaster occurs. But when too many problems arise or a common-mode failure disables many systems, defense-in-depth can topple like a row of dominoes. The risk of common-mode failure can be reduced through enhancing defense-in-depth, but it can never be eliminated.

The true curse of defense-in-depth is that it has fostered complacency. The existence of multiple layers of defense has excused inattention to weaknesses in each individual layer, increasing the vulnerability to common-mode failure.

Fukushima Daiichi was a well-defended nuclear plant by accepted standards, with robust, redundant layers of protection. When the earthquake knocked out the off-site electrical grid, emergency diesel generators stood ready as the backup power source. Each of the six reactor units had at least two of these generators (one unit had three). A single emergency generator could provide all the power needed for cooling a core and other essential tasks, but defense-in-depth made sure every reactor had at least one spare.

It didn’t help. The generators were protected, like the reactors themselves, by the seawall erected along the coast. When the tsunami washed over the seawall, it disabled all but one of the emergency generators or their electrical connections.

Even without the generators, defense-in-depth offered protection. Banks of batteries were ready to power a minimal subset of safety equipment while damage to the AC power systems was being repaired. The battery capacity at Fukushima was eight hours per unit, assumed to be ample time for workers to either restore an emergency diesel generator or recover the electrical grid. But it took nine days to partially reconnect the plant to the grid and even longer to restore the generators.

At Fukushima, as in the United States and elsewhere, reactor operators were trained in emergency procedures for responding to severe accidents. These procedures instructed them to take steps like venting the containment to reduce dangerously high pressure and enable cooling water to enter. But the manuals did not envision the conditions the operators actually faced—for example, the need to operate vents manually in darkness—and thus workers could not implement these procedures in time to prevent the meltdowns.

The last defense-in-depth barrier was evacuation. But at Fukushima, emergency planning proved ineffective at protecting the public. Evacuation areas had to be repeatedly expanded in an ad hoc manner, and in some cases the decisions were made far too late to prevent radiation exposures to many evacuees.

All of Fukushima’s defensive barriers failed for the same reason. Each had a limit that provided too little safety margin to avert failure. Had just one barrier remained intact, the plant might well have successfully endured the one-two punch from the earthquake and tsunami or, at the bare minimum, the public would have been protected from the worst radiation effects.

The chance that all the barriers might fall was never part of the planning. In effect, the nuclear establishment was riding a carousel, confident that the passing scenery of anticipated incidents would never change. Lost in the process was this reality: the brass ring for this not-so-merry-go-round involves both foreseeing hazards and developing independent, robust defense-in-depth barriers to accommodate unforeseen hazards. One without the other has been repeatedly shown, at tremendous cost, to be insufficient.

Severe accidents like Fukushima render the status quo untenable for the nuclear establishment. Something has to change. But meaningful change—a true reduction in potential danger to the public—will not occur until regulators and industry look ahead and to the sides, at what could happen, not solely at what has happened.

But such an approach would be a turnabout for the nuclear establishment. Historically, when responding to events like Three Mile Island, the industry and regulators have worked hard to narrow the scope of the response, simply patching holes in the existing safety net rather than asking whether a better net is needed. To put it bluntly, unless this process is radically overhauled, it will take many more nuclear disasters and many more innocent victims to make the safety net as strong as it should be today.

Highway departments could put up roadside signs saying “Don’t Go Too Fast” or “Drive at a Reasonable Pace.” Instead, they put up signs reading, for example, “Speed Limit 55” or “Maximum Speed 20” so that drivers understand what is expected and law enforcement officers know when to issue traffic tickets. The former signs would constitute entirely useless measures: a car wrapped around a tree must have been traveling too fast, but another barreling through a school zone at 120 miles per hour must be operating at a reasonable speed if it doesn’t strike any children. Safety requires specificity. Lack of specificity invites a free-for-all.

Such is precisely what the NRC’s Near-Term Task Force tried to avoid in its proposals for reducing vulnerabilities at U.S. nuclear plants. The NTTF started its report with a recommendation for fundamental change. It called on the NRC to redefine its historical safety threshold of “adequate protection,” this time establishing a clear foundation to guide both regulators and plant owners in addressing beyond-design-basis accidents.

In essence, the NTTF’s first recommendation urged the NRC to formally recognize that beyond-design-basis accidents need to be guarded against with unambiguous requirements based on robust defense-in-depth and well-defined safety margins. In this way, plant owners as well as NRC reviewers and inspectors would better be able to agree on what was acceptable. Inspectors would have a legal basis for declaring violations should plant owners fail to meet the requirements; at the same time, plant owners would be better protected from arbitrary rulings.

The NTTF did not propose taking a sledgehammer to the existing system. Instead it recommended creating a new category of accident scenarios to cover a range of possibilities not envisioned in the design bases of existing nuclear plants. This new category of “extended design-basis accidents” could include aspects of some of the extreme conditions experienced at Fukushima. A new set of regulations would be created for extended design-basis accidents, eliminating the “patchwork” that currently governed beyond-design-basis accidents. However, the requirements themselves would be less stringent than those for design-basis accidents.

The task force believed that the NRC had come to depend too much on the results of highly uncertain risk calculations that reinforced the belief that severe accidents were very unlikely. That, in turn, had provided the NRC with a rationale to shrink safety margins and weaken defense-in-depth. To remedy the problem, the task force requested that the commission formally consider “the completeness and effectiveness of each level of defense-in-depth” as an essential element of adequate protection. The task force also wanted the NRC to reduce its reliance on industry voluntary initiatives, which were largely outside of regulatory control, and instead develop its own “strong program for dealing with the unexpected, including severe accidents.”

The task force members believed that once the first proposal was implemented, establishing a well-defined framework for decision making, their other recommendations would fall neatly into place. Absent that implementation, each recommendation would become bogged down as equipment quality specifications, maintenance requirements, and training protocols got hashed out on a case-by-case basis.

But when the majority of the commissioners directed the staff in 2011 to postpone addressing the first recommendation and focus on the remaining recommendations, the game was lost even before the opening kickoff.

The NTTF’s Recommendation 1 was akin to the severe accident rulemaking effort scuttled nearly three decades earlier, when the NRC considered expanding the scope of its regulations to address beyond-design accidents. Then, as now, the perceived need for regulatory “discipline,” as well as industry opposition to an expansion of the NRC’s enforcement powers, limited the scope of reform. The commission seemed to be ignoring a major lesson of Fukushima Daiichi: namely, that the “fighting the last war” approach taken after Three Mile Island was simply not good enough.

Consider the order for mitigation strategies issued by the NRC to all plant owners on March 12, 2012, a year and a day after Fukushima. One part of the order required that plant owners “provide reasonable protection for the associated equipment from external events” like tornadoes, hurricanes, earthquakes, and floods. “Full compliance shall include procedures, guidance, training, and acquisition, staging, or installing of equipment needed for the strategies,” the order read.

But what is “reasonable protection”? What kind of “guidance” would be adequate, and how rigorous would the training be? The NTTF’s first proposal would have required specific definitions. Now, without a concrete standard, NRC inspectors will be ill equipped to challenge protection levels they deem unreasonable. Conversely, plant owners will be defenseless against pressure from the NRC to provide more “reasonable” levels of protection.2

A second example: another order the NRC issued on March 12, 2012, required the owners of boiling water reactors with Mark I and II containments to install reliable hardened containment vents. The agency left for another day a decision on whether the radioactive gas from containment should be filtered before being vented to the atmosphere.

Such vents are primarily intended to be used before core damage occurs, when the gas in the containment would not be highly radioactive and filters would not normally be needed. However, as Fukushima made clear, it is possible that the vents will have to be used after core damage occurs, to keep it from getting worse. In that case, filters could be crucial to reduce the amount of radioactivity released. The presence of filters could also make venting decisions less stressful for operators in the event that they weren’t sure whether or not core damage was taking place. In November 2012, the NRC staff recommended that filters be installed in the vent pipes, primarily as a defense-in-depth measure.

But what might seem a simple, logical decision—install a $15 million filter to reduce the chance of tens of billions of dollars’ worth of land contamination as well as harm to the public—got complicated. The nuclear industry launched a campaign to persuade the NRC commissioners that filters weren’t necessary. A key part of the industry’s argument was that plant owners could reduce radioactive releases more effectively by using FLEX equipment.

Vent filters would only work, the argument went, if the containment remained intact. If the containment failed, radioactive releases would bypass the filters anyway. And sophisticated FLEX cooling strategies could keep radioactivity inside the containment in the first place. Further, the absence of filters at Fukushima might not have caused the widespread land contamination in any event because it wasn’t clear that the largest releases occurred through the vents; the radioactivity may have escaped another way.

The NRC staff countered by claiming that the FLEX strategies were too complicated to rely on: they rested on too many assumptions about what might be taking place within a reactor in crisis and what operators would be capable of doing. In contrast, a passive filter could be counted on under most circumstances to do its job—filter any radioactivity that passed through the vent pipes. The staff argued that filters would be warranted as a defense-in-depth measure. The staff also pointed out that many other countries, like Sweden, simply required vent filters to be installed decades ago as a prudent step.

Without an explicit requirement to consider defense-in-depth, as the NTTF had called for in its first recommendation, the NRC commissioners could feel free to reject the staff’s arguments. In March 2013, they voted 3-2 to delay a requirement that filters be installed, and recommended that the staff consider other alternatives to prevent the release of radiation during an accident. However, at the same time the commissioners voted to require that the vents themselves be upgraded to be functional in a severe accident. This second decision didn’t make much sense: if the commissioners believed the vents might be needed in a severe accident, then what excuse could there be for not equipping them with filters?

As the Fukushima disaster recedes in public memory, and the NRC sends mixed signals, the nuclear establishment has begun relying on the FLEX program to address more and more of the severe accident safety issues identified by the task force and the NRC staff. It has come to see FLEX not as a short-term measure but as an enduring solution—all that is needed to patch any holes in the safety net.

Three months before the vote on the filters, the commissioners decided to slow down the development of a new station blackout rule—one they had originally identified as a priority. In part, it was because of their confidence in FLEX. The NRC later notified Congress that it had rejected a number of other safety proposals, such as adding “multiple and diverse instruments to measure [plant] parameters” and requiring all plants to “install dedicated bunkers with independent power supplies and cooling systems,” because it believed that FLEX was sufficient. But it is unclear how FLEX would obviate either the need for additional reliable instrumentation or the desirability of a bunkered emergency cooling system. Indeed, both measures would support FLEX capabilities should a crisis occur.

Despite the willingness of the NRC to give FLEX a resounding vote of confidence, there were three important defense-in-depth issues that could not be dismissed with a wave of the FLEX magic wand. These issues had been flagged by the NRC staff post-Fukushima as issues that were of great public concern and deserved consideration.

The first related to the U.S. practice of densely packing spent fuel pools, a situation that some critics had long pointed to as a safety hazard and that the NRC had long countered was perfectly safe.

In the aftermath of Fukushima, it turned out that the Unit 4 spent fuel pool—the subject of so much alarm during the crisis—had escaped damage after all (see the appendix for more on this). That finding allowed some in the industry and the NRC to contend that concerns about spent fuel pool risks were unfounded. Their claims did not take into account the fact that U.S. spent fuel pools typically contain several times as much fuel as Unit 4 did, and therefore could experience damage much more quickly in the event of loss of cooling or a catastrophic rupture. The industry argued in turn that FLEX was designed to provide emergency cooling of spent fuel pools. But that would require operator actions at a time when attention would likely be torn by other exigencies, as happened at Fukushima.

Accelerating the transfer of spent fuel to dry casks would enhance safety by passive means. The NRC pledged to look at this issue again, but it was accorded a low priority.

The second and third issues related to emergency planning zones and to distribution of potassium iodide tablets to the public to reduce the threat from radioactive iodine. Fukushima demonstrated that a severe accident could cause radiation exposures of concern to people as far as twenty-five miles from the release site. Indeed, the worst-case projections of both the Japanese and U.S. governments found that even Tokyo, more than one hundred miles away, was similarly threatened.

Nonetheless, the NRC continued to defend the adequacy of a ten-mile planning zone for emergency measures such as evacuation and potassium iodide distribution. The agency argued that evacuation zones could always be expanded if necessary during an emergency—a position hard to reconcile with the likely difficulty of achieving orderly evacuations of people who had not previously known they might need to flee from a nuclear plant accident one day. (Prodded into action by a petition from an activist group, the Nuclear Information and Resource Service, the NRC agreed to look into the emergency planning question, but it is on a very slow track.)

One final issue to consider is the risk of land contamination, something that could be an enormous problem even if all evacuation measures were successful. In the past the NRC has assessed potential accident consequences solely in terms of early fatalities and latent cancer deaths, but Fukushima showed that widespread land contamination, and the economic and social upheaval it creates, must also be counted.3

The NRC staff proposed to the commissioners that the NRC address this issue in revising its guidelines for calculating cost-benefit analyses, but the commissioners did not show much interest. It, too, appears to be on a slow track.

As for the fate of the NTTF’s Recommendation 1—revising the regulatory framework? That seems to have slipped not only off the front burner but possibly off the stove. In February 2013, the NRC staff failed to meet the commissioners’ deadline for a proposal related to the recommendation and asked for more time. As of this writing, Recommendation 1 continues to fade as a priority, with the NRC staff contending that “it is acceptable, from the standpoint of safety, to maintain the existing regulatory processes, policy and framework.”

Safety IOUs are worse than worthless. They represent vulnerabilities at operating nuclear plants that the NRC knows to exist but that have not yet been fixed. They are, simply put, disasters waiting to happen.

The NRC’s practice of identifying a safety problem and accepting a non-solution continues. The post-Fukushima proposals are just the latest example—albeit the most worrisome.

Severe reactor accidents will continue to happen as long as the nuclear establishment pretends they won’t happen. That thinking makes luck one of the defense-in-depth barriers. Until the NRC acknowledges the real possibility of severe accidents, and begins to take corrective actions, the public will be protected only to the extent that luck holds out.

Of course trade-offs are inevitable. It would be ideal if every defense-in-depth barrier were fully and independently protective against known hazards, but realistically that price tag would likely be prohibitive. The nuclear industry is quick to oppose new safety rules on the basis of cost, which is hardly surprising given the concerns of shareholders as well as ratepayers.

On the other hand, one must consider the price tag, in both economic and human terms, of an accident like Fukushima. Ask TEPCO’s shareowners—as well as the Japanese public—today what they would have paid to avoid that accident.

So how safe is safe enough? In that critical decision, the public has largely been shut out of the discussion. This is true in the United States, in Japan, and everywhere else nuclear plants are in operation. Nuclear development, expansion, and oversight have largely occurred behind a curtain.

Nuclear technology is extremely complex. Its advocates, in their zeal to promote that technology, have glossed over unknowns and uncertainties, thrown up a screen of arcane terminology, and set safety standards with unquantifiable thresholds such as “adequate protection.” In the process, the nuclear industry has come to believe its own story.

Regulators too often have come to believe that there is a firmer technical basis for their decisions than actually exists. Officials, in particular, must grapple with overseeing a technology that few thoroughly understand, especially when things go wrong. Fukushima demonstrated that.

Meanwhile, average citizens have been lulled into believing that nuclear power plants are safe neighbors, needing no attention or concern because the owners are responsible and the regulators are thorough. Yet it is those citizens’ health, livelihoods, homes, and property that may be permanently jeopardized by the failure of this flawed system.

The public needs to be fully informed of uncertainties, of risks and benefits, and of the trade-offs involved. Scientists and policy makers must be candid about what they know—and don’t know; about what they can honestly promise—and can’t promise. And once full disclosures are made, the people must be given the final voice in setting policy. They must be the arbiters of what is acceptable and how the government acts to ensure their protection.

What that decision-making process will look like is unclear. One thing is certain: we’re nowhere close to it now.

This chapter has focused on two questions inspired by Fukushima: who is to blame for the accident and what can be done to prevent the next one? By now it should be clear that the entire nuclear establishment is responsible, rather than just TEPCO and its regulators. Even if indicted, however, the nuclear establishment likely could not be convicted. For it is sheer insanity to keep doing the same thing over and over hoping that the outcome will be different the next time.

What is needed is a new, commonsense approach to safety, one that realistically weighs risks and counterbalances them with proven, not theoretical, safety requirements. The NRC must protect against severe accidents, not merely pretend they cannot occur.

How can that best be achieved? First, the NRC needs to conduct a comprehensive safety review with the blinders off. That must take place with what former NRC Commissioner Peter Bradford calls regulatory “skepticism.” The commission staff—and the five commissioners—should stop worrying so much about maintaining regulatory “discipline” and start worrying more about the regulatory tunnel vision that could cause important risks to be missed or dismissed.

That safety review must come in the form of hands-on, real-time regulatory oversight. Every plant in the United States should undergo the kind of in-depth examination of severe accident vulnerabilities that the NRC contemplated in the 1980s but fell short of implementing.

The first step is adoption of a technically sound analysis method that takes into account the deficiencies in risk assessment that critics have noted over the decades, particularly the failure to fully factor in uncertainty. Issues that are not well understood need to be included in error estimates, not simply ignored. Setting the safety bar at x must carry the associated policy question “What if x plus 1 happens?”

Fortunately, the NRC does not have to start from scratch to do a sound safety analysis. Each nuclear plant that has applied for a twenty-year license renewal from the NRC—around 75 percent of all U.S. plants—has conducted a study called a “severe accident mitigation alternatives” (SAMA) analysis as part of the environmental review required under the National Environmental Policy Act.

A SAFETY FRAMEWORK READY FOR ACTION

A SAMA analysis entails identifying and evaluating hardware and procedure modifications that have the potential to reduce the risk from severe accidents, then determining whether the value of the safety benefits justifies their cost.

Oddly enough, even though the plant owners and the NRC have identified dozens of measures that would pass this cost-benefit test and thus might be prudent investments, none have had to be implemented under the law. That’s because the NRC has thrown into the equation its contorted backfit rule. The rule means that for the changes to be required they also must represent a “substantial safety enhancement”—a standard very hard to meet given the low risk estimates generated by the industry’s calculations.

Thus, the SAMA process has been merely an academic exercise. But the upgrades identified in the SAMA analyses provide a comprehensive list of changes that could reduce severe accident risk at each plant.
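
The two-step screen described in this sidebar can be illustrated with a short Python sketch. It is an illustration under stated assumptions, not the NRC's actual method: the candidate names, dollar figures, and risk reductions are invented, and the numeric threshold standing in for a "substantial safety enhancement" is hypothetical, since the text does not describe that standard as a single numeric cutoff.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """One hypothetical plant modification considered in a SAMA-style screen."""
    name: str
    cost: float             # estimated implementation cost, dollars (invented)
    benefit: float          # estimated monetized value of averted risk, dollars (invented)
    risk_reduction: float   # fractional cut in estimated severe accident risk (invented)


def is_cost_beneficial(c: Candidate) -> bool:
    """Step 1: does the estimated safety benefit exceed the cost?"""
    return c.benefit > c.cost


def is_required(c: Candidate, substantial_threshold: float) -> bool:
    """Step 2: a change is required only if it ALSO clears the (hypothetical)
    numeric stand-in for the 'substantial safety enhancement' standard."""
    return is_cost_beneficial(c) and c.risk_reduction >= substantial_threshold


# All values below are made up for illustration.
candidates = [
    Candidate("hypothetical portable pump", cost=1.0e6, benefit=2.5e6, risk_reduction=0.03),
    Candidate("hypothetical hardened vent", cost=5.0e6, benefit=6.0e6, risk_reduction=0.08),
    Candidate("hypothetical extra generator", cost=2.0e7, benefit=4.0e6, risk_reduction=0.05),
]

SUBSTANTIAL_THRESHOLD = 0.10  # assumption: stand-in for the backfit standard

passing = [c for c in candidates if is_cost_beneficial(c)]
required = [c for c in candidates if is_required(c, SUBSTANTIAL_THRESHOLD)]

print("Pass cost-benefit test:", [c.name for c in passing])
print("Required after second test:", [c.name for c in required])
```

With these invented numbers, two modifications pass the cost-benefit test, yet none is required once the second test is applied. That mirrors the pattern the sidebar describes: when estimated risk is low to begin with, even cost-justified upgrades struggle to count as substantial.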

The SAMA changes are a starting point. They should be reevaluated under a new framework, one that better accounts for uncertainties and the limitations of computer models, improves the methodology for calculating costs and benefits, and allows the public to have a say in the answer to the question “How safe is safe enough?” This process would produce a guide for plant upgrades that could fundamentally improve safety of the entire reactor fleet and provide the public with a yardstick by which to measure performance.

Another tool for assessing severe accident risks would be a stress test program—an analysis of how each plant would fare when subjected to a variety of realistic natural disasters and other accident initiators. (As for industry’s much-touted but untested FLEX “fixes,” they could be taken into account, but their limitations and vulnerabilities would be fair game in any analysis.)

Before the testing process begins, another change is essential: the public, not the industry, must first determine what is a passing grade.

These SAMA analyses represent an unvarnished checklist of the changes needed at each nuclear plant in the United States to drive down the risk of an American Fukushima. Using that information as a roadmap for enhanced regulation and operations is not the entire answer, but it is a first step in better understanding the risks of nuclear power and how to control them.

Once the question “How safe is safe enough?” is answered, a second question must be asked and resolved, again with input from the public. That question is: “How much proof is enough?”

In other words, how best to prove that industry and regulators are actually complying with the new rules, both in letter and in spirit? The NRC’s regulatory process is among the most convoluted and opaque; just as in Japan, public trust has suffered as a result.

In the end, the NRC must be able to tell the American public, “We’ve taken every reasonable step to protect you.” And it must be the public, not industry or bureaucrats, who define “reasonable.”

As Japan was marking the second anniversary of the Fukushima Daiichi accident, the NRC held its annual Regulatory Information Conference, the twenty-fifth, once again attracting a large domestic and international crowd of regulators, industry representatives, and others.

During the two-day session, many presentations were devoted to the lessons learned from Fukushima, including technical discussions about core damage, flooding and seismic risks, and regulatory reforms. But by now it was apparent that little sentiment existed within the NRC for major changes, including those urged by the commission’s own Near-Term Task Force to expand the realm of “adequate protection.” The NRC was back to business as usual, focused on small holes in the safety net, ignoring the fundamental lesson of Fukushima: This accident should have been no surprise, and without wholesale regulatory and safety changes, another was likely.

One of the final events of the conference was a panel discussion featuring the agency’s four regional administrators and two nuclear industry officials, who fielded questions from an audience of other nuclear insiders. The subjects ranged from dealing with the public in the post-Fukushima era to the added workload of inspectors at U.S. reactors. The mood was upbeat, the give-and-take friendly.

But amid the camaraderie, one member of the panel seemed impatient to deliver a message to the audience. When it came to addressing the overarching lessons of Fukushima—for regulators and industry people alike—he brought unique credentials to the task. The speaker was the NRC’s own Chuck Casto, who had arrived in Japan in the first chaotic days of the accident and remained there for almost a year as an advisor.

Now, as the conference wound to a close, Casto was eager to offer some words of advice. The public does not understand the NRC’s underlying safety philosophy of “adequate protection,” he cautioned. “They want to see us charging out there making things safer and safer, to be pro-safety. If this degree is safe, a little bit more is more safe,” he told the audience.

A short time later, his voice filling with emotion, Casto spoke of the brave operators at Fukushima, whom he called “an incredible set of heroes.”

“This industry over its fifty-some years has had a lot of heroes,” he continued. He spoke about Browns Ferry, Three Mile Island, and Chernobyl. Those and other events, Casto told his audience, make the way forward clear. “We honor and we respect the heroes that we’ve had in this industry over fifty years—but we don’t want any more.

“We have to have processes and procedures and equipment and regulators that don’t put people in a position where they have to take heroic action to protect the health and safety of the public,” Casto said. “What we really have to work on is no more heroes.”